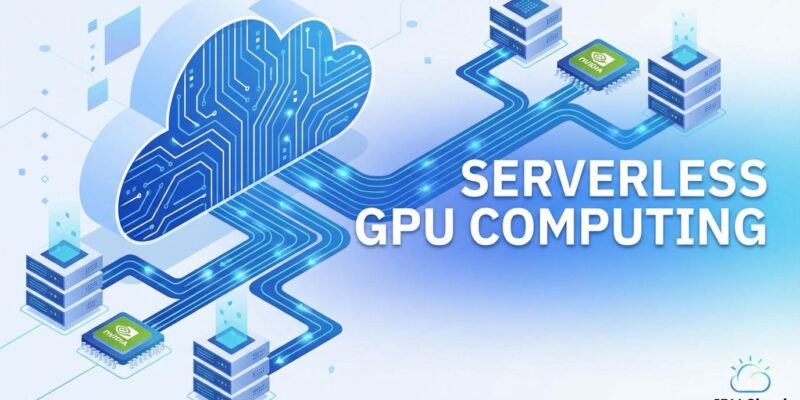

IBM just entered the serverless GPU race with Cloud Code Engine Serverless Fleets, packing NVIDIA L40 GPUs into a pay-as-you-go model. It’s not the first, since Google Cloud Run beat it to market, but IBM’s betting enterprises will choose its managed simplicity over AWS and Azure, whose function platforms (Lambda and Azure Functions) still don’t support GPUs at all.

What IBM Launched

Cloud Code Engine Serverless Fleets lets you submit large batch jobs to a single endpoint. IBM automatically provisions GPU-backed virtual machines, executes your workload, and removes the resources when the work completes. You pay only for actual GPU usage, with no idle costs.

The hardware: NVIDIA L40 GPUs with 48GB GDDR6 memory, 568 fourth-generation Tensor Cores, and 90.5 teraflops of FP32 performance. That’s enterprise-grade AI acceleration with 2X faster training versus previous-generation GPUs, delivered through a managed serverless platform.

The killer feature is scale-to-zero. When your workload finishes, your bill stops. Traditional GPU infrastructure charges you 24/7 whether you’re training models or staring at idle instances.

The Competitive Landscape

IBM isn’t alone here, or even first. Google Cloud Run already offers serverless GPU support with NVIDIA L4 chips and per-second billing. AWS Lambda and Azure Functions? Neither supports GPUs at all. If you’re on AWS or Azure and need GPU acceleration, you’re stuck with traditional GPU virtual machines such as EC2 instances, or with specialized third-party providers like Beam or Modal.

That makes IBM vs. Google the real serverless GPU competition for enterprise teams. Both offer managed platforms, automatic scaling, and pay-per-use pricing. The difference: IBM targets organizations already invested in its cloud ecosystem, while Google, with its transparent pricing and mature documentation, appeals to teams starting fresh.

The Cost Question

Serverless GPU promises up to 90% cost savings for variable workloads compared to traditional infrastructure. The math works if you run batch jobs or have bursty demand patterns. Traditional GPUs bill 24/7—even a community-hosted RTX 3090 costs $0.22/hour around the clock. Enterprise H100s run $2.60 to $4.10/hour. With serverless, you pay only during execution.
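To make the savings claim concrete, here is a minimal Python sketch of the arithmetic. It assumes a 730-hour month and, purely for illustration, the same hourly rate for always-on and serverless capacity. `HOURS_PER_MONTH`, the function names, and the 73 busy hours are assumptions for this example, not IBM or vendor figures; only the $2.60/hour rate comes from the H100 range quoted above.

```python
HOURS_PER_MONTH = 730  # assumption: average month length in hours


def always_on_cost(rate_per_hour: float) -> float:
    """Monthly cost of an instance billed around the clock."""
    return rate_per_hour * HOURS_PER_MONTH


def serverless_cost(rate_per_hour: float, busy_hours: float) -> float:
    """Monthly cost when only execution time is billed."""
    return rate_per_hour * busy_hours


def savings_fraction(always_on_rate: float, serverless_rate: float,
                     busy_hours: float) -> float:
    """Fraction saved by serverless relative to always-on billing."""
    serverless = serverless_cost(serverless_rate, busy_hours)
    return 1 - serverless / always_on_cost(always_on_rate)


if __name__ == "__main__":
    # Hypothetical workload: busy 73 of 730 hours (10% utilization).
    saved = savings_fraction(2.60, 2.60, busy_hours=73)
    print(f"Savings: {saved:.0%}")
```

At 10% utilization and equal rates, the savings work out to 90%. The figure shrinks as utilization rises or if serverless capacity carries a per-hour premium.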

But IBM hasn’t disclosed public pricing. You need to contact sales for rates, which is a red flag for cost-conscious teams. Compare that to Google Cloud Run’s transparent per-second billing or specialized providers like RunPod, which openly lists A100 pricing at $2.17/hour serverless.

And there’s a catch: cold starts. Loading AI frameworks like TensorFlow can take 29 seconds on a cold start, potentially causing timeouts. Warm calls? Under one second. If you’re running latency-sensitive applications, those cold start costs add up in both time and money.
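A rough way to see how cold starts add up is to weight the two latencies by how often each occurs. The sketch below uses the 29-second cold start quoted above and treats warm calls as taking one second, which is the upper bound from the text. The function names, the 5% cold-start share, and the timeout values are hypothetical.

```python
def mean_latency_s(cold_fraction: float, cold_s: float = 29.0,
                   warm_s: float = 1.0) -> float:
    """Expected per-request latency for a given share of cold starts."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    return cold_fraction * cold_s + (1.0 - cold_fraction) * warm_s


def cold_start_times_out(timeout_s: float, cold_s: float = 29.0) -> bool:
    """True if a cold request would exceed the caller's timeout."""
    return cold_s > timeout_s


if __name__ == "__main__":
    # Hypothetical traffic: 5% of requests hit a cold instance.
    print(f"Mean latency: {mean_latency_s(0.05):.2f} s")
    # Hypothetical client timeout of 10 seconds.
    print("Cold start times out:", cold_start_times_out(10.0))
```

Even a small share of cold requests more than doubles the mean latency in this model, and any caller with a timeout shorter than the cold start will see failures rather than slow responses.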

The Reality Check

Serverless GPU works brilliantly for specific use cases: batch AI processing, periodic model training, on-demand inference, and compute-intensive simulations. It struggles with real-time, latency-sensitive applications where cold starts kill performance.

IBM’s focus on batch jobs is smart. Serverless Fleets is designed for run-to-completion tasks that scale elastically, not sub-second API responses. The system provisions optimal worker instances, executes tasks in parallel on NVIDIA L40 GPUs, and automatically removes resources when done.

But if you’re training models 24/7 or need consistent sub-second inference latency, reserved GPU instances might deliver better performance and potentially lower costs than paying for repeated cold starts.
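The trade-off can be framed as a break-even utilization: the share of wall-clock time a GPU must be busy before an always-on reserved instance becomes cheaper. The sketch below is a simplified model that ignores cold-start overhead and committed-use discounts. The function names and both hourly rates are hypothetical, since IBM has not published pricing.

```python
def break_even_utilization(reserved_rate: float,
                           serverless_rate: float) -> float:
    """Utilization above which a reserved instance is cheaper.

    Per wall-clock hour, reserved capacity costs reserved_rate and
    serverless costs serverless_rate * utilization; the two are equal
    at reserved_rate / serverless_rate (capped at 100%).
    """
    if reserved_rate <= 0 or serverless_rate <= 0:
        raise ValueError("rates must be positive")
    return min(1.0, reserved_rate / serverless_rate)


def cheaper_option(utilization: float, reserved_rate: float,
                   serverless_rate: float) -> str:
    """Name the cheaper billing model at a given utilization."""
    threshold = break_even_utilization(reserved_rate, serverless_rate)
    return "reserved" if utilization > threshold else "serverless"


if __name__ == "__main__":
    # Hypothetical rates: $2.00/hour reserved, $2.50/hour serverless.
    print(break_even_utilization(2.00, 2.50))
    print(cheaper_option(0.9, 2.00, 2.50))
    print(cheaper_option(0.3, 2.00, 2.50))
```

With these illustrative rates, a workload that keeps the GPU busy more than about 80% of the time favors reserved capacity, while bursty jobs below that threshold favor serverless.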

Who Should Care

This targets enterprise AI teams already using IBM Cloud. If you’re invested in IBM’s ecosystem, Serverless Fleets offers GPU access without managing infrastructure. The NVIDIA L40 is positioned as a cost-effective alternative to pricier A100 or H100 GPUs while still delivering strong AI performance.

If you’re not on IBM Cloud? Google Cloud Run offers a more mature serverless GPU service with transparent pricing and better documentation. Specialized providers like Beam advertise cold starts under one second, a significant advantage for latency-sensitive workloads.

The surprising story isn’t IBM’s launch—it’s that AWS and Azure still lack serverless GPU in their function platforms. The gap creates opportunity for IBM and Google to capture enterprise AI teams looking for managed GPU infrastructure.

Serverless GPU is becoming standard infrastructure, not a niche offering. IBM’s entry validates the market, but execution matters: pricing transparency, cold start performance, and ecosystem maturity will determine whether enterprises choose IBM’s catch-up play or stick with Google’s first-mover advantage.