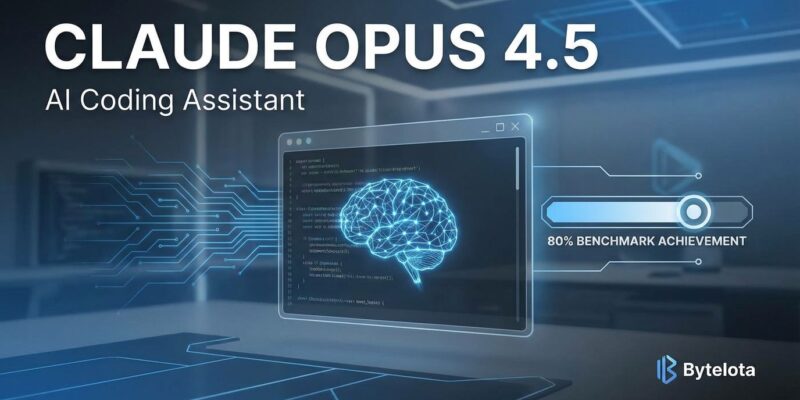

Anthropic has released Claude Opus 4.5, the first AI model to break the 80% barrier on SWE-bench Verified with an 80.9% score, ahead of OpenAI’s GPT-5.1-Codex-Max (77.9%) and Google’s Gemini 3 Pro (76.2%). The model is now available in Claude Code, including the Claude desktop app, bringing enterprise-grade coding intelligence into developers’ native workflows. Perhaps most surprisingly, Anthropic cut pricing by 66% to $5 per million input tokens and $25 per million output tokens, making its most powerful model accessible to the masses.

Breaking the 80% Barrier

The 80.9% SWE-bench Verified score isn’t just an incremental improvement; it’s a milestone. This benchmark tests AI models on real-world software engineering tasks pulled from GitHub issues, and crossing the 80% threshold puts Opus 4.5 in uncharted territory. The lead is clear, if not enormous: OpenAI’s latest coding-focused model trails by 3 percentage points, while Google’s Gemini 3 Pro trails by 4.7.
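To make the 80% figure concrete: SWE-bench Verified is a human-validated subset of 500 SWE-bench tasks. For each task, the model receives a repository and an issue description and must produce a patch; the task counts as resolved only if the tests that reproduce the issue now pass and the previously passing tests still pass. The Python below is a simplified sketch of that scoring rule, not the official evaluation harness. The `TaskResult` type and the function names are hypothetical, although the two test groups mirror the dataset’s `FAIL_TO_PASS` and `PASS_TO_PASS` fields. It also shows why the gaps quoted above are percentage points rather than percent.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Test outcomes for one task after the model's patch is applied (hypothetical type)."""
    fail_to_pass: dict[str, bool]  # tests the fix must make pass -> did they pass?
    pass_to_pass: dict[str, bool]  # tests that must keep passing -> did they pass?


def is_resolved(result: TaskResult) -> bool:
    # Resolved only if every target test now passes AND nothing regressed.
    return all(result.fail_to_pass.values()) and all(result.pass_to_pass.values())


def resolved_rate(results: list[TaskResult]) -> float:
    """Percentage of tasks resolved (0.0 for an empty list)."""
    if not results:
        return 0.0
    return 100.0 * sum(is_resolved(r) for r in results) / len(results)


def gap_points(a: float, b: float) -> float:
    """Difference between two percentages, in percentage points."""
    return round(a - b, 1)


# Scores as reported in this article.
scores = {"Claude Opus 4.5": 80.9, "GPT-5.1-Codex-Max": 77.9, "Gemini 3 Pro": 76.2}
leader = scores["Claude Opus 4.5"]
for name, score in scores.items():
    if name != "Claude Opus 4.5":
        print(f"{name}: {gap_points(leader, score)} points behind")
```

A patch that fixes the issue but breaks an unrelated test scores zero for that task, which is what makes the benchmark harder to game than a simple "does it compile" check.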

But benchmarks can be gamed. What matters is real-world performance, and Anthropic tested Opus 4.5 on something more brutal: their internal take-home exam for prospective performance engineers. The model didn’t just pass—it scored higher than any human candidate in the company’s history. Let that sink in.

The model also leads Terminal-Bench 2.0 with 59.3% accuracy and tops 7 of the 8 programming languages on SWE-bench Multilingual. This isn’t a one-trick pony optimized for a single benchmark. Across these evaluations, it’s consistently the best coding model available.

Claude Code Comes to Desktop

Claude Code, which until now ran in the terminal, in IDEs, and on the web and mobile, is now also available in the desktop app. This matters because developers don’t live in web browsers; they work in native tools alongside their terminals and IDEs. The desktop integration brings multi-session parallel development, git worktrees for working on multiple branches simultaneously, and native operation that doesn’t disrupt existing workflows.
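Git worktrees are what make parallel sessions practical: each worktree is a separate working directory with its own checked-out branch, all backed by a single repository. The following is a minimal Python sketch that drives the standard `git worktree add -b <branch> <path>` command through `subprocess`. It is not how Claude Code itself is implemented; the helper names, branch names, and throwaway commit identity are hypothetical, and it assumes `git` is on your `PATH`.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path


def git_available() -> bool:
    """True if a git executable is on PATH."""
    return shutil.which("git") is not None


def git(repo: Path, *args: str) -> str:
    """Run a git command inside `repo` and return stdout (raises on failure)."""
    # Hypothetical identity passed via -c so the sketch works without global config.
    cmd = ["git", "-c", "user.name=Sketch", "-c", "user.email=sketch@example.com",
           "-c", "commit.gpgsign=false", *args]
    result = subprocess.run(cmd, cwd=repo, check=True, capture_output=True, text=True)
    return result.stdout


def add_worktree(repo: Path, branch: str, path: Path) -> Path:
    """Create `branch` from HEAD and check it out in its own directory at `path`."""
    git(repo, "worktree", "add", "-b", branch, str(path))
    return path


def demo() -> str:
    """Build a scratch repo with two side-by-side worktrees; return `git worktree list`."""
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp) / "main"
        repo.mkdir()
        git(repo, "init")
        git(repo, "commit", "--allow-empty", "-m", "initial commit")  # worktrees need a HEAD
        # One branch per parallel session (branch names are hypothetical).
        add_worktree(repo, "feature-a", Path(tmp) / "wt-a")
        add_worktree(repo, "feature-b", Path(tmp) / "wt-b")
        return git(repo, "worktree", "list")
```

Calling `demo()` returns the `git worktree list` output, which shows each directory next to its branch. Because every worktree has its own checkout, moving between sessions never requires stashing uncommitted work or keeping separate clones.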

The platform handles end-to-end development cycles: reading GitHub issues, writing code, running tests, and submitting pull requests. It integrates with VS Code, Cursor, Windsurf, and JetBrains IDEs, and runs on macOS, Linux, and Windows. An updated Plan Mode has Claude draft a plan for complex, multi-step tasks before executing it, and several agent sessions can run in parallel.

This is where AI coding assistants should have been all along—embedded in the actual development environment, not bolted on as an afterthought.

66% Cheaper Than Its Predecessor

Here’s where things get interesting. Opus 4.5 is dramatically better than its predecessor, Opus 4.1, and 66% cheaper. At $5 per million input tokens and $25 per million output tokens, Anthropic has made its flagship model accessible to individual developers, not just enterprises with deep pockets.
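The pricing arithmetic is easy to check. This sketch compares a hypothetical workload at Opus 4.5’s rates against Opus 4.1’s list price of $15 per million input tokens and $75 per million output tokens, a figure this article doesn’t state and that the code marks as an assumption. The `Pricing` class and the token counts are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_mtok: float   # USD per million input tokens
    output_per_mtok: float  # USD per million output tokens

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """USD cost for the given token counts."""
        return (input_tokens * self.input_per_mtok
                + output_tokens * self.output_per_mtok) / 1_000_000


OPUS_4_5 = Pricing(5.0, 25.0)   # rates from this article
OPUS_4_1 = Pricing(15.0, 75.0)  # assumption: predecessor's list price

# Hypothetical workload: 2M input tokens, 500K output tokens.
new = OPUS_4_5.cost(2_000_000, 500_000)
old = OPUS_4_1.cost(2_000_000, 500_000)
print(f"Opus 4.5: ${new:.2f}  Opus 4.1: ${old:.2f}  saving: {1 - new / old:.1%}")
```

Because both rates fell by the same factor of three, the saving is two-thirds for any mix of input and output tokens; the headline 66% is that fraction rounded down.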

The price cut isn’t just about market share; it’s backed by genuine efficiency improvements. At medium effort, Opus 4.5 matches Sonnet 4.5’s best SWE-bench Verified score while using 76% fewer output tokens. At its highest effort level, it exceeds Sonnet 4.5’s score by 4.3 percentage points while using 48% fewer output tokens. You’re getting better results from fewer tokens, and fewer tokens means a smaller bill.
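Token efficiency compounds with price. This sketch estimates output-token spend on a hypothetical task suite, assuming Sonnet 4.5’s list price of $15 per million output tokens (not stated in this article) and assuming both of Opus 4.5’s reductions are measured against the same Sonnet 4.5 baseline. It ignores input tokens, which the article doesn’t quantify; the function name and baseline token count are illustrative.

```python
def output_cost(baseline_tokens: int, reduction: float, usd_per_mtok: float) -> float:
    """Output spend after cutting `baseline_tokens` by `reduction` (0.76 means 76% fewer)."""
    tokens = baseline_tokens * (1 - reduction)
    return tokens * usd_per_mtok / 1_000_000


SONNET_4_5_OUTPUT = 15.0  # assumption: Sonnet 4.5 list price, USD per million output tokens
OPUS_4_5_OUTPUT = 25.0    # from this article

baseline = 1_000_000  # hypothetical: output tokens Sonnet 4.5 spends on a task suite
sonnet = output_cost(baseline, 0.0, SONNET_4_5_OUTPUT)
opus_medium = output_cost(baseline, 0.76, OPUS_4_5_OUTPUT)  # matches Sonnet 4.5's best score
opus_high = output_cost(baseline, 0.48, OPUS_4_5_OUTPUT)    # 4.3 points higher
print(f"Sonnet 4.5: ${sonnet:.2f}  Opus 4.5 medium: ${opus_medium:.2f}  "
      f"Opus 4.5 high: ${opus_high:.2f}")
```

Under these assumptions the pricier model spends less than half of Sonnet 4.5’s output budget to reach the same score at medium effort, and still comes in cheaper at its highest effort level.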

This combination of performance and affordability changes the calculus for AI-assisted development. The question is no longer whether you can afford to use top-tier AI coding tools, but whether you can afford not to.

The Broader Picture

Opus 4.5 is Anthropic’s third major model launch in two months, following Sonnet 4.5 and Haiku 4.5. This rapid release cadence reflects the current state of AI development: intense competition driving both performance and accessibility improvements simultaneously.

The release also includes ecosystem updates: Claude for Chrome is expanding to all Max users, Claude for Excel is now generally available, and long conversations in the Claude apps now automatically summarize earlier context so they can keep going instead of stopping at the context limit. Anthropic is building a comprehensive platform, not just releasing isolated models.
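Automatic summarization of this kind is a general context-management technique: once a conversation nears its token budget, older turns are folded into a summary while recent turns stay verbatim. The sketch below illustrates that idea only and is not Anthropic’s implementation. `compact`, the word-count token estimate, and the pluggable `summarize` callback (which in practice would be a model call) are all hypothetical.

```python
from typing import Callable

Message = dict[str, str]  # {"role": ..., "content": ...}


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: roughly one token per word.
    return len(text.split())


def compact(history: list[Message], limit: int, keep_recent: int,
            summarize: Callable[[list[Message]], str]) -> list[Message]:
    """If `history` exceeds `limit` tokens, replace older turns with one summary message."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    total = sum(estimate_tokens(m["content"]) for m in history)
    if total <= limit or len(history) <= keep_recent:
        return history  # under budget, or nothing old enough to fold
    older, recent = history[:-keep_recent], history[-keep_recent:]
    summary: Message = {"role": "user",
                        "content": "Summary of earlier conversation: " + summarize(older)}
    return [summary, *recent]


# Usage with a toy summarizer in place of a model call.
history = [
    {"role": "user", "content": "word " * 50},
    {"role": "assistant", "content": "word " * 50},
    {"role": "user", "content": "latest question"},
]
compacted = compact(history, limit=60, keep_recent=1,
                    summarize=lambda msgs: f"{len(msgs)} earlier messages")
print(compacted[0]["content"])  # Summary of earlier conversation: 2 earlier messages
```

Keeping the most recent turns verbatim preserves the details the model is most likely to need next, while the summary carries forward older decisions at a fraction of the token cost.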

What This Means for Developers

We’ve reached a milestone where AI coding assistants aren’t just helpful tools—they’re approaching human-level competence on complex engineering tasks. Opus 4.5 outperforming every human candidate on a performance engineering exam isn’t a fluke. It’s a signal that the relationship between developers and AI is fundamentally shifting.

The desktop integration of Claude Code matters because it meets developers where they actually work. The price reduction matters because it democratizes access to capabilities that were recently exclusive to well-funded teams. And the benchmark leadership matters because it shows these improvements are real, measurable, and consistent across multiple evaluation frameworks.

Anthropic has set a new standard. The question now is how quickly the rest of the industry can catch up.