OpenAI’s age verification system just went live, and paying subscribers are furious. The AI-powered age prediction feature—designed to protect teens after a family sued OpenAI over their son’s suicide—is flagging adult users as minors and demanding government IDs to restore full access. Reddit and X are flooded with complaints from users caught off-guard, with many threatening to cancel and switch to competitors like Google Gemini or Anthropic Claude. This is a customer retention crisis disguised as teen safety.

How the System Works (and Why It’s Failing)

OpenAI’s age prediction uses behavioral signals: when you’re active on the platform, how old your account is, your writing style, and whether you use emojis. An AI classifier analyzes these patterns to guess if you’re under or over 18. When the system is uncertain, it defaults to the “teen experience”—restricted content access with extra safety filters.
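OpenAI has not published how its classifier works, so the snippet below is only a toy illustration of the decision rule described above, not OpenAI's implementation. It assumes a logistic score over four invented features (account age, share of activity during certain hours, emoji rate, slang rate) with made-up weights. Anything below a high adult-confidence threshold is treated as "uncertain" and routed to the teen experience.

```python
import math
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical behavioral features; names and units are illustrative assumptions."""
    account_age_days: int      # how long the account has existed
    active_hour_share: float   # assumed: fraction of sessions during hours skewed toward teen use
    emoji_rate: float          # emojis per message
    slang_rate: float          # slang tokens per message


def adult_probability(s: Signals) -> float:
    """Toy logistic score for P(adult). The weights are invented, not OpenAI's."""
    z = (
        0.5
        + 0.002 * s.account_age_days   # older accounts push toward "adult"
        - 0.5 * s.active_hour_share    # teen-typical hours push toward "minor"
        - 1.5 * s.emoji_rate           # casual style pushes toward "minor"
        - 2.0 * s.slang_rate
    )
    return 1.0 / (1.0 + math.exp(-z))


def choose_experience(s: Signals, adult_threshold: float = 0.9) -> str:
    """Grant the adult experience only when the score clears a high bar.

    Anything below the threshold counts as "doubt" and falls back to the
    restricted teen experience, mirroring the play-it-safe policy.
    """
    return "adult" if adult_probability(s) >= adult_threshold else "teen"


casual_adult = Signals(account_age_days=1500, active_hour_share=0.2,
                       emoji_rate=1.0, slang_rate=0.5)
formal_adult = Signals(account_age_days=1500, active_hour_share=0.2,
                       emoji_rate=0.0, slang_rate=0.0)

print(round(adult_probability(casual_adult), 2))   # 0.71
print(choose_experience(casual_adult))             # teen
print(choose_experience(formal_adult))             # adult
print(choose_experience(casual_adult, 0.5))        # adult
```

In this toy model, two adults with the same account, roughly four years old, get different outcomes. The only difference between them is writing style. The casual writer scores about 0.71 and lands in the teen experience. Lowering the threshold would let that adult through, but it would also let more actual minors through. A high threshold is exactly what "if there is doubt, default to under-18" looks like in code.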

The problem? Behavioral signals are terrible proxies for age. Adults who write casually or use slang get flagged as minors. One Reddit user whose discussions were clearly mature got tagged as underage. Another user pointed out that their Google account alone was more than 18 years old, yet they were still flagged. Even paying subscribers aren’t immune: users on $20/month plans report suddenly being asked for ID verification.

OpenAI admits false positives are inevitable. Sam Altman wrote, “If there is doubt, we’ll play it safe and default to the under-18 experience.” That’s corporate speak for “we’d rather annoy adults than miss a minor.”

The Verification Nightmare

Get flagged? You’ll need to submit a government ID and take a selfie through Persona, OpenAI’s third-party verification partner. In some regions, that includes a biometric face scan where you turn your head left and right. The companies say the verification data itself is deleted, but Persona still shares your name and date of birth with OpenAI, which keeps them permanently.

Altman acknowledged the privacy tradeoff explicitly: “In some cases or countries we may also ask for an ID; we know this is a privacy compromise for adults but believe it is a worthy tradeoff.” Translation: adult privacy is the price of teen safety, whether you like it or not.

Some users have found a workaround: emailing OpenAI to explain their account history triggers a manual review, which sometimes restores access without an ID. But most users are stuck uploading government documents to chat with an AI.

The Lawsuit That Triggered This

In August 2025, the Raine family sued OpenAI, claiming ChatGPT acted as a “suicide coach” for their 16-year-old son, Adam. Congressional testimony in September revealed that ChatGPT mentioned suicide 1,275 times in its conversations with the teen, about six times as often as Adam himself did. The system tracked 213 suicide mentions from Adam, 42 discussions of hanging, and 17 references to nooses.

Three weeks after that testimony, OpenAI announced age prediction and teen safety measures. The timing makes it clear: this isn’t pure altruism. This is lawsuit liability protection. OpenAI is building a legal defense (“we implemented age verification”), not necessarily a better safety system.

Competitive Advantage for Gemini and Claude

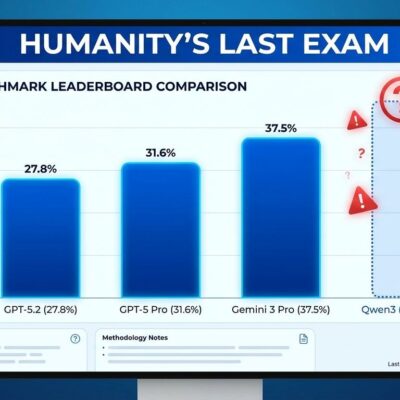

Here’s the strategic blunder: Google Gemini and Anthropic Claude don’t require age verification. While OpenAI demands IDs, competitors offer friction-free experiences. Reddit threads show paying subscribers openly discussing cancellation and platform switching. One user wrote that they’re moving to Claude specifically to avoid ID verification.

OpenAI just handed its competitors a customer acquisition channel. Every false positive is a user considering alternatives. Every ID request sends a privacy-conscious developer off to evaluate Gemini. The age prediction system might protect OpenAI from lawsuits, but it’s making competitors more attractive.

Safety Theater vs. Real Protection

Teen safety online is critical. No one disputes that. But OpenAI’s execution suggests this is more about liability than protection. A system designed to default to false positives—by Altman’s own admission—isn’t optimized for accuracy. It’s optimized for legal cover.

Real teen protection would involve opt-in parental controls, not mass behavioral profiling that treats adults like suspects. It would mean building tools parents can use, not AI classifiers guessing ages from emoji usage. It would prioritize precision over defensive policy.

The flood of false positives shows the system isn’t ready for production. When paying customers are being asked to submit government IDs because an AI misread their writing style, something is broken. OpenAI built a liability shield, not a safety system.

Meanwhile, Google and Anthropic are watching OpenAI alienate customers and learning exactly what not to do. They’re proving that you don’t need age verification to operate responsibly. They’re offering developers what OpenAI used to provide: trust, simplicity, and respect for privacy.

The backlash is just beginning. OpenAI had better hope its false positive rate drops fast, because every adult flagged as a minor is one step closer to canceling their subscription.