OpenAI launched GPT-5.1-Codex-Max this week, a coding model aimed at one of AI assistants’ biggest limitations: forgetting what they were doing halfway through complex tasks. Through a “compaction mechanism,” it can work autonomously for over 24 hours on multi-step refactors and architectural changes spanning millions of tokens. Early developer reactions follow a predictable pattern: brilliant insights mixed with baffling mistakes, and a tool that demands as much coaching as the code it provides.

Compaction Beats Bigger Context Windows

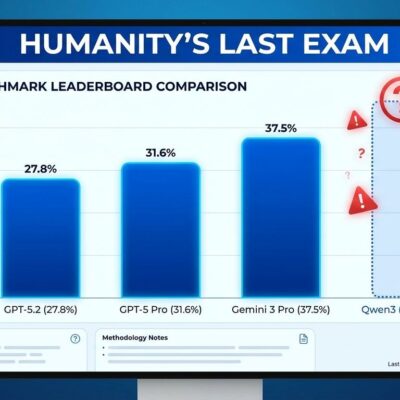

The context window arms race hit absurd territory this year. Claude Sonnet 4, Gemini 2.5 Pro, and GPT-4.1 all sport 1 million token windows. Magic.dev’s LTM-2-Mini claims 100 million tokens. GPT-5.1-Codex-Max took a different approach.

Instead of expanding memory, OpenAI built compaction—a mechanism that prunes conversation history while preserving critical context. The model automatically compresses when approaching limits, enabling indefinite work sessions instead of hitting walls.
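OpenAI has not published how compaction works internally, so the following is only a minimal sketch of the general idea: once a history nears its token budget, older turns are replaced by a summary while flagged decisions and the most recent turns are kept verbatim. Every name here (`Message`, `pinned`, `compact`, the word-count tokenizer, the truncating summarizer) is a hypothetical stand-in, not part of any OpenAI API.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str
    text: str
    pinned: bool = False  # hypothetical flag for decisions that must survive


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def total_tokens(history: list[Message]) -> int:
    return sum(count_tokens(m.text) for m in history)


def summarize(messages: list[Message]) -> Message:
    # Placeholder summary: the first 8 words of each dropped message.
    # A real system would presumably ask a model to write the summary.
    heads = [" ".join(m.text.split()[:8]) for m in messages]
    return Message("system", "Summary of earlier work: " + " | ".join(heads))


def compact(history: list[Message], limit: int,
            keep_recent: int = 2, threshold: float = 0.8) -> list[Message]:
    """Return a shorter history once usage reaches threshold * limit."""
    if total_tokens(history) < threshold * limit:
        return history  # still comfortably under the limit
    split = max(len(history) - keep_recent, 0)
    older, recent = history[:split], history[split:]
    pinned = [m for m in older if m.pinned]
    droppable = [m for m in older if not m.pinned]
    if not droppable:
        return history  # nothing left to compress
    # Pinned decisions first, then the summary, then the latest turns verbatim.
    return pinned + [summarize(droppable)] + recent


history = [
    Message("user", "Migrate the billing module to the new API " * 5),
    Message("assistant", "Decision: keep the public function names unchanged", pinned=True),
    Message("tool", "debug log line " * 30),
    Message("assistant", "Updated three call sites"),
    Message("user", "Now run the tests"),
]
compacted = compact(history, limit=100)
print(total_tokens(history), "->", total_tokens(compacted))
```

The design choice worth noticing is that the stale tool output is the part that shrinks, while the pinned decision and the latest instructions pass through untouched.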

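OpenAI reports efficiency gains as well. On SWE-bench Verified, GPT-5.1-Codex-Max at medium reasoning effort uses about 30% fewer thinking tokens than GPT-5.1-Codex while matching or beating its accuracy. On the SWE-Lancer IC SWE benchmark, its score rose from 66.3% to 79.9%, a 13.6 percentage point improvement. In OpenAI’s internal evaluations, it also completed tasks lasting more than 24 hours, which earlier models could not sustain once they ran out of context.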
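A percentage point gain and a relative gain are easy to confuse, so here is a small worked check using the two scores quoted above (66.3% and 79.9%). The function names are illustrative only.

```python
def percentage_point_gain(before: float, after: float) -> float:
    # Absolute difference between two percentages.
    return after - before


def relative_gain(before: float, after: float) -> float:
    # Improvement expressed as a percentage of the starting score.
    return (after - before) / before * 100


points = round(percentage_point_gain(66.3, 79.9), 1)
relative = round(relative_gain(66.3, 79.9), 1)
print(f"{points} percentage points, {relative}% relative")
```

The same jump is 13.6 percentage points in absolute terms and roughly a 20.5% improvement relative to the earlier score.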

This matters because size without management creates noise. A million-token window doesn’t help if the model can’t distinguish relevant context from stale debugging logs. Compaction addresses what to keep, not just how much to store.

What 24-Hour Autonomous Coding Actually Means

OpenAI didn’t just theorize about extended sessions—they tested them. Internal evaluations saw GPT-5.1-Codex-Max work independently for over 24 hours on comprehensive refactors, security vulnerability remediation across entire codebases, and legacy system migrations.

The practical applications target enterprise-scale problems. Imagine pointing an AI at a Django 1.x codebase to migrate to 5.x, updating deprecated APIs, fixing breaking changes, and ensuring tests pass. These aren’t snippet-generation problems—they’re architectural marathons requiring sustained context.

The model handles up to 10 million lines of code. When it approaches context limits, compaction kicks in automatically, summarizing what happened while preserving decisions that matter.

But here’s the reality developers are discovering: autonomous doesn’t mean unsupervised. One Reddit user put it this way: “brilliant one moment, mind-bogglingly stupid the next.” Without clear boundaries, the model fixates on edge cases or drifts into unnecessary complexity.

The Literal Genie Problem

A Hacker News thread revealed an interesting dynamic. One developer described GPT-5.1-Codex-Max as “extremely, painfully, doggedly persistent in following every last character” of instructions—like a literal genie that will technically fulfill your wish while missing the spirit entirely.

One developer joked that if asked to make 1+1 equal 3, it would “rewrite the entire V8 engine to break arithmetic” rather than question the request. It will spend 30 minutes on a convoluted solution because you told it to, not because the solution makes sense.

This contrasts sharply with Claude Code, which tends to ignore instructions it disagrees with. It’s a trade-off: precision versus adaptability. Codex follows orders. Claude uses judgment. Neither approach is universally better.

The successful early adopters stopped asking “can AI do this?” and started asking “how do I help AI do this better?” They treat themselves as AI coaches, not tool users. Clear instructions with well-defined scope prevent wasted cycles.

The AI Coding Assistant Wars Heat Up

GPT-5.1-Codex-Max enters a crowded battlefield. GitHub reported that developers finished a task 55% faster with Copilot in a controlled study, and the product now integrates multiple models. Claude Code delivers terminal-native workflows with a Plan Mode that some users call “game-changing.” Cursor promises “at least 2x improvement over Copilot” as a full AI IDE.

One Hacker News commenter warned Anthropic: “They better make a big move or this will kill Claude Code.” Competitive pressure is real. Multiple developers noted that Codex CLI has “quite caught up to Claude Code” after being “woefully behind.”

But maintain healthy skepticism. Studies have found that AI tools can increase completion time by 19% for experienced developers, and that defect rates grew 4x in AI-assisted code. The tools promise speed; reality delivers mixed results.

GPT-5.1-Codex-Max’s bet is that long-horizon autonomy differs from snippet generation. Reducing context-switching overhead on complex refactors could deliver value where autocomplete falls short.

Context Engineering Becomes a Developer Skill

What this really signals is a shift in how developers work. The industry moved from ad-hoc prompting to rigorous context engineering—carefully preparing structured information so AI models perform reliably over extended sessions.

The Model Context Protocol (MCP) emerged as a standard for connecting models to external tools and data sources, which gives developers more control over what enters the context. Developers also write AGENTS.md files to constrain behavior, anchor coding agents to reference applications, and build frameworks that prevent agents from “going haywire.”
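To make the idea of constraining an agent concrete, the sketch below renders a small set of rules into Markdown text of the kind a team might place in an AGENTS.md file. AGENTS.md is free-form Markdown with no required schema, so the section titles, the `AgentRules` class, and the example project and paths are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AgentRules:
    project: str
    editable_paths: list[str]
    forbidden_actions: list[str]
    done_when: list[str]

    def render(self) -> str:
        # Each section becomes a Markdown heading followed by a bullet list.
        sections = [
            ("Scope: only edit files under", self.editable_paths),
            ("Never do", self.forbidden_actions),
            ("The task is done when", self.done_when),
        ]
        lines = [f"# Agent instructions for {self.project}", ""]
        for title, items in sections:
            lines.append(f"## {title}")
            lines.extend(f"- {item}" for item in items)
            lines.append("")
        return "\n".join(lines)


# Hypothetical rules for a framework migration.
rules = AgentRules(
    project="django-migration",
    editable_paths=["src/billing/", "tests/billing/"],
    forbidden_actions=["Change public function names", "Delete failing tests"],
    done_when=["The full test suite passes", "No deprecated API calls remain"],
)
text = rules.render()
print(text)
```

Writing the stopping conditions down explicitly is the point: a model that follows instructions literally needs to be told where the task ends as well as where it starts.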

This isn’t about AI replacing developers. It reinforces why skilled engineers matter. Someone has to set boundaries, catch when the model drifts off track, and know what “good” looks like.

Consider the numbers: 80% of new GitHub developers use Copilot within their first week. An estimated 25% of companies are piloting agentic AI projects in 2025. The low-code market grew to $65 billion. Yet professional developers remain critical; they’re just doing different work. Less typing, more directing.

GPT-5.1-Codex-Max is available now in Codex CLI, IDE extensions, and code review tools for ChatGPT Plus, Pro, Business, and Enterprise users. Whether it lives up to the 24-hour autonomous coding promise depends on whether developers treat it as a partner requiring constant oversight, not a replacement they can ignore.