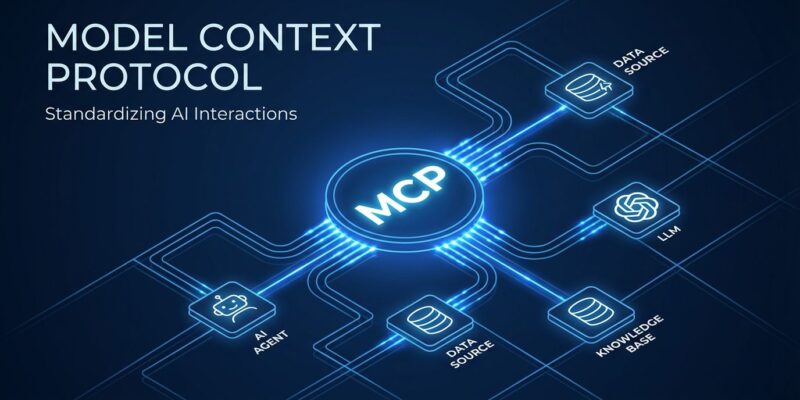

Anthropic is releasing the next version of the Model Context Protocol specification on November 25, 2025, exactly one year after launch. The upgrades target the biggest pain points enterprises hit during MCP's rapid rise to industry standard. The update delivers server discovery, async operations, and scalability improvements, a sign that MCP has graduated from experimental protocol to production infrastructure.

What’s New in the November 25 Update

The biggest improvement is server discovery via .well-known URLs. Today, the only way to learn what an MCP server can do is to connect to it. Starting November 25, servers advertise their capabilities through /.well-known/mcp/ endpoints. These follow the well-known URI convention defined in RFC 8615, the same mechanism behind paths like /.well-known/openid-configuration and /.well-known/security.txt. Registries can catalog servers automatically, and clients can browse capabilities without opening a connection. It is a simple fix that removes a lot of friction.
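To make the discovery flow concrete, here is a minimal Python sketch of what a client or registry might do. It assumes a metadata file at the placeholder path `/.well-known/mcp/server.json` and a hypothetical JSON shape (`name`, `tools`, `capabilities.async`). The article only names the `/.well-known/mcp/` prefix, so treat the file name and fields as illustrative, not as the specification's schema. The RFC 8615 part does hold: well-known URIs live at the root of an origin, so any path or query on the server URL is dropped.

```python
import json
from urllib.parse import urlsplit, urlunsplit

# Hypothetical file name under the /.well-known/mcp/ prefix named in the article.
WELL_KNOWN_PATH = "/.well-known/mcp/server.json"


def discovery_url(server_url: str) -> str:
    """Map a server URL to its well-known metadata URL at the origin root (RFC 8615)."""
    parts = urlsplit(server_url)
    if parts.scheme not in ("https", "http"):
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    # Well-known URIs hang off the origin, so path, query, and fragment are dropped.
    return urlunsplit((parts.scheme, parts.netloc, WELL_KNOWN_PATH, "", ""))


def summarize_metadata(raw: str) -> dict:
    """Extract what a registry might index, without opening an MCP session.

    The field names (name, tools, capabilities.async) are an assumed schema.
    """
    doc = json.loads(raw)
    return {
        "name": doc["name"],
        "tools": sorted(tool["name"] for tool in doc.get("tools", [])),
        "supports_async": bool(doc.get("capabilities", {}).get("async", False)),
    }


# Usage: a registry crawler would fetch discovery_url(...) over HTTPS and pass the body here.
sample = '{"name": "reports", "tools": [{"name": "run_report"}, {"name": "list_reports"}], "capabilities": {"async": true}}'
url = discovery_url("https://tools.example.com/mcp?session=abc")
entry = summarize_metadata(sample)
```

Discovery is a plain HTTPS GET against a predictable URL. That means a registry crawler could index many servers this way without negotiating an MCP session with any of them.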

Async operations finally arrive. In today's MCP, tool calls are synchronous: the client calls a tool and waits until it finishes. The update adds async support, so a server can kick off a long-running task and the client can check back later. Generating reports, processing datasets, or running multi-step workflows that take hours becomes practical rather than theoretical.
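The "start now, check back later" shape can be sketched in-process with `asyncio`. The `TaskRunner` class, its `start`/`status`/`result` methods, and the status strings are hypothetical names chosen for illustration. They model the polling pattern described above, not the wire format of the November 25 specification.

```python
import asyncio
import uuid


class TaskRunner:
    """Hypothetical in-memory model of a long-running tool call that returns a task ID."""

    def __init__(self) -> None:
        self._tasks: dict[str, asyncio.Task] = {}

    def start(self, coro) -> str:
        # Schedule the work and hand back an ID immediately instead of blocking the caller.
        task_id = uuid.uuid4().hex
        self._tasks[task_id] = asyncio.create_task(coro)
        return task_id

    def status(self, task_id: str) -> str:
        task = self._tasks[task_id]
        if not task.done():
            return "working"
        if task.cancelled():
            return "cancelled"
        return "failed" if task.exception() is not None else "completed"

    def result(self, task_id: str):
        return self._tasks[task_id].result()


async def generate_report(rows: int) -> str:
    await asyncio.sleep(0.05)  # stands in for minutes or hours of real work
    return f"report over {rows} rows"


async def client() -> tuple[list[str], str]:
    runner = TaskRunner()
    task_id = runner.start(generate_report(10_000))
    observed = []
    # Poll until the task leaves the "working" state; the client is free between polls.
    while (state := runner.status(task_id)) == "working":
        observed.append(state)
        await asyncio.sleep(0.01)
    observed.append(state)
    return observed, runner.result(task_id)


observed, report = asyncio.run(client())
```

The key design choice is that `start` returns an identifier immediately. As a result, the caller's connection is never held open while the work runs.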

The protocol also refines scalability for horizontal deployments. Enterprises running MCP at scale hit rough edges around server startup and session handling across multiple instances, and the November 25 release smooths those edges. Official extensions formalize patterns for domains such as healthcare and finance. A new SDK tiering system clarifies which SDKs meet compliance, maintenance, and feature standards.
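One common way to make sessions survive horizontal scaling is to keep session state out of process memory, so any instance behind a load balancer can serve any request. The sketch below assumes that approach. `SharedSessionStore` is a hypothetical in-memory stand-in for an external store such as Redis or a database. The article does not say this is how the November 25 release handles sessions.

```python
from dataclasses import dataclass


class SharedSessionStore:
    """Hypothetical stand-in for an external store (e.g. Redis or a database) all instances share."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}

    def get(self, session_id: str) -> dict:
        # Return a copy so callers cannot mutate shared state without calling put().
        return dict(self._data.get(session_id, {"calls": 0}))

    def put(self, session_id: str, state: dict) -> None:
        self._data[session_id] = dict(state)


@dataclass
class ServerInstance:
    name: str
    store: SharedSessionStore

    def handle(self, session_id: str, tool: str) -> str:
        # No per-process session memory: load state, update it, write it back.
        state = self.store.get(session_id)
        state["calls"] += 1
        state["last_tool"] = tool
        self.store.put(session_id, state)
        return f"{self.name} served call #{state['calls']} for {session_id}"


# Usage: a round-robin load balancer sends one session's calls to different instances.
store = SharedSessionStore()
instances = [ServerInstance("a", store), ServerInstance("b", store)]
log = [instances[i % 2].handle("sess-1", "search") for i in range(3)]
```

Instances hold no session state of their own, so adding or removing one does not strand clients mid-session. A production store would also need atomic updates. Otherwise, two instances handling the same session at once could overwrite each other's read-modify-write.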

Why This Update Matters Now

The timing tells the story. MCP launched in November 2024 as Anthropic's bid to standardize how AI agents connect to tools and data. In March 2025, OpenAI announced MCP support, starting with the Agents SDK and extending to the ChatGPT desktop app and the Responses API. Google and Microsoft followed. The protocol war is over. MCP won.

Adoption numbers back this up: analysts project that 90% of organizations will use MCP by the end of 2025. Block has deployed more than 60 MCP servers. BCC Research launched a production MCP server on November 21, four days before this update. Anthropic's engineering team reported a roughly 98% token reduction (from 150,000 down to 2,000 tokens) by applying a code execution pattern to MCP tool use.
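The percentage follows directly from the two token counts:

```latex
\text{reduction} = 1 - \frac{2{,}000}{150{,}000} = 1 - 0.01\overline{3} \approx 0.987 \quad (\approx 98.7\%)
```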

This update is Anthropic saying: you adopted MCP, we heard your production pain points, here are the fixes. Server discovery, async support, and scalability aren't flashy features. They're the infrastructure that separates a promising protocol from production-ready tooling.

What Developers Get

Server discovery answers the question “how do I find MCP servers?” Before, you had to know a server existed, then connect to see what it offered. Now you can browse a registry, much as you would browse npm packages or Docker images. Not revolutionary, just table stakes. But table stakes matter when you're building on a protocol.

Async operations unlock previously impractical use cases: long-running data processing, multi-step workflows, and background tasks that report progress. These are standard backend patterns that MCP finally supports. The difference between synchronous-only and async-capable isn't academic. It's the difference between toy demos and production systems.

Token efficiency gains are real. With a code execution pattern, the agent writes code that calls MCP tools inside an execution environment. Data can then be filtered there before anything reaches the model. Instead of pulling 10,000 rows through the context window, the agent filters them in the execution environment and returns a summary. Anthropic's reported 98% token reduction shows how much this can save.
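Here is a minimal Python sketch of that filtering step, under stated assumptions. `fetch_rows` is a hypothetical stand-in for a tool that returns a large result set. `summarize_in_sandbox` represents code the agent would run inside the execution environment. Only the small `summary` dictionary would be returned to the model, so the 10,000 rows never enter its context.

```python
import json
import random
import statistics


def fetch_rows(n: int) -> list[dict]:
    """Hypothetical stand-in for an MCP tool that returns a large result set."""
    rng = random.Random(0)  # fixed seed so the example is reproducible
    return [
        {"id": i, "region": rng.choice(["EU", "US", "APAC"]), "amount": rng.randint(1, 500)}
        for i in range(n)
    ]


def summarize_in_sandbox(rows: list[dict], region: str, threshold: int) -> dict:
    """Runs in the execution environment; only the returned dict goes back to the model."""
    matches = [r for r in rows if r["region"] == region and r["amount"] >= threshold]
    amounts = [r["amount"] for r in matches]
    return {
        "region": region,
        "matching_rows": len(matches),
        "total_amount": sum(amounts),
        "median_amount": statistics.median(amounts) if amounts else None,
        "sample_ids": [r["id"] for r in matches[:5]],
    }


rows = fetch_rows(10_000)
summary = summarize_in_sandbox(rows, region="EU", threshold=400)

# Rough proxy for context cost: characters of JSON the model would otherwise read.
full_size = len(json.dumps(rows))
summary_size = len(json.dumps(summary))
```

Comparing `full_size` with `summary_size` gives a rough sense of the savings. Actual token counts depend on the tokenizer and the data.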

Security Still Needs Work

Reality check: Equixly's security assessment found that 43% of tested MCP implementations were vulnerable to command injection, 30% to server-side request forgery, and 22% allowed arbitrary file access. The protocol isn't the problem; the implementations are. Building MCP servers? You need secure sandboxing, resource limits, and monitoring. MCP gives you power, and power without guardrails is a vulnerability.
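The three vulnerability classes above map onto three familiar guardrails. The Python sketch below shows a generic version of each:

- path containment against arbitrary file access,
- an allowlisted command run without a shell against command injection,
- a host allowlist against SSRF.

`SANDBOX_ROOT`, `ALLOWED_COMMANDS`, and `ALLOWED_HOSTS` are hypothetical values. None of this is part of the MCP specification or Equixly's methodology.

```python
import subprocess
from pathlib import Path
from urllib.parse import urlsplit

# Hypothetical policy values for illustration.
SANDBOX_ROOT = Path("/srv/mcp-data").resolve()
ALLOWED_COMMANDS = {"git", "ls"}
ALLOWED_HOSTS = {"api.example.com"}


def safe_path(user_path: str, root: Path = SANDBOX_ROOT) -> Path:
    """Arbitrary file access: reject paths that escape the sandbox root."""
    candidate = (root / user_path).resolve()  # collapses '..' and follows symlinks
    if not candidate.is_relative_to(root):
        raise PermissionError(f"path escapes sandbox: {user_path!r}")
    return candidate


def run_allowed_command(command: str, args: list[str]) -> str:
    """Command injection: allowlisted binary, argument list, no shell."""
    if command not in ALLOWED_COMMANDS:
        raise PermissionError(f"command not allowed: {command!r}")
    # With a list and shell=False (the default), ';' or '&&' in args are passed literally.
    completed = subprocess.run(
        [command, *args], capture_output=True, text=True, timeout=10, check=True
    )
    return completed.stdout


def check_outbound_url(url: str) -> str:
    """SSRF: only allow HTTPS requests to explicitly approved hosts."""
    parts = urlsplit(url)
    if parts.scheme != "https" or parts.hostname not in ALLOWED_HOSTS:
        raise PermissionError(f"outbound request blocked: {url!r}")
    return url
```

These checks are a starting point, not a complete defense. A hostname allowlist, for instance, does not stop DNS rebinding on its own. Real deployments still need the sandboxing, resource limits, and monitoring mentioned above.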

What’s Next

The MCP roadmap lists semantic context ranking, toolchain orchestration, IoT support, and enterprise registries as upcoming work. But the bigger picture is this: MCP is no longer one option among many. Building AI agents? MCP is the path. OpenAI adopted it instead of competing. Google and Microsoft are on board. The November 25 update removes the last barriers to enterprise-scale deployment.

The protocol war is over. Now comes the hard part: building systems that actually work.