In a randomized controlled trial, experienced developers using AI coding tools were 19% slower than when coding without them, yet believed they were 20% faster. This isn’t a minor discrepancy. For a 100-developer team, a 19% slowdown could cost $2.85 million annually in lost productivity. Meanwhile, trust in the accuracy of AI tools has collapsed from 40% to 29% in just one year, even as 80% of developers now use them. The AI coding productivity revolution was supposed to transform software development. Instead, it has created an expensive illusion, and companies are doubling down.

The Research: AI Makes Developers Slower, Not Faster

METR’s randomized controlled trial, conducted from February to June 2025 and published in July, tested 16 experienced open-source developers across 246 issues in repositories averaging 22,000+ stars and over 1 million lines of code. Each issue was randomly assigned to either allow or disallow AI assistance. When AI was allowed, developers mainly used Cursor Pro with Claude 3.5 and 3.7 Sonnet, and they took 19% longer to complete tasks than without AI assistance.

These weren’t junior developers learning to code. These were experts on their own codebases, with years of contribution history and substantial experience prompting LLMs. They accepted fewer than 44% of AI generations, meaning more than half of the generated code went unaccepted. The study compensated developers at $150 per hour for tasks averaging about 2 hours each: serious work on complex systems.

The perception gap is staggering. Before the study, developers predicted AI would reduce completion time by 24%. Economics and machine learning experts forecast 38-39% productivity gains. After experiencing the actual 19% slowdown, developers still estimated that AI had reduced their completion time by 20%. One likely explanation is that AI reduced cognitive load, making the work feel faster even though measured task completion time said otherwise.

For a 100-developer team with an average fully-loaded cost of $150,000 per developer, treating a 19% productivity loss as 19 developer-equivalents wasted gives $2.85 million in lost productivity annually, assuming the slowdown applies to all of the team’s work. Add $40,000 to $180,000 in tool subscriptions and implementation costs, and the total approaches $3 million per year, for tools marketed as productivity multipliers.
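The payroll arithmetic above can be written out as a small Python sketch. The first function reproduces the straightforward method (19% of a $15 million payroll). The second shows one alternative reading: if each task takes 1.19 times as long, output falls to 1/1.19 of baseline, so the lost share is roughly 16% rather than 19%. Both functions are hypothetical illustrations, not an established cost model, and both assume the METR slowdown applies to every hour of every developer’s work, which is a large extrapolation from a 16-developer study.

```python
"""Back-of-envelope cost of an AI-related slowdown.

Illustrative only: assumes the measured slowdown applies uniformly to all of a
team's work, which the underlying 16-developer study does not establish.
"""


def naive_payroll_loss(team_size: int, loaded_cost: float, slowdown: float) -> float:
    """Straightforward method: a 19% slowdown is treated as 19% of payroll lost."""
    return team_size * loaded_cost * slowdown


def throughput_payroll_loss(team_size: int, loaded_cost: float, slowdown: float) -> float:
    """Alternative reading: tasks take (1 + slowdown) times as long, so output
    drops to 1 / (1 + slowdown) of baseline and the lost share of payroll is
    1 - 1 / (1 + slowdown)."""
    lost_share = 1 - 1 / (1 + slowdown)
    return team_size * loaded_cost * lost_share


if __name__ == "__main__":
    team, cost, slowdown = 100, 150_000, 0.19
    print(f"naive:      ${naive_payroll_loss(team, cost, slowdown):,.0f}")       # $2,850,000
    print(f"throughput: ${throughput_payroll_loss(team, cost, slowdown):,.0f}")  # about $2.39M
```

Which reading fits depends on whether you care about hours spent per task or about total output; the gap between them is about $450,000 for this hypothetical team.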

Trust Collapse: 80% Use AI, Only 29% Trust It

Stack Overflow’s 2025 Developer Survey, gathering responses from over 49,000 developers across 177 countries, revealed a paradox: AI tool usage has climbed to 80-84%, while trust in AI accuracy has plummeted to just 29%—down from 40% the previous year.

Positive favorability toward AI tools declined from 72% to 60% year-over-year. Meanwhile, 46% of developers actively distrust AI accuracy, up from 31% the previous year. Experienced developers are the most skeptical, with 20% reporting they “highly distrust” AI tools. Yet 84% are using or planning to use AI tools despite this widespread mistrust.

This isn’t cautious optimism. This is blind adoption driven by hype, corporate mandates, and fear of missing out—not by measured productivity gains. When nearly half of developers actively distrust the tools they’re required to use daily, something is fundamentally broken.

The “Almost Right” Trap: AI’s Biggest Problem

“AI solutions that are almost right, but not quite” ranks as developers’ number-one frustration, cited by 66% in the Stack Overflow survey. The second-biggest frustration, cited by 45%, is that “debugging AI-generated code is more time-consuming.” In other words, fixing nearly correct output can take longer than writing the code manually from scratch.

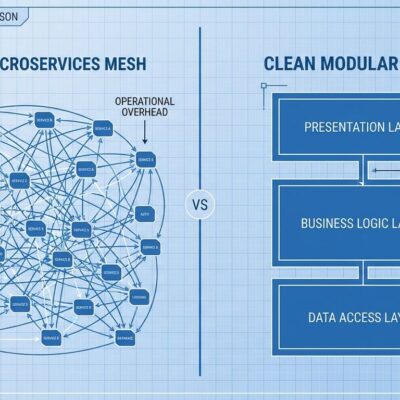

The problem is insidious. AI generates code that looks correct, passes initial review, and appears to follow established patterns, but contains subtle errors that surface in edge cases or production environments. Developers spend extended time debugging code they didn’t write, without the full context of how it was generated. This has been dubbed the “70% problem”: AI rapidly produces 70% of a solution, but the final 30% (edge cases, security considerations, production integration) remains as challenging as ever.

The net result is often slower than writing 100% of the code from scratch with full context. This helps explain why 75% of developers still turn to colleagues when they don’t trust an AI-generated answer. METR’s participants reported reviewing AI output line by line and often making major modifications to clean it up, and their acceptance rate of under 44% confirms the pattern: more than half of AI generations went unaccepted.

When AI Actually Works (And When It Fails)

AI coding tools aren’t universally bad; they’re highly context-dependent. In other studies, junior developers saw 21-40% productivity gains compared to 7-16% for seniors, likely because AI helps them learn syntax and recognize patterns. About 70% of Go developers use AI assistants primarily for boilerplate generation, documentation, comments, and unit tests, which is AI’s sweet spot. For developers working in unfamiliar codebases or learning new languages, AI can reduce the initial learning curve. On simple tasks under one hour, AI succeeds 70-80% of the time.

But for experienced developers working on familiar code, the exact scenario tested in the METR study, AI made them 19% slower. Experts already know the patterns and context, so AI suggestions become noise rather than signal. On complex, multi-hour tasks, AI success rates drop below 20%. Large, mature codebases with implicit context, long-standing conventions, and complex dependencies defeat AI’s understanding.

Security-critical code presents an even bigger problem. A Stanford study found that developers with access to an AI assistant were more likely to introduce security vulnerabilities than those coding manually. “Vibe coding” (generating full applications from prompts) can create security holes and technical debt that non-programmers can’t fix, and that debt can accumulate invisibly until it becomes a crisis.

The Forced Adoption Disaster

In August 2025, Coinbase CEO Brian Armstrong said he had asked engineers who hadn’t adopted AI coding tools to personally justify why; some who couldn’t were fired. This isn’t an isolated incident. A financial software company in India made a “concerted effort to force developers to use AI coding tools” while simultaneously downsizing development staff. Junior developers there reportedly don’t remember the syntax of the language they’re using because of overreliance on Cursor. One recently hired developer posted on Reddit: “I feel like it’s not helping me develop my own skills.”

The Register reported in November 2025 that “devs gripe about having AI shoved down their throats.” Even Microsoft has run into the problem in its own repositories: pull requests generated by GitHub Copilot ended up creating more work for the developers who had to review “suggested AI slop,” with reviewers reportedly spending more time on reviews than the generated code saved in initial development.

Forced adoption without measurement risks creating a generation of developers who can’t code without AI assistance. Junior developers who lean on it can forget language syntax, lose problem-solving skills, and struggle to debug without AI. Their security awareness can decline as they accept “almost right” code without understanding its flaws. Yet 72% of developers say vibe coding isn’t part of their professional work, revealing a clear disconnect between C-suite mandates and developer reality.

The Real Cost of the Productivity Lie

The advertised costs are deceptive. GitHub Copilot Business runs $19 per month per developer, Cursor costs $20 per month, and enterprise plans scale up to $39 per developer monthly. But one company’s case study reveals the truth: “Our initial budget was $50,000 annually for AI coding tools. After 18 months, we’re spending $180,000 annually when we include all related costs, training, and additional tool subscriptions.” That’s a 3.6x cost multiplier.

Implementation and governance—monitoring, enablement, internal tooling—can cost $50,000 to $250,000 annually. Training runs $50-$100 per developer. Hidden costs from technical debt and integration challenges add another 10-20% beyond initial budgets. Meanwhile, the 19% productivity loss for a 100-developer team costs $2.85 million per year in wasted time.
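The cost components listed above can be combined into a rough annual estimate. This Python sketch makes two assumptions of its own (not the case study’s method): training is paid once within the year being estimated, and the 10-20% hidden-cost overhead applies to the whole base budget. `ToolCostInputs` and `annual_tool_cost` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class ToolCostInputs:
    """Hypothetical inputs; ranges in comments come from the figures quoted above."""
    seats: int
    monthly_price: float      # per seat: $19 (Copilot Business) up to $39 (enterprise tiers)
    training_per_dev: float   # $50-$100, assumed to be paid once within the year
    governance: float         # monitoring, enablement, internal tooling: $50k-$250k per year
    hidden_overhead: float    # technical debt / integration: 0.10-0.20 beyond budget


def annual_tool_cost(c: ToolCostInputs) -> float:
    """Subscriptions + training + governance, inflated by the hidden-cost overhead."""
    subscriptions = c.seats * c.monthly_price * 12
    training = c.seats * c.training_per_dev
    base = subscriptions + training + c.governance
    return base * (1 + c.hidden_overhead)


if __name__ == "__main__":
    low = ToolCostInputs(seats=100, monthly_price=19, training_per_dev=50,
                         governance=50_000, hidden_overhead=0.10)
    high = ToolCostInputs(seats=100, monthly_price=39, training_per_dev=100,
                          governance=250_000, hidden_overhead=0.20)
    print(f"low:  ${annual_tool_cost(low):,.0f}")
    print(f"high: ${annual_tool_cost(high):,.0f}")
```

With these inputs the low case comes to about $85,600 and the high case to about $368,000 per year; in both, the subscription line is only a minority of the total.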

Companies are optimizing for the wrong metrics. Sanchit Vir Gogia, CEO of Greyhound Research, warns that “organizations risk mistaking developer satisfaction for developer productivity.” Developers may feel faster because AI reduces cognitive load, but that satisfaction doesn’t necessarily translate into faster shipping. Measurement should include downstream rework, code churn, and peer review cycles, not just time-to-code.
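Measuring downstream rework rather than time-to-code can start small. The Python sketch below compares AI-assisted and unassisted changes on two of the signals mentioned above: code churn (the share of added lines rewritten or deleted within some window) and review rounds per change. The `Change` record and its fields are hypothetical; in practice the rework counts would have to be derived from version-control history, which this sketch does not implement.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Change:
    """One merged change; all fields are hypothetical inputs from your own tooling."""
    ai_assisted: bool
    lines_added: int
    lines_reworked: int   # added lines later modified or deleted within the chosen window
    review_rounds: int    # review iterations before merge


def rework_summary(changes: list[Change], ai_assisted: bool) -> dict[str, float]:
    """Churn rate and mean review rounds for one group of changes."""
    group = [c for c in changes if c.ai_assisted == ai_assisted]
    if not group:
        raise ValueError("no changes in this group")
    added = sum(c.lines_added for c in group)
    reworked = sum(c.lines_reworked for c in group)
    return {
        "churn_rate": reworked / added if added else 0.0,
        "mean_review_rounds": mean(c.review_rounds for c in group),
    }


if __name__ == "__main__":
    # Toy data for illustration only, not measurements.
    changes = [
        Change(True, 200, 60, 3),
        Change(True, 100, 20, 2),
        Change(False, 150, 15, 1),
        Change(False, 50, 5, 2),
    ]
    print("AI-assisted:", rework_summary(changes, ai_assisted=True))
    print("Unassisted: ", rework_summary(changes, ai_assisted=False))
```

Pooling lines across changes (rather than averaging per-change ratios) keeps one large change from being drowned out by many tiny ones; either choice is defensible as long as it is applied the same way to both groups.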

What Developers Should Actually Do

Stop assuming AI equals productivity. Measure actual impact on task completion time, code quality, security vulnerabilities, and technical debt—not perceived benefit or developer satisfaction surveys. Use AI selectively for tasks where it demonstrably helps: boilerplate generation for juniors, documentation, navigating unfamiliar codebases. Avoid AI for complex architectural decisions, security-critical code, and work in familiar systems where expertise outperforms autocomplete.

Resist corporate mandates to use AI without measurement. When leadership demands AI adoption, demand they measure productivity impact first. Run controlled experiments like METR did: assign work randomly with and without AI, compare actual completion times and code quality. If the data shows slowdown, stop using AI for those tasks.
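A controlled experiment of this kind needs two pieces: random assignment of each task to an AI-allowed or AI-disallowed condition before work starts, and a comparison of completion times afterward. The Python sketch below shuffles tasks and splits them evenly, then compares geometric-mean completion times (a ratio above 1.0 means AI-allowed tasks took longer) with a simple bootstrap interval. It is a simplified stand-in, not METR’s actual analysis; the function names, seeds, and toy hours are illustrative.

```python
import math
import random
from statistics import mean


def assign_conditions(task_ids: list[str], seed: int = 0) -> dict[str, str]:
    """Shuffle tasks and split them in half: first half 'ai', the rest 'no_ai'."""
    rng = random.Random(seed)
    shuffled = list(task_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {task: ("ai" if i < half else "no_ai") for i, task in enumerate(shuffled)}


def split_by_condition(hours: dict[str, float], conditions: dict[str, str]):
    """Group recorded completion times by their assigned condition."""
    ai = [h for task, h in hours.items() if conditions[task] == "ai"]
    no_ai = [h for task, h in hours.items() if conditions[task] == "no_ai"]
    return ai, no_ai


def geo_mean_ratio(ai_hours: list[float], no_ai_hours: list[float]) -> float:
    """Geometric mean of AI-allowed times divided by that of AI-disallowed times."""
    if not ai_hours or not no_ai_hours:
        raise ValueError("both conditions need at least one completed task")
    log_diff = mean(math.log(h) for h in ai_hours) - mean(math.log(h) for h in no_ai_hours)
    return math.exp(log_diff)


def bootstrap_interval(ai_hours, no_ai_hours, n_resamples=2000, seed=1):
    """Approximate 95% interval for the ratio by resampling tasks with replacement."""
    rng = random.Random(seed)
    ratios = sorted(
        geo_mean_ratio(
            [rng.choice(ai_hours) for _ in ai_hours],
            [rng.choice(no_ai_hours) for _ in no_ai_hours],
        )
        for _ in range(n_resamples)
    )
    return ratios[int(0.025 * n_resamples)], ratios[int(0.975 * n_resamples) - 1]


if __name__ == "__main__":
    tasks = [f"issue-{n}" for n in range(10)]
    conditions = assign_conditions(tasks)
    # Toy completion times for illustration only: AI-allowed tasks take 2.4 h, others 2.0 h.
    hours = {t: (2.4 if conditions[t] == "ai" else 2.0) for t in tasks}
    ai, no_ai = split_by_condition(hours, conditions)
    print(f"time ratio: {geo_mean_ratio(ai, no_ai):.2f}")  # 1.20
    print("95% interval:", bootstrap_interval(ai, no_ai))
```

The even shuffle-and-split guarantees both conditions get tasks; a per-task coin flip is also valid randomization but can leave one arm nearly empty in small samples. Comparing on a log scale keeps a few very long tasks from dominating the result.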

For companies: stop firing developers who won’t blindly adopt AI. Start measuring whether AI actually delivers the productivity gains vendors promise. Budget for the real costs—3-4x the subscription price—and track downstream impacts on technical debt and security. Most importantly, recognize that AI is a tool for specific contexts, not a universal productivity multiplier.

Key Takeaways

- METR’s study found experienced developers were 19% slower with AI tools, yet believed they were 20% faster, a perception gap that could cost companies millions in wasted productivity

- Stack Overflow’s 2025 survey shows trust in AI accuracy collapsed from 40% to 29%, while usage climbed to 80%—developers use tools they don’t trust due to hype and corporate mandates

- The “almost right” problem is developers’ #1 frustration (cited by 66%), and 45% say debugging AI-generated code is more time-consuming, as subtle bugs in generated code create debugging nightmares

- AI works for juniors (21-40% gains), boilerplate, and unfamiliar code, but fails for experienced developers on complex tasks (success drops below 20% on multi-hour work)

- Companies forcing AI adoption—like Coinbase firing developers who won’t use it—are degrading skills, introducing security vulnerabilities, and wasting $2.85M+ annually per 100 developers on a productivity lie