Anthropic’s prompt caching feature can cut your AI API costs by up to 90% and latency by up to 85%. Developers building production chatbots are reporting dramatic savings; one company cut its monthly bill from $50,000 to $5,000. Document Q&A systems, code assistants, and customer support bots are seeing similar results. But there’s a catch: OpenAI doesn’t offer equivalent caching. That creates a dilemma. Are these cost savings worth potential vendor lock-in, or is this just another way to fragment the AI ecosystem?

The Cost Savings Are Real

Prompt caching lets you reuse previously processed context across API calls instead of paying full price for the same tokens every time. Anthropic recently enhanced the feature to cache up to 5 conversation turns. The economics are compelling: cache reads cost $0.30 per million tokens versus $3 per million for fresh processing, a 90% discount. Latency drops by up to 85% on cache hits.

Here’s what that looks like in practice. A customer support chatbot with a 20,000-token knowledge base costs $0.06 per conversation without caching (20,000 tokens at $3 per million). With caching, that drops to $0.006. At 10,000 conversations per day, you’re looking at $600 daily versus $60, saving $540 every single day. Over a 30-day month, that adds up to $16,200. These figures count only the knowledge-base input tokens and assume every conversation hits the cache.

Code analysis tools see even bigger wins. Cache a 100,000-token codebase and query it repeatedly. Each query costs $0.30 without caching but only $0.03 with cache hits. At 1,000 queries per day, that’s $270 saved daily. A developer on Hacker News put it bluntly: “Cut our inference costs by 87% overnight.”
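The arithmetic behind both examples can be reproduced in a few lines. The following is a minimal sketch that uses only the per-million-token prices quoted above. The function names are illustrative, and it assumes every request is a cache hit while ignoring output tokens and any cost of writing to the cache.

```python
# Prices quoted in this article, in dollars per million input tokens.
FRESH_PRICE_PER_MTOK = 3.00
CACHED_PRICE_PER_MTOK = 0.30


def cost_per_request(context_tokens: int, price_per_mtok: float) -> float:
    """Input cost in dollars for one request carrying `context_tokens` of context."""
    return context_tokens / 1_000_000 * price_per_mtok


def daily_savings(context_tokens: int, requests_per_day: int) -> float:
    """Dollars saved per day, assuming every request hits the cache (best case)."""
    fresh = cost_per_request(context_tokens, FRESH_PRICE_PER_MTOK)
    cached = cost_per_request(context_tokens, CACHED_PRICE_PER_MTOK)
    return (fresh - cached) * requests_per_day


if __name__ == "__main__":
    support_bot = daily_savings(context_tokens=20_000, requests_per_day=10_000)
    code_tool = daily_savings(context_tokens=100_000, requests_per_day=1_000)
    print(f"Support chatbot: ${support_bot:,.2f} saved per day")
    print(f"Code analysis:   ${code_tool:,.2f} saved per day")
```

Running it reproduces the $540 and $270 daily figures. Swapping in your own context size and request volume gives a quick upper bound on what caching could save.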

The Vendor Lock-In Problem

OpenAI doesn’t have prompt caching. Neither do Google Vertex AI or Azure OpenAI. This is an Anthropic-specific feature, and that matters. When you optimize your application for caching, you’re writing Anthropic-specific code. The cache_control syntax doesn’t port to other providers. If you build a production system that depends on 90% cost savings from caching, switching to OpenAI means rewriting your prompt architecture and paying up to 10x more for the input tokens you were caching.
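For reference, this is roughly what that provider-specific code looks like. The sketch below builds the keyword arguments for a Messages API call with a cache_control marker on the static system block. The helper name, model string, token limit, and knowledge-base text are placeholders chosen for illustration, and the network call itself is left as a comment so the snippet runs without an API key.

```python
from typing import Any


def build_cached_request(knowledge_base: str, question: str) -> dict[str, Any]:
    """Build Messages API arguments with the static context marked cacheable.

    Hypothetical helper: the model name and max_tokens are placeholders.
    """
    return {
        "model": "claude-3-5-sonnet-20241022",  # assumption: substitute your model
        "max_tokens": 512,
        "system": [
            {
                "type": "text",
                "text": knowledge_base,
                # Anthropic-specific: marks the end of the prefix to cache.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        # Only this part changes between requests, so it stays outside the cache.
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    request = build_cached_request(
        "...company knowledge base...", "How do I reset my password?"
    )
    print(request["system"][0]["cache_control"])
    # With the official SDK installed and ANTHROPIC_API_KEY set, the call would be:
    #   import anthropic
    #   response = anthropic.Anthropic().messages.create(**request)
```

The cache_control key is the part that does not port. Everything else in the request has a close equivalent elsewhere.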

This isn’t theoretical. One commenter on Hacker News called it “vendor lock-in disguised as innovation.” That’s harsh but not entirely wrong. We’ve seen this pattern before with cloud provider lock-in—AWS Lambda doesn’t run on Azure, proprietary database features don’t port to competitors, iOS apps don’t run on Android. Optimization comes with commitment.

The AI API landscape is fragmenting. Each provider develops proprietary features to differentiate. There’s no SQL-style standard for AI APIs. Developers face a choice: optimize for one provider and accept switching costs, or stay portable and leave performance on the table.

When Caching Actually Helps

Not every use case benefits from caching. It works best when you have stable, reusable context that appears in many requests. Customer support chatbots excel here—the company knowledge base, product documentation, and FAQs stay constant while only user questions change. Cache the static context once, reuse it thousands of times.

Document Q&A systems are another sweet spot. Upload a 50,000-token PDF and ask multiple questions. The first question pays full price to cache the document, but subsequent questions hit the cache. Legal document analysis, research paper exploration, and technical manual queries all benefit.

Code analysis tools can cache entire codebases. When reviewing pull requests or answering questions about a repository, the code context remains stable. Cache it once, query it repeatedly. Multi-turn conversations benefit from caching up to 5 turns of history—valuable for complex troubleshooting, tutoring, or brainstorming sessions.

Where caching doesn’t help: one-off queries with unique context each time, short prompts below the 1024-token minimum, rapidly changing context (the cache expires after 5 minutes of inactivity), or applications needing real-time data that can’t be cached.
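Those limits can be turned into a rough screening check. This is a sketch only: the function and parameter names are hypothetical, the two constants are the 1024-token minimum and 5-minute expiry mentioned above, and a real decision would also weigh request volume and cost.

```python
MIN_CACHEABLE_TOKENS = 1024  # minimum prompt length quoted above
CACHE_TTL_SECONDS = 5 * 60   # cache expires after 5 minutes of inactivity


def caching_likely_helps(
    static_context_tokens: int,
    seconds_between_requests: float,
    requests_sharing_context: int,
) -> bool:
    """Rough screen for whether a workload fits the caching sweet spot."""
    if static_context_tokens < MIN_CACHEABLE_TOKENS:
        return False  # prompt is too short to be cached at all
    if seconds_between_requests >= CACHE_TTL_SECONDS:
        return False  # cache would expire before the next request arrives
    if requests_sharing_context < 2:
        return False  # one-off context is written but never read back
    return True


if __name__ == "__main__":
    # Busy support bot: large shared knowledge base, requests every few seconds.
    print(caching_likely_helps(20_000, 5, 10_000))
    # One-off query with unique context.
    print(caching_likely_helps(20_000, 5, 1))
```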

Developers report cache hit rates varying from 60% to 95% depending on how well they architect their prompts for caching.
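Hit rate matters because the 90% discount applies only to requests that actually hit the cache. A minimal sketch of the blended price follows, using the two prices quoted earlier. The function names are illustrative, and the model ignores any extra cost of writing to the cache.

```python
FRESH_PRICE_PER_MTOK = 3.00   # dollars per million tokens, uncached
CACHED_PRICE_PER_MTOK = 0.30  # dollars per million tokens, cache read


def blended_price(hit_rate: float) -> float:
    """Average input price per million tokens at a given cache hit rate."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * CACHED_PRICE_PER_MTOK + (1 - hit_rate) * FRESH_PRICE_PER_MTOK


def savings_fraction(hit_rate: float) -> float:
    """Fraction of input cost saved relative to no caching."""
    return 1 - blended_price(hit_rate) / FRESH_PRICE_PER_MTOK


if __name__ == "__main__":
    for rate in (0.60, 0.95, 1.00):
        print(f"hit rate {rate:.0%}: saves {savings_fraction(rate):.1%}")
```

Under these assumptions a 60% hit rate saves about 54% of input cost and a 95% hit rate about 85.5%. The headline 90% is the ceiling, reached only when every request hits the cache.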

What Happens Next

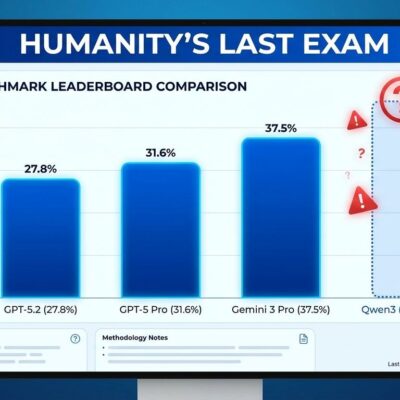

Anthropic has a competitive advantage right now. With prompt caching, they can be 33x cheaper than GPT-4 for cache-heavy workloads. That’s not a rounding error—it’s a different business model. OpenAI is facing pressure to respond.

Will competitors follow? Probably, but the timeline is unclear. Adding prompt caching requires infrastructure changes, not just API tweaks. Google has the resources. OpenAI definitely does. But “eventually” doesn’t help developers making architecture decisions today.

In the short term, expect Anthropic to gain market share in cost-sensitive applications. Chatbots, document analysis, and code assistants will lean toward Claude. OpenAI maintains advantages in model ecosystem breadth, consumer mindshare via ChatGPT, and tooling integrations.

Long-term, caching will likely become table stakes across providers. The question is whether it happens in 6 months or 2 years. Abstraction layers like LangChain are already adding caching support, which could make provider differences transparent to application code.

Use It Strategically

The cost savings are real. The vendor lock-in concerns are also real. Both statements are true simultaneously, and that means you need to make an informed tradeoff decision.

Use prompt caching if you’re building a high-volume, cost-sensitive production application where a 90% cost reduction justifies potential switching costs. If your monthly Anthropic bill is $10,000 and caching could cut it to $1,000, that $9,000 in monthly savings probably outweighs portability concerns. If your bill is $100, save yourself the complexity.

Stay provider-agnostic if you’re running low-volume applications where absolute costs are already manageable, working on exploratory projects where provider flexibility is valuable, or if strategic independence from any single vendor is a priority.

The middle ground: use abstraction layers that isolate provider-specific features. Architect for eventual portability even if you commit to Anthropic today. Don’t spread Anthropic-specific code throughout your application—contain it.
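One way to contain the provider-specific code is a small interface with one implementation per provider. The sketch below is an assumption about how such a layer might look, not a prescribed design. All class and function names are hypothetical, the model strings are placeholders, and the portable request shape is a generic system-plus-user layout, not any specific vendor’s schema.

```python
from typing import Any, Protocol


class PromptBuilder(Protocol):
    """Interface the rest of the application codes against (hypothetical)."""

    def build(self, static_context: str, question: str) -> dict[str, Any]: ...


class AnthropicPromptBuilder:
    """The only class that knows about Anthropic's cache_control marker."""

    def __init__(self, model: str) -> None:
        self.model = model

    def build(self, static_context: str, question: str) -> dict[str, Any]:
        return {
            "model": self.model,
            "max_tokens": 512,  # placeholder limit
            "system": [
                {
                    "type": "text",
                    "text": static_context,
                    "cache_control": {"type": "ephemeral"},
                }
            ],
            "messages": [{"role": "user", "content": question}],
        }


class PortablePromptBuilder:
    """Fallback without caching hints: plain system and user messages."""

    def __init__(self, model: str) -> None:
        self.model = model

    def build(self, static_context: str, question: str) -> dict[str, Any]:
        return {
            "model": self.model,
            "messages": [
                {"role": "system", "content": static_context},
                {"role": "user", "content": question},
            ],
        }


def prepare_support_request(builder: PromptBuilder, question: str) -> dict[str, Any]:
    """Application code: depends only on the PromptBuilder interface."""
    knowledge_base = "...company knowledge base..."  # placeholder text
    return builder.build(knowledge_base, question)


if __name__ == "__main__":
    for builder in (AnthropicPromptBuilder("model-a"), PortablePromptBuilder("model-b")):
        request = prepare_support_request(builder, "How do I reset my password?")
        print(type(builder).__name__, sorted(request))
```

With this layout, switching providers means writing one new builder class. The cost difference remains, but the rewrite stays confined to a single module.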

This is genuine innovation with real tradeoffs. It’s not a trap, and it’s not a silver bullet. The 90% cost reduction is objectively significant for the right use cases. The vendor lock-in is a legitimate architectural concern. Use caching where the economics make sense, but don’t architect yourself into a corner you can’t escape.