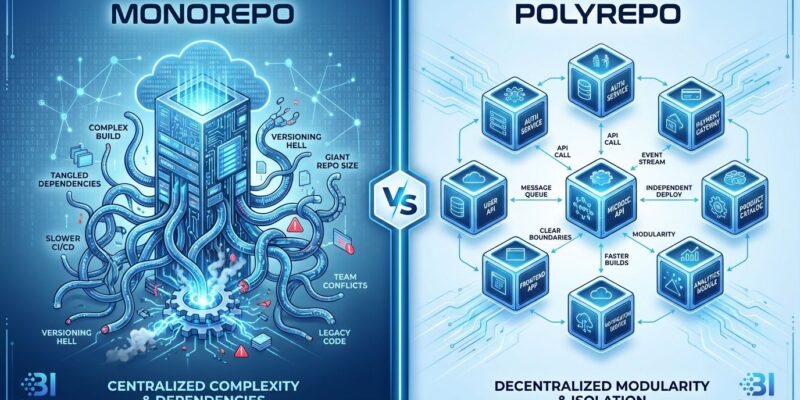

Monorepos have become the cargo-culted default in tech circles, with the vendors behind Turborepo and Nx selling them as “the enterprise way” to organize code. But here’s the uncomfortable truth: for most development teams, monorepos are technical debt disguised as architecture. They solve Google-scale coordination problems by creating build complexity, CI/CD nightmares, and cognitive overhead that most teams don’t need.

Google’s monorepo works because the company spent over 15 years building custom infrastructure (Piper, Blaze, which was later open-sourced as Bazel, and distributed caching), with dedicated teams maintaining these systems. Your 30-person team copying Google’s patterns without Google’s resources isn’t being pragmatic. You’re cargo culting.

You’re Not Google, Stop Copying Google

Google’s monorepo contains billions of lines of code and supports tens of thousands of engineers making 40,000+ commits daily. It works because they invested millions in custom tooling: Piper (custom version control), Blaze (build orchestration, released publicly as Bazel), and dedicated infrastructure teams maintaining these systems around the clock.

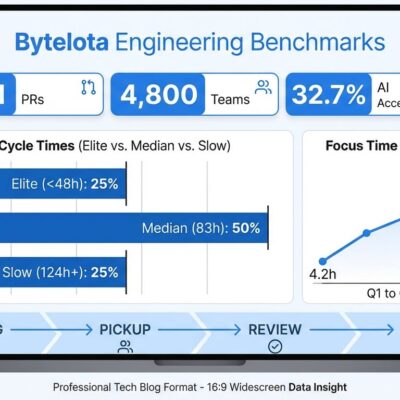

Most development teams have 10-50 engineers. They adopt monorepos thinking it will help them “scale,” but they’re solving problems they don’t have while creating new ones. The pattern on Hacker News and Reddit is consistent: FAANG engineers say “works great at Google,” while everyone else reports “terrible for our 30-person team.”

The disconnect is resources. Google has entire teams dedicated to its build system and developer infrastructure. Your team has one DevOps engineer who’s also managing AWS, CI/CD, and on-call rotations. Copying Google’s architecture without Google’s dedicated platform teams is setting yourself up for failure.

The Complexity Tax Nobody Mentions

Monorepos create massive hidden costs that vendor marketing conveniently glosses over. A mid-size SaaS company with 50 engineers migrated to a monorepo in 2022, expecting better code sharing and “atomic commits.” Reality hit hard: CI/CD times ballooned from 8 minutes to 35 minutes despite aggressive caching. Team velocity dropped 30%. Three engineers spent 40% of their time maintaining the build pipeline instead of shipping features. After 12 months of pain, they reverted to separate repositories.

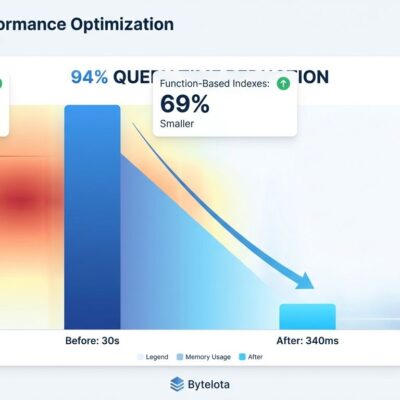

The math is brutal even with optimization. A 50-package monorepo can take 25 minutes for a clean build and 3 minutes for a cached build with one package changed. Compare that to building just the changed service in a separate repository: 45 seconds. The overhead exists even with “smart” caching because dependency graphs grow non-linearly and caching strategies are deliberately conservative: when in doubt they rebuild, rather than risk a false cache hit that serves a stale artifact.
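To make that arithmetic concrete, here is a minimal TypeScript sketch using the build times quoted above. The ten-builds-per-day figure is an assumption for illustration only, not a measurement from any team.

```typescript
// Build times quoted in the paragraph above, converted to seconds.
const CLEAN_MONOREPO_BUILD_S = 25 * 60; // clean build of the 50-package monorepo
const CACHED_MONOREPO_BUILD_S = 3 * 60; // cached build, one package changed
const SINGLE_REPO_BUILD_S = 45;         // same service built in its own repository

// Assumption (hypothetical): how often one engineer triggers a build per day.
const ASSUMED_BUILDS_PER_DAY = 10;

/** How many times slower the slow build is than the fast one. */
function slowdown(slowSeconds: number, fastSeconds: number): number {
  return slowSeconds / fastSeconds;
}

/** Extra minutes one engineer spends waiting per day. */
function extraMinutesPerDay(
  slowSeconds: number,
  fastSeconds: number,
  buildsPerDay: number
): number {
  return ((slowSeconds - fastSeconds) * buildsPerDay) / 60;
}

console.log(slowdown(CACHED_MONOREPO_BUILD_S, SINGLE_REPO_BUILD_S)); // 4
console.log(slowdown(CLEAN_MONOREPO_BUILD_S, SINGLE_REPO_BUILD_S).toFixed(1)); // "33.3"
console.log(
  extraMinutesPerDay(CACHED_MONOREPO_BUILD_S, SINGLE_REPO_BUILD_S, ASSUMED_BUILDS_PER_DAY)
); // 22.5
```

With these inputs, even the best-case cached build is 4x slower than the single-repo build, and at an assumed ten builds a day that adds up to 22.5 extra minutes of waiting per engineer.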

Cognitive overhead compounds the problem. Developers navigate 50+ directories wondering “which package am I in?” Lock file conflicts erupt on every pull request. npm install takes 4+ minutes with a 15 MB lock file. Developer machines need 32 GB of RAM just to keep the IDE responsive, compared to 16 GB for working with separate repos. These aren’t edge cases; they’re the standard monorepo experience once you scale beyond a handful of packages.

Turborepo Profits From Problems It Creates

The companies behind Turborepo and Nx have a clever business model: convince teams that monorepos are the “right way” to organize code, then sell tools to mitigate the problems monorepos create. Vercel acquired Turborepo in 2021 for strategic positioning in the build tooling space. The marketing promises “10x faster builds,” but compared to what? A naive monorepo, not separate repositories.

The free tier gets you hooked. Paid enterprise features include remote caching, analytics, and priority support. You’re not just adopting a tool; you’re entering a vendor lock-in loop. Adopt monorepo, hit complexity, buy tooling, need more tooling. Meanwhile, separate repositories would work with standard Docker and GitHub Actions workflows you already understand.

Ask yourself this: if these tools are so good at making monorepos fast, why do separate repositories still build faster with zero extra tooling? The answer reveals the trap. Turborepo is solving problems that monorepos create. The best monorepo tool is not having a monorepo.

The Three Cases Where Monorepos Make Sense

Fairness demands acknowledging legitimate use cases. Monorepos are appropriate for three narrow scenarios, and if you’re not in one of these, you’re probably making a mistake.

Component libraries like Material-UI, Radix UI, and Chakra UI benefit from monorepos because they ship 5-20 tightly coupled packages that users consume together. Synchronized releases are necessary. Changes genuinely do cross package boundaries constantly. This is the legitimate use case where monorepo complexity pays for itself.

At Google or Meta scale, with 1000+ engineers, coordination overhead outweighs technical overhead. When you have thousands of services and dedicated platform teams, the equation flips. But be honest: is your team anywhere near that scale?

Very small projects—fewer than 5 packages, fewer than 10 engineers—can work with monorepos before hitting the complexity threshold. Standard npm workspaces suffice without elaborate build orchestration. But understand this is a temporary state. Once you cross 10-15 packages, the overhead compounds fast.

Just Use Multiple Repos

For most teams, the better default is obvious: multiple repositories with clear boundaries. Each repo has one purpose. Changes rebuild only what matters. Standard Docker and CI/CD tools work out of the box. Teams move independently without waiting for monorepo builds to complete.
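To see why “rebuild only what matters” is harder inside a single repository, here is a rough sketch of how a change to one shared package fans out to everything that depends on it. The package names and the graph are hypothetical, and real tools compute this set in their own, more sophisticated ways.

```typescript
// Hypothetical package graph: each key lists the packages it depends on.
type DependencyGraph = Record<string, string[]>;

const exampleGraph: DependencyGraph = {
  "ui-kit": [],
  "api-client": [],
  "web-app": ["ui-kit", "api-client"],
  "admin-app": ["ui-kit"],
  "billing-service": ["api-client"],
};

/**
 * Returns the changed package plus every package that depends on it,
 * directly or transitively, sorted alphabetically.
 */
function affectedPackages(graph: DependencyGraph, changed: string): string[] {
  const affected = new Set<string>([changed]);
  let grew = true;
  // Keep sweeping the graph until no new dependents are discovered.
  while (grew) {
    grew = false;
    for (const pkg of Object.keys(graph)) {
      const deps = graph[pkg];
      if (!affected.has(pkg) && deps.some((dep) => affected.has(dep))) {
        affected.add(pkg);
        grew = true;
      }
    }
  }
  return Array.from(affected).sort();
}

console.log(affectedPackages(exampleGraph, "ui-kit"));
// [ 'admin-app', 'ui-kit', 'web-app' ]
console.log(affectedPackages(exampleGraph, "billing-service"));
// [ 'billing-service' ]
```

In this toy graph, touching the shared ui-kit package pulls two applications into the build, while a leaf service rebuilds alone. With separate repositories, each consumer pins a published version, so a change rebuilds only its own repo until consumers choose to upgrade.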

The clarity alone justifies it. One repo equals one purpose. New engineers understand the architecture in hours, not weeks. No confusion about “which package am I in?” No lock file conflicts spanning 50 dependencies. No elaborate caching strategies that break every third build.

When you need to share code, publish it as an npm package. Yes, this requires versioning and releases. That’s a feature, not a bug. It forces you to think about API stability and breaking changes. It creates natural boundaries that prevent the “change one file, rebuild everything” problem monorepos create.
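As a small illustration of how versioning makes breaking changes explicit, here is a sketch that classifies the bump between two versions of a shared package. It assumes plain MAJOR.MINOR.PATCH strings under Semantic Versioning, where a major bump signals a breaking change. The function names are hypothetical, and it does not handle pre-release tags or the looser rules for 0.x versions.

```typescript
type BumpKind = "major" | "minor" | "patch" | "none";

/** Parses a plain MAJOR.MINOR.PATCH string; pre-release tags are not supported. */
function parseVersion(version: string): [number, number, number] {
  const match = /^(\d+)\.(\d+)\.(\d+)$/.exec(version);
  if (match === null) {
    throw new Error(`Unsupported version string: ${version}`);
  }
  return [Number(match[1]), Number(match[2]), Number(match[3])];
}

/**
 * Reports the most significant component that differs between two versions.
 * Simplification: downgrades are classified the same way as upgrades.
 */
function classifyBump(from: string, to: string): BumpKind {
  const [fromMajor, fromMinor, fromPatch] = parseVersion(from);
  const [toMajor, toMinor, toPatch] = parseVersion(to);
  if (toMajor !== fromMajor) return "major";
  if (toMinor !== fromMinor) return "minor";
  if (toPatch !== fromPatch) return "patch";
  return "none";
}

/** A consumer can gate upgrades on whether the bump is declared breaking. */
function needsMigrationReview(from: string, to: string): boolean {
  return classifyBump(from, to) === "major";
}

console.log(classifyBump("1.4.2", "1.5.0")); // minor
console.log(classifyBump("1.4.2", "2.0.0")); // major
console.log(needsMigrationReview("1.4.2", "2.0.0")); // true
```

The point of the sketch is the boundary it models: a consuming repo upgrades on its own schedule and can treat a major bump as a signal to review the changelog before adopting it.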

Key Takeaways

- Monorepos solve Google-scale coordination problems by creating technical complexity most teams don’t need—you’re not Google, and copying their patterns without their infrastructure is cargo culting

- Hidden costs are massive: CI/CD times balloon even with caching, cognitive overhead grows non-linearly, and teams waste months maintaining build pipelines instead of shipping features

- Turborepo and Nx profit from convincing you monorepos are necessary, then charging to fix the problems they create—separate repos work faster with zero extra tooling

- Legitimate use cases exist but are narrow: component libraries (Material-UI, Radix), extreme scale (1000+ engineers with platform teams), or very small projects (fewer than 5 packages before hitting complexity)

- The better default for most teams: multiple repositories with clear boundaries, standard tooling, independent deployments, and npm packages for code sharing when actually needed

Start simple. Add complexity only when you have Google’s scale and Google’s resources to manage it. Until then, the best monorepo strategy is not having one.