Your AI code reviewer just approved the code that will cause next month’s security incident. It flagged 23 style violations, suggested renaming three variables for clarity, and completely missed the authorization logic bug that will expose customer data. This isn’t a hypothetical scenario—it’s the reality of AI-powered code review tools that promise comprehensive security analysis but deliver security theater instead.

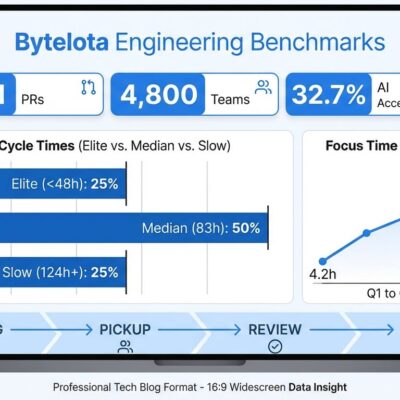

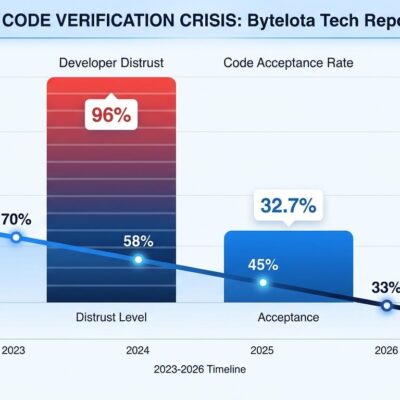

According to academic research testing five major AI code review platforms, these tools generate false positives at a 54% rate, and only 12% of AI-flagged issues turn out to be actual bugs. The remaining 88% are style suggestions, minor optimizations, or outright incorrect analysis, and the style and formatting items among them are problems linters and formatters already solve. Meanwhile, the logic flaws and architectural vulnerabilities that cause real security breaches sail through undetected.

What Security Theater Actually Means

Security researcher Bruce Schneier coined the term “security theater” to describe security measures that make people feel safer without actually improving security. The TSA making you remove your shoes at airports is the classic example—visible action that provides minimal actual protection. Security theater is the appearance of security, not the substance.

AI code review fits this definition perfectly. Teams implement automated scanning that generates impressive-looking reports filled with flagged issues. Dashboards show thousands of lines analyzed and hundreds of suggestions made. Everyone feels like security is being taken seriously. But when you examine what’s actually being caught—and more importantly, what’s being missed—the theater becomes obvious.

The test Schneier proposes is simple: does this measure actually reduce risk, or does it just reduce anxiety? AI code review fails this test. Teams feel safer because “the AI reviewed it,” but the data shows they’re shipping vulnerabilities at the same rate as before. That’s theater.

The False Positive Problem Destroys Value

Even if AI code review could catch real issues, the false positive problem makes it nearly unusable. Developers report spending 2-6 hours per week triaging AI suggestions, with 70-80% of that time wasted on false positives. For a five-person team, that’s $50,000 per year in developer time spent arguing with an algorithm about whether code is actually correct.
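The $50,000 figure is easy to sanity-check with back-of-envelope arithmetic. The sketch below plugs in the ranges quoted above. The 48 working weeks and the fully loaded rate of $70 per developer-hour are assumptions chosen for illustration, not numbers from the underlying reports.

```python
def annual_false_positive_cost(
    team_size: int,
    triage_hours_per_week: float,
    false_positive_share: float,
    working_weeks: int = 48,     # assumption: working weeks per year
    hourly_rate: float = 70.0,   # assumption: fully loaded cost per developer-hour, USD
) -> float:
    """Yearly cost, in USD, of developer time spent triaging false positives."""
    wasted_hours_per_dev = triage_hours_per_week * false_positive_share * working_weeks
    return team_size * wasted_hours_per_dev * hourly_rate


# Five-person team at the low end, midpoint, and high end of the quoted ranges.
low = annual_false_positive_cost(5, 2, 0.70)
mid = annual_false_positive_cost(5, 4, 0.75)
high = annual_false_positive_cost(5, 6, 0.80)
print(f"low ${low:,.0f}  mid ${mid:,.0f}  high ${high:,.0f}")
# low $23,520  mid $50,400  high $80,640
```

At the midpoint the estimate lands on about $50,000. The low and high ends show how sensitive the figure is to the inputs, especially the assumed hourly rate.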

The pattern is consistent across teams: initial enthusiasm about AI-powered security, followed by frustration as false positive noise accumulates, leading to teams ignoring or disabling the tools entirely. One Reddit developer described it perfectly: “We adopted CodeGuru six months ago. First week: 47 suggestions per PR. Now: CodeGuru is running but nobody looks at it. We’re paying $500/month for theater.”

This creates a dangerous “crying wolf” effect. When a tool generates too many false alarms, people stop paying attention to any alarms. The few real issues AI might catch get ignored along with the noise. That’s worse than having no automated review at all—it’s actively undermining security by training developers to ignore warnings.

AI Catches the Wrong Things

Understanding what AI code review can and cannot do reveals why it’s fundamentally mismatched to the problem. AI-powered tools excel at pattern matching: finding known CVE signatures, detecting simple syntax errors, flagging style violations, and catching obvious code smells like unused variables. The problem? These are all issues that simpler tools already handle better. Linters catch style violations with near-zero false positives. Dependency scanners find outdated packages. Static analysis tools flag known vulnerability patterns.

What AI code review cannot do is understand business logic, evaluate architectural decisions, analyze context-dependent security, or answer the most important code review question: “Is this the right approach to solve this problem?” According to OWASP research, 70-80% of real-world vulnerabilities are logic and design flaws—exactly the category AI can’t analyze. AI is optimizing for the 20-30% of issues already solved by simpler tools while missing the critical problems that cause actual breaches.
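To make the distinction concrete, here is a sketch of a hypothetical authorization flaw of the kind often called an insecure direct object reference (IDOR). The `Invoice` type, the in-memory store, and both function names are invented for illustration. Both versions are typed, consistently named, and would pass a linter. The only difference is one business rule: an account may read only its own invoices. Noticing that the rule is missing requires knowing the rule exists.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    account_id: int  # the account that owns this invoice
    amount_cents: int


# Hypothetical in-memory store standing in for a database table.
INVOICES = {
    1: Invoice(invoice_id=1, account_id=100, amount_cents=4_999),
    2: Invoice(invoice_id=2, account_id=200, amount_cents=12_000),
}


class Forbidden(Exception):
    """Raised when the caller may not see the requested invoice."""


def get_invoice_buggy(caller_account_id: Optional[int], invoice_id: int) -> Invoice:
    # Checks authentication only: any logged-in caller can read any invoice
    # just by changing invoice_id. Nothing here is a style or syntax problem.
    if caller_account_id is None:
        raise Forbidden("not authenticated")
    return INVOICES[invoice_id]


def get_invoice_fixed(caller_account_id: Optional[int], invoice_id: int) -> Invoice:
    if caller_account_id is None:
        raise Forbidden("not authenticated")
    invoice = INVOICES[invoice_id]
    # The business rule that was missing: callers may only read their own invoices.
    if invoice.account_id != caller_account_id:
        raise Forbidden("invoice belongs to another account")
    return invoice


# Account 100 requests account 200's invoice.
print(get_invoice_buggy(100, 2).account_id)  # 200: another customer's data leaks
try:
    get_invoice_fixed(100, 2)
except Forbidden as err:
    print(f"blocked: {err}")  # blocked: invoice belongs to another account
```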

Real Breaches Prove the Point

Theory aside, look at what actually causes security incidents. The 2019 Capital One breach exposed personal data belonging to more than 100 million people. The company had automated security scanning in place. The breach occurred because a misconfigured web application firewall let an attacker use server-side request forgery to obtain credentials for a cloud access role, and that role could read far more stored data than it needed to. Spotting the risk required understanding the AWS architecture and how intended authorization differed from actual authorization. No amount of pattern matching would have caught it. AI code review would have been useless.

The 2020 SolarWinds supply chain attack pushed a backdoored software update to thousands of organizations. Sophisticated attackers inserted malicious code designed to blend in with legitimate code, so from a pattern-matching perspective it looked entirely normal. Detecting the attack required understanding what the code should be doing based on business context, not analyzing syntax patterns. AI code review wouldn't have helped.

The 2021 Log4Shell vulnerability affected millions of systems worldwide. It wasn’t an implementation bug that pattern matching could catch—it was an architectural design flaw requiring deep understanding of JNDI behavior and attack vectors. AI code review couldn’t have flagged it because the code itself was “correct.” The problem was what that correct code enabled attackers to do.
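A toy Python analogy shows how every line can be "correct" while the design is dangerous. This is not Log4j's code: `toy_log` is a hypothetical logger loosely modeled on Log4j 2's message lookup feature, in which `${prefix:key}` expressions inside a logged message were expanded, including text that came from untrusted input. Real Log4j also supported `jndi:` lookups, which is what enabled remote code loading. This sketch stops at environment variables.

```python
import os
import re

# Matches ${prefix:key} expressions, e.g. ${env:HOME}.
_LOOKUP = re.compile(r"\$\{(\w+):([^}]*)\}")


def _resolve(match: re.Match) -> str:
    prefix, key = match.group(1), match.group(2)
    if prefix == "env":
        return os.environ.get(key, "")
    return match.group(0)  # leave unknown lookups untouched


def toy_log(template: str, *args: str) -> str:
    """Hypothetical logger: interpolate arguments, then expand lookups in the result."""
    message = template.format(*args)       # caller-supplied data enters here...
    return _LOOKUP.sub(_resolve, message)  # ...and is then treated as lookup syntax


os.environ["DB_PASSWORD"] = "hunter2"       # stand-in secret for the demo
attacker_controlled = "${env:DB_PASSWORD}"  # e.g. a username or User-Agent header
print(toy_log("Login failed for user {}", attacker_controlled))
# Login failed for user hunter2
```

Each function does exactly what its name says. The vulnerability is the decision to interpret logged data as lookup syntax at all, which is a design question rather than a line-level defect.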

This isn’t cherry-picking. Security expert Troy Hunt analyzed hundreds of major breaches and concluded that virtually none would have been prevented by automated code review tools. Real vulnerabilities require understanding context, architecture, and intent—all capabilities beyond current AI.

What Real Code Review Actually Does

Google’s engineering practices documentation outlines what code review should evaluate: design approach, functionality against requirements, unnecessary complexity, appropriate testing, and architectural fit. Style, which is AI’s primary focus, appears well down that list, after design, functionality, complexity, and tests. Code review is fundamentally about human judgment on whether this is the right solution, not pattern matching on whether the syntax is clean.

Martin Fowler emphasizes that code review’s purpose is to transfer knowledge across the team, ensure multiple people understand each change, catch design issues before they become technical debt, and discuss trade-offs and alternatives. These are conversations, not automated checks. You can’t automate asking “Have you considered a different approach?” or “Does this match our long-term architecture vision?”

The disconnect is stark: AI handles the mechanical verification that doesn’t require human judgment, while claiming to replace the judgment-based analysis that actually prevents serious problems. That’s like automating spell-checking and claiming to have replaced editors.

When AI Code Review Actually Helps

To be fair, AI code review has legitimate but limited uses. It can catch some known CVE patterns, though dedicated SAST tools do this better with fewer false positives. It can serve as a learning aid for junior developers by suggesting best practices, though the false positive rate makes this risky—teaching developers to ignore warnings isn’t good education. It can flag simple mechanical issues if you’re willing to invest significant time tuning sensitivity to reduce noise.

The key word is “limited.” AI code review is marginally useful for a narrow set of already-solved problems, not the comprehensive security solution vendors market. It should never be a primary security control. It should never block code without human verification. And it definitely shouldn’t replace actual code review focused on design and logic.
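One way to encode "advisory, never blocking without human verification" in a CI gate is sketched below. The `Finding` record, the source labels, and the 0.8 confidence cutoff are hypothetical; real tools report confidence differently, if at all. Under this policy, findings from deterministic tools such as linters can fail the build. AI findings below the cutoff are dropped as noise. AI findings above the cutoff are shown only as advisory comments unless a human reviewer has confirmed them.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Source(Enum):
    LINTER = "linter"        # deterministic rules, very few false positives
    AI_REVIEW = "ai_review"  # probabilistic suggestions


@dataclass
class Finding:
    source: Source
    message: str
    confidence: float = 1.0        # hypothetical 0.0-1.0 score reported by the tool
    human_confirmed: bool = False  # set when a reviewer agrees the issue is real


def triage(
    findings: List[Finding], min_ai_confidence: float = 0.8
) -> Tuple[List[Finding], List[Finding]]:
    """Split findings into (blocking, advisory) under the policy described above."""
    blocking: List[Finding] = []
    advisory: List[Finding] = []
    for f in findings:
        if f.source is not Source.AI_REVIEW or f.human_confirmed:
            blocking.append(f)  # deterministic or human-verified: may fail the build
        elif f.confidence >= min_ai_confidence:
            advisory.append(f)  # surfaced as a comment, never blocks
        # Unconfirmed, low-confidence AI findings are dropped to limit noise.
    return blocking, advisory


findings = [
    Finding(Source.LINTER, "unused import"),
    Finding(Source.AI_REVIEW, "possible missing ownership check", confidence=0.9),
    Finding(Source.AI_REVIEW, "consider renaming variable", confidence=0.4),
    Finding(Source.AI_REVIEW, "SQL built by string concatenation",
            confidence=0.6, human_confirmed=True),
]
blocking, advisory = triage(findings)
print(len(blocking), len(advisory))  # 2 1
```

Raising or lowering `min_ai_confidence` is the "tuning sensitivity" knob: a higher cutoff means less noise but more missed suggestions.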

The Better Approach

The solution isn’t abandoning automation; it’s using the right tool for each job. Linters handle style and formatting with near-perfect accuracy and very few false positives. Static analysis tools built for security (not marketed as AI) catch known vulnerability patterns effectively. Dependency scanners identify outdated libraries. These tools work well because they solve focused problems with clear answers.
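What a "focused problem with a clear answer" looks like can be shown with a toy rule built on Python's standard `ast` module. The function name is hypothetical, and the rule is far narrower than a real security linter. It does illustrate the property that makes such tools trustworthy: given the same code, it always gives the same answer, and every match can be checked by eye in seconds.

```python
from __future__ import annotations

import ast


def find_eval_calls(source: str) -> list[int]:
    """Return sorted line numbers of calls written as eval(...)."""
    tree = ast.parse(source)  # parses only; the code is never executed
    return sorted(
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id == "eval"
    )


sample = "x = eval(user_input)\ny = len(x)\nprint(eval('1 + 1'))\n"
print(find_eval_calls(sample))  # [1, 3]
```

The rule is deliberately narrow. It misses aliases such as `e = eval` and says nothing about whether the evaluated string is attacker-controlled. That is the trade-off: predictable output, with no claim to understand intent.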

Save human code review for what it’s actually good at: evaluating design decisions, validating business logic, discussing architectural trade-offs, ensuring the code solves the right problem in a maintainable way. This is where real value comes from, and it’s exactly what AI cannot do.

For actual security, invest in threat modeling during design, security-focused architecture review before implementation, and penetration testing after deployment. These practices find the logic and design flaws that cause real breaches. They require human expertise and business context—precisely what distinguishes effective security from security theater.

Stop Buying Theater

The AI code review market is booming because it sells the appearance of comprehensive security without requiring the hard work of actual security. It’s easier to buy a tool that promises to “AI-power your security” than to invest in threat modeling, security training, and design review. It’s more comfortable to point at automation dashboards than to admit your team doesn’t have the expertise to evaluate security architecture.

But comfort isn’t security. If your security strategy relies on AI code review as a primary control, you have security theater, not security. The false positives waste developer time. The false negatives ship vulnerabilities. The psychological safety is false. The breaches will be real.

Demand better from vendors. Demand honest marketing about what these tools can and cannot do. Demand acknowledgment that pattern matching isn’t the same as understanding. Demand tools positioned as narrow assistants, not comprehensive solutions. And build your security strategy on what actually prevents breaches: good design, human expertise, real testing, and honest assessment of risk.

Your codebase deserves better than theater.