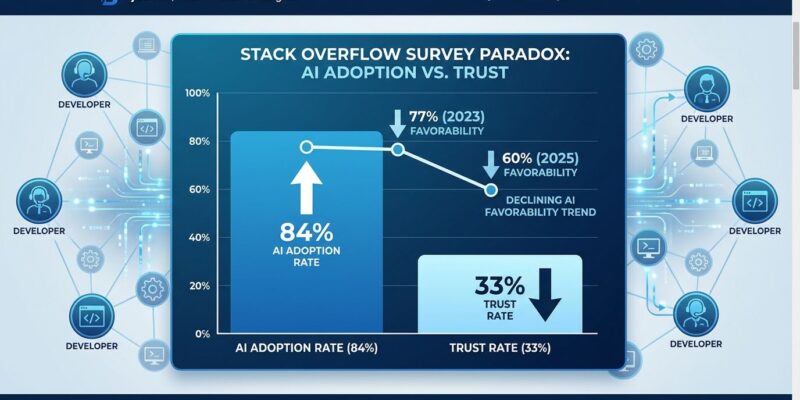

The Stack Overflow 2025 Developer Survey, released in late July 2025, reveals a troubling paradox: 84% of developers now use or plan to use AI coding tools (up from 76% in 2024), yet trust in those tools has fallen to an all-time low. Only 33% of developers trust the accuracy of AI output, down from 43% last year, while 46% actively distrust it. Drawing on 49,009 responses from 166 countries, the survey exposes a 51-percentage-point gap between adoption (84%) and trust (33%). Developers are relying on tools they fundamentally don’t believe in.

This isn’t just sentiment; it’s a sign of market dysfunction. When nearly half of developers distrust tools they are increasingly expected to use every day, the result is a trust crisis that could reshape the AI coding market. The data challenges the “10x productivity” narrative promoted by AI vendors and offers a clearer view of what is actually happening in developer workflows.

The Trust Crisis in Numbers

While AI adoption rose from 76% to 84%, trust moved in the opposite direction. Trust in AI accuracy fell from 43% to 33%, leaving a 51-point gap between usage (84%) and trust (33%). More developers actively distrust AI tools (46%) than trust them (33%), and only 3% say they “highly trust” AI output, a vanishingly small share.

The decline in sentiment is accelerating. AI favorability fell from 77% in 2023 to 72% in 2024 (a 5-point drop), then to 60% in 2025 (a 12-point drop), so the pace of erosion more than doubled in a single year. Stack Overflow CEO Prashanth Chandrasekar singled out “the growing lack of trust in AI tools” as the survey’s key finding, noting that “AI is a powerful tool, but it has significant risks of misinformation or can lack complexity or relevance.”

Experienced developers are the most skeptical group. They have the lowest “highly trust” rate (2.6%, versus 3% overall) and the highest “highly distrust” rate (20%, versus 15% overall). Professional developers as a whole are more favorable toward AI (61%) than learners (53%), but within the professional ranks, the most experienced are the most cautious. That looks less like resistance to change and more like pattern recognition from developers who know what quality code looks like.

The “Almost Right” Productivity Tax

The survey’s top developer frustration tells the story: 66% cite “AI solutions that are almost right, but not quite” as their biggest complaint. The second-biggest frustration follows from it: 45% say debugging AI-generated code takes more time than expected. These numbers cut against the “10x productivity” narrative; instead of saving time, many developers report spending extra effort fixing subtle bugs in AI-generated code.

The “almost right” problem is particularly insidious because the code looks correct at first glance, so subtle bugs can slip past initial review and surface later in production. When they don’t trust an AI answer, 75% of developers would rather ask another person than rely on the AI. That is a trust gap that can show up as production incidents.


If reviewing and debugging AI-generated code takes longer than writing it from scratch, the net productivity gain is zero or negative. VentureBeat dubbed this the “hidden productivity tax” of AI tools. It undercuts the ROI case for AI coding assistants and may help explain why adoption (84%) so far exceeds trust (33%): market pressure, not confidence, appears to be driving usage.

Experience Breeds Skepticism

The survey reveals an uncomfortable truth: the more coding experience developers have, the less they trust AI tools. Experienced developers have the lowest “highly trust” rate (2.6%) and the highest “highly distrust” rate (20%). This upends the assumption that AI skepticism is a generational issue.

Junior developers are probably not more AI-optimistic because they are digital natives; a likelier explanation is that they have not yet developed a strong sense of what quality code looks like. Professional developers are also more deliberate about which tools they use: 45% use Claude Sonnet models versus only 30% of learners, suggesting that experience breeds selectiveness. As junior developers gain experience, their trust will likely erode too.

Senior engineers’ skepticism suggests AI tools have a fundamental quality problem, not a perception problem. The people who review code for a living are the least impressed by AI-generated output. That’s a signal the industry can’t ignore.


Beyond AI: Docker and Python Dominate

While AI trust craters, two technologies saw explosive growth. Docker usage jumped 17 percentage points to 71%, the largest single-year increase of any technology in the survey. Python accelerated its decade-long climb with a 7-point gain to 57.9% adoption. Both are moving toward “universal knowledge” status, signaling major ecosystem shifts driven by containerization and AI workloads.

Docker’s record growth reflects the need for reproducible, containerized environments, which are critical for AI model deployment and microservices architectures. At 71% usage, Docker is shifting from “nice to have” to “table stakes” knowledge. Within two to three years, it could cross the 80%+ threshold of near-universal adoption, where job listings simply assume competency.

Python’s acceleration appears to be largely AI-driven. The language dominates machine learning (TensorFlow, PyTorch) and data science (pandas, NumPy) and is a common choice for backend development (FastAPI). Its 7-point jump, well above the typical 2 to 3 points per year, reflects how AI is reshaping the development stack. Ironically, AI skepticism coexists with AI-driven language growth: developers distrust AI code generation but embrace the languages and tools that AI workloads demand.

Key Takeaways

- Adopt AI tools, but validate everything: 84% adoption means you can’t ignore AI in 2025, but 46% distrust means code review is mandatory. Use AI for boilerplate and low-risk tasks, human judgment for critical logic.

- The “almost right” problem is real: 66% of developers name “almost right, but not quite” AI solutions as their top frustration, and 45% say debugging AI-generated code takes longer than expected. If your team uses AI without accounting for validation overhead, expect smaller gains than promised, or even productivity declines.

- Experience matters more than hype: Senior engineers trust AI least (2.6% “highly trust”). The people who know code best are most skeptical. That’s a quality signal, not a perception issue.

- Docker and Python are table stakes: 71% Docker usage and 57.9% Python adoption mean these are now baseline expectations, not resume bonuses. Late adopters risk falling behind on DevOps and AI-adjacent roles.

- Trust erosion signals market correction: AI favorability dropped from 77% to 60% in two years, with the decline accelerating. We’re entering a reality-check phase where AI tools must deliver on productivity promises or face a backlash.

The gap between AI adoption and AI trust has never been wider. Developers are caught between market expectations (AI everywhere) and reality (AI unreliable). The Stack Overflow 2025 survey quantifies this tension with hard data, and the aggregate message from 49,009 developers across 166 countries is clear: we use it, but we don’t trust it. That’s not sustainable. Either AI tools improve substantially, or the 84% adoption rate could start to decline as trust continues to erode.