AWS solved the cold start problem technically. Cold starts dropped 80% through SnapStart, warm pools, and runtime optimizations in 2025. VPC overhead fell from 10+ seconds to under 100 milliseconds. Java functions that took 2 seconds to initialize now start in 200 milliseconds with SnapStart. By every technical measure, serverless got faster and more reliable.

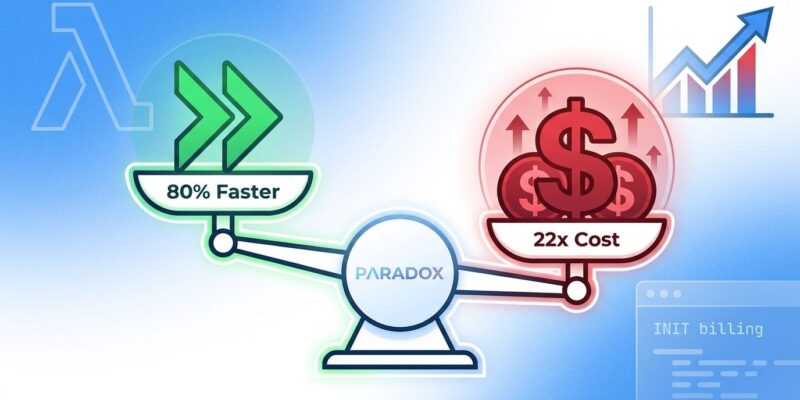

Then AWS started billing for the Lambda INIT phase in August 2025, turning cold starts from a latency problem into a budget problem. Before the change, cold starts cost roughly $0.80 per million invocations. After? $17.80 per million—a 22x increase. The paradox: serverless got faster but more expensive, and organizations now spend $500 monthly solving $50 problems with provisioned concurrency.

The August 2025 Billing Shockwave

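Until August 1, 2025, AWS billed only the INVOKE phase for on-demand functions running on managed runtimes with ZIP-packaged code. For those functions, the INIT phase was free. That phase covers downloading code, starting the runtime, initializing dependencies, and running initialization code. Functions on custom runtimes or container images were already billed for it. For everyone else, cold starts were purely a latency issue affecting user experience, not costs. That calculus changed overnight.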

Now every cold start is billed in GB-seconds for both the INIT and INVOKE phases. The cost impact varies dramatically by runtime and initialization complexity. Take a 512 MB function in us-east-1 at the x86 rate of $0.0000166667 per GB-second. Python 3.12 adds $2.08 per million cold starts, Node.js 20 adds $2.50, and Java 17 without SnapStart adds $16.67. Java and C# applications with heavy startup logic saw Lambda costs increase 10-50%.
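Each per-million figure comes from one multiplication: memory in GB × INIT seconds × price per GB-second. The sketch below reproduces the numbers under stated assumptions. It uses the us-east-1 x86 on-demand rate of $0.0000166667 per GB-second. It also uses INIT durations of 250 ms (Python), 300 ms (Node.js), and 2,000 ms (Java), which are back-calculated from the dollar amounts rather than measured. The dictionary keys are illustrative labels.

```python
# Assumptions: us-east-1, x86, on-demand duration rate of $0.0000166667 per GB-second.
# INIT durations are back-calculated from the article's dollar figures, not measured.
PRICE_PER_GB_SECOND = 0.0000166667

def init_cost_per_million(memory_mb: int, init_ms: float) -> float:
    """Dollar cost of the billed INIT phase across one million cold starts."""
    gb_seconds = (memory_mb / 1024) * (init_ms / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND * 1_000_000

ASSUMED_INIT_MS = {
    "python3.12": 250,
    "nodejs20.x": 300,
    "java17 (no SnapStart)": 2000,
}

if __name__ == "__main__":
    for runtime, init_ms in ASSUMED_INIT_MS.items():
        cost = init_cost_per_million(512, init_ms)
        print(f"{runtime:<24} ${cost:.2f} per million cold starts")
```

You can substitute a measured INIT duration from your own logs to get a function-specific figure. Arm64 functions bill at a lower per-GB-second rate, so these numbers overstate their cost.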

A high-volume Java API handling 10 million invocations monthly with a 5% cold start rate (500,000 cold starts) now pays about $8.33 per month for INIT billing alone. That is 51% of the total Lambda bill. The same traffic pattern for a Python API costs about $1.04 in INIT billing, just 12% of the bill. INIT billing starts to matter at around $50 of monthly Lambda spend, exactly where thousands of production applications operate.
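The Java and Python figures can be checked the same way. This sketch assumes 512 MB of memory and the x86 rate above. It also assumes the back-calculated INIT durations of 2,000 ms for Java and 250 ms for Python. The function name is illustrative, and request and INVOKE charges are deliberately left out.

```python
# Assumptions: 512 MB memory, us-east-1 x86 rate, INIT durations back-calculated
# from the per-million figures (2,000 ms Java, 250 ms Python).
PRICE_PER_GB_SECOND = 0.0000166667

def monthly_init_cost(invocations: int, cold_start_rate: float,
                      memory_mb: int, init_ms: float) -> float:
    """Estimated monthly charge for INIT phases only (no request or INVOKE cost)."""
    cold_starts = invocations * cold_start_rate
    gb_seconds_per_cold_start = (memory_mb / 1024) * (init_ms / 1000)
    return cold_starts * gb_seconds_per_cold_start * PRICE_PER_GB_SECOND

java = monthly_init_cost(10_000_000, 0.05, 512, 2000)
python = monthly_init_cost(10_000_000, 0.05, 512, 250)
print(f"Java INIT:   ${java:.2f}/month")    # $8.33
print(f"Python INIT: ${python:.2f}/month")  # $1.04
```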

The $19,000 Provisioned Concurrency Trap

Teams enable provisioned concurrency everywhere by default to eliminate cold starts, then discover they’re spending $500 monthly to solve what was effectively a $50 problem. The math is brutal.

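Provisioned concurrency keeps Lambda functions perpetually warm, eliminating cold starts entirely and delivering double-digit millisecond response times. It costs $0.0000041667 per GB-second with no free tier. The charge applies to every second the environments are configured, whether or not they serve traffic. Consider a 512 MB function with 10 provisioned environments running 730 hours monthly. It accrues 13.14 million GB-seconds (0.5 GB × 10 × 2,628,000 seconds). That comes to about $54.75 for provisioned concurrency alone, before request and duration charges for the invocations it serves.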
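The sketch below reproduces the provisioned concurrency charge from memory, environment count, and hours. It assumes the us-east-1 x86 rate quoted above. The charge accrues per second whether or not the environments serve traffic, so a 730-hour month bills 2,628,000 seconds per environment.

```python
# Assumptions: us-east-1 x86 provisioned concurrency rate; 730-hour month.
# Request charges and duration charges for served invocations are excluded.
PC_PRICE_PER_GB_SECOND = 0.0000041667
SECONDS_PER_HOUR = 3600

def provisioned_concurrency_monthly(memory_mb: int, environments: int,
                                    hours: float = 730) -> float:
    """Charge for keeping `environments` warm for `hours`, busy or idle."""
    gb_seconds = (memory_mb / 1024) * environments * hours * SECONDS_PER_HOUR
    return gb_seconds * PC_PRICE_PER_GB_SECOND

print(f"${provisioned_concurrency_monthly(512, 10):.2f}")  # $54.75
print(f"${provisioned_concurrency_monthly(512, 1):.2f}")   # $5.48
```

The per-environment figure (about $5.48 a month at 512 MB) is the useful unit when sizing provisioned concurrency function by function.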

Scale that across 50 functions at 512 MB with 5 provisioned environments each, a typical microservices deployment. Annual costs hit about $19,000, while accepting INIT billing costs roughly $600. That is about 32 times as much: $18,400 a year spent on insurance against latency spikes that AWS says affect less than 1% of invocations.
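Here is a sketch of the fleet-level arithmetic, assuming 512 MB functions (the size used in the earlier examples). It computes only the provisioned concurrency charge. The $19,000 all-in and $600 INIT totals are this article's estimates, carried in as constants rather than derived.

```python
# Assumptions: 512 MB per function, us-east-1 x86 provisioned concurrency rate,
# 730-hour months. Invocation charges are not modeled.
PC_PRICE_PER_GB_SECOND = 0.0000041667
SECONDS_PER_MONTH = 730 * 3600

def fleet_pc_annual(functions: int, envs_per_function: int, memory_mb: int) -> float:
    """Annual provisioned concurrency charge for a fleet of identical functions."""
    gb = memory_mb / 1024
    monthly = gb * functions * envs_per_function * SECONDS_PER_MONTH * PC_PRICE_PER_GB_SECOND
    return monthly * 12

pc_only = fleet_pc_annual(50, 5, 512)   # about $16,425 before invocation charges
all_in = 19_000                         # article's estimate including invocations
accept_init = 600                       # article's estimate for INIT billing instead

print(f"PC only: ${pc_only:,.0f}/year")
print(f"Overspend: ${all_in - accept_init:,}/year, ratio {all_in / accept_init:.1f}:1")
```

The gap between the roughly $16,425 computed here and the $19,000 estimate is the invocation traffic the provisioned environments also serve.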

Why does this happen? Provisioned concurrency is easy. Set it, forget it, never think about cold starts again. But after August 2025, that convenience costs real money. The question isn’t whether provisioned concurrency works; it does. The question is whether it’s worth $19,000 when SnapStart and code optimization solve the same problem at little or no extra cost.

Cold Start Solutions That Actually Work in 2026

SnapStart remains the highest-ROI optimization for Java and C# applications. It reduces Java cold starts by up to 90% by creating encrypted snapshots of initialized execution environments and restoring them instead of re-initializing. Java 17 cold starts drop from 2,000 milliseconds to 200 milliseconds. That brings Java INIT costs from $16.67 per million down to $1.67, the same order of magnitude as Python. SnapStart supports the Java 11+, Python 3.12+, and .NET 8+ managed runtimes, and has supported the Arm64 architecture since July 2024.
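Turning SnapStart on is a configuration change followed by publishing a version, because snapshots are taken for published versions rather than `$LATEST`. The sketch below assumes a boto3 Lambda client is passed in (for example, `boto3.client("lambda")`). `enable_snapstart` and the function name `orders-api` are hypothetical. `update_function_configuration`, `get_waiter`, and `publish_version` are boto3 Lambda client calls.

```python
def enable_snapstart(lambda_client, function_name: str) -> str:
    """Enable SnapStart on an existing function and publish a version.

    `lambda_client` is assumed to be a boto3 Lambda client; it is passed in
    so the sketch has no hard dependency on AWS credentials.
    Returns the newly published version number as a string.
    """
    # Snapshots apply to published versions only, never to $LATEST.
    lambda_client.update_function_configuration(
        FunctionName=function_name,
        SnapStart={"ApplyOn": "PublishedVersions"},
    )
    # Block until the configuration update has finished before publishing.
    lambda_client.get_waiter("function_updated").wait(FunctionName=function_name)
    # Publishing runs INIT once and creates the snapshot; wait for the version to be active.
    version = lambda_client.publish_version(FunctionName=function_name)["Version"]
    lambda_client.get_waiter("published_version_active").wait(
        FunctionName=function_name, Qualifier=version
    )
    return version
```

Traffic then needs to reach that version, typically through an alias that points to it. Invocations of `$LATEST` still cold start normally.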

Code-level optimizations deliver incremental gains across all runtimes. Reducing package size matters because every megabyte adds milliseconds to INIT duration. Lazy initialization defers expensive setup until actually needed. Runtime selection creates baseline performance differences: Python and Node.js start in 200-400 milliseconds naturally, while Go and Rust consistently deliver the lowest startup times for real-time systems.

VPC configuration no longer imposes a meaningful cold start penalty. The old problem of 10+ second VPC cold starts disappeared when AWS moved Lambda to Hyperplane ENIs in 2019. That change reduced VPC overhead to under 100 milliseconds for properly configured functions. It removed one of the biggest historical cold start contributors without requiring application changes.

When to Pay for Speed vs When to Accept Cold Starts

The cost-aware architecture decision in 2026 starts with monitoring. Every cold start records an Init Duration value in the function’s REPORT log line, which you can query as @initDuration in CloudWatch Logs Insights. That data shows which functions actually experience cold start problems worth solving. Avoid “provisioned concurrency everywhere” defaults. Most background jobs, async processors, and low-traffic functions tolerate 200-500 millisecond cold starts without user impact.
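The sketch below shows two ways to pull cold start data. The first is a CloudWatch Logs Insights query using the `@initDuration` field. The second is an offline parser for raw REPORT lines, such as exported log events. The regular expression assumes the standard `Init Duration: <n> ms` field. SnapStart versions report restore time in a separate field, which this sketch does not parse. `summarize_report_lines` is a hypothetical helper.

```python
import re
from statistics import mean

# Logs Insights query to run against the function's log group.
# @initDuration is only present on REPORT events from cold starts.
LOGS_INSIGHTS_QUERY = """
filter @type = "REPORT"
| stats count(*) as invocations,
        count(@initDuration) as coldStarts,
        avg(@initDuration) as avgInitMs
  by bin(1d)
"""

# Matches the "Init Duration: 123.45 ms" field on cold start REPORT lines.
INIT_RE = re.compile(r"Init Duration: ([\d.]+) ms")

def summarize_report_lines(lines):
    """Return (invocations, cold_starts, mean_init_ms) from raw Lambda log lines."""
    reports = [line for line in lines if line.startswith("REPORT ")]
    inits = [float(m.group(1)) for line in reports if (m := INIT_RE.search(line))]
    return len(reports), len(inits), (mean(inits) if inits else 0.0)
```

Dividing cold starts by invocations gives the cold start rate. That rate and the mean INIT duration are exactly the inputs the cost calculations in this article need.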

Accept cold starts for workloads where latency isn’t revenue-critical. Background jobs processing queue messages, scheduled tasks, and webhook handlers either run asynchronously or serve callers that tolerate 1-2 second response times. INIT billing adds $1-5 monthly for typical usage patterns.

Use SnapStart for Java and C# applications before considering provisioned concurrency. It delivers up to 90% cold start reduction. For Java it adds no charge beyond normal per-invocation pricing, while .NET functions also pay for snapshot caching and restoration. If Java functions still take over 1 second to initialize after SnapStart, investigate initialization code rather than throwing money at provisioned concurrency.

Reserve provisioned concurrency for user-facing APIs with strict latency requirements. It makes financial sense when response time SLAs demand sub-100 millisecond performance and traffic is high and consistent enough to justify the cost. At that point it runs $15-30 monthly per function, roughly three to five 512 MB environments. Revenue-critical paths where latency directly impacts conversion rates or customer experience warrant the expense. Everything else is probably waste.
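The decision rules in this section can be condensed into a small helper. This is a hypothetical sketch. `FunctionProfile`, `recommend`, and the 100 ms threshold encode the guidance above and are not an AWS API. A real review would also weigh measured cold start rates.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class FunctionProfile:
    """Hypothetical profile of a function under review; fields mirror the criteria above."""
    name: str
    runtime: str                     # Lambda runtime identifier, e.g. "java21", "python3.12"
    user_facing: bool                # serves interactive, revenue-relevant traffic
    latency_sla_ms: Optional[float]  # None means no strict latency SLA
    steady_high_traffic: bool        # high, consistent request volume
    snapstart_enabled: bool = False

def recommend(p: FunctionProfile) -> str:
    """Return the cheapest cold start strategy consistent with the guidance above."""
    # Java and .NET: try SnapStart before paying for provisioned concurrency.
    if p.runtime.startswith(("java", "dotnet")) and not p.snapstart_enabled:
        return "enable SnapStart"
    # Provisioned concurrency only for user-facing paths with a 100 ms-or-tighter SLA
    # and traffic steady enough to keep the provisioned environments busy.
    strict_sla = p.latency_sla_ms is not None and p.latency_sla_ms <= 100
    if p.user_facing and strict_sla and p.steady_high_traffic:
        return "provisioned concurrency"
    # Everything else: accept cold starts and keep watching Init Duration.
    return "accept cold starts"

if __name__ == "__main__":
    fleet = [
        FunctionProfile("queue-worker", "python3.12", False, None, False),
        FunctionProfile("checkout-api", "java21", True, 80, True),
        FunctionProfile("search-api", "nodejs20.x", True, 80, True),
    ]
    for f in fleet:
        print(f.name, "->", recommend(f))
```

The ordering is deliberate: the free or cheap options are checked first, so provisioned concurrency is only ever the answer when the latency requirement, not habit, demands it.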

The Serverless Cost Reckoning

The serverless computing market is projected to grow from $31.99 billion in 2026 to $92.22 billion by 2034 at a 14.15% CAGR. That growth makes cost optimization a survival skill rather than a nice-to-have. Usage-based pricing creates budgeting challenges at scale. Cost visibility tools that reduce unexpected cloud spending by 25% become table stakes. Multi-concurrency models cutting compute usage 30-50% differentiate efficient architectures from wasteful ones.

The fundamental tension won’t resolve. Cloud providers improve performance and create new optimization products that cost more. Developers want performance guarantees and pay premiums for provisioned concurrency. AWS solved cold starts technically while monetizing the solution financially. The $19,000 question is whether your architecture evolved with it.

Key Takeaways

- AWS INIT phase billing (August 2025) increased cold start costs 22x from $0.80 to $17.80 per million invocations, hitting Java/C# applications hardest with 10-50% Lambda cost increases

- Enabling provisioned concurrency everywhere costs about $19,000 a year for 50 functions with 5 environments each, versus roughly $600 for accepting INIT billing: $18,400 of annual overspend

- SnapStart cuts Java/C# cold start costs by about 90%, reducing Java initialization from 2,000ms to 200ms and INIT billing from $16.67 to $1.67 per million; it carries no extra charge for Java, while .NET pays for snapshot caching and restoration

- Monitor the Init Duration field in Lambda REPORT logs (@initDuration in CloudWatch Logs Insights) to identify which functions need cold start optimization; avoid “provisioned concurrency everywhere” defaults that waste money on functions tolerating 200-500ms latency

- Reserve provisioned concurrency ($15-30/month per function) for user-facing APIs with sub-100ms SLAs and consistent high traffic; accept cold starts for background jobs and low-traffic functions

- Cost-aware serverless architecture is essential in 2026 as the market heads toward a projected $92B by 2034: usage-based pricing demands monitoring, SnapStart adoption, and selective provisioned concurrency instead of blanket solutions