Alibaba’s Qwen team has released Qwen3-Coder-Next, a sparse Mixture-of-Experts model that activates only 3 billion parameters per token yet performs comparably to models with 10-20 times more active parameters. On SWE-Bench Verified, which tests models on real GitHub issues, it scored 70.6%, about 2 percentage points behind Claude Sonnet 4’s 72.7%. The kicker? It runs on consumer hardware, the weights are free to download, and it ships with an Apache 2.0 license. That is strong evidence that architectural innovation can compete with brute-force scaling.

The Efficiency Revolution

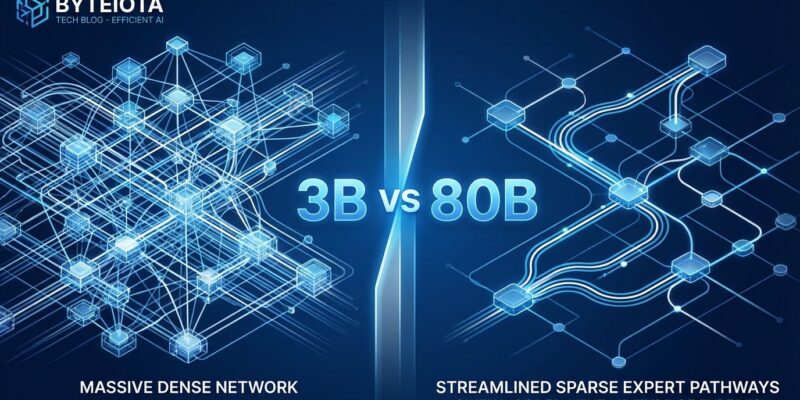

Qwen3-Coder-Next has 80 billion total parameters but activates only 3 billion per token, so roughly 96% of the weights sit idle for any given token. The secret is a sparse Mixture-of-Experts architecture: instead of pushing every token through all 80 billion parameters, each MoE layer routes the token to just 10 of its 512 specialized “experts.”
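The arithmetic behind those figures is easy to check. The short sketch below uses only the numbers quoted in this article; the variable names are illustrative.

```python
TOTAL_PARAMS = 80e9      # total parameters, as quoted in the article
ACTIVE_PARAMS = 3e9      # parameters activated per token, as quoted
NUM_EXPERTS = 512        # routed experts
EXPERTS_PER_TOKEN = 10   # experts selected for each token

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
reduction = 1 - active_fraction
expert_fraction = EXPERTS_PER_TOKEN / NUM_EXPERTS

print(f"active share of parameters: {active_fraction:.2%}")  # 3.75%
print(f"reduction vs. a dense 80B:  {reduction:.2%}")        # 96.25%
print(f"share of experts per token: {expert_fraction:.2%}")  # 1.95%
```

The active share of parameters (3.75%) is higher than the share of experts used (about 1.95%) because components outside the routed experts, such as attention and embeddings, run for every token.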

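The claimed result? Ten times higher throughput for repository-level tasks, plus a 262,144-token context window that can hold entire codebases. And because only 3 billion parameters activate per token, the compute per token is low enough for consumer hardware such as AMD Ryzen AI systems, Radeon graphics cards, and high-end gaming rigs. The caveat is memory: all 80 billion weights still have to be loaded, so most local setups will rely on a quantized build.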
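Whether a given machine can host the model depends mostly on memory, because every expert has to be resident even though only a few run per token. The following is a rough weights-only estimate at common precisions. It ignores the KV cache, activations, and quantization overhead, and the bit widths are generic assumptions rather than the sizes of any specific published build.

```python
def weight_memory_gb(total_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return total_params * bits_per_param / 8 / 1e9

TOTAL_PARAMS = 80e9  # total parameters, as quoted in the article

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gb(TOTAL_PARAMS, bits):.0f} GB")
# 16-bit: ~160 GB
# 8-bit: ~80 GB
# 4-bit: ~40 GB
```

Under these assumptions, a 4-bit build needs on the order of 40 GB for weights before any context is loaded, which is why high-memory desktops and unified-memory systems are the realistic targets.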

This challenges the “bigger is better” paradigm dominating AI development. Qwen3-Coder-Next shows the path forward isn’t just scaling up, but building smarter.

Performance That Competes

On SWE-Bench Verified, Qwen3-Coder-Next scored 70.6% versus Claude Sonnet 4’s 72.7%. That’s a 2.1-percentage-point gap, close enough that cost and control start to matter more than marginal quality differences. On SWE-Bench Pro, it hit 44.3%, beating DeepSeek-V3.2’s 40.9% despite being a smaller model.

Independent reviews rank Claude as “most complete and reliable,” but Qwen3-Coder delivers “solid outputs” at dramatically lower cost. The quality delta is small enough that many developers will trade about 2 percentage points of benchmark accuracy for zero API bills, full privacy, and the ability to fine-tune on proprietary code.

That raises an uncomfortable question for proprietary model makers: at what quality gap do most developers choose free over paid? Probably around 5 percentage points or less. On this benchmark, we’re already there.

How It Works

Qwen3-Coder-Next combines Gated DeltaNet for linear attention, gated attention for quality, and sparse MoE for specialization. The model has 48 layers with 512 routed experts, of which 10 are activated per token.
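The routing step can be sketched in a few lines. This is a toy illustration, not Qwen’s implementation: the router scores are random rather than produced by a learned layer, the experts are simple scaling functions, and normalizing with a softmax over only the selected experts is an assumption, since real MoE layers differ in how they score and normalize.

```python
import math
import random

NUM_EXPERTS = 512  # routed experts, as quoted in the article
TOP_K = 10         # experts activated per token, as quoted

def route_token(router_logits, k=TOP_K):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the k weights sum to 1.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_output(token, router_logits, experts, k=TOP_K):
    """Weighted sum of the chosen experts' outputs; the other experts never run."""
    return sum(w * experts[i](token) for i, w in route_token(router_logits, k))

rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router scores
# Toy experts: each scales its scalar input by a fixed factor.
experts = [lambda x, s=i / NUM_EXPERTS: s * x for i in range(NUM_EXPERTS)]

chosen = route_token(logits)
print(len(chosen))                  # 10 experts selected out of 512
print(moe_output(1.0, logits, experts))
```

Only the ten selected expert functions are ever called, which is where the compute saving comes from; the remaining 502 still occupy memory.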

The training methodology is equally innovative: 800,000 verifiable coding tasks with executable environments, reinforcement learning across real-world scenarios, and 20,000 parallel environments teaching multi-turn interactions and tool usage. It’s execution-driven learning at scale.
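What makes a coding task “verifiable” is that its reward can come from running code rather than from a human or model judge. A minimal sketch of that idea follows. The function name, the test-case format, and the fraction-passed reward are all assumptions for illustration; the article does not describe the actual reward design, and a real pipeline would run candidates in an isolated sandbox.

```python
def execution_reward(candidate_source: str, test_cases: list, func_name: str) -> float:
    """Return the fraction of test cases the candidate passes (0.0 to 1.0)."""
    if not test_cases:
        return 0.0
    namespace = {}
    try:
        # Illustration only: never exec untrusted code outside a sandbox.
        exec(candidate_source, namespace)
        func = namespace[func_name]
    except Exception:
        return 0.0  # code that fails to load or define the function earns nothing
    passed = 0
    for args, expected in test_cases:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failed test
    return passed / len(test_cases)

tests = [((2, 3), 5), ((-1, 1), 0), ((0, 0), 0)]
good = "def add(a, b):\n    return a + b\n"
bad = "def add(a, b):\n    return a - b\n"

print(execution_reward(good, tests, "add"))  # 1.0
print(execution_reward(bad, tests, "add"))   # passes only the (0, 0) case
```

A reinforcement learning loop would feed a score like this back to the model after each attempt, so the training signal reflects whether the code actually works.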

Vibe Coding Goes Mainstream

Because only 3 billion parameters activate per token, inference is light enough to run Qwen3-Coder-Next locally, provided the machine has enough memory for the weights. No expensive API calls. No sending proprietary code to external servers. Just download from Hugging Face, fire up Ollama or LM Studio, and start coding.
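Once a model is loaded in Ollama, it can be called from a script through Ollama’s local HTTP API. The sketch below assumes Ollama is running on its default port and uses a placeholder model tag; substitute whatever name `ollama list` reports on your machine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_TAG = "qwen3-coder-next"  # placeholder tag: check `ollama list` for the real one

def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt: str, model: str = MODEL_TAG) -> str:
    """Send the prompt and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=300) as resp:
        return json.loads(resp.read())["response"]

# With the server running:
# print(generate("Write a Python function that reverses a string."))
```

Nothing in this request leaves the machine, which is the privacy argument in practice.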

For indie developers, that’s transformative. For enterprises in regulated industries, it’s a game-changer. For teams in regions with poor connectivity, it’s the difference between having frontier AI coding assistants and not.

The Apache 2.0 license removes the last barrier. Fine-tune on your codebase. Deploy in air-gapped environments. Use commercially without restriction. This isn’t “open source” with asterisks.

What Comes Next

Qwen3-Coder-Next signals a shift from brute-force scaling to architectural innovation. In 2024, open alternatives lagged proprietary models by 6-12 months. In 2026, Qwen3-Coder-Next trails Claude Sonnet 4 by about 2 percentage points on SWE-Bench Verified. Quality parity is arriving fast.

Developers should test Qwen3-Coder-Next now. Download it from Hugging Face. Run it locally via Ollama. Compare it to Claude on your own use cases. If it meets your threshold, you can eliminate API bills and gain full control.
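One way to make “meets your threshold” concrete is to run both models on the same set of your own tasks and compare pass rates. The sketch below is a hypothetical harness: the function names are illustrative, the pass/fail lists are made-up placeholders, and the 5-point default simply mirrors the rough threshold suggested earlier in this article.

```python
def pass_rate_percent(results: list) -> float:
    """Percentage of tasks passed, given a list of True/False outcomes."""
    return 100 * sum(results) / len(results)

def meets_threshold(local_results: list, hosted_results: list,
                    max_gap_points: float = 5.0):
    """Return (acceptable, gap), where gap is hosted minus local in percentage points."""
    gap = pass_rate_percent(hosted_results) - pass_rate_percent(local_results)
    return gap <= max_gap_points, gap

# Made-up outcomes on 20 in-house tasks, for illustration only.
local_results = [True] * 14 + [False] * 6    # local model passes 14 of 20
hosted_results = [True] * 15 + [False] * 5   # hosted model passes 15 of 20

ok, gap = meets_threshold(local_results, hosted_results)
print(f"gap: {gap:.1f} points, acceptable: {ok}")  # gap: 5.0 points, acceptable: True
```

With only a few dozen tasks, a gap of a few points is within noise, so treat the result as a sanity check, not a benchmark.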

The era of paying for frontier AI coding assistants is ending. The era of running them locally, for free, with full control, is here.