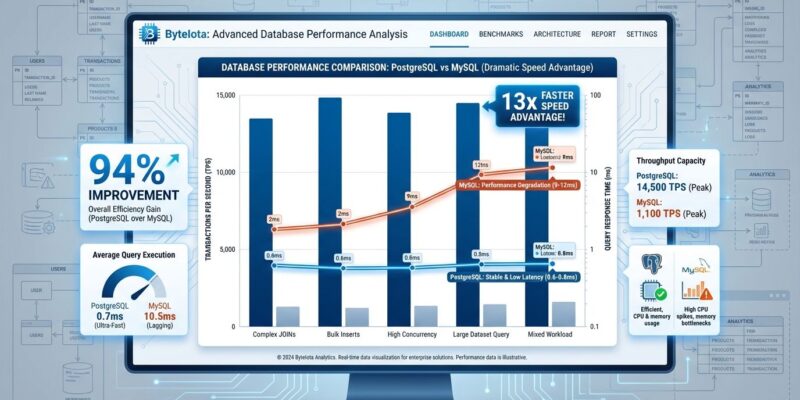

PostgreSQL 17 delivered a documented 94% reduction in query times in production, translating to $4,800 per month in infrastructure cost savings for one organization. Performance benchmarks show PostgreSQL executing queries in 0.6-0.8ms versus MySQL’s 9-12ms on a 1M-record dataset, roughly a 13x performance advantage. This isn’t incremental improvement. It’s a fundamental shift in database economics.

Database decisions have immediate bottom-line impact. Teams evaluating PostgreSQL versus MySQL need hard performance data, not marketing claims. The data is in, and the gap is widening.

The Benchmarks That Settle the Debate

A peer-reviewed benchmark study published by MDPI tested PostgreSQL 17 against MySQL across production workloads. The results are decisive:

- Simple queries (1M records): PostgreSQL executes in 0.6-0.8ms while MySQL requires 9-12ms. That’s 13x faster.

- High-volume reads: PostgreSQL achieves 23,441 queries per second versus MySQL’s 6,300 QPS—a 3.72x throughput advantage.

- Write operations: PostgreSQL shows 4.1ms mean latency compared to MySQL’s 26.5ms, making it 6.5x faster for writes.

- Performance under load: PostgreSQL maintains 0.7-0.9ms response times while MySQL degrades to 7-13ms as concurrent operations increase.
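
The multipliers in the list are plain ratios of the reported figures. Because the study reports latency as ranges, the latency comparison is really a range of ratios. A quick check in SQL, using only the numbers above:

```sql
-- Ratios of the benchmark figures quoted above (no new data).
SELECT
  round(9.0 / 0.8, 2)      AS latency_ratio_low,       -- 11.25: MySQL best vs PostgreSQL worst
  round(12.0 / 0.6, 2)     AS latency_ratio_high,      -- 20.00: MySQL worst vs PostgreSQL best
  round(10.5 / 0.7, 2)     AS latency_ratio_midpoint,  -- 15.00: midpoints of both ranges
  round(23441.0 / 6300, 2) AS read_qps_ratio,          -- 3.72
  round(26.5 / 4.1, 1)     AS write_latency_ratio;     -- 6.5
```

The 13x headline falls inside the roughly 11x to 20x range the latency figures imply. The throughput and write-latency multipliers match the reported numbers.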

These aren’t synthetic benchmarks. They’re production measurements. In one documented case study, a team that upgraded to PostgreSQL 17 saw query times drop from 30 seconds to 340ms, a reduction of nearly 99% for those queries. The case study’s headline figure is a 94% reduction in query times, and the upgrade unlocked $4,800 in monthly savings by eliminating three read replicas and reducing database size by 40%.
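
Percentage reductions and speedup factors are easy to conflate. For a query falling from 30 seconds (30,000ms) to 340ms, both can be computed directly from the case-study figures:

```sql
-- Percent reduction and speedup for 30 s -> 340 ms, using only the figures above.
SELECT
  round((1 - 340.0 / 30000) * 100, 1) AS pct_reduction,   -- 98.9
  round(30000.0 / 340, 1)             AS speedup_factor;  -- 88.2
```

A 94% reduction, by comparison, would correspond to a 30-second query finishing in about 1.8 seconds.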

MySQL still wins by about 10-20% on simple read-heavy workloads such as serving blog posts or product listings. However, for complex queries, concurrent writes, or JSON operations, PostgreSQL’s lead is decisive. The old “MySQL is faster” wisdom is dead for any non-trivial workload.

What Changed Under the Hood

PostgreSQL 17’s performance rests on three mechanisms that shape how the database handles maintenance and queries. Some are new in this release; others are long-standing features that 17 builds on:

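Visibility-map-driven VACUUM skips pages that haven’t changed. PostgreSQL keeps a per-table bitmap, the visibility map, that flags pages whose rows are visible to all transactions, and routine VACUUM runs skip those pages instead of scanning the entire table. If only 1% of your 1TB table changed, the heap scan touches roughly 10GB instead of 1TB, so that part of VACUUM’s cost tracks write volume rather than table size. PostgreSQL 17 adds a new internal memory structure for tracking dead rows during VACUUM that, per the release announcement, uses up to 20x less memory, so large cleanups need fewer passes over the table’s indexes.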
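
A minimal sketch for observing page skipping on your own system, assuming a throwaway table named vacuum_demo (hypothetical). pg_class.relallvisible counts heap pages currently marked all-visible, which are the pages VACUUM can skip, and VACUUM (VERBOSE) reports how many heap pages it actually scanned. Index vacuuming is a separate phase and can still read whole indexes, so this only illustrates the heap side.

```sql
-- Hypothetical demo table; name and row counts are illustrative only.
CREATE TABLE vacuum_demo (id int PRIMARY KEY, payload text);
INSERT INTO vacuum_demo
SELECT g, md5(g::text) FROM generate_series(1, 200000) AS g;

-- First VACUUM sets the all-visible bit for (nearly) every heap page.
VACUUM vacuum_demo;

-- Change about 1% of rows, chosen contiguously so the changes are
-- confined to a small fraction of the table's pages.
UPDATE vacuum_demo SET payload = 'changed' WHERE id <= 2000;

-- VERBOSE output reports how many heap pages were scanned out of the total;
-- pages still marked all-visible are skipped.
VACUUM (VERBOSE) vacuum_demo;

-- relallvisible vs relpages: how much of the heap is currently all-visible.
SELECT relpages, relallvisible
FROM pg_class
WHERE relname = 'vacuum_demo';
```

VACUUM cannot run inside a transaction block, so run these statements one at a time (for example in psql with autocommit on).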

Bi-directional index scans let one B-tree index serve both sort orders. An ascending index on a timestamp column can be read backward for ORDER BY ... DESC, with no second index and no rebuild. This optimizes pagination queries and time-series analysis where you need both ascending and descending traversal on the same column.
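
A minimal sketch, assuming a hypothetical events_demo table with a single ascending index. The plan labels are what PostgreSQL’s EXPLAIN prints for forward and backward index scans; the planner’s actual choice depends on table statistics, which is why the sketch runs ANALYZE first.

```sql
-- Hypothetical table for illustration only.
CREATE TABLE events_demo (
  id         bigint GENERATED ALWAYS AS IDENTITY,
  created_at timestamptz NOT NULL
);
INSERT INTO events_demo (created_at)
SELECT now() - g * interval '1 minute' FROM generate_series(1, 100000) AS g;

-- One ascending B-tree index; no DESC index is created.
CREATE INDEX events_demo_created_at_idx ON events_demo (created_at);
ANALYZE events_demo;

-- Oldest-first page: expect "Index Scan using events_demo_created_at_idx".
EXPLAIN SELECT * FROM events_demo ORDER BY created_at ASC LIMIT 20;

-- Newest-first page: expect "Index Scan Backward" on the same index.
EXPLAIN SELECT * FROM events_demo ORDER BY created_at DESC LIMIT 20;
```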

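Parallel VACUUM, available since PostgreSQL 13, runs the index vacuum and index cleanup phases in parallel using background workers. Each index is processed by at most one worker, so large tables with several indexes benefit most; for them, this can cut vacuum time by 50-80%. Because a standard (non-FULL) VACUUM already runs alongside normal reads and writes, the payoff is shorter maintenance runs and less time competing for I/O, not avoided downtime.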
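
Parallel VACUUM is requested with the PARALLEL option and capped by max_parallel_maintenance_workers. A minimal sketch, assuming a hypothetical orders_demo table with several indexes; whether workers actually launch also depends on max_worker_processes, max_parallel_workers, and each index’s size.

```sql
-- Hypothetical table with several indexes; names are illustrative only.
CREATE TABLE orders_demo (
  id          bigint PRIMARY KEY,
  customer_id int,
  status      text,
  created_at  timestamptz
);
CREATE INDEX orders_demo_customer_idx ON orders_demo (customer_id);
CREATE INDEX orders_demo_status_idx   ON orders_demo (status);
CREATE INDEX orders_demo_created_idx  ON orders_demo (created_at);

INSERT INTO orders_demo
SELECT g, g % 5000, (ARRAY['new', 'paid', 'shipped'])[1 + g % 3], now() - g * interval '1 second'
FROM generate_series(1, 500000) AS g;

-- Leave dead rows behind so VACUUM has index entries to clean up.
DELETE FROM orders_demo WHERE id % 10 = 0;

-- Session-level cap on workers available to maintenance commands.
SET max_parallel_maintenance_workers = 3;

-- Request up to 3 workers. Each index is vacuumed by at most one worker;
-- indexes smaller than min_parallel_index_scan_size are handled by the leader.
-- VERBOSE reports how many parallel workers were planned and launched.
VACUUM (PARALLEL 3, VERBOSE) orders_demo;
```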

These features deliver immediate ROI with zero application changes. Just upgrade and measure.
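
Measuring can be as simple as running the same representative query under EXPLAIN (ANALYZE, BUFFERS) before and after the upgrade and comparing execution time and buffer counts. A minimal sketch, assuming a hypothetical measure_demo table and query that stand in for a real one from your workload:

```sql
-- Hypothetical table and query standing in for a real workload.
CREATE TABLE measure_demo AS
SELECT g AS id, g % 1000 AS account_id, (g % 97) * 1.5 AS amount
FROM generate_series(1, 300000) AS g;
CREATE INDEX ON measure_demo (account_id);
ANALYZE measure_demo;

-- ANALYZE executes the query and reports actual row counts and timings;
-- BUFFERS adds shared-buffer hits and reads for each plan node.
EXPLAIN (ANALYZE, BUFFERS)
SELECT account_id, sum(amount)
FROM measure_demo
WHERE account_id BETWEEN 100 AND 200
GROUP BY account_id;
```

Run it several times on each version so cache warm-up doesn’t dominate the comparison. For workload-wide numbers rather than a single query, the pg_stat_statements extension records per-statement execution statistics.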

The $1.25 Billion Validation

In May 2025, Databricks acquired serverless PostgreSQL provider Neon for a reported $1 billion. Weeks later, Snowflake countered by acquiring Crunchy Data for a reported $250 million. When the two largest data warehouse companies spend $1.25 billion combined on PostgreSQL infrastructure, they’re not hedging. They’re betting PostgreSQL is the foundational database for AI-driven applications.

The valuations tell different stories. Neon’s $1 billion reflects its serverless architecture and the fact that over 80% of its databases are created by AI agents, not humans. Databricks sees PostgreSQL as the substrate for AI-native applications where databases are provisioned programmatically at scale. Crunchy Data’s $250 million reflects its enterprise compliance capabilities and battle-tested infrastructure for regulated industries, and Snowflake wants the government and healthcare markets where security and compliance trump developer convenience.

The strategic insight: These companies aren’t fighting over data warehousing anymore. They’re competing to become “the AI-native data foundation unifying analytics, operational storage, and machine learning.” PostgreSQL is the substrate layer for that foundation. This isn’t a trend. It’s a tectonic shift.

PostgreSQL 18’s Temporal Constraints

PostgreSQL 18, released in September 2025, adds native temporal constraints: database-enforced prevention of overlapping time periods without triggers or custom logic. Before this, preventing double-bookings or overlapping reservations required complex exclusion constraints or application-level checks. Now it’s part of the schema definition:

```sql
-- Temporal keys are backed by GiST; btree_gist adds GiST support for the INT column
CREATE EXTENSION IF NOT EXISTS btree_gist;

CREATE TABLE room_bookings (
  room_id            INT,
  reservation_period TSTZRANGE,
  -- No two bookings for the same room may have overlapping periods
  PRIMARY KEY (room_id, reservation_period WITHOUT OVERLAPS)
);

-- Temporal foreign keys enforce time-based referential integrity:
-- every service period must be covered by booked time for the same room
CREATE TABLE room_services (
  room_id        INT,
  service_period TSTZRANGE,
  FOREIGN KEY (room_id, PERIOD service_period)
    REFERENCES room_bookings (room_id, PERIOD reservation_period)
);
```

This matters for scheduling systems, audit trails, and any application dealing with time-series data where you need guarantees about non-overlapping periods. The database enforces temporal integrity the same way it enforces ordinary foreign key constraints: reliably and automatically.
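
To see the constraint at work, here is a minimal usage sketch, assuming PostgreSQL 18. It recreates room_bookings only if the table doesn’t already exist, and the room numbers and dates are hypothetical. The overlapping insert is wrapped in a DO block so the rejection is reported as a notice instead of aborting the script; it catches both exclusion and unique violations because the exact error class is not something this sketch relies on.

```sql
-- Assumes PostgreSQL 18; btree_gist supplies GiST support for the INT column.
CREATE EXTENSION IF NOT EXISTS btree_gist;
CREATE TABLE IF NOT EXISTS room_bookings (
  room_id            INT,
  reservation_period TSTZRANGE,
  PRIMARY KEY (room_id, reservation_period WITHOUT OVERLAPS)
);

-- Back-to-back stays: default '[)' bounds mean these ranges only touch, not overlap.
INSERT INTO room_bookings VALUES
  (101, tstzrange('2025-10-01 14:00+00', '2025-10-03 11:00+00')),
  (101, tstzrange('2025-10-03 11:00+00', '2025-10-05 11:00+00'));

-- An overlapping stay for the same room is rejected by the constraint itself.
DO $$
BEGIN
  INSERT INTO room_bookings VALUES
    (101, tstzrange('2025-10-02 12:00+00', '2025-10-04 12:00+00'));
  RAISE NOTICE 'unexpected: overlapping booking was accepted';
EXCEPTION WHEN exclusion_violation OR unique_violation THEN
  RAISE NOTICE 'rejected: %', SQLERRM;
END $$;

-- The same period in a different room is fine.
INSERT INTO room_bookings VALUES
  (102, tstzrange('2025-10-02 12:00+00', '2025-10-04 12:00+00'));
```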

When to Choose PostgreSQL (and When MySQL Is Fine)

The decision framework is straightforward: workload complexity determines the right choice.

Choose PostgreSQL when you need: Complex queries with joins and subqueries, concurrent write-heavy workloads, ACID compliance for financial or critical data, advanced features like JSON operations or full-text search, or vector search via pgvector for GenAI applications. PostgreSQL is also the default for long-term reporting and analytics on the same database.
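
For readers who haven’t used the JSON and full-text features, a minimal sketch of what they look like in plain SQL, assuming a hypothetical products_demo table. Both features are built in; pgvector is a separately installed extension and is left out here.

```sql
-- Hypothetical table for illustration only.
CREATE TABLE products_demo (
  id          serial PRIMARY KEY,
  attributes  jsonb,
  description text
);
INSERT INTO products_demo (attributes, description) VALUES
  ('{"color": "red",  "tags": ["outdoor", "waterproof"]}', 'Lightweight waterproof jacket for hiking'),
  ('{"color": "blue", "tags": ["indoor"]}',                'Cotton lounge jacket for relaxing at home');

-- GIN index that supports JSONB containment (@>) queries.
CREATE INDEX ON products_demo USING gin (attributes);

-- JSON: rows whose attributes contain the given key/value pair.
SELECT id FROM products_demo WHERE attributes @> '{"color": "red"}';

-- Full-text search: English stemming lets 'hikes' match 'hiking'.
SELECT id FROM products_demo
WHERE to_tsvector('english', description) @@ to_tsquery('english', 'hikes & waterproof');
```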

MySQL is fine when you have: Simple read-heavy workloads serving blog posts or product listings, straightforward data models without complex relationships, or legacy systems where compatibility matters more than features. If that 10-20% read performance edge for simple queries matters more than everything else, MySQL is still viable.

The truth most vendors won’t tell you: For typical CRUD applications, the performance difference is under 30% either way. Modern hardware and PostgreSQL 17/18 updates have narrowed the historical MySQL speed advantage for reads to that 10-20% edge on simple queries. Therefore, choose based on workload complexity and feature requirements, not speed myths from 2015.

The market signals where things are headed. PostgreSQL shows 55.6% developer preference in the 2025 Stack Overflow survey, 73% growth in job listings, and roughly a 12% pay premium for PostgreSQL developers versus MySQL developers. MySQL still has the larger deployment base from legacy web applications, but new projects default to PostgreSQL.

The Bottom Line

PostgreSQL 17 and 18 deliver measurable performance gains and cost savings in production. The 13x benchmark advantage isn’t marketing; it comes from peer-reviewed benchmarks. The $4,800 monthly savings isn’t theoretical; it’s a documented case study. And the $1.25 billion in acquisitions isn’t coincidence; it’s validation that PostgreSQL is foundational infrastructure for the next decade of application development.

If you’re running MySQL for anything more complex than simple reads, the migration ROI is provable. If you’re starting a new project in 2026, PostgreSQL is the safer bet. The performance gap is widening, not closing, and the ecosystem momentum is decisive.

PostgreSQL is 40 years old this year. It’s more relevant now than ever.