OpenAI announced on January 14, 2026, a $10 billion partnership with Cerebras Systems to deploy 750 megawatts of AI inference computing through 2028—the largest high-speed inference deployment in the world. Cerebras’ Wafer-Scale Engine architecture delivers up to 15x faster inference than traditional GPU systems, addressing the latency bottleneck that limits real-time conversational AI. The deal marks OpenAI’s largest infrastructure diversification move away from Nvidia while validating Cerebras’ $22 billion pre-IPO valuation announced just one day earlier.

OpenAI Commits $10B for 750MW of Cerebras Inference Through 2028

The partnership focuses exclusively on AI inference—the production phase where trained models generate responses for end users. Cerebras will install wafer-scale systems across US datacenters starting Q1 2026, with capacity scaling through 2028. OpenAI rents compute time on these systems rather than purchasing hardware outright, similar to cloud infrastructure models but optimized specifically for low-latency inference workloads.

From OpenAI’s official announcement: “Cerebras adds a dedicated low-latency inference solution to OpenAI’s platform, meaning faster responses, more natural interactions, and a stronger foundation to scale real-time AI to many more people.” The 750MW deployment is equivalent to powering thousands of traditional GPU servers but promises dramatically better performance per watt for inference tasks.

This isn’t OpenAI testing new vendors—it’s strategic diversification. Depending entirely on Nvidia creates supply risk and limits negotiating leverage. Cerebras offers an architectural alternative specifically optimized for the workload that matters most at scale: serving billions of ChatGPT queries daily.

Inference Replaces Training as AI’s Dominant Cost Center

AI infrastructure spending is undergoing a fundamental shift. Training and inference currently represent roughly equal costs, but Deloitte projects inference will account for two-thirds of all AI compute spending by 2026. The math is straightforward: training builds a model once, but inference serves billions of queries continuously. At OpenAI’s scale, inference latency and throughput matter more than training speed.

Traditional GPU clusters weren’t designed for this workload. They excel at parallel training tasks but face memory bandwidth bottlenecks during inference. According to VentureBeat, “While massive GPU clusters excel at training huge models, the time it takes for a deployed model to ‘think’ and respond—inference—is the current friction point for widespread, real-time AI adoption.”

Real-time applications like voice AI, fraud detection, and interactive agents require sub-100ms latency. GPU-based inference typically delivers responses in 1-3 seconds. That gap explains why OpenAI would commit $10 billion to specialized inference hardware rather than adding more training capacity.

Related: Cerebras $1B at $22B: Nvidia’s Inference Rival Pre-IPO

How Cerebras Wafer-Scale Engine Delivers 2.5-21x Faster Inference

Cerebras’ Wafer-Scale Engine (WSE-3) takes a fundamentally different approach to AI acceleration. Instead of connecting hundreds of small GPUs with cables and network switches, the WSE-3 is a single silicon wafer measuring 46,255 mm² with 900,000 AI cores and 4 trillion transistors. It’s the largest chip ever built, and that scale enables architectural advantages GPUs can’t match.

The critical difference is memory architecture. GPUs store model weights in external HBM memory and shuttle data back and forth during inference. The WSE-3 packs 44GB of SRAM directly on the wafer—nearly 1,000x more on-chip memory than an H100—with 214 Pb/s interconnect bandwidth. Model parameters don’t move between chips or memory banks; they’re already positioned near the compute cores.

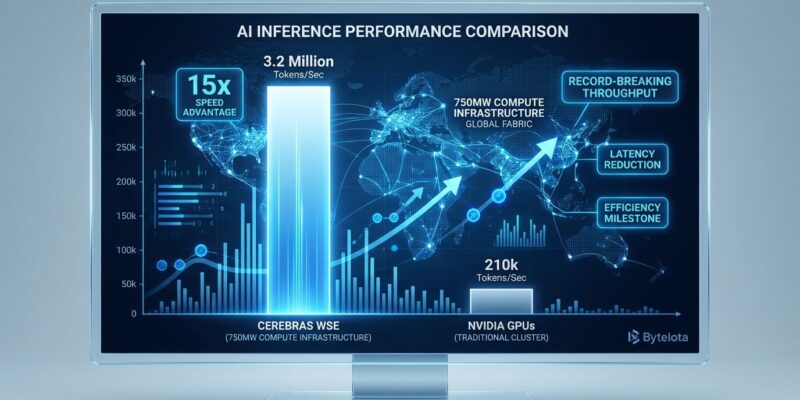

Benchmarks from Artificial Analysis show the performance impact. Llama 4 Maverick (400B parameters) generates 2,522 tokens/second on Cerebras versus 1,038 tok/s on Nvidia’s Blackwell—a 2.5x advantage. For GPT-OSS-120B, Cerebras hits 3,000+ tok/s compared to Blackwell’s 650 tok/s, a 5x gain. Llama 3 70B shows even larger gaps in reasoning scenarios, reaching 21x faster inference.

The “15x faster” claim varies by workload, but the pattern holds: Cerebras consistently delivers 2.5-21x better throughput depending on model size and inference pattern. For ChatGPT serving millions of concurrent users, that throughput advantage translates directly to lower latency and reduced infrastructure costs.

Cerebras Challenges Nvidia Inference Dominance Ahead of Q2 IPO

Nvidia maintains over 95% market share in AI chips, protected by 20 years of CUDA development and ecosystem maturity. However, that dominance was built on training workloads. Inference is a different game with different requirements—lower latency, higher throughput, cost sensitivity—and that’s where specialized architectures find openings.

The timing of this deal is strategic for both companies. OpenAI diversifies infrastructure risk while optimizing for inference economics. According to CNBC, Cerebras diversifies revenue away from G42, which represented 87% of H1 2024 revenue, while validating its $22B pre-IPO valuation ahead of a planned Q2 2026 IPO. The deal follows major contracts with IBM and the US Department of Energy, proving Cerebras can scale beyond single-customer concentration.

Nvidia isn’t standing still. The company acquired startup Groq for $20 billion in 2025 to integrate deterministic scheduling into its upcoming “Rubin” platform—a direct response to the inference competition from Cerebras and others. Market consensus: the era of one-size-fits-all GPUs dominating both training and inference is ending. The market is segmenting into specialized architectures, with inference-optimized chips projected to capture 15-25% market share by 2030.

The real question isn’t whether Cerebras replaces Nvidia—it won’t. Training still requires GPUs, and Nvidia’s ecosystem remains unmatched. But as inference spending reaches two-thirds of the AI compute market, specialized architectures that eliminate memory bottlenecks and deliver 5-15x better throughput create genuine alternatives. Consequently, OpenAI’s $10B bet suggests that segmentation is already happening.

Key Takeaways

- OpenAI’s $10B Cerebras partnership signals inference has become the dominant AI bottleneck and cost center, not training

- Wafer-scale architecture delivers 2.5-21x faster inference by eliminating GPU memory bandwidth bottlenecks with 44GB on-chip SRAM

- Nvidia’s 95% market dominance faces genuine challenge in the inference segment, which will represent 67% of AI spending by 2026

- 750MW deployment through 2028 enables sub-100ms latency for real-time applications (voice AI, fraud detection, interactive agents)

- The AI chip market is segmenting: specialized inference architectures versus general-purpose training GPUs, not replacement but complementary roles