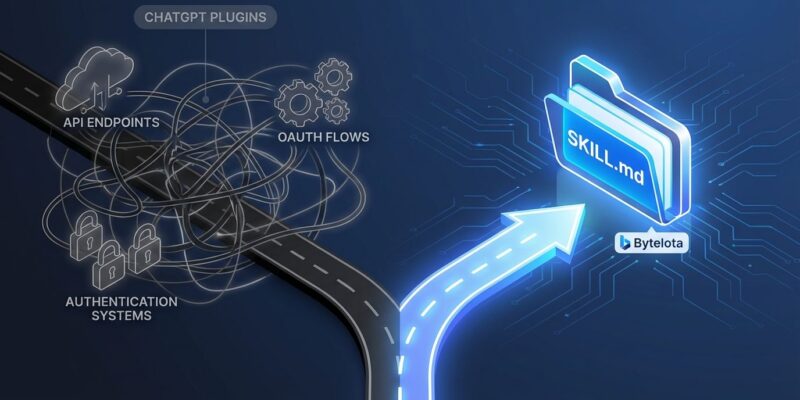

OpenAI has quietly added “skills” support to both ChatGPT and its Codex CLI tool, adopting Anthropic’s lightweight approach to extending AI capabilities. The change surfaced on December 12, 2025. Skills are just folders with markdown files: no APIs, no authentication, no complex setup. This is a stark contrast to OpenAI’s failed ChatGPT Plugins, which OpenAI began winding down in March 2024 after developers balked at their complexity. When a smaller competitor shapes a larger rival’s design, it signals a shift: simplicity wins.

Skills Beat Plugins Because Developers Hate Complexity

Skills are folders containing a SKILL.md file with YAML frontmatter and instructions. That’s it. No API development, no OAuth 2.0 authentication, no server infrastructure. You install them with git clone, not through an app store approval process.
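To make the folder layout concrete, here is a minimal Python sketch that writes a skill to disk. The `name` and `description` frontmatter keys follow Anthropic’s published skill format; the skill name, its text, and the helper functions `render_skill_md` and `write_skill` are hypothetical and exist only for illustration.

```python
from pathlib import Path
import tempfile


def render_skill_md(name: str, description: str, instructions: str) -> str:
    """Return SKILL.md text: YAML frontmatter followed by markdown instructions."""
    # Assumption: plain `key: value` frontmatter. Values that contain a colon
    # or other YAML syntax would need quoting, which this sketch does not do.
    frontmatter = f"---\nname: {name}\ndescription: {description}\n---\n"
    return f"{frontmatter}\n{instructions.strip()}\n"


def write_skill(root: Path, name: str, description: str, instructions: str) -> Path:
    """Create <root>/<name>/SKILL.md and return the path to the file."""
    skill_dir = root / name
    skill_dir.mkdir(parents=True, exist_ok=True)
    skill_file = skill_dir / "SKILL.md"
    skill_file.write_text(
        render_skill_md(name, description, instructions), encoding="utf-8"
    )
    return skill_file


if __name__ == "__main__":
    # Write into a temporary directory so the demo leaves nothing behind.
    with tempfile.TemporaryDirectory() as tmp:
        path = write_skill(
            Path(tmp),
            "release-notes",  # hypothetical skill name
            "Draft release notes from a list of merged pull requests.",
            "# Release notes\n\nGroup changes by type, then summarize each group.",
        )
        print(path.read_text(encoding="utf-8"))
```

The whole skill is one text file in one directory, which is why distribution can be as simple as copying or cloning a folder.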

Compare this to ChatGPT Plugins. OpenAI’s plugin system required a JSON manifest, hosted API endpoints described by an OpenAPI spec, a developer account, and often OAuth authentication. It was too complex, and developers abandoned it. OpenAI stopped new plugin conversations in March 2024 and shut the system down completely in April 2024, about a year after launch.

Now OpenAI is copying Anthropic’s simpler approach. ChatGPT Code Interpreter has a /home/oai/skills folder with skills for spreadsheets, DOCX, and PDFs. Codex CLI loads skills from ~/.codex/skills and lists them at runtime under a ## Skills heading. Simon Willison, who spotted the adoption, described skills as “based on a very light specification, if you could even call it that.”

The difference is night and day. Plugins locked you into ChatGPT’s ecosystem and required constant maintenance. Skills work across ChatGPT, Codex CLI, and Claude—any LLM tool with filesystem access can support them. Developers prefer tools that get out of their way.

How OpenAI Skills Work in ChatGPT and Codex CLI

ChatGPT Code Interpreter automatically accesses skills in /home/oai/skills when you prompt it. Currently, you’re limited to OpenAI-provided skills: spreadsheets, DOCX, and PDFs. For PDFs, OpenAI converts pages to PNG images and processes them with vision models to preserve layout and graphics—smart, but closed.

Codex CLI takes a more open approach. It loads skills from ~/.codex/skills at startup. You install community skills via git clone <skill-repo> ~/.codex/skills/<skill-name>, then run Codex with the --enable skills flag. Skills appear in a ## Skills section with their names and descriptions.
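The discovery step described above can be sketched in a few lines of Python. This is an illustration only, not Codex CLI’s actual implementation: the functions `read_frontmatter` and `render_skills_section` are hypothetical, the frontmatter parser is deliberately naive (simple `key: value` lines, no real YAML), and the exact output format Codex uses is an assumption.

```python
from pathlib import Path


def read_frontmatter(skill_file: Path) -> dict[str, str]:
    """Parse simple `key: value` pairs between the leading `---` lines."""
    lines = skill_file.read_text(encoding="utf-8").splitlines()
    if not lines or lines[0].strip() != "---":
        return {}  # no frontmatter block at the top of the file
    meta: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break  # closing delimiter: stop before the instructions body
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def render_skills_section(skills_root: Path) -> str:
    """List every <skills_root>/<skill>/SKILL.md as one line under '## Skills'."""
    entries = []
    for skill_file in sorted(skills_root.glob("*/SKILL.md")):
        meta = read_frontmatter(skill_file)
        # Fall back to the folder name when the frontmatter has no `name`.
        name = meta.get("name", skill_file.parent.name)
        description = meta.get("description", "(no description)")
        entries.append(f"- {name}: {description}")
    return "## Skills\n" + "\n".join(entries)


if __name__ == "__main__":
    # Read-only scan of the directory the article says Codex CLI uses.
    print(render_skills_section(Path.home() / ".codex" / "skills"))
```

Only the name and description of each skill are read here; the instructions themselves stay on disk until a task needs them.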

Simon Willison demonstrated this by creating a Datasette plugin skill that generated working Python code. The barrier to entry is trivial—write a markdown file, clone it to the right directory, and you’re done. Official Codex CLI documentation explains the full implementation.

OpenAI appears to be testing the concept with ChatGPT’s closed implementation before rolling out community skills more broadly. If you’re using Codex CLI, you can already install third-party skills from GitHub today.

Anthropic Did It First (and Better)

Anthropic introduced skills in October 2025 across Claude.ai, Claude Code, and their API. Their implementation uses “progressive disclosure”: only a short name and description for each skill sits in context by default, and the full instructions load when a task calls for them. Because of this, the amount of material a skill can bundle is “effectively unbounded.” Skills load dynamically based on the task at hand, keeping performance fast while still giving access to specialized expertise.
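A rough Python sketch of progressive disclosure follows. It is not Anthropic’s implementation: the `Skill` class and `build_context` function are hypothetical, and selecting a skill because its name appears in the task text is a simple stand-in for the model deciding relevance from the descriptions.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Skill:
    name: str
    description: str
    path: Path  # file holding the frontmatter plus the full instructions

    def load_body(self) -> str:
        """Read the full instructions from disk, minus the frontmatter block."""
        text = self.path.read_text(encoding="utf-8")
        if text.startswith("---"):
            # The first "\n---" after the opening line is the closing delimiter.
            _, sep, rest = text.partition("\n---")
            if sep:
                return rest.lstrip("\n")
        return text


def build_context(skills: list[Skill], task: str) -> str:
    """Always include the cheap index; load bodies only for selected skills."""
    # Stage 1: one line per skill, small enough to keep in every prompt.
    index = "\n".join(f"- {s.name}: {s.description}" for s in skills)
    # Stage 2: naive selection (assumption): the skill name occurs in the task.
    chosen = [s for s in skills if s.name.lower() in task.lower()]
    bodies = "\n\n".join(s.load_body() for s in chosen)
    return f"## Skills\n{index}\n\n{bodies}".rstrip()
```

The cost of an unused skill is one index line, so a large library of skills adds little to the prompt until one of them is actually needed.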

Anthropic’s use cases include company brand guidelines, organization-specific workflows, Excel spreadsheets with formulas, and PowerPoint presentations. The design is thoughtful: progressive loading means the number of installed skills is far less constrained by context limits, and skills aren’t platform-locked. Any LLM with filesystem access can support them.

OpenAI’s adoption validates Anthropic’s design. When a smaller rival (Anthropic) shapes the product direction of a larger competitor (OpenAI), that’s newsworthy. Simon Willison called Claude Skills “awesome, maybe a bigger deal than MCP” back in October. OpenAI’s move supports that view.

The Agentic AI Foundation Is Formalizing This

Three days before OpenAI’s skills adoption surfaced, the Linux Foundation announced the Agentic AI Foundation on December 9, 2025. Its founding projects are MCP (Model Context Protocol), donated by Anthropic; AGENTS.md, donated by OpenAI; and goose, donated by Block. Platinum members include AWS, Google, Microsoft, Bloomberg, and Cloudflare.

Simon Willison suggested the foundation could formalize skills specifications, making them an official standard alongside MCP and AGENTS.md. AGENTS.md has already been adopted by 60,000+ open source projects. Skills are likely next.

The timing is telling. Industry-wide standardization is happening at the same time as skills adoption. Developers benefit from standardization: write a skill once, use it across ChatGPT, Claude, and other AI tools. The foundation’s backing means this isn’t a passing trend—it’s the future of extending AI capabilities.

What Developers Should Do Now

If you use ChatGPT Code Interpreter, prompt it to access /home/oai/skills for spreadsheet, DOCX, and PDF processing. If you use Codex CLI, install community skills via git clone to ~/.codex/skills and run with --enable skills. Start creating custom skills for your workflows—just a folder with a SKILL.md file containing YAML frontmatter and instructions.

Skills work in Claude.ai, Claude Code, ChatGPT, and Codex CLI today, although ChatGPT is currently limited to the skills OpenAI provides. The barrier to entry is so low that any developer can extend their AI tools for domain-specific tasks without writing APIs or dealing with authentication. Expect cross-tool compatibility to improve if the Agentic AI Foundation formalizes a specification.

OpenAI’s ChatGPT Plugins failed because they were too complex. Skills succeed because they’re simple. When OpenAI copies Anthropic’s approach, that’s validation. Simplicity wins.