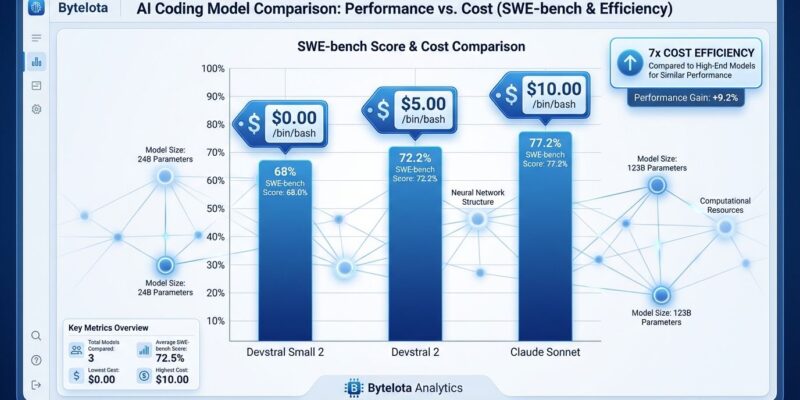

Mistral AI released Devstral 2 today—a coding model hitting 72.2% on SWE-bench Verified while costing $2 per million output tokens, seven times cheaper than Claude Sonnet’s $15. The French AI company launched two variants: a 123-billion parameter model and a 24-billion version that runs on a single RTX 4090. Alongside the models, Mistral shipped Vibe CLI, an open-source terminal coding assistant capitalizing on the shift from IDE autocomplete to conversational agents. In human evaluations, Devstral 2 beats open-source competitor DeepSeek V3.2 with a 42.8% vs 28.6% win rate—though Mistral concedes Claude Sonnet “remains significantly preferred.”

This matters because enterprises scaling AI coding face exploding API costs. Teams spending thousands of dollars a month on Claude now have a credible alternative that trades 5 percentage points of SWE-bench accuracy for roughly 87% lower per-token prices. The Apache 2.0 license for the smaller variant also enables fully local deployment for privacy-sensitive projects.

The 7x Cost Advantage Has a Catch

Devstral 2 costs $0.40 input and $2.00 output per million tokens compared to Claude Sonnet 4.5’s $3.00 and $15.00. At 100 million output tokens daily (a high-volume scenario for large teams), Claude runs $1,500 per day versus Devstral’s $200, a difference of $1,300 per day or about $474,500 per year on output tokens alone. That’s compelling math for startups and scale-ups watching their AI budgets spiral.
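The arithmetic behind that comparison is easy to reproduce. The following is a minimal sketch that uses only the output prices and the 100-million-token daily volume quoted above; the function names are illustrative, and input-token costs are deliberately left out.

```python
# Output prices per million tokens, as quoted in the article (USD).
CLAUDE_OUTPUT_PRICE = 15.00
DEVSTRAL_OUTPUT_PRICE = 2.00


def daily_output_cost(million_tokens_per_day: float, price_per_million: float) -> float:
    """Cost in USD of one day's output tokens at a given per-million price."""
    return million_tokens_per_day * price_per_million


def annual_output_savings(million_tokens_per_day: float, days: int = 365) -> float:
    """Yearly difference between Claude and Devstral output-token spend."""
    claude = daily_output_cost(million_tokens_per_day, CLAUDE_OUTPUT_PRICE)
    devstral = daily_output_cost(million_tokens_per_day, DEVSTRAL_OUTPUT_PRICE)
    return (claude - devstral) * days


if __name__ == "__main__":
    print(daily_output_cost(100, CLAUDE_OUTPUT_PRICE))    # Claude, per day
    print(daily_output_cost(100, DEVSTRAL_OUTPUT_PRICE))  # Devstral, per day
    print(annual_output_savings(100))                     # yearly difference
```

Swapping in your own daily volume shows how quickly the gap shrinks for smaller teams.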

However, the 5-percentage-point gap between Devstral 2’s 72.2% SWE-bench Verified score and Claude Sonnet’s 77.2% represents roughly 25 additional solved issues out of the benchmark’s 500 tasks. Mistral’s own announcement acknowledges Claude “remains significantly preferred” in human evaluations. That language matters: it suggests the quality difference is noticeable in practice, not just on benchmarks. If lower accuracy increases human review time by even 10%, the added labor cost could offset part or all of the API savings. SWE-bench also measures GitHub issue resolution, not day-to-day coding quality, so real-world performance will vary.

The smart play isn’t wholesale replacement. Use Devstral 2 for high-volume, low-stakes tasks: unit test generation, documentation, boilerplate code, multi-file refactors. Reserve Claude budget for complex reasoning, critical systems (financial, medical, security), and architectural decisions where quality outweighs cost.
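One way to put that split into practice is a small routing table in front of your model calls. This is a sketch only: the task labels and the model identifiers `devstral-2` and `claude-sonnet` are hypothetical placeholders, not names from either vendor's API.

```python
# Hypothetical task labels, mirroring the categories listed in the article.
LOW_STAKES_TASKS = {
    "unit_tests",
    "documentation",
    "boilerplate",
    "multi_file_refactor",
}

# Placeholder model identifiers; substitute whatever your gateway expects.
CHEAP_MODEL = "devstral-2"
PREMIUM_MODEL = "claude-sonnet"


def choose_model(task_type: str) -> str:
    """Route high-volume, low-stakes work to the cheaper model.

    Anything not explicitly listed as low-stakes (complex reasoning,
    critical systems, architecture, or an unknown label) falls through
    to the premium model, so mistakes err on the side of quality.
    """
    if task_type in LOW_STAKES_TASKS:
        return CHEAP_MODEL
    return PREMIUM_MODEL


if __name__ == "__main__":
    for task in ("unit_tests", "architecture", "something_new"):
        print(task, "->", choose_model(task))
```

Defaulting unknown tasks to the premium model is a design choice: it costs more, but it avoids silently sending critical work to the weaker model.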

Beating DeepSeek While Staying Compact

In benchmark comparisons, Devstral Small 2 (24 billion parameters) scores 68.0% on SWE-bench Verified, matching Qwen 3 Coder Plus even though that model is 20 times larger at 480 billion parameters. The full Devstral 2 model at 123 billion parameters achieves 72.2%, positioning itself between open-source competitors and Claude’s 77.2% ceiling. Meanwhile, on Terminal Bench 2, Claude dominates at 42.8% while Devstral manages 22.5%, revealing weaknesses in command-line interaction tasks.

Mistral’s efficiency narrative holds: these models punch above their weight class. The company reports that in head-to-head human evaluations Devstral 2 won 42.8% of comparisons against DeepSeek V3.2, which won 28.6%, a 14-point advantage despite Devstral 2 being about five times smaller. That matters for teams deploying on limited GPU infrastructure or self-hosting.

But efficiency cuts both ways. The 123-billion-parameter Devstral 2 requires four H100 GPUs minimum—not feasible for individual developers. However, the 24-billion variant runs on consumer hardware (RTX 4090, Mac with 32GB RAM), making it accessible for local experimentation. Both models support 256K context windows, tool calling, and vision capabilities for analyzing screenshots and diagrams.
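A back-of-the-envelope estimate of weight memory helps explain those hardware figures. The sketch below counts model weights only (no KV cache, activations, or runtime overhead), and the precisions are assumptions, since the article does not say which precision or quantization each setup uses. The 80 GB and 24 GB figures are the standard memory sizes of an H100 and an RTX 4090.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    One billion parameters at 8 bits each occupy 1 GB, so the estimate is
    simply billions of parameters times bytes per parameter.
    """
    return params_billion * bits_per_param / 8


if __name__ == "__main__":
    # Assumption: 16-bit weights for the 123B model, split across four 80 GB H100s.
    print(weight_memory_gb(123, 16), "GB of weights vs", 4 * 80, "GB on four H100s")

    # Assumption: the 24B model needs quantization to fit a 24 GB RTX 4090.
    print(weight_memory_gb(24, 16), "GB at 16-bit")
    print(weight_memory_gb(24, 4), "GB at 4-bit")
```

Under these assumptions, the 24-billion-parameter model only fits on a single consumer GPU when quantized, which is worth keeping in mind when comparing local output quality against the hosted API.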

Apache 2.0 Licensing Opens Local Deployment

Devstral Small 2 ships under Apache 2.0, enabling on-premises deployment without cloud API exposure. This matters for data-sensitive enterprises in finance, healthcare, and defense where sending code to external APIs violates compliance policies. In contrast, the larger Devstral 2 uses a “modified MIT” license. The details of the modification are unclear and likely include commercial restrictions, so legal review is recommended before enterprise adoption.

Deployment instructions from Hugging Face recommend vLLM with temperature 0.15 for instruction-following tasks. The model integrates with existing agentic frameworks including Cline, OpenHands, and SWE Agent, allowing teams to swap it in as a backend for Claude or GPT workflows.
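Once a vLLM server is serving the model, a request with the recommended temperature might look like the sketch below. It relies on vLLM's OpenAI-compatible `/v1/chat/completions` endpoint and uses only the Python standard library. The base URL (localhost, port 8000) is an assumption about your deployment, and the helper names are illustrative.

```python
import json
import urllib.request

MODEL = "mistralai/Devstral-Small-2-24B-Instruct-2512"
# Assumption: the server is reachable locally on vLLM's default port.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt: str, temperature: float = 0.15) -> dict:
    """Build an OpenAI-style chat payload with the recommended temperature."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def send_chat_request(payload: dict, base_url: str = BASE_URL) -> str:
    """POST the payload to the server and return the first reply's text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("Write a unit test for a function that reverses a string.")
    print(json.dumps(payload, indent=2))
    # Uncomment once a server is running at BASE_URL:
    # print(send_chat_request(payload))
```

Because the endpoint follows the OpenAI wire format, frameworks that already speak that format can usually be pointed at the same base URL.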

```shell
# Deploy Devstral Small 2 locally
docker run -it --gpus all \
  mistralllm/vllm_devstral:latest \
  --model mistralai/Devstral-Small-2-24B-Instruct-2512
```

Compare this to Meta’s Llama license restrictions and GitHub Copilot’s mandatory cloud infrastructure. Mistral has carved out space between proprietary models (Claude, GPT) and restrictively licensed open models (Llama) by offering permissive licensing that enterprises actually want. The €11.7 billion valuation following ASML’s €1.3 billion September investment suggests investors believe this strategy works.

Vibe CLI Rides the Terminal Coding Wave

Mistral Vibe CLI launched today as an open-source (Apache 2.0) terminal coding assistant with natural language commands, Git integration, persistent history, and file manipulation. It competes against Cursor and Aider in the “vibe coding” market—the industry’s shift from IDE autocomplete (GitHub Copilot) to conversational agents. TechCrunch notes Mistral is “surfing vibe-coding tailwinds,” which is accurate: Cursor raised over $100 million on exactly this premise.

The timing is strategic. Developers increasingly prefer typing natural language commands in their terminal over tab-completing through boilerplate. Mistral offers an open-source alternative that can run fully locally with Devstral Small 2, avoiding both the cost and the privacy concerns of cloud-based tools. Integrations exist for Zed IDE, Kilo Code, and Cline.

The catch: the CLI launched hours ago. Expect bugs, missing features, and rough edges compared to mature competitors. Early adopters get cost and privacy benefits; mainstream users should wait for stability and community validation. The Vibe CLI GitHub repository isn’t trending yet, suggesting limited immediate adoption despite the Hacker News buzz (270 points, 107 comments).

When to Choose Devstral Over Claude

Use Devstral 2 when budget matters more than perfection. High-volume scenarios (generating thousands of unit tests, documenting APIs, scaffolding CRUD endpoints) benefit from cost efficiency without requiring Claude-level reasoning. Teams spending $10,000+ monthly on Claude API calls should calculate the break-even point: at what volume do savings justify slightly lower quality?
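A rough break-even model makes that question concrete. The sketch below assumes the same token volume on both models, uses the article's price ratio ($2 versus $15 per million output tokens, which matches the input ratio of $0.40 versus $3.00), and treats extra review time as the only added cost. The review hours, the 10% increase, and the hourly rate in the example are hypothetical inputs.

```python
# Devstral's price as a fraction of Claude's, from the quoted prices.
PRICE_RATIO = 2.00 / 15.00


def monthly_api_savings(claude_monthly_spend: float) -> float:
    """API dollars saved per month by moving the same volume to Devstral."""
    return claude_monthly_spend * (1 - PRICE_RATIO)


def extra_review_cost(review_hours: float, review_increase: float, hourly_rate: float) -> float:
    """Added labor cost if review time grows by `review_increase` (0.10 = 10%)."""
    return review_hours * review_increase * hourly_rate


def net_monthly_savings(claude_monthly_spend: float, review_hours: float,
                        review_increase: float, hourly_rate: float) -> float:
    """API savings minus the added review cost."""
    return monthly_api_savings(claude_monthly_spend) - extra_review_cost(
        review_hours, review_increase, hourly_rate
    )


def break_even_spend(review_hours: float, review_increase: float, hourly_rate: float) -> float:
    """Monthly Claude spend at which API savings exactly cover the extra review."""
    return extra_review_cost(review_hours, review_increase, hourly_rate) / (1 - PRICE_RATIO)


if __name__ == "__main__":
    # Hypothetical team: $10,000/month on Claude, 400 review hours at $75/hour,
    # with review time growing 10% after the switch.
    print(round(net_monthly_savings(10_000, 400, 0.10, 75), 2))
    print(round(break_even_spend(400, 0.10, 75), 2))
```

Below the break-even spend, the added review time costs more than the API savings recover; above it, the switch pays for itself under these assumptions.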

Skip Devstral 2 for mission-critical code where the 5-percentage-point accuracy gap matters. Financial systems, medical software, security implementations, and complex architectural decisions justify paying 7x for Claude’s superior reasoning. The “significantly preferred” language in Mistral’s announcement isn’t marketing fluff; it’s a warning that quality differences are real.

Hybrid strategies work best. Use Devstral for drafts and boilerplate, Claude for review and complex logic. This mirrors how developers already work: quick iteration with tools, careful review for production. When you match each model to appropriate tasks, the cost-performance trade-off becomes a feature, not a bug.

Devstral 2 isn’t a Claude killer. It’s a cost optimizer for teams willing to accept minor quality trade-offs at scale. For high-volume coding tasks where a 72% SWE-bench Verified score suffices, prices roughly seven times lower make the math compelling.
