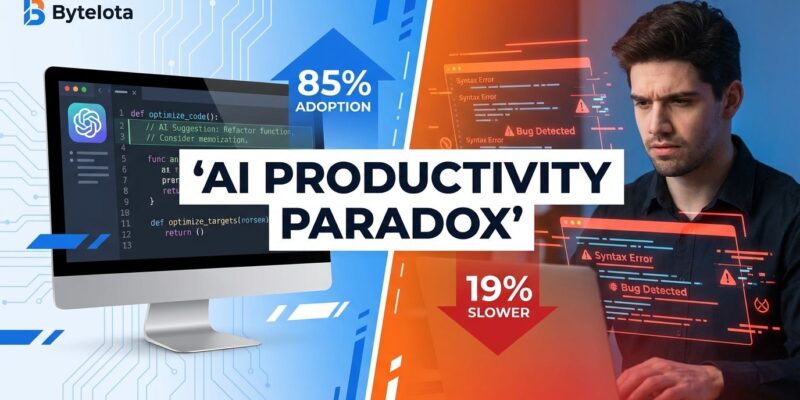

Read alongside other 2025 research, JetBrains’ 2025 Developer Ecosystem survey reveals a troubling contradiction. 85% of developers now use AI coding tools regularly, and nearly 90% report saving at least an hour a week. Yet a randomized controlled trial found that experienced developers were 19% slower when using AI assistance. Meanwhile, an analysis of pull requests found that AI-generated code carries 1.7 times as many issues as human-written code. In Stack Overflow’s developer survey, favorable sentiment toward AI tools fell from over 70% in 2023 and 2024 to 60% in 2025.

This isn’t a statistical anomaly. It’s hard evidence that the AI productivity revolution is more complicated – and less beneficial – than vendor marketing suggests.

The Numbers Tell a Different Story

JetBrains surveyed 24,534 developers across 194 countries between April and June 2025. The data shows AI tools have become ubiquitous: 62% rely on at least one AI coding assistant or editor. Widely cited industry estimates put the share of newly written code that is AI-generated at around 41%. Nearly 90% of developers say they save at least one hour weekly, and one in five claims to save eight hours or more, a full workday.

But when researchers actually measured developer performance, the results contradicted these perceptions. METR ran a randomized controlled trial in which 16 experienced open-source developers worked through 246 tasks in repositories they knew well. Tasks where AI tools such as Cursor were allowed took 19% longer than tasks where they were not. Developers expected AI to speed them up by 24%. Remarkably, after experiencing the slowdown, they still believed it had made them 20% faster. That leaves a 39-point gap between perceived and measured speed, and a 43-point gap between their original forecast and reality.
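The size of the gap depends on which belief you compare against the measurement. The sketch below works through it using only the METR figures quoted above. The sign convention (positive means faster, negative means slower) and the helper name `gap_points` are our own choices for illustration.

```python
# METR figures as quoted in the article, expressed as percent speed change:
# positive = faster with AI, negative = slower with AI.
forecast_speedup = 24.0    # what developers expected before the study
perceived_speedup = 20.0   # what developers believed afterwards
measured_speedup = -19.0   # what was measured: 19% slower


def gap_points(believed: float, measured: float) -> float:
    """Distance in percentage points between a belief and the measurement."""
    return believed - measured


print(f"perceived vs measured: {gap_points(perceived_speedup, measured_speedup):.0f} points")
print(f"forecast vs measured:  {gap_points(forecast_speedup, measured_speedup):.0f} points")
```

Comparing the after-the-fact belief with the measurement gives 39 points. Comparing the original forecast gives 43 points.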

The quality picture is even worse. CodeRabbit analyzed 470 real-world open-source pull requests in December 2025. AI-generated PRs averaged 10.83 issues each, compared with 6.45 for human-written PRs, a 1.7x multiplier. The problems span every quality dimension: logic and correctness defects increased 75%, security vulnerabilities rose 1.5 to 2x, readability problems tripled, and performance inefficiencies appeared 8x more often.
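The headline multiplier follows directly from CodeRabbit's two per-PR averages. The quick Python check below also shows how a "rose by N%" figure maps onto an "Nx" multiplier. The helper `as_multiplier` is a hypothetical name used only here.

```python
# CodeRabbit's reported averages: issues flagged per pull request.
ai_issues_per_pr = 10.83
human_issues_per_pr = 6.45


def as_multiplier(percent_increase: float) -> float:
    """Convert a 'rose by N%' figure into an 'Nx' multiplier."""
    return 1 + percent_increase / 100


ratio = ai_issues_per_pr / human_issues_per_pr
extra_pct = (ratio - 1) * 100

print(f"AI/human ratio: {ratio:.2f}x")                      # about 1.68x, reported as 1.7x
print(f"AI PRs carry {extra_pct:.0f}% more issues")          # roughly 68% more
print(f"'logic defects up 75%' = {as_multiplier(75):.2f}x")  # 1.75x
```

A 1.7x multiplier and a 70% increase describe the same change. That makes the per-category figures easy to compare with the headline number.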

Why Developers Feel Faster But Measure Slower

The perception gap has plausible psychological and workflow explanations. AI tools may tap into the brain’s reward system. An instant suggestion can feel as satisfying as closing a ticket or fixing a failing test, delivering a sense of achievement without the heavy lifting. That feels like progress, even when it isn’t.

Workflow shifts compound the problem. AI saves time on routine tasks like boilerplate generation and documentation, and those savings are easy to notice. The real costs hide in the review and testing phases. The METR study found developers spent 9% of total task time specifically reviewing and modifying AI-generated code. Low AI reliability forces constant double-checking. That validation overhead can cancel out, or even outweigh, the speed gained from faster initial code generation.

Faros AI’s team-level analysis reveals another bottleneck. Teams with high AI adoption complete 21% more tasks and merge 98% more pull requests, but their PR review time increases 91%. Human approval, not code generation, becomes the constraint. Critically, these team-level gains don’t carry through to the organization. Faros found no significant correlation between AI adoption and company-level improvements in overall throughput, DORA metrics, or quality KPIs.
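A toy pipeline model shows why review becomes the constraint. Merged throughput can never exceed the slower of two stages: authoring and human review. The sketch below assumes a fixed weekly reviewer budget, and all numbers are made up for illustration. It is not Faros's methodology; the `Team` class and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Team:
    prs_opened_per_week: float     # authoring rate, the stage AI speeds up
    review_hours_per_pr: float     # human review effort per PR
    review_hours_available: float  # reviewer time budgeted per week

    def review_capacity(self) -> float:
        """PRs the reviewers can approve per week."""
        return self.review_hours_available / self.review_hours_per_pr

    def merged_per_week(self) -> float:
        """Throughput is capped by the slower stage."""
        return min(self.prs_opened_per_week, self.review_capacity())

    def backlog_growth_per_week(self) -> float:
        """PRs added to the review queue each week (0 if reviewers keep up)."""
        return max(0.0, self.prs_opened_per_week - self.review_capacity())


# Hypothetical numbers: AI doubles the authoring rate, review budget unchanged.
before = Team(prs_opened_per_week=20, review_hours_per_pr=1.0, review_hours_available=30)
after = Team(prs_opened_per_week=40, review_hours_per_pr=1.0, review_hours_available=30)

for label, team in (("before AI", before), ("after AI", after)):
    print(f"{label}: merged={team.merged_per_week():.0f}/wk, "
          f"backlog growth={team.backlog_growth_per_week():.0f}/wk")
```

In this model, doubling the authoring rate raises merges by only 50%. The remainder piles up as a review queue that grows every week. Faster generation alone cannot fix that; only more review capacity or cheaper reviews can.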

Industry Shifts Beyond DORA Metrics

The productivity paradox is forcing the industry to rethink how it measures developer effectiveness. DORA metrics measure software delivery performance through signals such as deployment frequency and lead time for changes. Companies are looking beyond them, toward holistic productivity frameworks that also account for developer experience.

The JetBrains survey data shows why: 62% of developers cite non-technical factors like collaboration, communication, and clarity as critical to their performance, compared to just 51% citing technical factors. More telling, 66% of developers don’t believe current metrics reflect their real contribution.

New frameworks are emerging to address this gap. The DevEx framework focuses on three core dimensions: feedback loops, cognitive load, and flow state. Organizations with top-quartile DevEx scores reportedly achieve 4 to 5x higher engineering speed and quality than bottom-quartile performers. The SPACE framework measures developer productivity across five dimensions: satisfaction and well-being, performance, activity, communication and collaboration, and efficiency and flow. It captures not just how efficiently code moves through pipelines, but how sustainably and collaboratively it gets written.

Atlassian’s 2025 DevEx report found that 89% of software engineering leaders are actively working to improve developer experience. The reasoning behind the shift is that making productivity the sole focus backfires. Attending to developer experience tends to increase productivity while reducing churn.

What This Means for Developers

AI tools aren’t disappearing. With 85% adoption and 68% of developers expecting AI proficiency to become a job requirement, the question isn’t whether to use AI but how to use it effectively.

The JetBrains survey reveals what developers are most concerned about: 23% cite inconsistent code quality, 18% point to limited understanding of complex logic, 13% worry about privacy and security, 11% fear negative effects on their skills, and 10% note lack of context awareness. These concerns align with the measured quality problems.

Developers need to be strategic about when to rely on AI. The data shows AI helps with boilerplate generation, documentation, code comments, and learning new frameworks, and 65% report faster learning with AI assistance. Complex debugging, application logic design, security-critical code, and performance-sensitive sections are different. There, the quality risks are more likely to outweigh the speed benefits. CodeRabbit found security vulnerabilities 1.5 to 2x more common, and performance inefficiencies 8x more common, in AI-generated pull requests.

Code review becomes more critical, not less. The 9% of time spent validating AI suggestions is necessary overhead. Skipping it risks shipping the kinds of extra issues CodeRabbit measured. Teams should measure actual outcomes, not perceived productivity. If PR review times are climbing as sharply as the 91% increase Faros observed, or bug rates are rising, the AI productivity gains may be illusory.
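Measuring outcomes rather than impressions can start small: compare the same metrics before and after an AI rollout. The sketch below assumes a team can export per-PR review times and a defect rate per 100 merged PRs from its own tooling. The function names, inputs, and the 25% threshold are all hypothetical, not an industry standard.

```python
from statistics import median


def pct_change(before: float, after: float) -> float:
    """Percent change from a baseline value to a current value."""
    return (after - before) / before * 100


def outcome_warnings(baseline_review_hours: list[float],
                     current_review_hours: list[float],
                     baseline_defects_per_100_prs: float,
                     current_defects_per_100_prs: float,
                     threshold_pct: float = 25.0) -> list[str]:
    """Flag outcome regressions after an AI tooling rollout.

    Inputs are hypothetical exports from a team's own tooling: per-PR
    review times in hours, and defects traced back to merged PRs.
    threshold_pct is an arbitrary cut-off chosen for illustration.
    """
    # Median is less sensitive than the mean to a few very slow reviews.
    review_change = pct_change(median(baseline_review_hours),
                               median(current_review_hours))
    defect_change = pct_change(baseline_defects_per_100_prs,
                               current_defects_per_100_prs)
    warnings = []
    if review_change > threshold_pct:
        warnings.append(f"median review time up {review_change:.0f}%")
    if defect_change > threshold_pct:
        warnings.append(f"defect rate up {defect_change:.0f}%")
    return warnings


# Made-up sample data: review times doubled, defect rate rose 10%.
print(outcome_warnings([2, 3, 4], [5, 6, 7], 4.0, 4.4))
```

With this sample data, only the review-time warning fires. The defect rate rose 10%, which stays under the illustrative threshold. A real team would pick its own metrics and thresholds.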

Tool choice matters too. ChatGPT usage dropped from 49% to 41% between 2024 and 2025, while GitHub Copilot held steady at 30%. Many developers pair the two. They use ChatGPT for exploration, debugging, and understanding complex problems, and Copilot for in-IDE autocomplete and routine coding tasks. That division reflects their strengths: Copilot for doing, ChatGPT for thinking.

The Road Ahead

The 2025 data paints a clear picture: AI coding tools are powerful but not magic. Widespread adoption doesn’t guarantee productivity gains, especially when measured rigorously. Quality concerns are real and quantifiable. And the gap between how developers feel and how they actually perform suggests we’re still learning to integrate these tools effectively.

The industry response – shifting from pure speed metrics to developer experience frameworks – acknowledges this reality. Success requires thoughtful integration, rigorous code review, focus on quality over speed, and honest measurement of actual outcomes. The AI productivity revolution is happening, but it’s messier and more nuanced than the marketing suggested.