Google Cloud launched fully managed Model Context Protocol (MCP) servers on December 10, 2025, making Google Maps, BigQuery, Compute Engine, and Kubernetes Engine accessible to AI agents at no extra cost. The move cuts agent setup time from weeks to minutes through simple URL activation, positioning Google as “agent-ready by design” while intensifying pressure on AWS and Azure to match its MCP support.

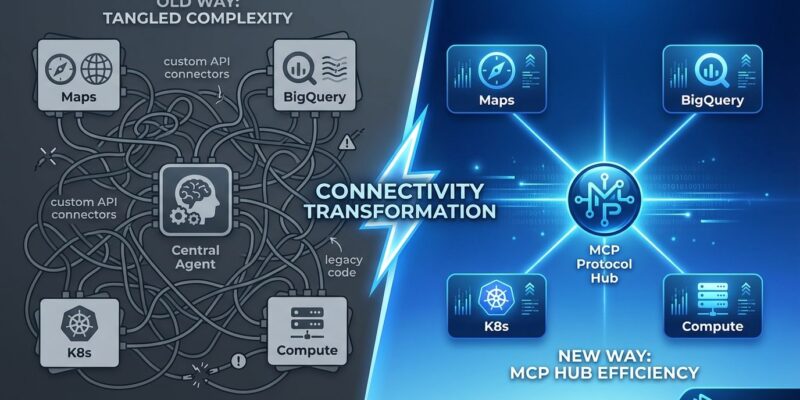

This is Google’s strategic bet on Anthropic’s open MCP standard over proprietary solutions, potentially standardizing how AI agents connect to enterprise cloud services. For developers, it eliminates the “N×M integration problem” of building custom connectors for each service—paste a URL, configure IAM permissions, and agents can immediately query data, manage infrastructure, and combine multiple Google services in single workflows.

What Google Launched: Zero Setup, Zero Cost

Google announced fully-managed, remote MCP servers for four services at launch—Maps, BigQuery, Compute Engine, and Kubernetes Engine—with 20+ more coming in Q1 2026. The servers are free for existing enterprise Google Cloud customers and protected by IAM permissions plus Model Armor, an agentic firewall defending against prompt injection and data exfiltration.

“We are making Google agent-ready by design,” said Steren Giannini, Google Cloud’s Product Management Director. Setup time drops from 1-2 weeks (custom integration) to minutes (paste MCP URL). Developers can build agents that query BigQuery for revenue forecasts while cross-referencing Maps for location intelligence—all in one workflow.
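As a rough illustration of that kind of combined workflow, the Python sketch below joins revenue rows from one MCP server with coordinates from another. Everything specific in it is an assumption made for illustration: the server labels, the tool names `run_query` and `geocode`, their argument shapes, and the stubbed responses are hypothetical and are not taken from Google's documentation. The `call_tool` function stands in for a real MCP client so the example runs offline.

```python
from typing import Any, Callable

# Hypothetical canned responses standing in for two remote MCP servers.
# Real servers would be reached through an MCP client; the tool names and
# payload shapes here are illustrative assumptions, not Google's actual tools.
FAKE_SERVERS: dict[str, dict[str, Callable[[dict[str, Any]], Any]]] = {
    "bigquery": {
        "run_query": lambda args: [
            {"store": "Austin", "forecast_usd": 120_000},
            {"store": "Denver", "forecast_usd": 95_000},
        ],
    },
    "maps": {
        "geocode": lambda args: {
            "Austin": (30.27, -97.74),
            "Denver": (39.74, -104.99),
        }[args["address"]],
    },
}


def call_tool(server: str, tool: str, arguments: dict[str, Any]) -> Any:
    """Dispatch a tool call to the stubbed server (stand-in for an MCP client)."""
    return FAKE_SERVERS[server][tool](arguments)


def forecast_with_locations(sql: str) -> list[dict[str, Any]]:
    """Query forecasts, then attach coordinates to each row in one workflow."""
    rows = call_tool("bigquery", "run_query", {"sql": sql})
    enriched = []
    for row in rows:
        lat, lng = call_tool("maps", "geocode", {"address": row["store"]})
        enriched.append({**row, "lat": lat, "lng": lng})
    return enriched


if __name__ == "__main__":
    for row in forecast_with_locations("SELECT store, forecast_usd FROM forecasts"):
        print(row)
```

The point of the sketch is the shape of the agent code: both services are reached through the same `call_tool` interface, so adding a third service means adding another server entry rather than another bespoke connector.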

The timing isn’t coincidental. Gemini 3 launched with advanced reasoning capabilities, but reasoning without tools is like a brain without hands. Google’s managed MCP servers unlock Gemini 3’s full potential by providing reliable, standardized connections to real-world data and operations.

The N×M Problem MCP Solves

MCP (Model Context Protocol) is an open standard created by Anthropic in November 2024 to standardize AI-tool integration. Before MCP, developers built custom connectors for each service: N agents × M tools = N×M integrations. MCP collapses this. Each agent implements one MCP client and each tool exposes one MCP server, so the work grows as N+M rather than N×M. Learn the standard once, connect to any MCP server.
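One common way to make this arithmetic concrete is to count what has to be built on each side. With point-to-point connectors, every agent needs its own integration for every tool. With a shared protocol, each agent implements one client and each tool exposes one server. The short Python sketch below compares the two counts; the agent and tool numbers are illustrative, not figures from Google.

```python
def custom_connectors(num_agents: int, num_tools: int) -> int:
    """Point-to-point: every agent needs its own connector for every tool."""
    return num_agents * num_tools


def mcp_components(num_agents: int, num_tools: int) -> int:
    """Shared protocol: one MCP client per agent plus one MCP server per tool."""
    return num_agents + num_tools


if __name__ == "__main__":
    # Illustrative sizes: a few agents against a small and a larger tool catalog.
    for agents, tools in [(3, 4), (5, 24)]:
        print(
            f"{agents} agents, {tools} tools: "
            f"{custom_connectors(agents, tools)} custom connectors vs "
            f"{mcp_components(agents, tools)} MCP clients and servers"
        )
```

The gap widens as either number grows, which is why the savings matter most to teams running several agents against a large service catalog.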

Anthropic describes MCP as “USB-C for AI”: any AI model connects to any data source regardless of where it’s hosted. Adoption moved fast. OpenAI integrated MCP in March 2025 (ChatGPT, Agents SDK), Google committed in April 2025, and Microsoft followed with Azure AI Foundry. By December 2025, all major cloud providers supported it.

Developers waste weeks building and maintaining custom connectors, and MCP eliminates that overhead. Standardization means agents can be multi-LLM (swap Claude for ChatGPT without rewriting integrations) and tools are portable across platforms.

Open Standard Over Proprietary: Google’s Calculated Bet

Google could’ve built a proprietary “Google Agent Protocol”—they didn’t. That tells you something. Instead, Google chose Anthropic’s open MCP standard, coinciding with MCP’s donation to the Linux Foundation (Agentic AI Foundation) in December 2025. This makes Google MCP servers compatible with Claude, ChatGPT, and Gemini—any MCP client works with any MCP server.

Giannini tested Google’s MCP servers with Anthropic’s Claude and OpenAI’s ChatGPT as clients: “They just work.” The timing is strategic—MCP under Linux Foundation governance reduces vendor lock-in fears, making Google’s adoption safer for enterprises hesitant about proprietary standards.

This signals Google believes open standards will win in the agent ecosystem, like HTTP and USB-C did. Furthermore, it puts competitive pressure on AWS Bedrock and Azure AI Foundry—if MCP becomes the de facto standard, they must support it or risk losing agent developers to Google’s simpler, managed offering.

AWS and Azure Must Respond

AWS and Azure technically support MCP, but Google’s “paste a URL and you’re done” approach makes theirs feel like homework. AWS Bedrock AgentCore offers MCP server deployment via custom code or Marketplace. Azure AI Foundry provides an Azure MCP Server but not fully-managed endpoints for all services. Google’s advantage: paste https://mcp.googleapis.com/bigquery, configure IAM, done.

The comparison matters. AWS requires deploying MCP servers to AgentCore Runtime and configuring Lambda functions or APIs. Azure provides an MCP Server for Cosmos DB, SQL, and SharePoint but still requires manual setup. Google’s fully managed URLs eliminate infrastructure management entirely: zero servers to run, zero maintenance overhead.
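To give a sense of what "pasting a URL" amounts to on the wire, here is a minimal Python sketch that builds, but does not send, a `tools/list` request. MCP messages are JSON-RPC 2.0, and `tools/list` is the method a client uses to discover a server's tools. The endpoint is the one quoted above and should be treated as illustrative. The bearer-token header is an assumption about how IAM credentials would be presented, and the token value is a placeholder. A real session would begin with an `initialize` handshake, which is omitted here, and most developers would use an MCP client library rather than raw HTTP.

```python
import json
import urllib.request

# Endpoint as written in the article; treat it as illustrative.
BIGQUERY_MCP_URL = "https://mcp.googleapis.com/bigquery"


def build_tools_list_request(
    url: str, access_token: str, request_id: int = 1
) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 2.0 `tools/list` request."""
    payload = {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}
    headers = {
        "Content-Type": "application/json",
        # MCP's streamable HTTP transport lets a server reply with JSON or SSE.
        "Accept": "application/json, text/event-stream",
        # Assumption: IAM-backed access is presented as an OAuth bearer token.
        "Authorization": f"Bearer {access_token}",
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    req = build_tools_list_request(BIGQUERY_MCP_URL, access_token="PLACEHOLDER_TOKEN")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Nothing in the request is specific to BigQuery except the URL, so pointing the same function at another MCP server would only require changing that one argument.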

Google’s “managed + free” model creates competitive pressure. If developers choose Google for ease-of-use, AWS and Azure may need to offer equivalent managed servers or lose agent market share. This could accelerate MCP as the industry standard, forcing competitors to follow Google’s lead rather than fragment the ecosystem with proprietary alternatives.

Key Takeaways

- Google’s managed MCP servers reduce agent setup from weeks to minutes, eliminating the N×M integration problem developers face when connecting AI agents to cloud services

- Free access for enterprise customers creates a competitive moat: AWS and Azure now face pressure to match Google’s managed offering or risk losing developers to superior ease of use

- Open standard governance via Linux Foundation makes MCP the emerging industry default, with Anthropic, OpenAI, Google, Microsoft, and AWS all committed

- Multi-LLM compatibility unlocks flexible agent strategies—the same MCP server works with Claude, ChatGPT, and Gemini without code changes

- Security built-in (IAM + Model Armor) addresses enterprise concerns about prompt injection and data exfiltration that plagued earlier MCP implementations

Google’s bet on Anthropic’s open standard instead of a proprietary protocol signals where the industry is heading. The smart money is on MCP becoming the HTTP of AI agent connectivity—ubiquitous, standardized, and vendor-neutral. Developers asking “Should I build custom integrations or use MCP?” are asking the wrong question. The question is: Can you afford to spend weeks on what Google just made free?