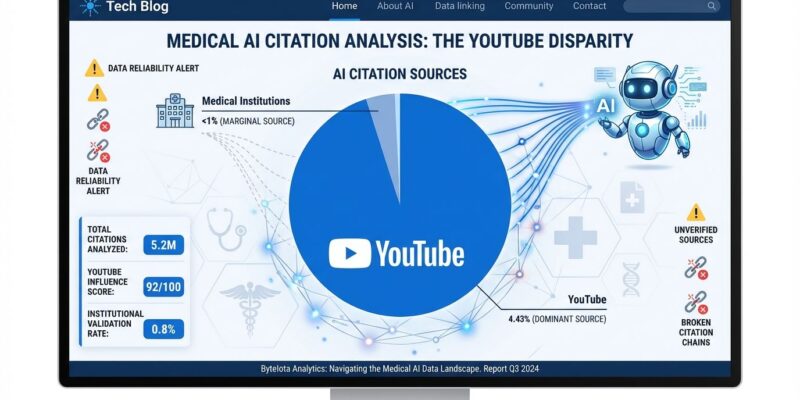

Google’s AI Overviews cite YouTube videos more than any hospital, academic institution, or government health portal for medical queries. SE Ranking’s January 2026 study of 50,807 health searches found YouTube accounts for 4.43% of all AI citations—20,621 mentions—while academic journals and government health sites combined represent less than 1%. Only 34% of citations come from reliable medical sources. Following The Guardian’s investigation that exposed dangerous medical misinformation, Google quietly removed AI Overviews for certain health queries this week, but the problem runs far deeper than one company’s search engine.

This isn’t a Google bug—it’s an AI architecture crisis. YouTube’s massive content volume (500+ hours uploaded every minute) gives it a statistical advantage in training datasets, but popularity doesn’t equal accuracy. The training data democracy paradox: viral content wins the citation lottery, and patients lose.

YouTube Ranks #1 in AI Citations, Academic Journals <1%

YouTube dominates AI health citations despite ranking 11th in traditional Google search results. The disconnect is staggering: YouTube appears 5,464 times in organic “blue link” results but jumps to 20,621 AI Overview citations—nearly 4x the frequency. Meanwhile, academic journals, government health portals, and hospital networks combined account for roughly 1% of AI citations.

The SE Ranking study analyzed 465,823 total citations from AI Overviews and found 65.55% come from sources “not designed to ensure medical accuracy or evidence-based standards.” Systematic reviews confirm YouTube health content is “average to below-average quality,” with only 32% of health videos showing neutral, unbiased information. The kicker: 73.9% of health content from medical advertisements and for-profit organizations is outright misleading.
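The headline figures can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers quoted in this article; the variable names are illustrative and not taken from the SE Ranking study.

```python
# Figures quoted in this article (SE Ranking study of AI Overviews).
total_citations = 465_823       # all AI Overview citations analyzed
youtube_ai_citations = 20_621   # YouTube citations inside AI Overviews
youtube_organic = 5_464         # YouTube appearances in organic results
unreliable_share = 65.55        # % of citations from sources not built for medical accuracy

# YouTube's share of all AI citations, as a percentage.
youtube_share = youtube_ai_citations / total_citations * 100

# How many times more often YouTube is cited by AI than it appears organically.
ai_vs_organic = youtube_ai_citations / youtube_organic

# Remaining share attributed to more reliable source groups.
reliable_share = 100 - unreliable_share

print(f"YouTube share of AI citations: {youtube_share:.2f}%")
print(f"AI citations vs. organic appearances: {ai_vs_organic:.2f}x")
print(f"Share from more reliable sources: {reliable_share:.2f}%")
```

The results line up with the reported 4.43% share, the "nearly 4x" jump, and the roughly 34% reliable-source figure.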

AI Overviews now appear on more than 82% of health searches, so most users see an AI-synthesized answer, not an authoritative source, as their first impression. Google’s citation algorithm prioritizes engagement metrics and content volume over editorial authority, a design flaw that scales misinformation rather than facts.

Pancreatic Cancer, Vaginal Cancer: AI Gets It Wrong

The Guardian investigation, published January 2, exposed dangerous medical advice with real-world consequences. Google’s AI told pancreatic cancer patients to avoid high-fat foods—the exact opposite of correct guidance. Patients need high-fat diets to tolerate chemotherapy and surgery. Following this AI advice could jeopardize survival.

Another query for “vaginal cancer symptoms and tests” returned an AI summary claiming Pap tests detect vaginal cancer. Athena Lamnisos, CEO of The Eve Appeal, called this “completely incorrect.” Pap tests screen for cervical cancer only. Relying on this misinformation could delay life-saving diagnosis.

The liver function test queries proved equally troubling. AI Overviews presented numerical ranges without critical context about age, sex, ethnicity, medications, and underlying conditions. Vanessa Hebditch, Director of Communications at the British Liver Trust, noted people can have normal test results while requiring urgent medical intervention for serious liver disease—but AI summaries omit this nuance entirely.

Google removed AI Overviews for specific liver-related searches following the investigation, but researchers found slightly different search terms still trigger the same dangerous summaries. Worse: repeating the same query at different times produces different AI answers pulling from different sources. As Hebditch put it: “People are getting a different answer depending on when they search. This is unacceptable.”

AI Search Engines Get More Than 60% of Queries Wrong

This isn’t a Google-only problem. Columbia University’s Tow Center tested eight AI search engines (ChatGPT Search, Perplexity, Perplexity Pro, Microsoft Copilot, Google Gemini, Grok-2, Grok-3, and DeepSeek Search) across 1,600 queries. Collectively, they returned incorrect answers to more than 60% of queries.

Google Gemini produced completely correct responses in only 10% of cases. More than half of Gemini’s citations were broken or fabricated URLs leading to error pages. ChatGPT Search linked to the wrong source 40% of the time and provided no citation in another 21% of cases—a combined 61% citation failure rate.

Perplexity, which brands itself as a tool for research, had the “best” performance with a 37% error rate. Still wrong more than one in three times. The Columbia researchers described AI citation practices as “buffing”—listing multiple sources to create an “impression of thoroughness without substance” while not actually citing them in generated answers. Citation precision is “concerningly low, with many generated citations not supporting their associated statements.”

Developer trust is already eroding. Stack Overflow’s 2025 Developer Survey found 46% of developers actively distrust AI tool accuracy, with sentiment falling for the first time. Only 3% report “high trust” in AI output.

Training Data Bias: Engagement Over Authority

The root cause is training data bias. LLMs are trained on internet-scale datasets where YouTube’s upload velocity gives it massive representation. Citation algorithms prioritize content volume and engagement metrics (views, likes, watch time) over editorial authority. There are no medical safeguards or evidence-based verification in the citation logic. The system can’t distinguish MDs from fitness influencers.

LLM hallucination research shows models repeating or elaborating on planted errors in up to 83% of cases. When training data is biased toward popular content, the AI amplifies those biases at scale. Fixing this requires fundamental architectural changes: authority-weighted citations, domain-specific training data with physician oversight, and retrieval-augmented generation (RAG) to improve accuracy.
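One way to picture authority-weighted citations is a re-ranking step that multiplies each retrieved source's relevance score by a weight for its source type. The sketch below is a minimal illustration under stated assumptions, not a description of how any search engine works: the `Source` class, the source-type labels, the weight values, and the example URLs are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical authority weights per source type. In a real system these
# would need to be set and reviewed by medical experts.
AUTHORITY_WEIGHTS = {
    "government": 1.0,
    "academic_journal": 1.0,
    "hospital": 0.9,
    "video_platform": 0.3,
}
DEFAULT_WEIGHT = 0.2  # assumed weight for unknown source types


@dataclass
class Source:
    url: str
    source_type: str
    relevance: float  # retrieval relevance score in [0, 1]


def rank_by_authority(sources, min_weight=0.0):
    """Sort sources by relevance * authority weight, highest first.

    Sources whose authority weight is below min_weight are dropped.
    """
    scored = []
    for source in sources:
        weight = AUTHORITY_WEIGHTS.get(source.source_type, DEFAULT_WEIGHT)
        if weight >= min_weight:
            scored.append((source.relevance * weight, source))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [source for _, source in scored]


# Usage: a highly "relevant" video is outranked by authoritative sources.
candidates = [
    Source("https://video.example/watch?v=abc", "video_platform", 0.95),
    Source("https://health.example.gov/liver-tests", "government", 0.70),
    Source("https://journal.example.org/article/1", "academic_journal", 0.60),
]
ranked = rank_by_authority(candidates)
for source in ranked:
    print(source.source_type, source.url)
```

With these assumed weights the video drops from first to last place, even though its raw relevance score is the highest. Passing `min_weight=0.5` would exclude it from the citation pool entirely.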

Google’s removal of specific health queries treats symptoms, not causes. Until AI search implements source verification beyond engagement metrics, the citation crisis persists.
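Source verification can start with something as simple as a gate that checks where an answer's citations point before the answer is shown. The sketch below assumes a hand-maintained allowlist of trusted health domains; the domain list, function names, and threshold are illustrative choices, not a recommended policy or any vendor's implementation.

```python
from urllib.parse import urlparse

# Illustrative allowlist only; a real deployment would need a curated,
# expert-reviewed list.
TRUSTED_DOMAINS = {"nih.gov", "who.int", "nhs.uk"}


def is_trusted(url):
    """Return True if the URL's host is a trusted domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


def passes_quality_gate(citations, min_trusted_share=0.5):
    """Return True if enough of an answer's citations come from trusted domains.

    An answer with no citations at all is blocked.
    """
    if not citations:
        return False
    trusted = sum(is_trusted(url) for url in citations)
    return trusted / len(citations) >= min_trusted_share


# Usage: one trusted and one untrusted citation meets the 50% threshold.
answer_citations = [
    "https://www.nih.gov/health-information",
    "https://www.youtube.com/watch?v=example",
]
print(passes_quality_gate(answer_citations))
```

A domain check like this says nothing about whether the cited page actually supports the generated claim, so it is a first filter at best.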

Key Takeaways

- YouTube dominates AI health citations (4.43%) despite being 11th in organic search results

- Only 34% of AI citations come from reliable medical sources; 66% unreliable

- Across eight AI search engines tested, more than 60% of answers were incorrect; Google Gemini: 10% fully correct, 50%+ broken or fabricated links

- Training data bias favors viral content over verified expertise—engagement metrics beat editorial authority

- Developers shipping AI products need source verification, hallucination detection, and quality gates before deployment

- The EU AI Act takes full effect August 2026; regulatory pressure mounting on AI healthcare applications

- For high-stakes queries (medical, legal, financial), AI search is fundamentally unreliable