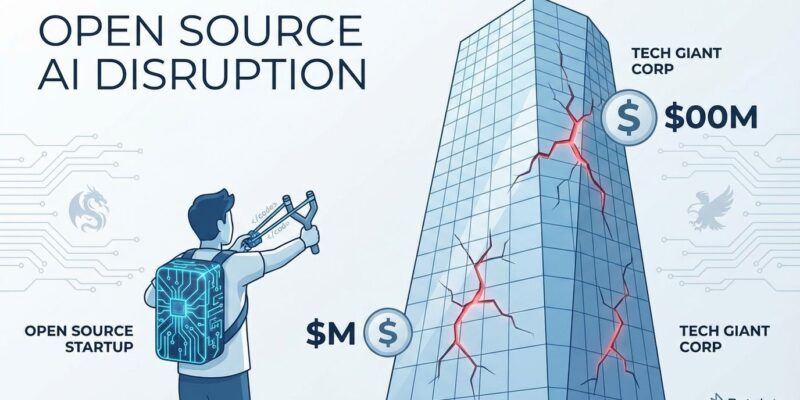

Chinese AI startup DeepSeek just released R1, an open-source reasoning model that matches OpenAI’s o1 on key benchmarks. The company claims it was trained for $6 million, which is 94% cheaper than GPT-4’s estimated $100 million cost. Released January 20, 2025, R1 is MIT-licensed, runs locally, and costs 27x less via API. But critics say DeepSeek’s total infrastructure investment approaches $1.6 billion. The disruption is real, even if the headline isn’t the full story.

Performance Parity at a Fraction of the Price

DeepSeek R1 matches OpenAI’s o1 on reasoning benchmarks. On AIME 2024, R1 scored 79.8% versus o1’s 79.2%. On MATH-500, R1 hit 97.3%, beating o1 by nearly a point. For code, R1 solved 49.2% of SWE-bench Verified problems versus o1’s 48.9%. General knowledge favors o1 slightly (91.8% vs. 90.8% on MMLU), but the gap is negligible.

The cost difference is staggering. DeepSeek’s API charges $2.19 per million output tokens. OpenAI’s o1 costs $60—27 times more. For developers building AI products, that pricing gap is existential.
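Here is a back-of-envelope comparison in Python that makes the gap concrete, using the two output-token prices quoted above. The 500-million-token monthly workload is a hypothetical figure. The sketch also ignores input tokens, which both providers bill separately at their own rates.

```python
# Output-token prices quoted in the text, in USD per million tokens.
R1_PRICE_PER_M = 2.19
O1_PRICE_PER_M = 60.00


def output_token_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD of generating `tokens` output tokens at a per-million price."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical workload: 500 million output tokens per month.
monthly_tokens = 500_000_000
r1_cost = output_token_cost(monthly_tokens, R1_PRICE_PER_M)
o1_cost = output_token_cost(monthly_tokens, O1_PRICE_PER_M)

print(f"DeepSeek R1: ${r1_cost:,.2f}/month")
print(f"OpenAI o1:   ${o1_cost:,.2f}/month")
print(f"Ratio:       {o1_cost / r1_cost:.1f}x")
```

At these prices, that workload costs about $1,095 a month on R1 and $30,000 on o1, which is where the roughly 27x figure comes from.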

The $6M Number Is Technically True—and Misleading

DeepSeek’s $6 million figure represents the marginal cost of the final training run: 55 days on 2,048 Nvidia H800 GPUs, plus a $294,000 RL phase. Accurate, but incomplete.
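A marginal figure like this is just GPU-hours multiplied by an hourly rate. The sketch below assumes a rental price of $2 per H800 GPU-hour, the rate DeepSeek's V3 technical report used for its own estimate. The variable and function names are illustrative. With the run length and RL cost given above, the result is roughly $5.7 million, consistent with the headline number.

```python
GPUS = 2_048              # Nvidia H800s used for the final run
DAYS = 55                 # wall-clock length of the run
RATE_PER_GPU_HOUR = 2.00  # assumed rental price in USD (not a purchase cost)
RL_PHASE_COST = 294_000   # reported cost of the RL phase in USD


def gpu_hours(gpus: int, days: int) -> int:
    """Total GPU-hours for `gpus` devices running around the clock for `days`."""
    return gpus * days * 24


def marginal_training_cost(gpus: int, days: int, rate: float, extra: float = 0.0) -> float:
    """GPU-hours times the hourly rate, plus any separately reported phase cost."""
    return gpu_hours(gpus, days) * rate + extra


hours = gpu_hours(GPUS, DAYS)
total = marginal_training_cost(GPUS, DAYS, RATE_PER_GPU_HOUR, RL_PHASE_COST)
print(f"GPU-hours: {hours:,}")                  # GPU-hours: 2,703,360
print(f"Estimated marginal cost: ${total:,.0f}")  # Estimated marginal cost: $5,700,720
```

Nothing in this calculation covers failed runs, hardware purchases, salaries, or data work, which is exactly the gap the critics point to.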

What it excludes: failed experiments, infrastructure build-out, team salaries, and data prep. SemiAnalysis investigated and found DeepSeek’s total investment approaches $1.6 billion, with ~50,000 Nvidia Hopper GPUs. Hardware acquisition alone exceeded $500 million.

Martin Vechev of INSAIT called it out: “The $6M cost is misleading because it represents only one training run. Developing such a model requires running this training many times, plus many other experiments.”

Even at $1.6 billion total, that’s a fraction of what Western AI labs are burning. The efficiency gains are undeniable.

Run Frontier AI on Your Laptop

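You can run DeepSeek R1 locally, right now, for free. With Ollama installed, it’s one command:

    ollama run deepseek-r1:7b

The 7B model runs on 8GB of VRAM, and the 1.5B version works on consumer laptops. Both are distilled models: smaller Qwen-based models fine-tuned on R1’s outputs. They are not the full 671-billion-parameter R1, which needs hundreds of gigabytes of memory. The weights are MIT-licensed, so you can use them commercially, modify them, and distill them.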
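Ollama also serves a local HTTP API, by default at http://localhost:11434, so the same model can be called from code. The Python sketch below posts to the `/api/generate` endpoint and splits the reply into reasoning and answer. It assumes the model's reasoning arrives inline between `<think>` and `</think>` tags. Depending on your Ollama version and settings, the reasoning may instead be returned in a separate field. The helper names `build_request`, `split_reasoning`, and `ask` are hypothetical.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def split_reasoning(text: str) -> tuple[str, str]:
    """Split R1-style output into (reasoning, answer).

    Assumes reasoning is wrapped in <think>...</think>; if no such block is
    present, the whole text is treated as the answer.
    """
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        return "", text.strip()
    return match.group(1).strip(), text[match.end():].strip()


def ask(prompt: str) -> tuple[str, str]:
    """Send a prompt to the local model and return (reasoning, answer)."""
    with urllib.request.urlopen(build_request(prompt), timeout=300) as resp:
        payload = json.load(resp)  # non-streaming reply is a single JSON object
    return split_reasoning(payload["response"])
```

With `ollama serve` running and the model pulled, `reasoning, answer = ask("What is 17 * 23?")` returns the chain of thought and the final answer as separate strings.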

For teams, the calculus shifts. Do you pay OpenAI $60 per million output tokens, or run a distilled R1 on your own hardware for the cost of electricity? That option exists now in a way it didn’t before.
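Whether local inference actually wins depends on throughput and power draw. This Python sketch converts both into an electricity cost per million tokens. The 40 tokens per second, 250 W, and $0.15/kWh inputs are hypothetical placeholders, not measurements. The estimate also leaves out hardware purchase and depreciation.

```python
def electricity_cost_per_million(tokens_per_second: float, watts: float, usd_per_kwh: float) -> float:
    """USD of electricity to generate one million tokens at a steady rate and power draw."""
    seconds = 1_000_000 / tokens_per_second  # time to produce 1M tokens
    kwh = (watts / 1000) * (seconds / 3600)  # energy consumed in kilowatt-hours
    return kwh * usd_per_kwh


# Hypothetical inputs: a 7B distill at 40 tokens/s on a GPU drawing 250 W, power at $0.15/kWh.
cost = electricity_cost_per_million(tokens_per_second=40, watts=250, usd_per_kwh=0.15)
print(f"Electricity: ${cost:.2f} per million tokens")
```

With those placeholder numbers, the result is about $0.26 per million tokens. Plug in your own measured throughput and wattage before drawing conclusions.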

What This Means for OpenAI’s $157B Valuation

At the time of R1’s release, OpenAI was valued at $157 billion and Anthropic at roughly $60 billion. Both valuations assume frontier AI requires massive capital and closed development.

DeepSeek challenges that. If an open-source model matches your flagship product at a fraction of the cost, what’s the moat? Why pay 27x more when an MIT-licensed alternative exists?

Critics allege DeepSeek used distillation—training on OpenAI’s outputs. DeepSeek V3 reportedly “frequently claims it is a model made by OpenAI,” suggesting it learned by imitating closed systems.

If true, that’s brutal: spend hundreds of millions on R&D, release via API, and watch competitors train on your outputs to ship cheaper alternatives. Your innovation gets commoditized before you recoup costs.

OpenAI and Anthropic can’t ignore this. The pricing pressure is real.

Pure Reinforcement Learning: The Technical Breakthrough

DeepSeek’s real innovation is methodology. Its precursor model, R1-Zero, showed that reasoning can be learned purely through reinforcement learning, without supervised fine-tuning.

Most models rely on human-labeled data for supervised fine-tuning. DeepSeek skipped that step. R1-Zero was trained through trial and error, with rule-based rewards for correct final answers and properly formatted output. Its AIME 2024 score rose from 15.6% to 71.0% through RL alone. Along the way, the model developed self-verification and chain-of-thought reasoning without explicit instruction.
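The Python sketch below shows what "rewards instead of labels" can look like. It is loosely modeled on two things: the accuracy and format rewards DeepSeek describes for R1-Zero, and the group-relative normalization used by GRPO, the RL algorithm DeepSeek reports using. Several details are assumptions for illustration: the `\boxed{}` answer convention, the reward weights, and the function names. The policy-gradient update that would consume these advantages is omitted.

```python
import re
import statistics

# Format check: reasoning inside <think>...</think>, followed by a non-empty answer.
FORMAT_RE = re.compile(r"^\s*<think>.+?</think>\s*\S", re.DOTALL)
# Accuracy check: the last \boxed{...} in the completion is taken as the final answer.
BOXED_RE = re.compile(r"\\boxed\{([^{}]*)\}")


def rule_based_reward(completion: str, reference: str) -> float:
    """Score a sampled completion with checkable rules instead of human labels.

    +1.0 if the final boxed answer matches the reference (accuracy reward).
    +0.5 if the reasoning is wrapped in <think>...</think> (format reward).
    The weights are illustrative assumptions.
    """
    reward = 0.0
    answers = BOXED_RE.findall(completion)
    if answers and answers[-1].strip() == reference.strip():
        reward += 1.0
    if FORMAT_RE.match(completion):
        reward += 0.5
    return reward


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """Normalize each reward against a group of samples for the same prompt.

    Above-average samples get a positive advantage (reinforced); below-average
    samples get a negative one (discouraged).
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0 for _ in rewards]  # identical rewards carry no learning signal
    return [(r - mean) / std for r in rewards]


# Four sampled completions for the prompt "What is 17 * 23?"
samples = [
    "<think>17*23 = 17*20 + 17*3 = 340 + 51</think> \\boxed{391}",
    "<think>guess</think> \\boxed{381}",
    "\\boxed{391}",
    "no answer",
]
rewards = [rule_based_reward(s, "391") for s in samples]  # [1.5, 0.5, 1.0, 0.0]
advantages = group_relative_advantages(rewards)
```

No human judged any step of the reasoning here. The signal comes only from whether the final answer checks out and whether the output follows the expected format, which is why this approach scales to large volumes of math and code problems.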

There were trade-offs. R1-Zero suffered from repetition and language mixing. For the final R1, DeepSeek first fine-tuned on a small set of curated long chain-of-thought examples, called "cold-start data," before running RL. But the proof stands: you can teach models to reason through incentives, not just labeled examples.

The Era of Commoditized AI

DeepSeek R1 proves open-source can compete at the frontier. The $6 million cost is marginal, not total, but the efficiency gains are real. API pricing is 27x cheaper. Performance matches OpenAI o1. Developers can run distilled versions locally, for free, under the MIT license.

Closed-source AI business models face pricing pressure. The assumption that frontier AI requires unlimited capital is cracking. Total investment may be $1.6 billion, not $6 million—but that’s still a fraction of Big Tech spending.

The question for OpenAI, Anthropic, and others: what’s your competitive advantage when performance commoditizes?