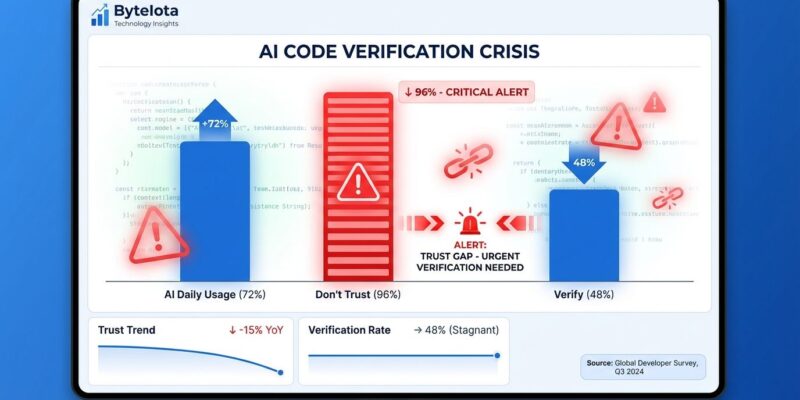

Sonar’s State of Code Developer Survey 2026, released last week (January 8) and based on responses from more than 1,100 professional developers, exposes a dangerous paradox. Seventy-two percent of developers now use AI coding tools daily, and AI accounts for 42% of all committed code, yet 96% don’t fully trust AI output and only 48% always verify it before committing. Developers are shipping code they don’t trust. This creates what AWS CTO Werner Vogels calls “verification debt”: the burden of rebuilding code comprehension during review rather than gaining it naturally through creation. AI-generated code is projected to reach 65% by 2027, so the verification bottleneck, not generation speed, will define software quality and velocity.

The Verification Bottleneck Replaces Code Generation

The AI productivity narrative collapses under scrutiny. AI can generate code as much as 10x faster than humans, yet overall engineering velocity gains hover around 10-20%, nowhere near the promised transformation. The gap? Writing code is only one slice of delivery, and verification consumes most of the generation savings. Moreover, 38% of developers report that reviewing AI code takes more effort than reviewing human-written code. Only 48% always verify before committing, so time pressure means roughly half of developers sometimes ship AI code without thorough verification.
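
Amdahl’s law explains why a 10x generation speedup yields only a modest end-to-end gain: it speeds up one slice of the work and leaves the rest untouched. The sketch below is illustrative arithmetic, not survey data. The coding shares (15% and 20% of engineering time) and the extra review load (5% of the original schedule) are hypothetical inputs.

```python
def overall_speedup(coding_share: float, coding_speedup: float,
                    extra_review_share: float = 0.0) -> float:
    """Amdahl's-law estimate of end-to-end delivery speedup.

    coding_share       -- fraction of total engineering time spent writing code (assumed)
    coding_speedup     -- how much faster that slice becomes (10.0 means "10x")
    extra_review_share -- added verification time, as a fraction of the original total
    """
    if not 0.0 <= coding_share <= 1.0:
        raise ValueError("coding_share must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    # New total time relative to the old one: untouched work + faster coding + extra review.
    new_time = (1.0 - coding_share) + coding_share / coding_speedup + extra_review_share
    return 1.0 / new_time


# Hypothetical inputs: coding is 15-20% of the week and AI makes it 10x faster.
for share in (0.15, 0.20):
    no_extra = overall_speedup(share, 10.0) - 1.0
    with_review = overall_speedup(share, 10.0, extra_review_share=0.05) - 1.0
    print(f"coding share {share:.0%}: gain {no_extra:.1%}, "
          f"with 5% extra review {with_review:.1%}")
```

With these made-up inputs, the gain lands at roughly 15-22%. Adding review time equal to 5% of the original schedule pulls it down to roughly 9-15%. Even an infinitely fast generator could not beat `1 / (1 - coding_share)`, which is 1.25x when coding is 20% of the work.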

The numbers tell a stark story: AI code share has exploded from 6% in 2023 to 42% in 2026, and developers expect it to hit 65% by 2027. However, the trust-to-verification gap is widening, not closing. As AI share grows, teams that haven’t addressed verification will drown in technical debt and security vulnerabilities. Consequently, the bottleneck has shifted downstream from creation to review, and most organizations aren’t ready for it.

Verification Debt: Why AI Code Review Is Genuinely Harder

Vogels’ “verification debt” concept reframes the entire debate. When you write code yourself, comprehension comes naturally through the creative process—you understand architectural decisions, edge cases, and trade-offs because you made them. In contrast, when AI writes code, you must rebuild that comprehension retroactively, which is cognitively expensive. This isn’t developers being lazy or careless—verification is genuinely harder.

Review times back this up. Senior developers spend 4.3 minutes reviewing each AI suggestion, compared with 1.2 minutes for junior developers. But here’s the problem: juniors aren’t better at verification; they’re worse. They spend less time because they lack the experience to spot subtle issues. Meanwhile, seniors become “AI babysitters,” overwhelmed by the volume of AI-generated code that juniors submit. The 40% productivity gains juniors report flow straight into those senior review queues. That verification burden won’t scale as AI code share climbs toward 65%.
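
The per-suggestion figures above convert directly into reviewer hours. The following back-of-the-envelope sketch rests on three hypothetical assumptions:

- The team produces 200 junior-authored AI suggestions a week.
- A senior eventually reviews every one of them at the quoted 4.3-minute pace.
- The reported 40% junior productivity gain shows up as 40% more suggestions.

```python
SENIOR_MINUTES_PER_SUGGESTION = 4.3  # figure quoted above
JUNIOR_MINUTES_PER_SUGGESTION = 1.2  # figure quoted above


def review_hours(suggestions: float, minutes_each: float) -> float:
    """Total reviewer hours for a batch of AI suggestions."""
    return suggestions * minutes_each / 60.0


def senior_hours_after_junior_boost(base_suggestions: float, junior_gain: float) -> float:
    """Senior review hours if juniors produce `junior_gain` more suggestions
    and (by assumption) seniors review every one of them."""
    return review_hours(base_suggestions * (1.0 + junior_gain), SENIOR_MINUTES_PER_SUGGESTION)


weekly = 200  # hypothetical junior-authored AI suggestions per week
print(f"junior self-review: {review_hours(weekly, JUNIOR_MINUTES_PER_SUGGESTION):.1f} h")
print(f"senior review:      {review_hours(weekly, SENIOR_MINUTES_PER_SUGGESTION):.1f} h")
print("senior review after a 40% junior output gain: "
      f"{senior_hours_after_junior_boost(weekly, 0.40):.1f} h")
```

Under those assumptions, senior review climbs from about 14.3 to about 20.1 hours a week. The juniors’ own 1.2-minute passes account for just 4 hours.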

The Register captures it perfectly: “Value is no longer defined by the speed of writing code, but by the confidence in deploying it.” Speed without confidence is technical debt on credit.

Where AI Actually Works (And Where It Fails)

Not all AI coding is created equal, and the effectiveness data reveals a clear pattern: AI excels as an “explainer” but struggles as a “maintainer.” Documentation generation earns the highest rating, at 74% effectiveness, followed by code explanation at 66% and test generation at 59%. These are descriptive, lower-risk tasks where verification is straightforward.

Then the cliff arrives. While 90% of developers use AI for new code development, only 55% find it “extremely or very effective,” a 35-point gap between usage and satisfaction. Refactoring performs worst, at just 43% effectiveness despite 72% usage. Why? AI tools lack system-wide context, so they can break subtle dependencies and degrade performance. The result is code that “works” in isolation but fails in integration.

Smart teams will match AI usage to task effectiveness. Use AI heavily for documentation and greenfield prototypes. Verify thoroughly for new features. Avoid or minimize AI for refactoring and security-critical code. The 43% refactoring rating isn’t a suggestion; it’s a warning that for maintenance work, verification costs can exceed generation savings.
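
One way to encode that matching is a small policy table keyed on the effectiveness ratings quoted above. The following choices are hypothetical and serve only to reproduce the grouping in the preceding paragraph:

- the task names;
- the two thresholds;
- the rule that security-critical work always falls to the most restrictive tier.

```python
from enum import Enum


class Policy(Enum):
    USE_FREELY = "use heavily, spot-check output"
    VERIFY = "use, but verify thoroughly"
    MINIMIZE = "avoid or minimize AI"


# Effectiveness ratings as quoted in this article.
EFFECTIVENESS = {
    "documentation": 0.74,
    "code_explanation": 0.66,
    "test_generation": 0.59,
    "new_code": 0.55,
    "refactoring": 0.43,
}

# Hypothetical cut-offs, chosen only to reproduce the grouping described above.
USE_FREELY_MIN = 0.58
VERIFY_MIN = 0.50


def policy_for(task: str, security_critical: bool = False) -> Policy:
    """Map a task type to an AI-usage policy; security-critical work is always restricted."""
    if security_critical:
        return Policy.MINIMIZE
    rating = EFFECTIVENESS.get(task)
    if rating is None:
        return Policy.VERIFY  # unknown task types default to thorough review
    if rating >= USE_FREELY_MIN:
        return Policy.USE_FREELY
    if rating >= VERIFY_MIN:
        return Policy.VERIFY
    return Policy.MINIMIZE


for task in EFFECTIVENESS:
    print(f"{task:<17} -> {policy_for(task).value}")
print(f"{'new_code (auth)':<17} -> {policy_for('new_code', security_critical=True).value}")
```

Unknown task types default to thorough verification, which errs on the side of review rather than trust.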

The Security and Governance Crisis

Beyond technical verification challenges, governance gaps compound the problem. Thirty-five percent of developers access AI coding tools via personal accounts rather than work-sanctioned ones, creating compliance blind spots. Organizations can’t audit what they can’t see, and personal AI accounts bypass corporate security controls entirely.

Unverified AI code carries real security risks. AI-generated code can pass existing tests while introducing subtle vulnerabilities, such as SQL injection, authentication bypass, and cross-site scripting, that hurried manual review misses and test suites don’t cover. The “tests pass, ship it” mentality becomes dangerous when 42% of committed code comes from tools that 96% of developers don’t fully trust.
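
Here is a concrete case of code that “passes existing tests”: a hypothetical user lookup written two ways with Python’s built-in `sqlite3` module. The schema, function names, and test are illustrative assumptions, not examples from the survey. Both versions pass a happy-path test, but only the parameterized one survives a classic injection payload.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """In-memory database with a toy users table (schema is illustrative)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # String interpolation: whatever the caller passes becomes part of the SQL text.
    query = f"SELECT name, email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: sqlite3 binds `name` as a value, never as SQL.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()

# A typical happy-path test: both versions pass it.
assert find_user_unsafe(conn, "alice") == [("alice", "alice@example.com")]
assert find_user_safe(conn, "alice") == [("alice", "alice@example.com")]

# An injection payload the happy-path test never exercises.
payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
print(len(find_user_safe(conn, payload)))    # 0: treated as a literal name
```

A green test suite here says nothing about the vulnerability. Catching it takes either a reviewer who knows the pattern or a scanner configured to flag string-built SQL.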

The 35% shadow IT problem demands immediate action: ban personal AI accounts, require work-sanctioned tools with audit logging, mandate verification for security-critical code paths, and enhance automated security scanning. The verification gap isn’t just a productivity issue—it’s a security crisis waiting to materialize at scale.

What 2027 Demands: Verification-First AI Coding

The trajectory is unsustainable. Going from 6% (2023) to 42% (2026) to 65% (2027, projected) is roughly 10x growth in four years. A verification model in which fewer than half of developers always check AI output will not hold at 65%. Organizations need strategies that scale to majority-AI codebases, and “try harder at code review” isn’t a strategy.
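
The growth arithmetic is easy to check, as is what it implies for review volume. The sketch below uses the shares quoted in this article. The 50,000-line monthly output is a hypothetical figure, held constant to isolate the effect of rising AI share alone.

```python
# AI share of committed code as quoted in this article; the 2027 value is a projection.
AI_SHARE = {2023: 0.06, 2026: 0.42, 2027: 0.65}


def growth_multiple(start_year: int, end_year: int) -> float:
    """How many times larger the AI share is in end_year than in start_year."""
    return AI_SHARE[end_year] / AI_SHARE[start_year]


def ai_lines(total_lines: int, year: int) -> float:
    """AI-authored lines in a period's output, holding total output fixed (an assumption)."""
    return total_lines * AI_SHARE[year]


print(f"2023 -> 2027: {growth_multiple(2023, 2027):.1f}x")
print(f"2026 -> 2027: {growth_multiple(2026, 2027):.2f}x")

monthly_lines = 50_000  # hypothetical team output per month
for year in (2026, 2027):
    print(year, round(ai_lines(monthly_lines, year)), "AI-authored lines to verify")
```

The 2026-to-2027 step alone means about 55% more AI-authored code to verify: 21,000 lines become 32,500 in the hypothetical month, with no increase in total output. If AI also raises output, the review load grows faster still.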

The shift must be from “AI makes you 10x faster” to “verification-first AI coding”—tools, processes, and governance designed around verification constraints, not generation hype. This means automated verification pipelines (static analysis, security scanning, comprehensive test suites), tiered review processes that prioritize high-risk AI changes, and task-specific AI policies that match tool usage to effectiveness ratings.
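
The tiered-review idea can be sketched as a routing function. It combines three signals: automated check results, whether a change is AI-generated, and whether it touches security-critical paths. Everything here is hypothetical: the path patterns, the tier definitions, and especially the `ai_generated` flag. That flag assumes sanctioned tooling can reliably label AI-authored changes, which personal, unsanctioned accounts make impossible.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

# Hypothetical glob patterns for security-critical code paths.
SECURITY_CRITICAL_GLOBS = ("src/auth/*", "src/payments/*", "*/migrations/*")


@dataclass
class Change:
    files: list[str]
    ai_generated: bool   # assumes sanctioned tooling labels AI-authored changes
    checks_passed: bool  # static analysis, security scan, and test suite results


def touches_critical_path(change: Change) -> bool:
    return any(fnmatch(path, pattern)
               for path in change.files
               for pattern in SECURITY_CRITICAL_GLOBS)


def review_tier(change: Change) -> str:
    """Route a change to a review tier; automated checks gate everything."""
    if not change.checks_passed:
        return "blocked: fix automated findings before human review"
    critical = touches_critical_path(change)
    if critical and change.ai_generated:
        return "tier 1: two reviewers, including a security owner"
    if critical or change.ai_generated:
        return "tier 2: one experienced reviewer, full read-through"
    return "tier 3: standard review"


print(review_tier(Change(["src/auth/session.py"], ai_generated=True, checks_passed=True)))
print(review_tier(Change(["docs/setup.md"], ai_generated=True, checks_passed=True)))
print(review_tier(Change(["src/ui/button.py"], ai_generated=False, checks_passed=True)))
print(review_tier(Change(["src/ui/button.py"], ai_generated=True, checks_passed=False)))
```

Putting the automated gate first means scarce senior attention goes only to changes that already clear static analysis, scanning, and tests.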

Teams that invest now in verification infrastructure will thrive in 2027. Teams chasing generation speed without verification strategy will accumulate technical debt, security vulnerabilities, and slower overall delivery. The verification bottleneck isn’t temporary—it’s the new constraint that defines AI-era software development.

The paradox persists: developers use tools they don’t trust, only about half always verify the output, and they expect the problem to worsen. The question isn’t whether your team uses AI coding tools; it’s whether your verification strategy can handle 65% AI code share. Most organizations aren’t ready, and 2027 is forcing the reckoning.