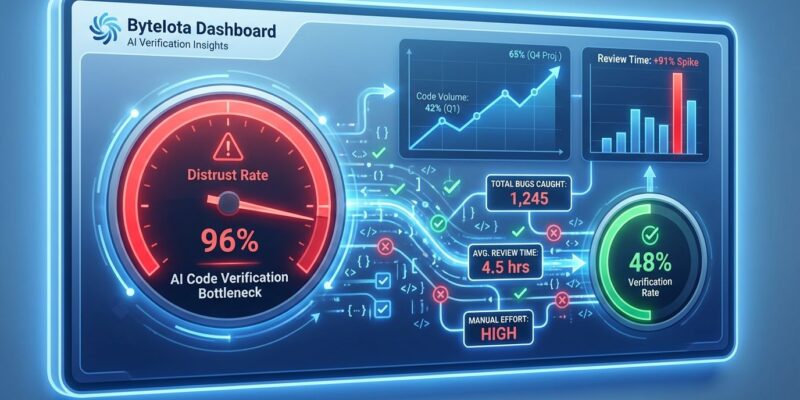

Sonar’s 2026 State of Code Developer Survey, published last month, reveals a critical paradox: 96% of developers don’t fully trust the functional accuracy of AI-generated code, yet only 48% always verify it before committing. This gap between distrust and verification represents what Sonar calls the verification bottleneck—the phenomenon where AI generates code faster than humans can confidently review it. The survey of 1,149 professional developers found that despite real productivity gains (35% average boost), organizations with 30%+ AI-generated code see only 10% velocity improvements. The bottleneck shifted from writing code to verifying it.

The Review Time Crisis

The productivity paradox is now quantified. Faros AI’s research covering more than 10,000 developers found that code review time increases 91% when teams adopt AI coding assistants, even as individual task completion rises 21%. Organizations see 98% more pull requests, and those PRs are 154% larger, which overwhelms reviewers and creates cognitive overload. Teams can’t keep pace.
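As a rough back-of-the-envelope check, the two PR figures above can be combined to estimate how much more changed code reaches reviewers. The sketch below assumes the increases compound multiplicatively (98% more PRs, each 154% larger on average). The source does not state this, and the function name is purely illustrative.

```python
def review_volume_multiplier(pr_count_increase: float, pr_size_increase: float) -> float:
    """Return the factor by which the total volume of code under review grows.

    Assumption (not from the source): the increase in PR count and the
    increase in PR size are independent and compound multiplicatively.
    """
    return (1 + pr_count_increase) * (1 + pr_size_increase)


# 98% more PRs, each 154% larger (figures quoted in the paragraph above).
multiplier = review_volume_multiplier(0.98, 1.54)
print(f"Estimated review volume: {multiplier:.2f}x the pre-AI baseline")
```

Under that assumption, reviewers would face roughly five times the volume of changed code, which fits the picture of review time rising even as individual tasks finish faster.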

Meanwhile, bug rates climb 9% as quality gates struggle with volume. In controlled trials, developers estimated AI made them 20% faster but actually took 19% longer overall when verification overhead was measured. Individuals feel productive, but organizational delivery metrics remain flat. Human review capacity is finite and grows only as fast as the team does, while the volume of AI-generated code faces no comparable limit. That’s the problem.

Worse, 67% of developers report spending more time debugging AI code than human code, and 68% spend more time resolving security vulnerabilities; AI-assisted code shows 3x more security issues than traditionally written code. The “almost right” problem compounds this: AI generates code that looks correct and often passes basic tests but contains subtle bugs that are harder to fix than obviously broken code. Nearly half of developers (45.2%) say debugging AI code takes longer than fixing human-written code precisely because it’s subtly wrong, not obviously broken.

The Trust-Verification Gap

Here’s the uncomfortable truth: 96% of developers don’t fully trust AI code accuracy, yet only 48% always verify before committing. That means 52% of developers at least sometimes commit AI-generated code without verifying it, even though almost none of them fully trust it. This isn’t theoretical; it’s happening in production right now.

Developer trust in AI accuracy has been declining, dropping from 43% in 2024 to just 33% in 2025, according to multiple surveys. Yet adoption keeps climbing: 90% of developers now use AI coding assistants, and 72% use them nearly every day. The result is a verification gap that Sonar calls “critical”—teams are committing potentially unreliable code at scale.

Adding to the governance challenge, 35% of developers use personal AI accounts rather than work-sanctioned tools, creating blind spots in code tracking and compliance. Organizations often don’t know how much AI-generated code is entering their codebase or whether it’s being verified.

The Scale Problem Accelerates

AI-generated code accounted for 42% of all commits in 2025, up from under 10% in 2023. Developers predict this will hit 65% by 2027. With monthly code pushes exceeding 82 million and merged pull requests reaching 43 million across enterprise teams, the verification crisis isn’t slowing down. It’s accelerating.

Industry analysts project a 40% quality deficit for 2026, meaning more code will enter development pipelines than teams can validate with confidence. This isn’t sustainable. If nearly two-thirds of commits are AI-generated, as developers predict for 2027, and only about half of developers always verify that code, teams are gambling with production quality.

The challenge is structural: verification capacity hasn’t scaled with generation speed. AI tools like GitHub Copilot and Cursor generate code 2-5x faster than humans, but review processes designed for human-written code can’t handle the volume or the nature of AI output. Larger PRs, unfamiliar code patterns, and subtle bugs require more time to review, not less—yet teams are getting buried under 98% more PRs than before AI adoption.

Automated Verification as the Solution

The automated code review market recognized this shift early. It exploded from $550 million to $4 billion in 2025 as organizations realized that verification capacity must scale with AI generation speed. Tools like Sonar, CodeRabbit, and Qodo offer automated verification workflows—static analysis, security scanning, automated test generation—that catch issues before human review.

High-performing teams achieve a 42-48% improvement in bug detection accuracy using AI code review tools. CodeRabbit’s multi-layered analysis detects real-world runtime bugs with 46% accuracy. Qodo generates automated tests alongside code review, while Sonar’s static analysis covers 30+ languages and identifies security vulnerabilities, code smells, and technical debt. Some organizations report a 31.8% reduction in PR review cycle time when combining automated tools with human oversight.

The successful approach isn’t eliminating human review—it’s reserving it for what matters. As Google engineer Addy Osmani puts it: “AI writes code faster. Your job is still to prove it works. The developers who succeed with AI at high velocity aren’t the ones who blindly trust it; they’re the ones who’ve built verification systems that catch issues before they reach production.”

That means automated tools scan for security vulnerabilities, enforce code standards, and generate test coverage, while humans focus on architecture decisions, business logic, and critical code paths like authentication, payments, and data handling. Keep PRs under 400 lines to prevent cognitive overload. Scope AI prompts narrowly—one function, one bug fix, one feature at a time—to avoid the 154% PR size inflation that bogs down reviews.
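The split described above can be sketched as a small triage step that runs before human review. This is a minimal illustration, not any vendor’s workflow: the `FileChange` type, the `triage_pull_request` function, and the path hints are all hypothetical, and the substring match on paths is deliberately naive.

```python
from dataclasses import dataclass

MAX_PR_LINES = 400  # size limit suggested in the text above

# Hypothetical path fragments marking critical code; adjust per repository.
CRITICAL_PATH_HINTS = ("auth", "payment", "billing")


@dataclass
class FileChange:
    path: str
    lines_added: int
    lines_deleted: int


def triage_pull_request(changes: list[FileChange]) -> dict:
    """Flag oversized PRs and list files that touch critical paths."""
    total_lines = sum(c.lines_added + c.lines_deleted for c in changes)
    # Naive substring match: "auth" would also match "author.py".
    critical_files = [
        c.path
        for c in changes
        if any(hint in c.path.lower() for hint in CRITICAL_PATH_HINTS)
    ]
    return {
        "total_lines": total_lines,
        "too_large": total_lines >= MAX_PR_LINES,
        "critical_files": critical_files,
        "needs_human_review": bool(critical_files),
    }


# Example: one change under an auth directory, one large docs change.
result = triage_pull_request([
    FileChange("src/auth/login.py", lines_added=120, lines_deleted=30),
    FileChange("docs/README.md", lines_added=300, lines_deleted=10),
])
print(result)
```

In this example the PR totals 460 changed lines, so it is flagged as too large, and the file under `src/auth/` is routed to a human reviewer. A real setup would likely take its file list from the code host’s API and its critical paths from a maintained configuration rather than hard-coded hints.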

The Path Forward

Verification is the new bottleneck. The 96% distrust rate isn’t going away; if anything, it’s justified. AI code has 3x more security vulnerabilities, takes longer to debug, and requires careful review to catch subtle errors. But a 48% always-verify rate is unacceptable when 42% of commits are AI-generated.

Organizations have two choices: scale verification capacity with automated tools, or accept a 40% quality deficit as AI-generated code outpaces human review. The teams winning with AI aren’t the ones generating code fastest—they’re the ones who automated verification, adjusted workflows for AI’s quirks (larger PRs, unfamiliar patterns, subtle bugs), and maintained rigorous oversight on critical paths.

The bottleneck shifted. Adapt or drown in unverified code.