Sonar’s 2026 State of Code Developer Survey, polling 1,149 professional software developers globally in January 2026, reveals a critical AI verification bottleneck: while 90% of developers now use AI coding assistants and AI accounts for 42% of all committed code, 96% of developers do not fully trust the functional accuracy of AI-generated output. This trust gap has shifted the productivity burden from code generation—which AI accelerates—to code verification and debugging. The time saved writing code gets consumed verifying its correctness.

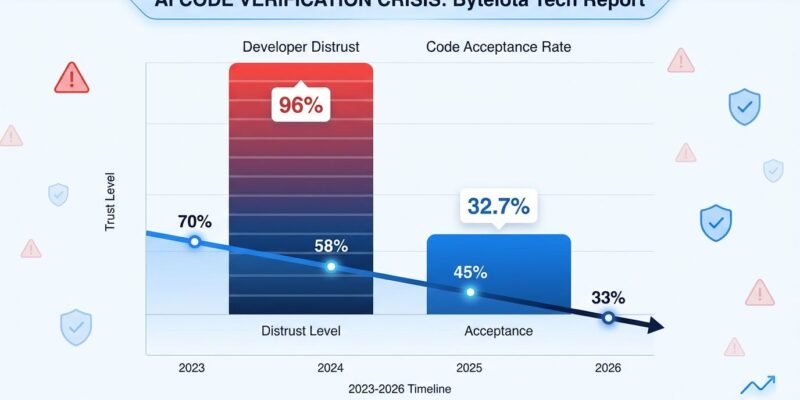

Multiple authoritative surveys confirm this trend. Stack Overflow’s 2025 Developer Survey, with 49,000+ respondents, shows 46% actively distrust AI accuracy versus only 33% who trust it. Positive sentiment toward AI tools also declined, from 70%+ in 2023-2024 to just 60% in 2025. LinearB’s 2026 Benchmarks Report, analyzing 8.1 million pull requests across 4,800 engineering teams, reveals that AI-generated PRs have far lower acceptance rates (32.7% vs 84.4% for manual code) and wait 4.6x longer for review.

96% Don’t Trust AI Code

The trust gap isn’t developer resistance to change—it’s informed caution based on experience. Sonar’s survey found that only 2.6% of experienced developers “highly trust” AI output, while 20% “highly distrust” it. “While AI has made code generation nearly effortless, it has created a critical trust gap between output and deployment,” says Tariq Shaukat, CEO of Sonar. This trust gap is structural, not temporary, because AI models hallucinate APIs, miss edge cases, and generate security vulnerabilities at alarming rates.

Stack Overflow’s survey identified the #1 developer frustration: 66% of respondents cite “AI solutions that are almost right, but not quite.” This maddening characteristic forces developers to carefully review every AI suggestion because they can’t assume correctness. Teams that skip verification accumulate technical debt. Teams that verify rigorously lose the productivity gains AI promised. There’s no easy middle ground.

AI PRs: 32.7% Acceptance vs 84.4% Manual

LinearB’s data makes the AI verification bottleneck concrete. When two-thirds of AI-generated pull requests get rejected or require significant rework (67.3% are not accepted), verification overhead isn’t abstract: it’s a measurable delay in your deployment pipeline. AI PRs also wait 4.6x longer before review even begins, signaling that reviewers approach AI code with heightened skepticism.
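The two-thirds figure follows directly from the acceptance rates. A quick check of the arithmetic, using only the two rates quoted above (the variable names are illustrative):

```python
# Acceptance rates quoted from LinearB's report (percent of PRs accepted).
ai_acceptance = 32.7
manual_acceptance = 84.4

# Share of AI PRs that were not accepted (rejected or needing rework).
ai_not_accepted = round(100 - ai_acceptance, 1)

# How many times more likely a manual PR is to be accepted than an AI PR.
acceptance_ratio = round(manual_acceptance / ai_acceptance, 2)

print(ai_not_accepted)   # 67.3
print(acceptance_ratio)  # 2.58
```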

Bot acceptance rates vary by tool. Devin’s acceptance rates have been rising since April 2025, while GitHub Copilot’s have been slipping since May—not all AI coding assistants perform equally. For engineering managers tracking velocity, this data explains PR backlog growth. AI generates code faster, but code review becomes the new bottleneck. Teams must restructure review processes: some segregate AI PRs into separate queues with stricter testing requirements and senior-only reviewers. Others implement mandatory security scanning before human review begins.
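As a rough illustration, the two practices described above (a separate AI queue and a scan-before-review gate) could be combined in a small routing function. This is a minimal sketch, not any team's actual setup: the `PullRequest` fields and the queue names are hypothetical, and it assumes AI authorship is already known, for example from a PR label.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    number: int
    ai_generated: bool          # assumed to come from a label or bot author
    security_scan_passed: bool  # result of an automated scan, however it is run


def route(pr: PullRequest) -> str:
    """Return the (hypothetical) review queue name for a pull request."""
    if not pr.ai_generated:
        return "standard-review"
    # AI PRs must clear automated security scanning before a human looks.
    if not pr.security_scan_passed:
        return "blocked-pending-scan"
    # Scanned AI PRs go to a stricter queue staffed by senior reviewers.
    return "ai-senior-review"


print(route(PullRequest(101, ai_generated=True, security_scan_passed=False)))
```

Keeping the scan gate ahead of the senior queue means senior reviewers only spend time on AI code that has already passed the automated checks.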

Developers Feel Faster But Data Shows Slower

An MIT-backed study revealed a dangerous perception gap: seasoned developers actually took 19% longer to complete tasks with AI assistance, yet they expected to be 24% faster before starting and still believed they’d been faster after finishing. Ars Technica’s analysis found developers spend 9% of total task time specifically reviewing and modifying AI-generated code. Combined with time spent prompting AI systems, these overhead activities overwhelm the time savings.

Senior developers spend an average of 4.3 minutes reviewing each AI suggestion compared to 1.2 minutes for junior developers, according to a study of 250 developers across five organizations. Stanford University research shows that rework consumes 15-25 percentage points of the 30-40% potential productivity gains from AI. This explains the paradox every engineering leader faces: teams adopt AI tools, developers report feeling more productive, but sprint velocity doesn’t increase proportionally—or at all.
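To see why velocity barely moves, subtract the rework range from the potential gain range. The sketch below assumes the two ranges can be combined end to end, which the research as quoted here does not state, so treat the result as a bound rather than a finding.

```python
# Figures quoted above, in percentage points: (low, high).
potential_gain = (30, 40)
rework_cost = (15, 25)

# Worst case: smallest potential gain minus largest rework cost.
worst_case = potential_gain[0] - rework_cost[1]
# Best case: largest potential gain minus smallest rework cost.
best_case = potential_gain[1] - rework_cost[0]

print(worst_case, best_case)  # 5 25
```

Under that assumption, the net gain lands somewhere between 5 and 25 percentage points, well short of the headline 30-40%.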

The disconnect between perception and reality is dangerous. If teams believe they’re faster without measuring actual cycle times, they miss the verification bottleneck consuming their gains. Objective measurement is critical: track full cycle time (generation + verification + debugging), not just how fast code appears in the editor.
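One way to make that measurement concrete is to record the three phases per task and compare totals. This is a minimal sketch: the `TaskTiming` fields and the sample numbers are made up for illustration, and how the timings are collected is left open.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class TaskTiming:
    generation_min: float    # time until code first appears in the editor
    verification_min: float  # time spent reviewing and testing it
    debugging_min: float     # time spent fixing what verification found

    @property
    def cycle_min(self) -> float:
        """Full cycle time: generation + verification + debugging."""
        return self.generation_min + self.verification_min + self.debugging_min


def mean_cycle_time(tasks: List[TaskTiming]) -> float:
    return mean(t.cycle_min for t in tasks)


def change_pct(ai_tasks: List[TaskTiming], baseline: List[TaskTiming]) -> float:
    """Percent change in mean cycle time with AI; positive means slower."""
    base = mean_cycle_time(baseline)
    return 100 * (mean_cycle_time(ai_tasks) - base) / base


# Hypothetical sample: AI writes faster, but the full cycle is longer.
ai_tasks = [TaskTiming(5, 15, 10)]
manual_tasks = [TaskTiming(20, 3, 2)]
print(change_pct(ai_tasks, manual_tasks))
```

In the sample, generation alone drops from 20 to 5 minutes, which is the part developers feel; the full-cycle comparison is what shows the slowdown.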

40-48% of AI Code Contains Security Flaws

Veracode’s 2025 GenAI Code Security Report, testing over 100 large language models, found that 40-48% of AI-generated code contains security vulnerabilities. Specific vulnerability types fare worse: cross-site scripting (CWE-80) went undefended in 86% of AI code samples, and log injection (CWE-117) in 88%. Java was the riskiest language, with a 72% security failure rate across tasks.
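For readers unfamiliar with those two weakness classes, here is roughly what a defended version looks like in Python. These helpers are illustrative only and are not taken from Veracode's report; production code would more likely rely on an auto-escaping template engine and structured logging.

```python
import html


def render_comment(user_text: str) -> str:
    """Escape HTML metacharacters before embedding user text (CWE-80)."""
    return f"<p>{html.escape(user_text)}</p>"


def sanitize_for_log(user_text: str) -> str:
    """Neutralize CR/LF so input cannot forge extra log lines (CWE-117)."""
    return user_text.replace("\r", "\\r").replace("\n", "\\n")


print(render_comment("<script>alert(1)</script>"))
# <p>&lt;script&gt;alert(1)&lt;/script&gt;</p>

print(sanitize_for_log("alice\nINFO admin logged in"))
# alice\nINFO admin logged in   (one line, with a literal backslash-n)
```

The undefended versions simply omit the `html.escape` call or write the raw string to the log, which is the pattern reviewers need to look for.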

AI models confidently generate insecure code without warnings. They hallucinate APIs, miss input validation, and replicate vulnerable patterns from training data. For organizations in regulated industries—finance, healthcare, defense—the 40-48% vulnerability rate is unacceptable. It requires systematic security scanning of all AI-generated code before deployment, adding another verification step to the workflow. This is the clearest reason why verification can’t be skipped. Accepting AI code without security review is negligent.

The “Vibe, Then Verify” Solution

Sonar coined the term “vibe, then verify” to describe the emerging best practice: use AI for creative code generation (“vibe”), then apply rigorous verification before deployment. A new market category is forming around AI-native verification tools. Sonar’s AI Code Assurance analyzes 750 billion lines of code daily for 7 million developers. The global market for AI security and verification tools is projected to reach $100 billion by 2026.

Leading organizations implement multi-step verification workflows. First, AI generates code. Second, automated static analysis scans for vulnerabilities. Third, AI-native testing frameworks validate functional correctness. Fourth, senior developers review business logic and architecture fit. Some teams use “AI-reviews-AI” approaches where a different LLM critiques the first model’s output before human review. SonarQube users report 24% lower vulnerability rates, suggesting verification tooling makes a measurable difference.
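The post-generation steps above can be sketched as an ordered list of gates that stops at the first failure. Everything here is a placeholder: the three `toy_` checks stand in for a real static analyzer, a real test run, and a human sign-off, and none of them reflects how any specific tool works.

```python
from typing import Callable, List, NamedTuple, Tuple


class StepResult(NamedTuple):
    step: str
    passed: bool


Check = Callable[[str], bool]


def toy_static_analysis(code: str) -> bool:
    # Placeholder rule; a real scanner checks far more than this.
    return "eval(" not in code


def toy_functional_tests(code: str) -> bool:
    # Placeholder; a real step would execute the project's test suite.
    return bool(code.strip())


def toy_human_review(code: str) -> bool:
    # Placeholder for a senior developer's approval.
    return True


STEPS: List[Tuple[str, Check]] = [
    ("static analysis", toy_static_analysis),
    ("functional tests", toy_functional_tests),
    ("human review", toy_human_review),
]


def run_pipeline(code: str, steps: List[Tuple[str, Check]]) -> List[StepResult]:
    """Run gates in order and stop at the first one that fails."""
    results = []
    for name, check in steps:
        passed = check(code)
        results.append(StepResult(name, passed))
        if not passed:
            break  # later, more expensive gates never see failing code
    return results


print(run_pipeline("result = eval(user_input)", STEPS))
```

Ordering the gates from cheapest to most expensive is the design choice that matters: automated checks filter out failures before they reach senior reviewers.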

Teams that invest in verification tooling maintain AI productivity gains while avoiding the trust gap. Teams that skip verification either accumulate technical debt (security vulnerabilities, logic errors) or burn senior developer time manually reviewing everything. The “vibe, then verify” workflow acknowledges reality: AI is fast at generation but unreliable without oversight. This isn’t a temporary problem—it’s structural to how current LLMs work.

Key Takeaways

- 96% of developers distrust AI code functional accuracy (Sonar 2026)—the trust gap is structural, not temporary, driven by hallucinations, edge case blindness, and security vulnerabilities

- The gap between AI PR acceptance (32.7%) and manual PR acceptance (84.4%) quantifies the verification bottleneck: two-thirds of AI-generated PRs are rejected or require significant rework

- Senior developers are 19% slower with AI despite feeling faster—the perception gap is dangerous because teams believe they’re productive while actual cycle times increase

- 40-48% of AI-generated code contains security vulnerabilities, with 86% undefended against cross-site scripting—verification isn’t optional, it’s mandatory for secure deployment

- “Vibe, then verify” with AI-native tools (Sonar AI Code Assurance, Veracode AI scanning) maintains productivity gains while addressing the trust gap through systematic automated verification before human review

The verification bottleneck won’t disappear until AI models develop better context understanding, uncertainty quantification, and security-aware training. Until then, teams must budget for verification overhead, restructure code review processes, and invest in AI-native verification tooling. The productivity promise was real—but so is the verification cost.