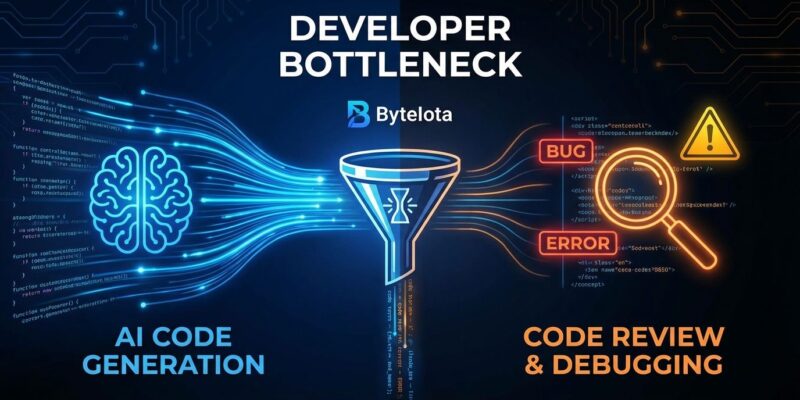

Ninety-six percent of developers don’t fully trust AI-generated code. Yet only 48% always verify it before committing. This disconnect reveals 2026’s biggest development bottleneck: AI writes code faster than ever, but teams are drowning in verification work.

According to Sonar’s 2026 State of Code Developer Survey of 1,149 developers worldwide, AI now accounts for 42% of all committed code, a figure expected to hit 65% by 2027. But the productivity gains everyone expected? They’re not showing up in the data.

A study by METR found that experienced developers were 19% slower with AI tools, even though they felt 20% faster. Welcome to the AI verification bottleneck.

The Verification Gap

The Sonar survey reveals what researchers call a critical “verification gap”: 96% of developers don’t fully trust AI output, yet only 48% consistently verify it before committing. Because 72% of developers who have tried AI now use it daily, that gap means unverified and potentially flawed code is reaching production at scale.

The bottleneck has shifted. Thirty-eight percent of respondents said reviewing AI-generated code takes more effort than reviewing human-written code, compared with 27% who said the opposite. Verification capacity, not developer output, is now the limiting factor in delivery: teams can generate code quickly, but every change still has to be reviewed before it ships.
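The bottleneck argument can be made concrete with a toy capacity model. This is a minimal sketch, not something taken from the Sonar survey: it assumes every change must be reviewed before it ships, and the function name `weekly_throughput` and all of the numbers are hypothetical.

```python
def weekly_throughput(generated: int, review_hours: float, hours_per_review: float) -> int:
    """Changes that can ship in a week if every change must be reviewed first.

    Toy model (hypothetical): output is capped by whichever is smaller,
    the changes generated or the changes reviewers have time to check.
    """
    review_capacity = int(review_hours // hours_per_review)
    return min(generated, review_capacity)


# Hypothetical team with 40 reviewer-hours per week.
for generated in (40, 80):
    for hours_per_review in (1.0, 1.5):
        shipped = weekly_throughput(generated, 40, hours_per_review)
        print(f"generated={generated} hours/review={hours_per_review} shipped={shipped}")
```

In this model, doubling generation from 40 to 80 changes does not raise output once review is saturated, while making each review 50% more expensive cuts what ships from 40 changes to 26.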

The Productivity Paradox

Here’s where it gets interesting. The METR study, a randomized controlled trial with 16 experienced developers working on large open-source repositories, found a 39-percentage-point perception gap between how fast developers felt and how fast they actually were.

Before using AI, developers forecast that it would make them 24% faster. In objective measurements, they were 19% slower. Even after experiencing the slowdown, they still believed AI had made them 20% faster. This is the engineering productivity paradox: the feeling of rapid code generation masks hidden time costs in verification, debugging, and refinement.
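The 39-point figure is simply the distance between perceived and measured speed. Writing each speed change as a signed percentage (positive means faster, so the measured 19% slowdown enters as -19; the Δ symbols are notation introduced here, not the study’s), the arithmetic behind the numbers is:

```latex
\begin{aligned}
\Delta_{\text{forecast}} &= +24\%, \qquad \Delta_{\text{perceived}} = +20\%, \qquad \Delta_{\text{measured}} = -19\% \\
\text{perception gap} &= \Delta_{\text{perceived}} - \Delta_{\text{measured}} = 20 - (-19) = 39 \text{ percentage points} \\
\text{forecast gap} &= \Delta_{\text{forecast}} - \Delta_{\text{measured}} = 24 - (-19) = 43 \text{ percentage points}
\end{aligned}
```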

The work didn’t disappear. It moved from creation to verification. And despite being measurably slower, 69% of participants continued using AI tools after the experiment ended, suggesting they value something beyond pure speed: perhaps reduced tedium, learning opportunities, or simply the feeling of moving faster.

Why Seniors Ship More AI Code

Senior developers ship 2.5 times more AI-generated code than juniors: 32% of production code versus 13%. The gap isn’t in generation; it’s in verification.

Senior developers spend an average of 4.3 minutes reviewing each AI suggestion, compared with 1.2 minutes for junior developers. They recognize when code “looks right” but isn’t, which makes them more confident shipping AI code to business-critical systems. Junior developers report larger productivity gains (40%, higher than seniors report), but they’re less confident the code will work correctly in production.

Verification experience, not coding speed, is the new differentiator. As Addy Osmani, Chrome Engineering Lead, puts it: “The developers who succeed with AI at high velocity aren’t the ones who blindly trust it; they’re the ones who’ve built verification systems that catch issues before they reach production.”

This raises an uncomfortable question: with junior engineer listings down 60% and 54% of engineering leaders planning to hire fewer juniors, who becomes senior in 10 years? McKinsey forecasts a 14 million senior developer shortage by 2030 if junior training doesn’t resume.

The Security Cost

The verification bottleneck isn’t just about speed. Veracode research shows that 45% of AI-generated code contains security flaws from the OWASP Top 10 categories, and 62% contains design flaws or known security vulnerabilities, even when generated by the latest models.

Development teams spend time reworking AI code, which eats up 15 to 25 percentage points of the promised 30 to 40% productivity gains. The net gain? Five to fifteen percent, not thirty to forty.

AI can also hallucinate dependencies, inventing packages or functions that don’t exist. If an attacker registers one of those invented package names, anyone who installs it without checking is exposed to dependency-confusion and typosquatting attacks. Architectural failures may not show up until production, weeks after the AI generated the code.
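One inexpensive guard against hallucinated or lookalike dependencies is to compare every package an AI suggests against a list your team has already vetted. This is an illustrative sketch under stated assumptions, not a tool the article describes: `APPROVED_PACKAGES` is a hypothetical allowlist, `check_dependencies` is a made-up helper, and the only library call is Python’s standard `difflib.get_close_matches`, used to flag names that look like near-misses of approved ones.

```python
import difflib

# Hypothetical allowlist of vetted packages. In practice this might be built
# from a lockfile or an internal registry mirror; that is an assumption here.
APPROVED_PACKAGES = {"requests", "numpy", "pandas", "flask", "sqlalchemy"}


def check_dependencies(requested: list[str]) -> dict[str, list[str]]:
    """Sort AI-suggested package names into approved, lookalike, and unknown."""
    report: dict[str, list[str]] = {"approved": [], "lookalike": [], "unknown": []}
    vetted = sorted(APPROVED_PACKAGES)  # stable order for difflib
    for name in requested:
        normalized = name.lower()
        if normalized in APPROVED_PACKAGES:
            report["approved"].append(name)
            continue
        # A near-miss of an approved name is a typosquatting red flag.
        close = difflib.get_close_matches(normalized, vetted, n=1, cutoff=0.8)
        if close:
            report["lookalike"].append(f"{name} (did you mean {close[0]}?)")
        else:
            # Not vetted and not similar to anything vetted: possibly hallucinated.
            report["unknown"].append(name)
    return report


# "reqeusts" and "flask-auth-magic" are invented example names.
print(check_dependencies(["requests", "reqeusts", "flask-auth-magic"]))
```

An "unknown" result is not proof of a hallucination, only a signal that a human should confirm the package exists and is the one intended before anything is installed.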

What This Means for 2026

Verification is becoming the core development skill. AI code generation is commoditized; every developer has access to the same tools. What separates successful AI adoption from chaos is the verification system around it: testing frameworks, code review processes, and security checks.

Companies are formalizing policies. Nearly 40% of enterprise code is AI-generated, and review capacity is the constraint. Organizations are requiring developers to sign off on AI-generated code and are building automated quality checks directly into their workflows. Human oversight is shifting from line-by-line review to architectural judgment: Does this code solve the right problem? Does it introduce systemic risks?
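A sign-off requirement and automated checks can be combined into a single merge gate. This is a minimal sketch under stated assumptions: the `ChangeRequest` fields and the `blocking_reasons` helper are hypothetical, and a real gate would read these values from your version-control and CI systems rather than from a hand-built object.

```python
from dataclasses import dataclass


@dataclass
class ChangeRequest:
    # Hypothetical fields; real values would come from your VCS and CI system.
    ai_generated: bool
    author_signed_off: bool
    tests_passed: bool
    security_scan_clean: bool
    reviewer_approvals: int


def blocking_reasons(cr: ChangeRequest, min_approvals: int = 1) -> list[str]:
    """Return why a change may not merge; an empty list means it may merge."""
    reasons = []
    if not cr.tests_passed:
        reasons.append("automated tests failed")
    if not cr.security_scan_clean:
        reasons.append("security scan reported findings")
    if cr.reviewer_approvals < min_approvals:
        reasons.append(f"needs {min_approvals} reviewer approval(s)")
    # Extra policy for AI-generated code: the author must attest they understand it.
    if cr.ai_generated and not cr.author_signed_off:
        reasons.append("AI-generated change lacks author sign-off")
    return reasons


print(blocking_reasons(ChangeRequest(True, False, True, True, 1)))
```

Here the AI-generated change passes tests, scanning, and review, so the only blocker reported is the missing author sign-off. Returning reasons instead of a bare boolean tells the author exactly what to fix.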

Junior developers are now expected to supervise AI rather than blindly accept its suggestions. Knowledge transfer still matters. As one guideline puts it: “If the original author can’t explain why the code works, how will the on-call engineer debug it at 2 AM?”

The trend is clear. Developers who measure their productivity objectively, not just by how they feel, will adapt faster. Those who build verification systems (comprehensive testing, automated checks, security scanning) will ship AI code with confidence. Those who rely on the productivity placebo will ship bugs.

Addy Osmani sums it up: “AI writes code faster. Your job is still to prove it works.”