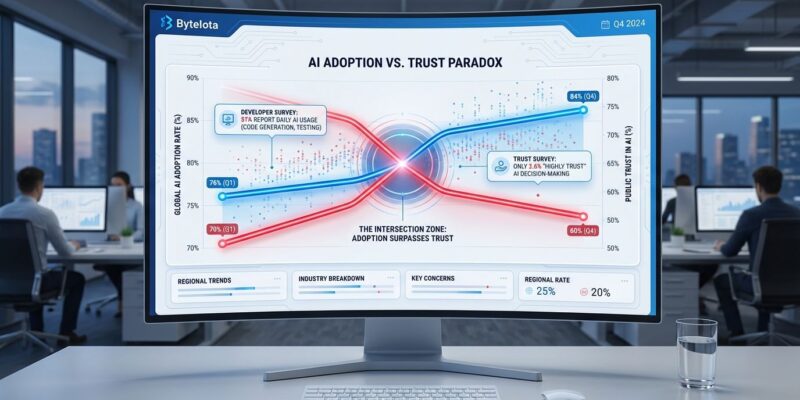

Two major developer surveys released this year reveal an unprecedented paradox in AI adoption. Tool usage jumped from 76% to 84%, with 51% of professionals using AI tools daily. Over the same period, positive sentiment toward those tools fell from over 70% to just 60%. Stack Overflow’s survey of 49,000+ developers and JetBrains’ survey of 24,534 developers independently confirm a pattern that defies conventional technology adoption: developers are using AI more while trusting it less. This makes AI the first major technology where forced adoption precedes earned confidence, inverting the historical pattern behind the success of the iPhone and cloud computing.

The implications extend beyond developer sentiment. With 68% expecting mandatory AI proficiency requirements despite growing distrust, AI tools risk becoming “necessary evils” rather than beloved platforms—a commoditization threat that could reshape the $7+ billion AI development market.

The Numbers Don’t Lie: Trust in Freefall

Between 2023 and 2025, AI tool usage among developers increased from 76% to 84%, with daily usage reaching 51% of professionals. Simultaneously, trust in AI accuracy dropped from 40% to 29%, and positive sentiment fell from over 70% to 60%. The disconnect is stark: active distrust now exceeds trust, with 46% distrusting AI accuracy compared to only 33% who trust it.

Stack Overflow’s 2025 AI survey reveals that only 32.7% of developers “somewhat” or “highly” trust AI accuracy, and just 3.1% “highly trust” AI outputs. Experienced developers show the lowest confidence: 2.6% “highly trust” versus 20% “highly distrust,” a nearly eight-fold gap. JetBrains’ independent survey of 24,534 developers confirms the pattern: 85% use AI tools regularly and 62% rely on at least one coding assistant, yet sentiment remains mixed to negative.

This isn’t skepticism born of unfamiliarity. Developers are using these tools daily, and that experience is driving distrust, not resolving it. The more developers are exposed to AI-generated code, the more clearly they recognize its limitations.

“Almost Right, But Not Quite”: The Productivity Paradox

The core frustration explains why trust declines despite adoption: 66% of developers cite “AI solutions that are almost right, but not quite” as their top complaint, while 45% find debugging AI-generated code more time-consuming than writing it manually. Stack Overflow’s analysis calls this the “willing but reluctant” dynamic—developers feel compelled to use AI despite recognizing its flaws.

Context blindness compounds the issue. Between 60% and 65% of developers report AI tools miss relevant details in refactoring and testing tasks. The result: code that looks correct in isolation but breaks downstream dependencies or violates team conventions. When uncertain, 75% of developers still prefer asking humans for help, underscoring that AI hasn’t earned trust as a reliable code partner.

This creates a frustrating dynamic: developers report productivity gains (69% say AI increases productivity for specific tasks), but 45% simultaneously spend extra time debugging AI outputs. The net impact is narrower than vendors claim—real gains exist for boilerplate code generation and simple tasks, but complex logic remains risky territory where AI tools create more problems than they solve.

Historical Precedent: AI Inverts the Adoption Curve

Traditional technology adoption follows a trust-before-use pattern. The iPhone earned developer and consumer trust through its intuitive interface and impressive features before achieving mass adoption after 2007. Cloud computing required enterprises to trust data security—validating platforms like AWS and Azure through early adopter success—before the late majority migrated. In both cases, trust preceded widespread adoption.

AI tools have inverted this pattern entirely. Adoption has reached 84% even as trust actively declines, which means developers are using AI out of competitive pressure rather than earned confidence. The data suggests why: 68% expect employers to require AI proficiency soon, making adoption mandatory rather than voluntary. This is forced implementation, not enthusiastic embrace.

The historical comparison matters because technologies adopted under pressure rarely build loyalty. As the Cloud Security Alliance notes, the traditional technology adoption curve does not hold for AI. The long-term risk is commoditization, where price becomes the primary differentiator among interchangeable tools that developers use reluctantly.

The Expertise Paradox: Experience Breeds Skepticism

Conventional wisdom suggests experts adopt new tools first and advocate loudest. AI tools break this pattern: the more experienced a developer, the less they trust AI-generated code. Experienced professionals show only 2.6% “highly trust” rates compared to 20% “highly distrust”—nearly eight times more distrust than confidence.

While professionals report 61% favorable sentiment versus learners’ 53%, the gap narrows significantly when measuring high trust specifically. Experienced developers also appear more selective: 45% of professionals use Claude Sonnet versus 30% of learners, which may reflect more deliberate tool evaluation rather than blanket adoption. Meanwhile, concerns run high across experience levels: 87% worry about accuracy, and 81% cite data privacy risks.

This inversion shows that the trust problem isn’t a matter of education or unfamiliarity. Developers who understand code architecture, edge cases, and production requirements recognize AI limitations more clearly than newcomers do. Experience doesn’t build confidence in AI tools; it exposes their weaknesses. That’s a structural problem, not a temporary adoption barrier.

What This Means for AI Vendors: The Commoditization Risk

AI tools are becoming infrastructure, not platforms: used out of competitive necessity rather than enthusiastic preference. No tool holds developers exclusively (OpenAI leads at 81%, but Claude reaches 43% and Gemini 35%, so many developers use more than one), trust is declining despite growing usage, and developers view AI as a required capability rather than a competitive advantage. Together, these conditions expose vendors to commoditization risk, where price becomes the primary decision factor.

GitHub Copilot illustrates the challenge. With 20 million users, 90% Fortune 100 adoption, and developers coding up to 51% faster for certain tasks, adoption metrics look strong. But sentiment is declining across the industry, and switching costs between AI tools remain low when all options are equally distrusted. The market distribution shows no clear moat—developers experiment across multiple tools without strong brand loyalty.

Technologies that are “used but not loved” don’t command premium pricing. Electricity is essential infrastructure; developers don’t evangelize their power company. The iPhone built passionate advocates who convinced others to switch. AI tools currently sit closer to the electricity model—necessary, interchangeable, price-sensitive. For vendors, that’s a strategic warning: earn trust or risk becoming a commodity utility competing on cost.

Key Takeaways

The 2025 developer surveys from Stack Overflow and JetBrains reveal five critical insights:

- AI adoption reached 84% while positive sentiment fell to 60% and trust in AI accuracy fell to 29%, an unprecedented disconnect where forced usage precedes earned confidence. Historical tech adoption patterns (iPhone, cloud) show trust driving adoption; AI tools reversed the sequence.

- “Almost right, but not quite” code (cited by 66% as their top frustration) explains the trust decline. Productivity gains from AI-generated boilerplate are partly offset by debugging overhead, with 45% finding that debugging AI-generated code takes longer than writing it manually.

- Experience correlates with skepticism, not enthusiasm. The most skilled developers show 2.6% “highly trust” versus 20% “highly distrust,” a nearly eight-fold gap revealing that expertise exposes AI limitations rather than building confidence.

- Workplace pressure drives adoption, not developer preference. With 68% expecting mandatory AI proficiency requirements, tools are becoming necessary evils rather than chosen platforms.

- Vendors face commoditization risk if trust deficits persist. Fragmented usage (developers spread across multiple tools), low switching costs, and “used but not loved” status threaten to turn AI tools into price-sensitive utilities rather than premium platforms with loyal advocates.