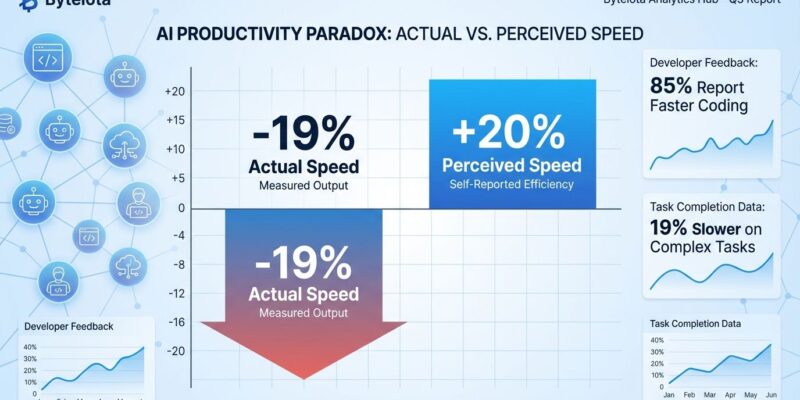

What if the AI coding tools that 85% of developers now use are actually making experienced developers slower, not faster? A rigorous independent study from METR found that senior open-source developers using AI tools like Cursor and Claude took 19% longer to complete tasks. The twist: these same developers believed they were 20% faster—a stunning 39-percentage-point gap between perception and reality.

While vendor studies from GitHub and Microsoft tout 20-55% productivity gains, the evidence for experienced developers tells a different story. This AI productivity paradox challenges the multi-billion-dollar AI coding narrative and forces an uncomfortable question: Are we optimizing for feeling productive rather than being productive?

The Research That Challenges Everything

METR’s study wasn’t a quick survey or vendor-funded marketing play. This was a randomized controlled trial, the gold standard for causal research, conducted from February to June 2025 with 16 experienced open-source developers. These weren’t beginners: they averaged five years on their projects, working in mature repositories that averaged 22,000+ GitHub stars and over a million lines of code.

The methodology was rigorous: 246 real coding tasks, each randomly assigned to permit or prohibit AI tool usage. Developers used Cursor Pro with Claude 3.5 and 3.7 Sonnet when AI was allowed. Researchers manually analyzed 143 hours of screen recordings at roughly 10-second resolution to understand how developers actually spent their time.
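Per-task randomization is what lets a study like this attribute the time difference to the tool rather than to which tasks developers happened to pick. Below is a minimal sketch, assuming an independent 50/50 coin flip per task (METR’s actual assignment procedure may differ); the `Task` type, the `assign_conditions` helper, and the issue IDs are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Task:
    task_id: str
    ai_allowed: bool  # True = AI tools permitted, False = AI tools prohibited


def assign_conditions(task_ids: list[str], seed: int = 0) -> list[Task]:
    """Independently randomize each task into the AI-allowed or AI-prohibited arm."""
    rng = random.Random(seed)  # seeded so the assignment is reproducible and auditable
    return [Task(task_id=t, ai_allowed=rng.random() < 0.5) for t in task_ids]


if __name__ == "__main__":
    # Hypothetical issue IDs standing in for real repository tasks.
    for task in assign_conditions([f"issue-{i}" for i in range(1, 11)], seed=42):
        print(task.task_id, "AI allowed" if task.ai_allowed else "AI prohibited")
```

Randomizing per task, rather than per developer, means every developer works in both conditions, so differences in individual skill largely cancel out.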

The finding: tasks took 19% longer with AI tools. Before the study, these same developers predicted AI would make them 24% faster. After experiencing the slowdown, they still believed they had been 20% faster. That’s a 39-percentage-point gap between perception and measured reality: in this study, developers systematically misjudged their own productivity.
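The 39-point figure is simple arithmetic once a slowdown is treated as a negative speedup. A small sketch using the study numbers reported above; `perception_gap_points` is a hypothetical helper, and the sign convention (positive means faster) is an assumption of this illustration.

```python
def perception_gap_points(believed_speedup_pct: float, measured_speedup_pct: float) -> float:
    """Percentage-point gap between believed and measured speedup (positive = faster)."""
    return believed_speedup_pct - measured_speedup_pct


forecast = 24.0    # speedup developers predicted before the study
perceived = 20.0   # speedup developers reported after the study
measured = -19.0   # tasks took 19% longer, i.e. a -19% "speedup"

print(perception_gap_points(perceived, measured))  # 39.0
print(perception_gap_points(forecast, measured))   # 43.0
```

The same arithmetic shows the pre-study forecast was off by 43 points, so the experience of the slowdown narrowed the misjudgment only slightly.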

Five Reasons AI Tools Hurt Senior Developer Productivity

Screen recording analysis revealed where the time went. First, integration friction and context switching: developers spent time crafting prompts, waiting for AI responses, then switching back to manual coding when suggestions missed the mark. Second, over-optimism bias kept developers using AI despite counterproductive results—the belief that “this time it’ll help” when data showed otherwise.

Third, repository complexity defeated AI’s limited context. These developers had five years of intimate codebase knowledge; the model saw only what fit in its context window, a small slice of a million-line codebase. The mismatch was stark: developers reported AI “made weird changes in other parts” that required correction because it lacked architectural understanding.
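To put rough numbers on that mismatch, here is a back-of-the-envelope sketch. The inputs are assumptions, not study data: a million-line repository, about 10 tokens per line of code, and a generous 200,000-token window (roughly the advertised limit for Claude 3.5/3.7 Sonnet; an editor integration may send far less per request). `context_coverage` is a hypothetical helper.

```python
def context_coverage(codebase_lines: int, tokens_per_line: float, context_tokens: int) -> float:
    """Fraction of a codebase that fits in one context window (capped at 1.0)."""
    total_tokens = codebase_lines * tokens_per_line
    return min(1.0, context_tokens / total_tokens)


# Assumed inputs: a million-line repository, ~10 tokens per line,
# and a generous 200,000-token window.
coverage = context_coverage(codebase_lines=1_000_000, tokens_per_line=10, context_tokens=200_000)
print(f"{coverage:.1%}")  # 2.0%
```

Even under these generous assumptions, the model sees about 2% of the code at once, while the developer carries years of context about the other 98%.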

Fourth, review overhead consumed 9% of total task time, spent evaluating, modifying, and cleaning up AI-generated code. Fifth, low acceptance rates compounded the problem: developers accepted less than 44% of AI suggestions, so more than 56% were rejected, and even accepted code often needed cleanup. That’s a lot of cycles spent reviewing suggestions that were thrown away.
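A figure like the 9% review share comes from labeling screen recordings in short intervals and tallying time per activity. A minimal sketch of that tallying step, assuming one label per 10-second slice; the activity names and counts are invented for illustration and chosen so the review share lands at 9%.

```python
from collections import Counter

SEGMENT_SECONDS = 10  # assumed: one activity label per ~10-second slice of recording


def time_shares(labels: list[str]) -> dict[str, float]:
    """Fraction of total recorded time spent in each labeled activity."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {activity: n / total for activity, n in counts.items()}


# Invented labels for one task (100 slices); real studies use a richer taxonomy.
labels = (
    ["coding"] * 50
    + ["prompting_ai"] * 15
    + ["waiting_on_ai"] * 10
    + ["reviewing_ai_output"] * 9
    + ["testing"] * 16
)
shares = time_shares(labels)
print(f"review overhead: {shares['reviewing_ai_output']:.0%}")       # review overhead: 9%
print(f"task length: {len(labels) * SEGMENT_SECONDS / 60:.1f} min")  # task length: 16.7 min
```

Because every slice carries exactly one label, the shares always sum to 1, which makes it easy to see what AI-related activities (prompting, waiting, reviewing) displace.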

Why GitHub Says 55% Faster While METR Says 19% Slower

Both studies can be true because context is everything. GitHub Copilot’s research showed developers completing HTTP server implementations 55% faster—1 hour 11 minutes versus 2 hours 41 minutes without AI. Statistical significance was strong (P=.0017), and the gains were real. However, this was a relatively simple, well-defined task, and less experienced developers benefited most.
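The “55% faster” headline describes a reduction in completion time, which is not the same thing as a 55% speed multiplier. A short sketch of both calculations from the reported times; `pct_time_change` is a hypothetical helper, and small differences from published figures can come from rounding the times to whole minutes.

```python
def pct_time_change(baseline_minutes: float, treatment_minutes: float) -> float:
    """Percent change in completion time relative to baseline (negative = faster)."""
    return (treatment_minutes - baseline_minutes) / baseline_minutes * 100


without_ai = 2 * 60 + 41  # 161 minutes
with_ai = 1 * 60 + 11     # 71 minutes

print(f"{pct_time_change(without_ai, with_ai):.1f}%")          # -55.9%
print(f"{without_ai / with_ai:.2f}x as many tasks per hour")  # 2.27x as many tasks per hour
```

The same function applied to METR’s result gives a positive value: a task that took 100 minutes without AI and 119 minutes with it is a +19% time change.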

METR tested experienced developers on complex, interconnected systems they knew intimately. Google’s 2024 DORA report revealed a trade-off more companies are likely to discover: a 25% increase in AI usage correlates with a 7.2% decrease in delivery stability. Individual speed gains, perhaps. Stability losses, yes.

The emerging pattern: simple tasks, greenfield projects, and junior developers tend to see AI productivity gains, while complex tasks, brownfield codebases, and senior developers can see losses. The industry has marketed the former while ignoring the latter, placing billion-dollar bets on half the story.

The Hidden Cost: Technical Debt Explosion

Even when AI coding delivers short-term speed, the long-term cost is accumulating fast. GitClear analyzed 153 million lines of code and found alarming trends: code churn projected to hit 7% by 2025 (up from ~5% pre-AI), copy/pasted code rising significantly, and code reuse declining. This isn’t just sloppy coding—it’s technical debt at scale.
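Churn is easiest to reason about as a rate over line history. GitClear’s exact methodology isn’t described here, so this sketch assumes a common definition: a newly written line counts as churn if it is rewritten or deleted within 14 days. `LineRecord`, `churn_rate`, and the sample history are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

CHURN_WINDOW = timedelta(days=14)  # assumption: churn = rewritten or deleted within two weeks


@dataclass
class LineRecord:
    authored: date
    changed: Optional[date]  # when the line was next modified or deleted, if ever


def churn_rate(lines: list[LineRecord], window: timedelta = CHURN_WINDOW) -> float:
    """Fraction of newly authored lines that were changed again within `window`."""
    if not lines:
        return 0.0
    churned = sum(
        1
        for line in lines
        if line.changed is not None and line.changed - line.authored <= window
    )
    return churned / len(lines)


history = [
    LineRecord(date(2025, 1, 6), date(2025, 1, 9)),  # rewritten after 3 days: churn
    LineRecord(date(2025, 1, 6), date(2025, 3, 1)),  # changed much later: not churn
    LineRecord(date(2025, 1, 7), None),              # never touched again
    LineRecord(date(2025, 1, 8), None),
]
print(f"{churn_rate(history):.0%}")  # 25%
```

Tracking this rate before and after an AI rollout, on the same repositories, gives a team its own version of the trend GitClear reports.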

The State of Software Delivery 2025 report confirmed what many developers already felt: the majority now spend more time debugging AI-generated code and resolving security vulnerabilities than they did before AI adoption. CISQ estimates 40% of IT budgets will go to tech debt maintenance by 2025.

API evangelist Kin Lane put it bluntly: “I don’t think I have ever seen so much technical debt being created in such a short period of time during my 35-year career in technology.” An MIT professor described AI as “a brand new credit card that is going to allow us to accumulate technical debt in ways we were never able to do before.” Consider a hypothetical: a 40% coding speed gain that generates 15% more tech debt isn’t a win; it’s a deferred crisis.

2026: The Year of Reckoning

Here’s the real paradox: despite independent research showing experienced developers slow down, adoption continues to explode. JetBrains’ 2025 survey of 24,534 developers across 194 countries found 85% regularly use AI tools, with 62% relying on at least one assistant daily. Stack Overflow’s 2025 survey confirmed 65% weekly usage. This isn’t cautious experimentation—this is near-universal adoption based on vendor marketing and perception, not rigorous measurement.

Developers report feeling more productive (74% in JetBrains survey), finishing repetitive tasks faster (73%), and spending less time searching documentation (72%). Yet their top concern remains code quality (23%), followed by AI’s limited understanding of complex logic (18%). The disconnect is glaring: we know AI struggles with quality and complexity, but we’re using it anyway because it feels helpful.

The 2026 reckoning is coming. Companies will start measuring actual productivity versus assumed gains. ROI calculations will factor in tech debt accumulation, debugging overhead, and the reality that senior developers—the most expensive talent—may be slowing down, not speeding up. Hiring freezes justified by “AI makes us 2x more efficient” will collide with delivery data showing stability decreases and maintenance costs rising.
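A total-cost ROI check can be sketched in a few lines. Every input below is a hypothetical placeholder except the two time changes, which borrow METR’s perceived (20% faster) and measured (19% slower) figures. The monthly framing, maintenance hours, licence cost, and hourly rate are assumptions, and `Scenario` and `net_monthly_value` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Monthly inputs for one developer; every value here is a hypothetical placeholder."""
    baseline_hours: float     # hours the month's work would take without AI
    time_change: float        # change in task time: +0.19 = 19% slower, -0.20 = 20% faster
    maintenance_hours: float  # extra debugging / tech-debt hours attributed to AI-generated code
    tool_cost: float          # licence cost per month
    hourly_rate: float        # fully loaded cost of an hour of developer time


def net_monthly_value(s: Scenario) -> float:
    """Value of hours saved minus maintenance and tool cost (negative = net loss).

    Review time is assumed to be already included in the measured time change.
    """
    hours_saved = -s.time_change * s.baseline_hours
    return (hours_saved - s.maintenance_hours) * s.hourly_rate - s.tool_cost


perceived = Scenario(baseline_hours=160, time_change=-0.20, maintenance_hours=0, tool_cost=40, hourly_rate=100)
measured = Scenario(baseline_hours=160, time_change=0.19, maintenance_hours=8, tool_cost=40, hourly_rate=100)

print(f"{net_monthly_value(perceived):,.0f}")  # 3,160
print(f"{net_monthly_value(measured):,.0f}")   # -3,880
```

The point is not the specific dollar amounts but the sign flip: the same tool looks strongly positive on perceived numbers and negative on measured ones once maintenance is counted.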

Key Takeaways

- Independent research contradicts vendor claims: METR shows 19% slowdown for experienced developers while GitHub touts 55% gains—both are true in different contexts

- The perception gap is massive: developers believe they’re 20% faster when measured data shows 19% slower, revealing a 39-percentage-point disconnect between feeling and reality

- Context determines outcomes: junior developers on simple tasks tend to gain productivity, while senior developers on complex codebases can lose it; one-size-fits-all claims are dangerously misleading

- Technical debt is the hidden cost: code churn projected at 7%, declining reuse, increased debugging time, and a projected 40% of IT budgets going to tech-debt maintenance by 2025 suggest short-term gains create long-term pain

- Measure, don’t assume: track actual completion times with and without AI, monitor acceptance rates (>50% rejection is a red flag), and calculate total cost including review overhead and tech debt
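The measurement advice above can start as simply as this sketch. It compares median task times between AI-allowed and AI-prohibited tasks and applies the >50% rejection heuristic. The data is invented, the helper names are hypothetical, and a ratio of medians is a deliberate simplification; a rigorous comparison would randomize task assignment and use a proper statistical model.

```python
from statistics import median


def time_ratio(ai_minutes: list[float], no_ai_minutes: list[float]) -> float:
    """Median task time with AI divided by median without (>1.0 means AI was slower)."""
    return median(ai_minutes) / median(no_ai_minutes)


def rejection_red_flag(accepted: int, shown: int, threshold: float = 0.5) -> bool:
    """True when more than `threshold` of AI suggestions were rejected."""
    if shown == 0:
        return False
    return (shown - accepted) / shown > threshold


# Invented team data; in practice, assign tasks to each arm at random first.
with_ai = [95, 130, 60, 210, 88]
without_ai = [80, 115, 70, 170, 90]

print(f"time ratio: {time_ratio(with_ai, without_ai):.2f}")  # time ratio: 1.06
print("red flag:", rejection_red_flag(accepted=420, shown=1000))  # red flag: True
```

Medians are used instead of means so that one unusually long task does not dominate the comparison.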

The AI productivity narrative has been built on vendor studies testing toy problems and developer surveys measuring perception, not performance. Independent research measuring experienced developers on real codebases tells a different story. Before making billion-dollar tooling decisions and restructuring teams around assumed productivity gains, it’s time to demand data over vibes.