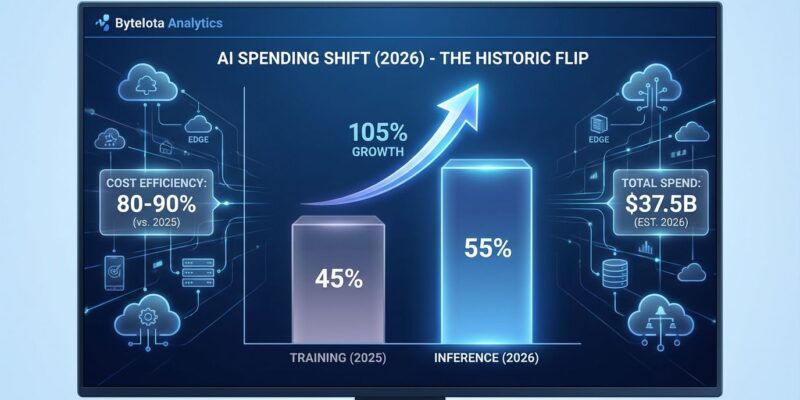

2026 marks a historic inflection point in AI infrastructure economics. For the first time ever, inference workloads now consume over 55% of AI-optimized infrastructure spending in early 2026, surpassing training costs and signaling that companies have moved beyond experimentation to production-scale AI deployment. Moreover, this shift—from 33% of AI compute in 2023 to a projected 70-80% by year-end 2026—isn’t just a spending statistic. Furthermore, it’s a fundamental restructuring of how developers need to think about AI infrastructure costs.

The inference-optimized chip market alone is projected to exceed $50 billion in 2026, while total AI cloud infrastructure spending grew 105% to $37.5 billion. However, here’s what most teams discover too late: inference commonly accounts for 80-90% of total compute dollars over a model’s production lifecycle, despite each individual inference task requiring less compute than training. Consequently, this is the hidden economics of AI that surprises developers when their $200/month dev costs explode to $10,000/month in production.

The 80-90% Lifecycle Cost Reality

Training AI models is a CapEx-like hit—you rent hundreds of GPUs for a few weeks, the bill might cross into millions for foundation models, but it’s a one-time investment. In contrast, inference is the opposite: continuous OpEx where each query, each token, each API call adds to the tab. This pattern creates a cost dynamic most teams underestimate by 40-60% when moving from development to production.

Consider a real example: A construction company built an AI predictive analytics tool for under $200/month during development. Once they launched and users started actually using it, costs ballooned to $10,000/month. This isn’t an outlier—it’s the pattern. Development testing involves hundreds of queries per day. Meanwhile, production involves millions. API pricing for services like Claude Opus 4.5 ($5/$25 per million input/output tokens) or GPT-4o ($3/$10 per million tokens) might look reasonable in testing, but output tokens cost 2-5x more than input because generation is computationally expensive.

The math is brutal for teams that don’t plan ahead. Monthly production costs scale from $10-50 for personal projects to $1,000+ for enterprise applications. Therefore, the critical insight: you optimize for training costs once, but the real money drain is the continuous inference at scale. Production usage patterns create entirely different cost dynamics than development testing, and most teams don’t see it coming until the AWS bill hits.

Cloud vs. Edge: Architecture Decision Framework

The fundamental economic decision for AI inference is balancing centralized cloud compute against edge processing. In 2026, leading organizations aren’t choosing “cloud only” or “edge only”—they’re deploying three-tier hybrid architectures that leverage the strengths of all three deployment models.

Cloud offers elasticity: zero upfront costs, infinite scale, managed infrastructure. It’s perfect for variable workloads, experimentation phases, and low-volume applications. However, the downside? Continuous OpEx that scales with usage, egress fees that can hit 70% of total spend for high-bandwidth apps, and 100-300ms latency that’s unacceptable for time-critical applications.

In contrast, edge flips the economics entirely. You pay upfront CapEx for hardware, but then enjoy sub-10ms latency, no egress fees, and predictable costs. This is why Tesla, Mercedes-Benz, and GM use edge inference for autonomous vehicles—safety-critical decisions can’t tolerate cloud round-trip delays. Furthermore, the automotive edge computing market is projected to grow from $7.64 billion in 2024 to $39 billion by 2032, and the broader edge AI market is expanding from $24.9 billion (2025) to $66.47 billion by 2030 at a 21.7% CAGR.

The pragmatic reality for most enterprises is hybrid by default. Cloud handles training and elastic burst capacity. On-premises infrastructure runs predictable high-volume production inference at fixed costs. Meanwhile, edge processes time-critical decisions for manufacturing, robotics, and autonomous systems. Amazon Robotics and Boston Dynamics use this exact pattern: cloud for model training and updates, edge for split-second operational decisions.

The decision matrix is straightforward. Latency requirements under 50ms? Edge. Variable or unpredictable workloads? Cloud. Continuous high-volume inference exceeding 100 million queries per month? On-premises or edge wins on TCO. Therefore, traffic patterns matter as much as technology.

AI Inference Optimization Strategies

Cost optimization isn’t just about cutting expenses—it’s becoming a competitive advantage. Companies that master inference cost reduction by 50-90% through proven techniques can price their AI products more aggressively than competitors burning cash on unoptimized infrastructure.

Quantization delivers 8-15x compression with less than 1% accuracy loss. Post-training quantization (PTQ) is the fastest path—convert FP32 weights to INT8 or INT4 without retraining. Tools like NVIDIA Model Optimizer, PyTorch Lightning, and ONNX Runtime make this straightforward. As a result, the outcome: massively reduced model size and inference costs with negligible accuracy impact.

Prompt caching offers 90% cost savings for high-repetition applications. RAG systems, chatbots, and customer support tools that repeat similar queries can cache prompts and pay just 0.1x the base price for cache reads. Consequently, this single optimization can reduce costs from $10,000/month to $1,000/month for the right workload patterns.

Additionally, batch processing cuts costs by 50% for non-urgent workloads. Content moderation, analytics pipelines, and overnight processing jobs don’t need real-time responses. OpenAI’s Batch API offers 50% discounts across all models in exchange for latency tolerance—hours instead of seconds. The trade-off is acceptable for many use cases.

Edge data filtration slashes bandwidth and egress fees by up to 70%. Process raw data at the edge, send only refined datasets to the cloud for training. This is critical for high-bandwidth applications involving video, images, or sensor data where cloud transfer costs dominate the budget.

The combination matters more than individual tactics. Teams that quantize models before production, enable caching for repetitive queries, use batch APIs for non-urgent workloads, and monitor output token usage (which costs 2-5x more than input) aren’t just saving money. Furthermore, they’re building sustainable AI economics that competitors can’t match.

The Future is Inference-First

By 2027, McKinsey projects that inference workloads will overtake training entirely, representing 70-80% of AI compute and 30-40% of total data center demand by 2030. Hyperscaler capital expenditure hit $600 billion in 2026 (a 36% increase over 2025), with 75% ($450 billion) tied directly to AI infrastructure. Consequently, this isn’t a temporary spike—it’s a fundamental shift in how the industry allocates resources.

The hardware market reflects this evolution. The inference chip market accelerated from a projected $25 billion in 2027 to over $50 billion in 2026. Moreover, NVIDIA’s $20 billion Groq acquisition signals a strategic pivot to non-GPU inference optimization. AMD’s Instinct MI300X delivers 1,500 TOPS with 192GB HBM3 specifically for memory-heavy inference workloads. Training GPUs and inference hardware have different optimization targets—throughput versus latency, memory bandwidth versus processing speed.

Developers need new mental models for this reality. Track per-query AI costs from day 1, not after launch. Build cost monitoring into development, not as an afterthought. Choose architecture based on latency, volume, and cost matrices, not vague preferences for “cloud” or “edge.” Additionally, optimize before production—quantization, caching, and batching deliver 50-90% cost reductions when implemented proactively, not reactively.

The shift from training-dominant to inference-dominant AI infrastructure is complete. Companies building AI applications in 2026 need to architect for continuous inference costs from day 1, not treat it as a surprise when the production bill arrives. The economics have flipped, and the teams that adapt fastest will build sustainable competitive advantages while others burn cash on unoptimized infrastructure. Therefore, inference isn’t the future of AI—it’s already the present, and the spending patterns prove it.

Key Takeaways

- Inference spending crossed 55% of AI cloud infrastructure ($37.5B) in early 2026, surpassing training for the first time

- Inference accounts for 80-90% of total compute costs over a model’s production lifecycle, despite requiring less compute per task

- Three-tier hybrid architectures (cloud/on-prem/edge) are the pragmatic default for enterprises, not “cloud only” or “edge only”

- Cost optimization through quantization (8-15x compression), caching (90% savings), batching (50% discount), and edge filtration (70% egress reduction) is a competitive advantage

- By 2027-2030, inference will represent 70-80% of AI compute and 30-40% of total data center demand—this shift is permanent

[…] Instead of chasing benchmark headlines or model-size bragging rights, Microsoft introduced a cost-efficient AI chip designed to solve a more uncomfortable problem:AI infrastructure economics are shifting, and inference costs now dominate cloud spending. […]