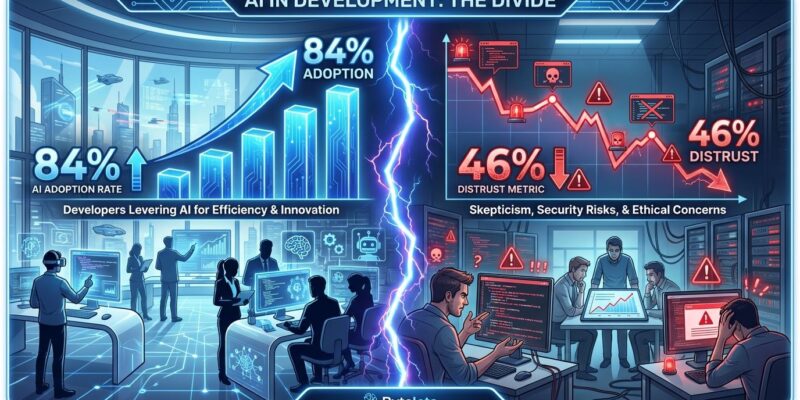

The 2025 Stack Overflow Developer Survey reveals a trust crisis in AI coding tools: 46% of developers actively distrust AI accuracy, up from 31% in 2024. That is a 15-percentage-point jump, or a 48% relative increase, in a single year. Only 33% trust AI outputs, and a mere 3% “highly trust” them. This collapse comes despite record adoption: 84% now use AI tools, up from 76% last year. The paradox exposes an uncomfortable truth: developers adopt AI out of necessity, not conviction.
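The 48% figure is a relative change, not a percentage-point change, and the two are easy to conflate. A minimal Python sketch of both measures, applied to the distrust and adoption figures quoted above (the helper names are illustrative, not from any survey tooling):

```python
def percentage_point_change(old_pct: float, new_pct: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return new_pct - old_pct


def relative_change(old_pct: float, new_pct: float) -> float:
    """Change measured against the starting value, expressed as a percentage."""
    return (new_pct - old_pct) / old_pct * 100


# Survey figures quoted in the article (2024 -> 2025).
figures = {
    "distrust": (31.0, 46.0),
    "adoption": (76.0, 84.0),
}

for label, (old, new) in figures.items():
    pp = percentage_point_change(old, new)
    rel = relative_change(old, new)
    print(f"{label}: +{pp:.0f} points, +{rel:.1f}% relative")
```

Run as written, this prints +15 points (+48.4% relative) for distrust and +8 points (+10.5% relative) for adoption, which is why "a 48% increase" and "15 points" describe the same shift.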

The “Almost Right, But Not Quite” Problem

The top frustration among developers isn’t AI being completely wrong—it’s being almost right. Stack Overflow’s survey found 66% cite “AI solutions that are almost right, but not quite” as their primary complaint. This near-accuracy creates a verification tax: 45% report spending more time debugging AI-generated code than they save initially.

The problem runs deeper than wasted time. A July 2025 METR study found experienced developers using AI tools were 19% slower on complex tasks, despite feeling faster. Meanwhile, up to 42% of AI-generated code contains hallucinations: phantom functions, nonexistent APIs, or fake dependencies that look plausible in review but fail at install, build, or run time. Security researchers at Apiiro found that AI-generated code introduces 322% more privilege escalation paths and 153% more design flaws than human-written code.
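One class of hallucination, the fake dependency, can be caught mechanically before anything runs. A minimal sketch, assuming Python source and using only the standard library (`ast` and `importlib.util.find_spec`); the function name `unresolved_imports` and the package name in the example are hypothetical:

```python
import ast
import importlib.util


def unresolved_imports(source: str) -> list[str]:
    """Return top-level module names imported by `source` that cannot be
    located in the current Python environment."""
    tree = ast.parse(source)
    modules: set[str] = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            # `import a.b.c` -> check the top-level package `a`
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Skip relative imports such as `from . import x` (level > 0)
            if node.module and node.level == 0:
                modules.add(node.module.split(".")[0])
    # find_spec returns None when no finder can locate a top-level module
    return sorted(m for m in modules if importlib.util.find_spec(m) is None)


# Hypothetical AI-generated snippet; the second import stands in for a
# hallucinated dependency that does not exist.
snippet = """
import json
import totally_fake_json_helpers
from collections import OrderedDict
"""

print(unresolved_imports(snippet))
```

This only flags modules that cannot be found in the current environment. It will not catch a phantom function inside a real module, and a name that resolves is not proof it is the package the author intended: a hallucinated name that someone has since published can install cleanly. Treat it as one cheap check ahead of human review, not a substitute for it.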

Code that passes linting and compiles creates a dangerous perception gap. Developers believe AI accelerates them, but empirical data shows debugging consumes those gains. Subtle logic flaws are harder to catch than obvious errors, making “almost right” worse than completely wrong.

Senior Developers Ship More AI Code, Trust It Least

Experience reveals AI’s limitations in sharp detail. Only 2.6% of experienced developers highly trust AI outputs, while 20% highly distrust them. Yet senior developers (10+ years of experience) ship 2.5 times more AI-generated code than juniors: 33% of their shipped code, versus just 13% for developers with 0 to 2 years of experience.

The paradox resolves when you consider validation skills. Among junior developers, 68% perceive quality improvements from AI and 55.5% use AI tools daily, yet they lack the pattern recognition to spot AI’s mistakes. Seniors extract value from AI precisely because they are better equipped to catch and fix “almost right” errors: they can use AI more because they trust it less and verify what it produces.

This dynamic reveals AI as a ceiling raiser for experts, not a floor raiser for beginners. The troubling question: if junior developers over-rely on AI and skip foundational learning, who becomes the next generation of seniors?


Positive Sentiment Drops as Adoption Climbs

Positive sentiment toward AI tools fell from over 70% in 2023 and 2024 to just 60% in 2025, a drop of more than 10 percentage points, even as usage rose from 76% to 84%. JetBrains’ 2025 survey of 24,534 developers across 194 countries confirms the trend: 85% use AI regularly and 62% rely on coding assistants, yet concerns about code quality and hallucinations persist.

More telling: 68% of developers expect employers to mandate AI tool proficiency soon. This suggests adoption is driven at least as much by employer pressure as by developer enthusiasm. The disconnect between rising usage and falling sentiment signals an unsustainable trajectory: developers use tools they fundamentally distrust because they feel they must, not because they believe in them.

Meanwhile, 52% still code recreationally after work, suggesting passion for programming persists despite AI’s encroachment. Developers haven’t given up on the craft; they’ve just lost faith in the assistants.

Human Verification Isn’t Optional—It’s Mandatory

When stakes are high, developers revert to human expertise. The Stack Overflow survey found 75% would still ask a person for help “when I don’t trust AI’s answers.” Only 29% of professional developers believe AI handles complex tasks well. Human verification isn’t a fallback option—it’s a requirement.

This explains the productivity paradox. While 82% claim AI boosts productivity by 20% or more, and 68% save 10+ hours weekly, empirical studies paint a different picture. The METR trial found developers spend 9% of total task time specifically reviewing and modifying AI output. Faros AI’s analysis of over 10,000 developers across 1,255 teams showed gains from faster code generation get absorbed by downstream review, testing, and fixing.

Code quality metrics expose the cost: developers using AI produce 9% more bugs and 154% larger pull requests. The bottleneck hasn’t disappeared—it’s shifted to code review and integration. Developers feel faster but aren’t measurably faster because verification consumes the gains.

Can AI Rebuild Trust, or Is This the Plateau?

AI coding tools face an existential question: can accuracy improve enough to rebuild trust, or is the “almost right, but not quite” problem fundamental to large language models? Research from Augment Code suggests context-aware engineering can reduce hallucinations by up to 40%, but a hallucination rate of up to 42% and persistent security vulnerabilities point to deeper structural issues.

The current trajectory is unsustainable. Active distrust rose 15 percentage points in a year (from 31% to 46%), a 48% relative increase. If this continues, developers will limit AI to low-stakes tasks and never trust it for production-critical code. Meanwhile, 68% expect employer mandates, creating tension between forced adoption and fundamental distrust.

The industry faces a choice: dramatically improve AI accuracy or accept AI as perpetually supplementary. Better context engineering, tiered trust systems, and mandatory review standards might help. But unless AI tool makers solve the hallucination and near-accuracy problem, adoption may plateau as developers refuse to stake their careers on tools they don’t trust.

Key Takeaways

- Developer trust in AI accuracy hit a new low: 46% actively distrust AI outputs (up from 31% in 2024, a 48% relative increase), while only 3% highly trust them. Yet 84% use AI tools, revealing adoption driven by necessity rather than conviction

- The “almost right, but not quite” problem is worse than being completely wrong: 66% cite near-accuracy as their top frustration, forcing a verification tax where 45% spend more time debugging AI code than they save initially

- Experience reveals AI’s limits: only 2.6% of experienced developers highly trust AI, yet senior developers (10+ years) ship 2.5x more AI-generated code than juniors because they are better equipped to catch and fix errors. AI is a ceiling raiser for experts, not a floor raiser for beginners

- Sentiment fell despite rising adoption: positive sentiment dropped from over 70% to 60% as usage rose from 76% to 84%, suggesting developers use tools they fundamentally distrust because they expect employers to require them, not because they believe in them

- Human verification remains mandatory: 75% would ask a person when they don’t trust AI, and empirical findings (a 19% slowdown in METR’s study, 9% more bugs among developers using AI) suggest that gains from faster generation get consumed by review, testing, and debugging