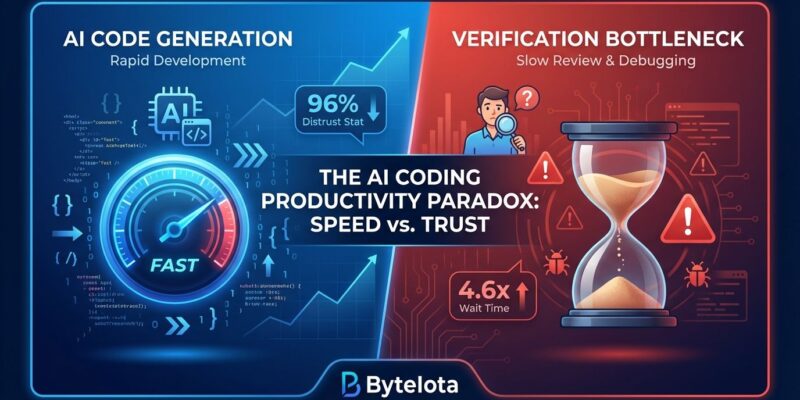

AI coding assistants promise 20-40% productivity gains, but the numbers don’t add up. SonarSource’s 2026 State of Code Survey, published this month, reveals that 96% of developers don’t fully trust AI-generated code, yet AI already accounts for 42% of committed code. More striking: LinearB’s analysis of 8.1 million pull requests shows AI PRs wait 4.6x longer for review and are accepted far less often than manually written ones (32.7% versus 84.4%). A randomized trial from METR found experienced developers actually worked 19% slower with AI tools. Welcome to the AI coding productivity paradox.

Companies are investing billions expecting massive efficiency gains, but individual velocity increases don’t translate to organizational delivery improvements. This isn’t just a measurement problem—it’s a systems problem exposing fundamental gaps between vendor promises and engineering reality.

The Three Gaps: Trust, Acceptance, and Organizational Delivery

The productivity paradox exists across three dimensions that compound each other. First, the Trust Gap: SonarSource’s survey of 1,149 developers shows 96% don’t fully trust that AI-generated code is functionally correct, yet only 48% always verify it before committing. This creates what SonarSource calls a “verification bottleneck,” in which teams accumulate technical debt that surfaces later as bugs and security vulnerabilities.

Second, the Acceptance Gap reveals the delta between code generation and actual usage. GitHub Copilot generates 46% of developers’ code, but they only accept 30% of suggestions. LinearB’s data from 4,800+ organizations shows AI pull requests have a 32.7% acceptance rate compared to 84.4% for manual PRs. That is a gap of 51.7 percentage points, or roughly 61% lower in relative terms. Developers generate fast but reject more.
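How large the acceptance gap looks depends on whether it is stated in percentage points or as a relative reduction. The sketch below works through both using only the two LinearB rates quoted above; the function name is illustrative, not part of any LinearB tooling.

```python
def acceptance_gap(ai_rate: float, manual_rate: float) -> tuple[float, float]:
    """Return (percentage-point gap, relative reduction in percent)."""
    point_gap = manual_rate - ai_rate                  # absolute difference
    relative_reduction = point_gap / manual_rate * 100  # share of the manual rate lost
    return point_gap, relative_reduction


# Acceptance rates quoted in the article (percent of PRs accepted).
points, relative = acceptance_gap(ai_rate=32.7, manual_rate=84.4)
print(f"{points:.1f} percentage points lower")
print(f"{relative:.1f}% lower in relative terms")
```

Stating which of the two figures is meant avoids confusion when comparing reports that quote the same underlying rates.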

Third, the Organizational Gap exposes why individual gains don’t scale. Developers type code 20-40% faster, but organizational delivery velocity stays flat or decreases. The Trust Gap creates hidden tech debt, while the Acceptance Gap clogs review pipelines with AI PRs that wait 4.6x longer before review starts. Individual speed gains evaporate in downstream bottlenecks.

Experienced Developers Are Actually 19% Slower

METR’s randomized controlled trial challenges the productivity narrative head-on. Researchers recruited 16 experienced open-source developers from major repositories averaging 22,000+ stars and randomly assigned 246 real issues to either allow or prohibit AI tool usage. The result: developers using AI tools like Cursor Pro with Claude models took 19% longer to complete tasks.

The perception gap compounds the problem. Participants expected a 24% speedup and, even after completing the study, still believed AI had sped them up by 20%, although the measurements showed a slowdown. The 19% penalty reflects overhead in crafting prompts, verifying output, and reviewing generated code, steps that manual coding does not require. For experienced developers on familiar codebases, AI verification overhead exceeds generation time savings.

This has major implications for ROI calculations based on developer surveys. Self-reported productivity gains may be unreliable when developers feel faster but measure slower. The finding applies specifically to experienced developers on high-quality codebases—METR explicitly noted results may not generalize to less experienced developers or unfamiliar code.

Vendor Claims vs Reality: The 10x Gap

AI coding tool vendors claim 50-100% productivity improvements based on controlled studies and self-reported data. The marketing touts stats like “55% faster task completion” and “developers save 30-60% of time on coding.” Reality looks different.

Research from Harvard and Jellyfish shows that “developers say they’re working faster, but companies are not seeing measurable improvement in delivery velocity or business outcomes.” Analysis from Index.dev and DX of nearly 40,000 developers finds measured organizational ROI of a 5-15% improvement in delivery metrics, not the 50-100% vendors promise. That gap between promises and reality, as much as 10x, creates massive expectation mismatches.

CTOs invest millions expecting 2x productivity, see 10% gains, and question their engineering teams. The measurement crisis stems from tracking the wrong metrics. Lines of code generated and suggestions accepted are vanity metrics that feel good but don’t correlate with business outcomes. DORA metrics like cycle time, deployment frequency, and change failure rate reveal the truth: individual coding velocity doesn’t equal organizational delivery velocity.

The Downstream Bottleneck Problem

Every good engineer knows that optimizing one part of a complex system doesn’t automatically speed up the entire system. Software delivery is no exception. As an analysis from Cerbos explains: “The bottlenecks are not typing speed. They are design reviews, PR queues, test failures, context switching, and waiting on deployments.”

Optimizing code generation by 30-40% without addressing downstream bottlenecks in review, testing, and deployment doesn’t improve end-to-end delivery. It shifts the bottleneck downstream and can actually slow overall throughput. LinearB data confirms the pattern: AI PRs aren’t just slower to reach review (4.6x wait time). They are also larger (18% more additions), cause more incidents per PR (up 24%), and have higher change failure rates (up 30%).

Fast code generation overwhelms review capacity, creating pipeline congestion. Individual developers feel faster because they type less, but sprint velocity stays flat because the system bottleneck moved from “writing code” to “verifying AI code.” Companies need to invest in review pipeline capacity, automated testing infrastructure, and deployment automation to realize AI coding gains—otherwise those gains evaporate in downstream queues.
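The bottleneck argument can be made concrete with a toy model in which end-to-end throughput equals the capacity of the slowest stage. The sketch below is an illustration under stated assumptions: the stage names and weekly capacities are hypothetical numbers chosen for the example, not figures from LinearB or any other source cited here.

```python
def pipeline_throughput(stage_capacity: dict[str, float]) -> tuple[str, float]:
    """Return the constraining stage and the throughput it allows.

    Simplifying assumption: stages run in series, so the pipeline can
    deliver no more than its slowest stage can process.
    """
    bottleneck = min(stage_capacity, key=stage_capacity.get)
    return bottleneck, stage_capacity[bottleneck]


# Hypothetical capacities in PRs per week (illustrative only).
before = {"coding": 20, "review": 22, "testing": 30, "deploy": 40}
# Assume AI makes code generation 35% faster and changes nothing else.
after = dict(before, coding=before["coding"] * 1.35)

for label, stages in (("before", before), ("after", after)):
    stage, rate = pipeline_throughput(stages)
    print(f"{label}: bottleneck={stage}, throughput={rate} PRs/week")
```

In this toy setup a 35% gain in coding capacity raises delivery from 20 to 22 PRs per week, a 10% improvement, because review becomes the new constraint. Raising review capacity is what unlocks the rest of the gain.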

What to Track Instead: Outcome Metrics Over Vanity Metrics

The DX Core 4 framework and AI Measurement Framework provide a path beyond the vanity metrics trap. Instead of tracking lines of code or suggestions accepted, engineering leaders should measure three dimensions: Utilization (how developers adopt AI), Impact (effects on productivity, quality, developer experience), and Cost (whether AI spending delivers positive ROI).

Recommended metrics focus on outcomes: cycle time, deployment frequency, change failure rate, code review time, and PR throughput. The framework suggests a measurement cadence of adoption weekly, delivery monthly, and ROI quarterly. Best-performing teams track whether AI actually reduces time-to-production and maintains code quality, rather than just counting AI-generated lines.
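As a rough illustration of how three of these outcome metrics could be computed from deployment records, here is a minimal sketch. The `Deployment` record, its fields, and the sample data are assumptions made for this example; they do not come from the DX Core 4 framework or any specific tool, and real definitions of cycle time and change failure rate vary between teams.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    """Hypothetical record of one change reaching production."""
    first_commit_at: datetime
    deployed_at: datetime
    caused_incident: bool


def outcome_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute cycle time, deployment frequency, and change failure rate."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    cycle_hours = [
        (d.deployed_at - d.first_commit_at).total_seconds() / 3600
        for d in deployments
    ]
    failures = sum(d.caused_incident for d in deployments)
    return {
        "median_cycle_time_hours": median(cycle_hours),
        "deployments_per_week": len(deployments) * 7 / period_days,
        "change_failure_rate": failures / len(deployments),
    }


# Made-up sample: four deployments over a 14-day window.
start = datetime(2026, 1, 5, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=24), False),
    Deployment(start, start + timedelta(hours=48), False),
    Deployment(start, start + timedelta(hours=72), True),
    Deployment(start, start + timedelta(hours=96), False),
]
metrics = outcome_metrics(sample, period_days=14)
print(metrics)
```

Computing the same numbers separately for AI-assisted and manual changes, over the same period, is one way to see whether AI shortens time-to-production without raising the failure rate.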

This shift from vanity metrics (easy to game, feel-good) to outcome metrics (hard to game, business-aligned) is essential for realistic ROI assessment. It also helps teams identify where AI provides value versus where it creates overhead. High-ROI use cases include debugging (clear problem context, verifiable solutions), refactoring (existing code as reference, automated tests verify), and test generation (spec-driven, automated validation). Low-ROI use cases include new feature generation (requires most verification, highest security risk) and complex business logic (high verification overhead).

Key Takeaways

- The AI coding productivity paradox exists across three dimensions: Trust Gap (96% don’t fully trust AI code but only 48% always verify it), Acceptance Gap (46% generated but 30% accepted, 32.7% PR acceptance vs 84.4% manual), and Organizational Gap (downstream bottlenecks erase individual gains)

- Vendor productivity claims (50-100%) diverge massively from measured organizational reality (5-15% ROI) because individual coding velocity doesn’t equal organizational delivery velocity

- Experienced developers can be 19% slower with AI tools due to verification overhead, and self-reported productivity gains are unreliable when perception gaps exist

- Fast code generation without addressing downstream bottlenecks (review, testing, deployment) shifts the constraint instead of eliminating it: AI PRs wait 4.6x longer, are 18% larger, and are accepted at 32.7% versus 84.4% for manual PRs

- Track outcome metrics (DORA: cycle time, deployment frequency, change failure rate) rather than vanity metrics (lines generated, suggestions accepted), using the DX Core 4 framework with a measurement cadence of adoption weekly, delivery monthly, and ROI quarterly

The path forward isn’t abandoning AI coding tools—it’s building verification systems, investing in downstream pipeline capacity, and measuring what actually matters. Individual velocity gains are real, but organizational delivery improvements require addressing the full software development system, not just the code generation stage.