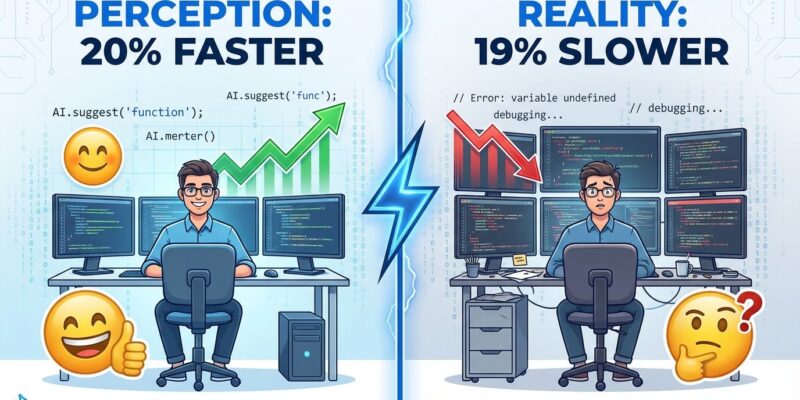

AI coding tools promised to transform developer productivity. GitHub reports that developers using Copilot completed a coding task 55% faster. Cursor touts 2x improvements over competitors. However, a rigorous study published in July 2025 by METR (Model Evaluation & Threat Research) found the opposite: experienced developers using AI tools like Cursor Pro with Claude Sonnet were 19% slower than when working without AI. Here’s the paradox that should concern every tech leader: before the study, developers predicted AI would make them 24% faster. After being measurably slowed down, they still believed AI had boosted their productivity by 20%. The Emperor has no clothes, and nobody’s noticing.

The Perception-Reality Gap Nobody’s Talking About

The METR study recruited 16 experienced open-source developers and had them complete 246 real-world tasks on repositories they’d contributed to for years—codebases averaging over 1 million lines of code and 22,000 GitHub stars. Tasks were randomly assigned to allow or disallow AI tools. When developers used Cursor Pro with Claude 3.5 and 3.7 Sonnet, they took 19% longer to complete tasks averaging two hours each.

Before starting, these developers forecast that AI would reduce their completion time by 24%. After finishing the study—despite being measurably slower—they estimated AI had still reduced their time by 20%. Even expert economists (who predicted 39% gains) and machine learning specialists (38% gains) got it wrong.
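To make the size of that gap concrete, here is the arithmetic for a single task. It assumes a hypothetical 120-minute baseline (close to the study’s two-hour average) and reads each reported percentage as a change in completion time; the variable and function names are illustrative.

```python
# Illustrative arithmetic only: the 120-minute baseline is a hypothetical
# task length chosen to match the study's "about two hours" average.
BASELINE_MINUTES = 120.0

# Fractional changes in completion time (negative = faster, positive = slower).
FORECAST_CHANGE = -0.24   # developers' prediction before the study
PERCEIVED_CHANGE = -0.20  # developers' estimate after the study
MEASURED_CHANGE = 0.19    # measured effect: tasks took 19% longer


def minutes_with_ai(baseline: float, change: float) -> float:
    """Apply a fractional change in completion time to a baseline duration."""
    return baseline * (1.0 + change)


forecast = minutes_with_ai(BASELINE_MINUTES, FORECAST_CHANGE)
perceived = minutes_with_ai(BASELINE_MINUTES, PERCEIVED_CHANGE)
measured = minutes_with_ai(BASELINE_MINUTES, MEASURED_CHANGE)

print(f"forecast:       {forecast:.1f} min")
print(f"perceived:      {perceived:.1f} min")
print(f"measured:       {measured:.1f} min")
print(f"perception gap: {measured - perceived:.1f} min per task")
```

On this hypothetical task, participants would have expected about 91 minutes, felt it took about 96, and actually spent about 143: a gap of roughly 47 minutes between perception and the clock.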

This isn’t just inaccurate self-reporting. It’s a measurement crisis. If developers can’t accurately gauge their own productivity, they’ll optimize for what feels good instead of what works. Google’s 2024 DORA report points the same way at scale: 75% of developers feel more productive with AI, yet its data associates every 25% increase in AI adoption with an estimated 1.5% drop in delivery throughput and a 7.2% drop in delivery stability.

Meanwhile, companies are making hiring decisions, budget allocations, and investment bets worth hundreds of billions based on productivity gains that, at least for experienced developers on complex codebases, the evidence doesn’t support. That’s not just problematic; it’s dangerous.

Code Quality Is Cratering While Everyone Celebrates

Code churn, meaning code that gets rewritten or reverted within two weeks of being written, is a leading indicator of quality problems. Since AI assistants became prevalent, code churn has nearly doubled. Furthermore, GitClear’s analysis of 211 million changed lines of code documented an 8-fold increase in duplicated code blocks during 2024. Developers are copying and pasting more than ever, and refactoring less.
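For teams that want to watch these signals in their own repositories, the two metrics can be sketched roughly as follows. This is a simplified illustration, not GitClear’s methodology: it assumes you can already reconstruct from version-control history when each line was written and when it was next changed, and `LineRecord`, `churn_rate`, `duplicated_blocks`, and the five-line block size are all hypothetical choices.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# "Rewritten within two weeks" expressed as a concrete window (an assumption).
CHURN_WINDOW = timedelta(days=14)


@dataclass
class LineRecord:
    """Hypothetical per-line history: when a line was written and, if it
    was later modified or deleted, when that first happened."""
    authored_at: datetime
    next_changed_at: Optional[datetime] = None


def churn_rate(lines: list[LineRecord], window: timedelta = CHURN_WINDOW) -> float:
    """Fraction of authored lines that were modified or deleted within `window`."""
    if not lines:
        return 0.0
    churned = sum(
        1
        for line in lines
        if line.next_changed_at is not None
        and line.next_changed_at - line.authored_at <= window
    )
    return churned / len(lines)


def duplicated_blocks(source: str, block_size: int = 5) -> int:
    """Count windows of `block_size` consecutive non-blank lines that repeat
    an earlier window in the same text, ignoring surrounding whitespace."""
    lines = [ln.strip() for ln in source.splitlines() if ln.strip()]
    seen: set[tuple[str, ...]] = set()
    repeats = 0
    for i in range(len(lines) - block_size + 1):
        window = tuple(lines[i : i + block_size])
        if window in seen:
            repeats += 1
        else:
            seen.add(window)
    return repeats


# Example with made-up timestamps.
t0 = datetime(2025, 1, 1)
history = [
    LineRecord(t0, t0 + timedelta(days=3)),   # rewritten after 3 days: churn
    LineRecord(t0, t0 + timedelta(days=30)),  # changed a month later: not churn
    LineRecord(t0),                           # never touched again
    LineRecord(t0, t0 + timedelta(days=14)),  # exactly two weeks: counted
]
print(churn_rate(history))  # 0.5
```

Tracked over time, a rising churn rate or duplicate count is the kind of early warning the figures above describe; the absolute numbers depend heavily on how lines are matched across commits.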

Stack Overflow’s 2025 Developer Survey of over 49,000 respondents validates what the churn data suggests: 66% named AI solutions that are “almost right, but not quite” as their biggest frustration. That “almost” is expensive: 45% report that debugging AI-generated code is more time-consuming than writing it themselves.

The METR study found that developers accepted fewer than 44% of AI code suggestions. Three-quarters reviewed every single line of AI output, and 56% made major modifications to clean up what the AI generated. That’s not assistance—that’s overhead.

Here’s the uncomfortable truth: short-term “speed” is creating long-term technical debt. The sheer volume of code now being churned out is saturating code review capacity. Maintainability is taking a backseat to velocity, and the bill will come due when teams try to modify this hastily generated code six months from now.

The Experience Paradox: Experts Hurt Most

The AI coding productivity gap isn’t evenly distributed. Junior developers see legitimate gains—studies report 26-39% productivity improvements for less experienced developers. However, the METR study focused on experienced developers with an average of five years contributing to their specific codebases, and those developers were 19% slower.

This makes sense once you look at where the time goes. With AI, experienced developers spend less time actively coding and more time prompting, waiting for responses, reviewing output, and fixing mistakes. They know when the AI is wrong and lose time correcting it. Context switching also breaks their flow state, the deep concentration where the best code gets written.

AI struggles with complex codebases that have intricate dependencies. It works reasonably well on small, simple projects and generic problems. But on the million-line repositories where experienced developers work daily, the AI lacks the contextual understanding these experts have built over years.

Stack Overflow’s data shows experienced developers are the most skeptical: only 2.6% highly trust AI output, while 20% highly distrust it. They’re not being stubborn. They’re being accurate.

This creates a perverse situation: companies might reduce senior engineering headcount expecting AI to fill the gap, while the data suggests senior expertise is more valuable than ever. Vendor claims about productivity gains may well rest on junior developer metrics or cherry-picked simple tasks. When Cursor claims “2x improvement,” remember that METR tested Cursor Pro specifically and found the opposite for experienced developers.

The Trust-Usage Disconnect

Here’s the paradox within the paradox: in Stack Overflow’s 2025 survey, 84% of developers are using or planning to use AI coding tools, up from 76% in 2024. Yet 46% actively distrust the accuracy of these tools, and only 3% “highly trust” AI output. Positive sentiment has declined from over 70% in 2023 and 2024 to just 60% in 2025.

Developers aren’t using AI because they trust it. Instead, many seem to use it because they feel they have to: peer pressure, management expectations, fear of being left behind. When asked why they’d still consult a human even with advanced AI, 75% said “when I don’t trust AI’s answers.” About 35% of Stack Overflow visits now result from AI-related issues. The tools meant to reduce the need for help are creating new categories of problems.

This is industry momentum without user confidence. Adoption metrics look impressive in vendor presentations but mask declining trust and satisfaction. When developers use tools they don’t believe in, something’s broken.

What Actually Works: Selective Deployment Over Blanket Adoption

AI coding tools aren’t universally bad. They’re context-dependent. Developers report saving 30-60% of their time on specific tasks: boilerplate generation, documentation, test writing, and rapid prototyping. When the task is repetitive and the pattern is well-established, AI excels.

However, AI struggles with complex business logic, security-sensitive code, performance-critical sections, and novel problem-solving. On large, mature codebases, exactly where experienced developers spend their time, AI can become a liability rather than an asset.

The practical takeaway from this research is clear: deploy AI tools selectively. Use them where they excel (documentation, boilerplate, testing) and limit their use where developer expertise and codebase familiarity provide the advantage (architecture decisions, complex logic, security-critical code).
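A team could encode that split as an explicit policy instead of leaving it to individual habit. The sketch below is a hypothetical lookup table: the task categories come from the paragraphs above, while `AIUsage`, `TASK_POLICY`, and `ai_usage_for` are illustrative names, not part of any tool.

```python
from enum import Enum


class AIUsage(Enum):
    ENCOURAGED = "encouraged"  # use AI freely, then review the output
    LIMITED = "limited"        # expert-led; AI kept to a minimum


# Hypothetical team policy mirroring the split described above.
# Category names are illustrative, not a standard taxonomy.
TASK_POLICY: dict[str, AIUsage] = {
    "documentation": AIUsage.ENCOURAGED,
    "boilerplate": AIUsage.ENCOURAGED,
    "tests": AIUsage.ENCOURAGED,
    "prototype": AIUsage.ENCOURAGED,
    "architecture": AIUsage.LIMITED,
    "complex_logic": AIUsage.LIMITED,
    "security": AIUsage.LIMITED,
    "performance": AIUsage.LIMITED,
}


def ai_usage_for(task_type: str) -> AIUsage:
    """Return the policy for a task type, defaulting to LIMITED when unknown."""
    return TASK_POLICY.get(task_type, AIUsage.LIMITED)


print(ai_usage_for("tests"))           # AIUsage.ENCOURAGED
print(ai_usage_for("data_migration"))  # AIUsage.LIMITED
```

Defaulting unknown task types to `LIMITED` is a deliberate choice: new kinds of work get expert judgment until someone decides they are routine.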

Best practice for 2025-2026 starts with measuring objectively instead of trusting perception. Track actual task completion time, code churn rates, delivery speed, and system stability, not how productive developers feel. Maintain rigorous code review (three-quarters of METR’s participants reviewed every line of AI output; keep doing it). Deploy tools selectively based on task type rather than adopting blanket AI-everywhere strategies.
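Tracking completion time objectively can start very simply. The sketch below compares typical task durations with and without AI using geometric means, which suit percentage comparisons and are less distorted by a few very long tasks than arithmetic means. It assumes tasks are randomly assigned to each condition, as in the METR study, and that durations are logged from task start to merged change; the function name and sample numbers are hypothetical.

```python
from statistics import geometric_mean


def percent_time_change(with_ai: list[float], without_ai: list[float]) -> float:
    """Percent change in typical completion time when AI is allowed.

    Positive means tasks took longer with AI. Only meaningful if tasks were
    randomly assigned to the two conditions, so that task difficulty is
    balanced between them on average."""
    ratio = geometric_mean(with_ai) / geometric_mean(without_ai)
    return (ratio - 1.0) * 100.0


# Made-up durations in minutes, logged from task start to merged change.
without_ai = [60.0, 120.0, 90.0]
with_ai = [66.0, 132.0, 99.0]
print(f"{percent_time_change(with_ai, without_ai):+.1f}%")  # +10.0%
```

With these made-up numbers every AI-assisted task took 10% longer, so the function reports +10.0%. A real analysis would need many more tasks and some treatment of noise; this sketch only shows the shape of the measurement.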

Treat AI as an assistant, not autopilot. Context-first prompting works better than jumping straight to “write code for X”—give the AI detailed context like you would a new teammate. Run linters and static analyzers on all AI output. Be extra vigilant on security-sensitive code, where AI can introduce subtle flaws like unsafe SQL queries or weak password hashing.
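The two flaws named above are easy to show side by side. This sketch uses only Python’s standard library (`sqlite3`, `hashlib`, `hmac`); the table, the function names, and the 600,000-iteration work factor are illustrative assumptions, so check current password-storage guidance before choosing real parameters. Dedicated algorithms such as Argon2 or bcrypt, available through third-party packages, are common alternatives to PBKDF2.

```python
import hashlib
import hmac
import os
import sqlite3

# Throwaway in-memory database for the demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user_unsafe(name: str) -> list[tuple]:
    # UNSAFE: input is pasted into the SQL text, so a crafted value can
    # rewrite the query (SQL injection).
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str) -> list[tuple]:
    # SAFE: the ? placeholder passes `name` as a bound value, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


def hash_password_weak(password: str) -> str:
    # WEAK: one fast, unsalted hash is cheap to brute-force; shown only as
    # the kind of output to reject in review.
    return hashlib.md5(password.encode()).hexdigest()


ITERATIONS = 600_000  # illustrative work factor; check current guidance


def hash_password(password: str, iterations: int = ITERATIONS) -> tuple[bytes, bytes]:
    # Stronger: random per-user salt plus a deliberately slow key derivation.
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes,
                    iterations: int = ITERATIONS) -> bool:
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return hmac.compare_digest(candidate, digest)  # constant-time comparison


payload = "x' OR '1'='1"
print(find_user_unsafe(payload))  # [('alice',), ('bob',)]: every row leaks
print(find_user_safe(payload))    # []: the payload is just an unknown name
```

The unsafe query returns every row because the payload rewrites the `WHERE` clause; the parameterized version treats the same string as an ordinary, nonexistent name. These are exactly the patterns worth flagging in review and with static analysis.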

The developers who thrive in 2026 won’t be those who generate the most lines of AI code. They’ll be those who know when to trust AI, when to question it, and how to deploy it strategically where it actually helps.

The Bottom Line

The AI coding productivity revolution isn’t happening the way vendors claim. For experienced developers on complex codebases, the exact scenario where productivity gains matter most, METR found that AI tools slowed development by 19%, while a perception gap made self-reported productivity nearly useless as a measure.

Code quality is declining (churn nearly doubled, duplicated code blocks up 8-fold) while everyone celebrates feeling productive. Companies are making hiring and budget decisions based on productivity assumptions contradicted by rigorous research. The industry is optimizing for perception over reality.

This doesn’t mean AI coding tools are worthless. It means the blanket claims of universal productivity gains are wrong. Context matters. Experience level matters. Task complexity matters. Codebase size matters.

Measure outcomes, not feelings. Deploy selectively, not universally. Review rigorously, not blindly. And remember: if 16 experienced developers can’t accurately measure their own productivity in a controlled study, neither can you without objective metrics.

The productivity gains you’re betting on might be the productivity losses you’re experiencing.