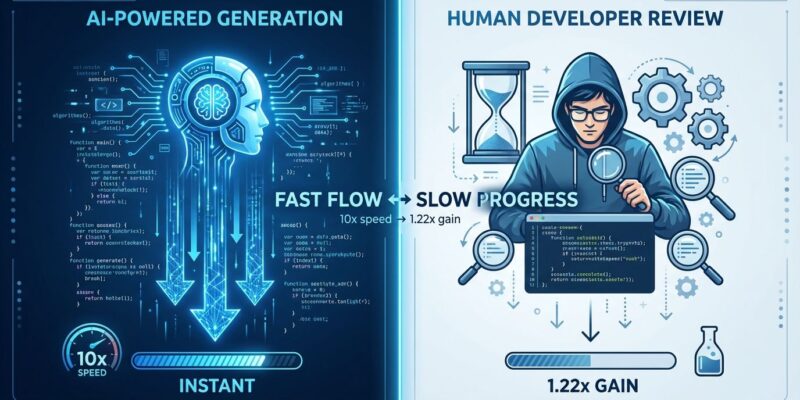

AI coding assistants have created a productivity paradox: while they generate code 10x faster, overall engineering velocity gains hover around 10-20%. Sonar’s 2026 State of Code survey of 1,100+ developers reveals why—96% don’t fully trust the functional accuracy of AI-generated code. The bottleneck has shifted from writing code to verifying it, and that verification workload is now the slowest link in the software delivery chain.

This fundamentally changes how developers work and what skills matter most. Teams generating 30% of code with AI are seeing only 10% velocity improvements because verification—code review, testing, debugging—can’t keep pace with generation speed.

The Trust Gap: 96% Don’t Trust AI Code

Sonar’s 2026 survey found 96% of developers don’t fully trust the accuracy of AI-generated code. Stack Overflow’s 2025 survey points the same way: developer trust in AI accuracy dropped from 43% in 2024 to just 33% in 2025. More developers actively distrust the accuracy of AI tools (46%) than trust it (33%), and only 3% “highly trust” AI output.

The biggest developer frustration—cited by 66%—is dealing with “AI solutions that are almost right, but not quite.” The second-biggest frustration (45%) is that debugging AI-generated code takes more time than expected. This creates a verification burden: developers must carefully review every suggestion rather than trusting the output.

The trust gap isn’t just perception; it directly affects workflow velocity. If developers could trust AI code, they could merge it quickly. Instead, PR review time rises 91% on high-adoption teams (Faros AI data) as developers verify what AI generated. Trust isn’t a soft problem: it’s the root cause of the verification bottleneck.

The Bottleneck Shift: From Creation to Verification

Faros AI research shows that teams with high AI adoption complete 21% more individual tasks and merge 98% more pull requests. But PR review time increases 91%. The bottleneck moved from writing code to verifying it.

As Sonar CEO Tariq Shaukat notes, “The burden of work has moved from creation to verification and debugging. That verification workload is the bottleneck—and the risk zone where subtle bugs and vulnerabilities accumulate.” Organizations seeing 30%+ AI-generated code report only 10% velocity gains. PR sizes increase 154% and bug rates rise 9% per developer. Traditional code review workflows weren’t designed for AI-generated code patterns: larger pull requests, unfamiliar code structures, and subtle bugs that are harder to trace.

The productivity paradox in action: AI makes one part of development blazingly fast while simultaneously creating bottlenecks elsewhere. Speed gains in generation evaporate at the verification stage.

Why 10x Coding Speed Doesn’t Mean 10x Delivery

Amdahl’s Law explains why making coding 10x faster yields only about a 1.22x overall improvement when coding is 20% of the work. Coding represents just 20-30% of the software development value stream. AWS data shows engineers spend only about 1 hour per day actually writing code, 12.5% of an eight-hour workday. The rest goes to planning, design, code reviews, meetings, debugging, and testing: activities AI doesn’t accelerate and sometimes slows down.

The math is brutal. If coding is 20% of work and AI makes it 10x faster, the speedup is 1 / [(1 – 0.2) + (0.2 / 10)] = 1 / 0.82 ≈ 1.22x. Even if coding took zero time, the theoretical maximum would be only 1 / 0.8 = 1.25x (coding at 20%) to 1 / 0.7 ≈ 1.43x (coding at 30%), because the remaining 70-80% of development work is unchanged.
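The calculation above is Amdahl’s Law applied to the development workflow. The minimal Python sketch below reproduces the figures. The function names are illustrative, and the 20% and 30% coding shares are simply the article’s own range, not measured inputs.

```python
def amdahl_speedup(fraction: float, factor: float) -> float:
    """Overall speedup when `fraction` of the work is made `factor` times faster."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    if factor <= 0:
        raise ValueError("factor must be positive")
    # Unaccelerated share stays the same; accelerated share shrinks by `factor`.
    return 1.0 / ((1.0 - fraction) + fraction / factor)


def amdahl_ceiling(fraction: float) -> float:
    """Limit as factor -> infinity, i.e. the accelerated share takes zero time."""
    if not 0.0 <= fraction < 1.0:
        raise ValueError("fraction must be in [0, 1)")
    return 1.0 / (1.0 - fraction)


if __name__ == "__main__":
    for share in (0.2, 0.3):
        print(f"coding share {share:.0%}: "
              f"10x coding -> {amdahl_speedup(share, 10):.2f}x overall, "
              f"ceiling {amdahl_ceiling(share):.2f}x")
    # coding share 20%: 10x coding -> 1.22x overall, ceiling 1.25x
    # coding share 30%: 10x coding -> 1.37x overall, ceiling 1.43x
```

The ceiling function makes the point of the paragraph explicit: no matter how large `factor` grows, the overall speedup can never exceed 1 / (1 – fraction), so the unaccelerated 70-80% of the work sets the limit.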

A METR randomized controlled trial found that developers using AI actually took 19% longer to complete tasks, yet believed they had worked 20% faster: a 39-percentage-point perception gap. Speed of code generation feels like productivity, but deployment velocity tells the real story. A system moves only as fast as its slowest link.

Solutions: Automated Verification and “Vibe, Then Verify”

Organizations are addressing the verification bottleneck with automated code quality tools that scale review capacity to match AI generation speed. Sonar, Qodo (formerly Codium), CodeRabbit, Codacy, and DeepSource offer automated verification workflows—static analysis, security scanning, 40+ linters, quality gates—that catch issues before human review.

This “vibe, then verify” approach lets developers accept AI suggestions intuitively, then validate them systematically through automation. These tools target a projected 40% quality deficit for 2026, in which more AI-generated code enters pipelines than reviewers can validate with confidence. Qodo provides 15+ agentic workflows specifically for AI code verification, and SonarQube has launched “AI Code Assurance” features. These platforms integrate into CI/CD to automate first-pass verification, freeing human reviewers to focus on architecture and business logic rather than syntax and security.
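To make “first-pass verification” concrete, here is a minimal Python sketch of a CI quality gate that blocks a pull request on automated findings before it reaches a human reviewer. It is not the API of Sonar, Qodo, or any other tool named above: `AnalysisReport`, `GateConfig`, `evaluate_gate`, `route_pull_request`, and every threshold are hypothetical, chosen only to illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass
class AnalysisReport:
    """Hypothetical summary of automated findings on a pull request's new code."""
    new_bugs: int
    new_vulnerabilities: int
    coverage_on_new_code: float   # percent of new lines covered by tests
    duplicated_lines_pct: float   # percent of new lines duplicating existing code


@dataclass
class GateConfig:
    """Illustrative thresholds only; real tools define their own gate conditions."""
    max_new_bugs: int = 0
    max_new_vulnerabilities: int = 0
    min_coverage_on_new_code: float = 80.0
    max_duplicated_lines_pct: float = 3.0


def evaluate_gate(report: AnalysisReport, config: GateConfig) -> list[str]:
    """Return every failed condition; an empty list means the gate passes."""
    failures: list[str] = []
    if report.new_bugs > config.max_new_bugs:
        failures.append(f"{report.new_bugs} new bug(s)")
    if report.new_vulnerabilities > config.max_new_vulnerabilities:
        failures.append(f"{report.new_vulnerabilities} new vulnerability(ies)")
    if report.coverage_on_new_code < config.min_coverage_on_new_code:
        failures.append(
            f"coverage on new code {report.coverage_on_new_code:.1f}% "
            f"< {config.min_coverage_on_new_code:.1f}%"
        )
    if report.duplicated_lines_pct > config.max_duplicated_lines_pct:
        failures.append(
            f"duplicated lines {report.duplicated_lines_pct:.1f}% "
            f"> {config.max_duplicated_lines_pct:.1f}%"
        )
    return failures


def route_pull_request(report: AnalysisReport, config: GateConfig) -> str:
    """First-pass routing: only PRs that pass automated checks reach a human."""
    failures = evaluate_gate(report, config)
    if failures:
        return "blocked: " + "; ".join(failures)
    return "ready for human review"


if __name__ == "__main__":
    config = GateConfig()
    clean = AnalysisReport(new_bugs=0, new_vulnerabilities=0,
                           coverage_on_new_code=86.0, duplicated_lines_pct=1.2)
    risky = AnalysisReport(new_bugs=1, new_vulnerabilities=0,
                           coverage_on_new_code=72.5, duplicated_lines_pct=1.2)
    print(route_pull_request(clean, config))  # ready for human review
    print(route_pull_request(risky, config))
    # blocked: 1 new bug(s); coverage on new code 72.5% < 80.0%
```

Returning the full list of failed conditions, rather than stopping at the first, gives the author everything to fix in one pass, which spares human reviewers repeated round trips on code the automation could already have rejected.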

Without automated verification, the bottleneck worsens as AI adoption grows. Teams can’t hire enough senior developers to review 98% more PRs that are 154% larger. Automation is becoming essential infrastructure for AI-augmented development—not optional tooling, but necessary capacity to match generation speed.
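A back-of-envelope calculation shows why hiring alone can’t close the gap. The Python sketch below multiplies the two Faros AI figures, assuming (as a simplification, not something the data establishes) that review effort scales linearly with the number of PRs times their size. The function name is illustrative.

```python
def relative_review_volume(pr_count_growth: float, pr_size_growth: float) -> float:
    """Review volume relative to baseline.

    ASSUMPTION: review effort scales with (number of PRs) x (size per PR).
    Growth figures are fractions: 0.98 means "98% more".
    """
    return (1.0 + pr_count_growth) * (1.0 + pr_size_growth)


if __name__ == "__main__":
    volume = relative_review_volume(pr_count_growth=0.98, pr_size_growth=1.54)
    print(f"~{volume:.2f}x the baseline volume of code under review")  # ~5.03x
```

Under that assumption, 98% more PRs that are each 154% larger means roughly five times as much code to review, a load no realistic hiring plan for senior reviewers can absorb.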

What This Means for Developers

JetBrains’ 2025 survey shows that 68% of developers expect AI proficiency to become a job requirement, and 74% of senior developers anticipate moving from hands-on coding toward designing technical solutions and strategy. The skill shift is from “how do I write this code?” to “how do I verify this code is correct, secure, and maintainable?”

The economics are changing. AI makes code generation nearly free (time-wise), so competitive advantage shifts to verification effectiveness. Teams that verify faster without sacrificing quality will ship faster. This means skills in code review, testing strategies, security analysis, and architecture become more valuable than raw coding speed.

For individual developers, this signals a career shift. Getting faster at writing code matters less when AI already writes it 10x faster. Getting better at evaluating code quality, spotting subtle bugs, designing robust architectures, and making trade-off decisions—these skills determine who adds value in an AI-augmented team. Verification expertise is the new differentiator.

Key Takeaways

- 96% of developers don’t fully trust AI-generated code, creating a verification bottleneck that limits productivity gains to 10-20% despite 10x faster code generation

- Amdahl’s Law limits the overall gain to about 1.22x when coding (20% of the work) gets 10x faster, and to at most 1.25-1.43x even if coding took zero time, because coding represents only 20-30% of the software development value stream; the rest (planning, review, testing, debugging) remains slow or gets slower

- Teams with high AI adoption merge 98% more pull requests that are 154% larger, while PR review time increases 91%, shifting the constraint from creation to verification

- Automated verification tools (Sonar, Qodo, CodeRabbit, Codacy) are becoming essential infrastructure to scale review capacity and match AI generation speed—without them, the bottleneck worsens

- Developer value is shifting from coding speed to verification expertise: code review skills, architecture design, security analysis, and quality assessment matter more than raw generation ability

The bottleneck moved. The teams that recognize this and invest in verification capacity—both automated tools and human expertise—will capture AI’s productivity gains. Those chasing generation speed alone will hit the review wall.