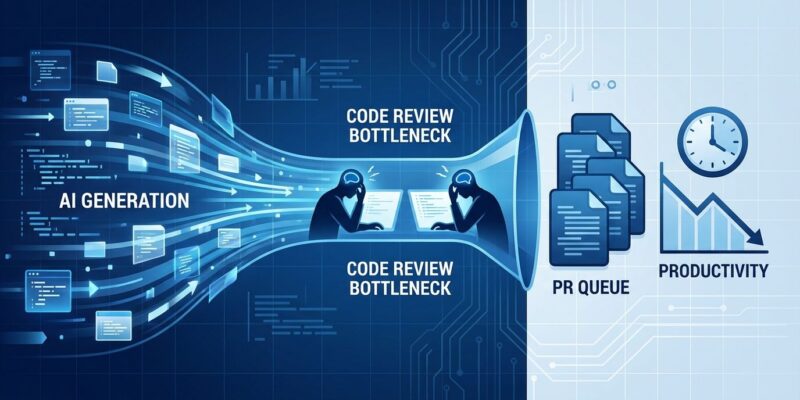

AI coding tools promised 10x faster development. They delivered—but created a crisis nobody saw coming. Faros AI’s analysis of 10,000+ developers found PR review time jumped 91% on AI-using teams. Meanwhile, Sonar’s January 2026 survey revealed 96% of developers don’t fully trust AI-generated code—yet only 48% always check it before committing. The result? A 40% quality deficit where more code enters pipelines than reviewers can validate with confidence. AI’s speed advantage has become an organizational liability.

The 91% Review Time Paradox: More Output, Slower Delivery

Faros AI’s 2025 study exposed the productivity paradox. Developers using AI complete 21% more tasks and merge 98% more pull requests. However, PR review time increases 91%. The bottleneck shifted from writing code to proving it works.

The numbers tell the story. Teams that previously handled 10-15 PRs weekly now face 50-100. PRs are also 18% larger and touch multiple architectural surfaces. Of the 82 million monthly code pushes in 2025, 41% were AI-assisted. Traditional workflows assumed code writing and review took roughly equal time. AI shattered that assumption: features that take 2 hours to generate require 4 hours to review.

Review capacity, not coding speed, now defines engineering velocity. As a result, senior engineers spend more time validating AI logic than shaping system design. Growing merge queues and cross-repo regressions slow teams despite faster coding. This is where the 40% quality deficit emerges: AI generates 10x faster, but productivity improves only 10-20% because human review can’t scale.
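One way to see why a 10x coding speedup can shrink to a 10-20% overall gain is an Amdahl-style estimate: only the share of the delivery cycle spent writing code gets faster, while review and everything else stays the same. The sketch below is illustrative only. The function name and the coding-share values (10%, 15%, 20%) are assumptions chosen for the example, not figures from the studies cited here.

```python
def overall_speedup(coding_fraction: float, coding_speedup: float) -> float:
    """Amdahl-style estimate: only the coding share of the cycle gets faster.

    coding_fraction: share of end-to-end delivery time spent writing code
                     (an assumed input, not a measured value).
    coding_speedup:  how much faster that share becomes (e.g. 10.0 for "10x").
    """
    if not 0.0 <= coding_fraction <= 1.0:
        raise ValueError("coding_fraction must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    # Time left after the speedup, as a fraction of the original cycle time.
    remaining = (1.0 - coding_fraction) + coding_fraction / coding_speedup
    return 1.0 / remaining


if __name__ == "__main__":
    # Hypothetical coding shares; review, CI, and coordination fill the rest.
    for fraction in (0.10, 0.15, 0.20):
        gain = (overall_speedup(fraction, 10.0) - 1.0) * 100
        print(f"coding = {fraction:.0%} of cycle -> about {gain:.0f}% faster overall")
```

Under these assumed shares, the overall gain stays in roughly the 10-20% range even though code generation itself is 10x faster, which is the shape of the gap described above.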

The Trust-Verification Gap: 96% Doubt, 48% Verify

Sonar’s January 2026 survey of 1,100+ developers exposed a dangerous mismatch: 96% don’t fully trust the functional accuracy of AI-generated code, yet fewer than half always check it before committing. Sonar CEO Tariq Shaukat called it “a critical trust gap between output and deployment.”

AI already accounts for 42% of all committed code, a share projected to reach 65% by 2027, and 72% of AI-using developers use it daily. That’s mass adoption meeting mass mistrust. The gap widens further when you realize 35% access AI via personal accounts, not work-sanctioned tools. Junior developers report 40% productivity gains but also say reviewing AI code requires more effort than reviewing senior colleagues’ work.

Here’s the danger: untrusted code reaching production because review processes can’t keep up. When only 48% always verify AI code and 65% of the codebase is projected to be AI-generated by 2027, the quality deficit isn’t a future problem. It’s already here.

AI Code Quality: 1.7x More Issues Than Human Code

The volume problem is bad enough. The quality problem makes it worse. CodeRabbit’s December 2025 analysis of 470 GitHub pull requests found AI-generated code produces 1.7x more issues: 10.83 issues per PR versus 6.45 for human code.

The breakdown is concerning. Logic and correctness errors rise 75% (1.75x the human rate): business logic flaws, misconfigurations, unsafe control flow. Security vulnerabilities jump 1.5-2x, especially around password handling and insecure object references. Code readability problems triple. Performance inefficiencies, particularly excessive I/O operations, appear 8x more frequently. Nearly half (45%) of AI-generated code contains security vulnerabilities.

Reviewers aren’t just handling more volume. They’re handling worse code. The quality tax compounds the velocity problem. Traditional code review workflows, designed for human-paced output, collapse under 3-5x the volume of 1.7x buggier code. AI generates code faster, but reviewers must spend extra time catching logic errors, security flaws, and performance issues that human developers typically avoid.

Code Review Is Now the #3 Contributor to Developer Burnout

GitLab’s developer survey identified code reviews as the third top contributor to developer burnout, after long work hours and tight deadlines. Haystack Analytics found 83% of software developers have experienced burnout, with code review overload as a major component of the 31% citing “inefficient processes.”

The impact is measurable: 52% of developers feel blocked or slowed by inefficient reviews, and developers dissatisfied with code review are 2.6x more likely to seek new jobs. The typical review takes 2-4 business days to complete. By that time, the developer has context-switched to other work, and revisiting a 4-day-old PR requires significant cognitive effort. Many developers handle reviews in post-work hours to preserve coding time, fueling the always-on culture that drives burnout.

The value paradox stings: 60% say reviews are “very valuable” for quality and security, but the process causes friction, delays, and turnover. AI was supposed to free developers from tedious work. Instead, it created a different grind: endless review of unfamiliar, buggier code. Teams can’t hire their way out, because review capacity doesn’t scale linearly with headcount.

DORA 2024 Data: AI Adoption Hurt Delivery Metrics

Google’s DORA 2024 report delivered a counterintuitive finding. As AI adoption increased among teams (75.9% using AI), delivery throughput decreased 1.5% and delivery stability dropped 7.2%. Individual productivity gains didn’t translate to organizational improvement.

The split in metrics is revealing. Individual measures improved: code quality up 3.4%, code review speed up 3.1%. However, organizational measures declined: throughput down 1.5%, stability down 7.2%. The cause? Over-reliance on AI leads to larger, less manageable change lists. So while 75% report productivity gains, delivery metrics tell a different story.

This data challenges the AI productivity narrative. Organizations investing in AI tools saw individual developers move faster, but teams delivered slower and less reliably. The review bottleneck explains part of this: larger PRs and higher bug rates overwhelm review processes, creating delivery friction despite faster coding. The takeaway: AI adoption without addressing review capacity can degrade delivery performance.

What Engineering Teams Can Do About the Bottleneck

AI didn’t solve the productivity problem. It moved it. Review capacity is the new constraint, and the solution isn’t just more AI review tools: it requires rethinking quality gates entirely.

Addy Osmani, Chrome Engineering Lead, frames it directly: “AI writes code faster. Your job is still to prove it works.” Emerging practices focus on tiered review systems—automated checks for basic issues, AI-augmented review for pattern detection, human judgment for architecture and security. Differential review strategies apply deep scrutiny to critical code while auto-approving low-risk changes.
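A tiered, differential review policy like the one described above can be expressed as a small routing function. The following is a minimal sketch under stated assumptions: the `Change` fields, the path prefixes treated as critical, the file suffixes treated as low-risk, and the tier names are all hypothetical placeholders that a team would replace with its own rules.

```python
from dataclasses import dataclass

# Hypothetical policy values; every team would define its own.
CRITICAL_PREFIXES = ("auth/", "payments/", "infra/")
LOW_RISK_SUFFIXES = (".md", ".txt")


@dataclass
class Change:
    paths: list[str]      # files touched by the pull request
    checks_passed: bool   # result of automated checks (lint, tests, scanners)


def review_tier(change: Change) -> str:
    """Route a change to a review tier, strictest rule first."""
    # Tier 0: automated checks must pass before any reviewer time is spent.
    if not change.checks_passed:
        return "fix-automated-checks"
    # Critical code always gets deep human scrutiny.
    if any(path.startswith(CRITICAL_PREFIXES) for path in change.paths):
        return "human-deep-review"
    # Changes that only touch low-risk files can be auto-approved.
    if change.paths and all(path.endswith(LOW_RISK_SUFFIXES) for path in change.paths):
        return "auto-approve"
    # Everything else gets AI-augmented review for pattern detection.
    return "ai-assisted-review"


if __name__ == "__main__":
    print(review_tier(Change(paths=["docs/setup.md"], checks_passed=True)))
    print(review_tier(Change(paths=["auth/session.py"], checks_passed=True)))
    print(review_tier(Change(paths=["ui/button.py"], checks_passed=True)))
```

Ordering the rules from strictest to most permissive means a PR that mixes a documentation edit with a change under a critical path still lands in front of a human reviewer.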

PR size limits matter. Enforcing a maximum PR size (400 lines is a common threshold) keeps changes reviewable and maintains quality standards. Outcome-based verification, which proves code works through tests and live previews rather than line-by-line inspection, is also replacing traditional review for AI-generated code.
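A size limit is straightforward to enforce mechanically, for example as a CI step that inspects the PR's unified diff. The sketch below assumes that "size" means added plus removed lines, which is one possible definition rather than a standard. The function names are hypothetical, and only the 400-line default comes from the threshold mentioned above.

```python
import re

# Matches a unified-diff hunk header such as "@@ -1,3 +1,4 @@".
HUNK_RE = re.compile(r"^@@ -\d+(?:,(\d+))? \+\d+(?:,(\d+))? @@")


def count_changed_lines(diff_text: str) -> int:
    """Count added plus removed lines in a unified diff.

    Hunk headers say how many old/new lines follow, so file headers
    ("--- a/x", "+++ b/x") are never mistaken for changes.
    """
    changed = 0
    old_left = new_left = 0  # lines still expected in the current hunk
    for line in diff_text.splitlines():
        if old_left > 0 or new_left > 0:
            if line.startswith("+"):
                changed += 1
                new_left -= 1
            elif line.startswith("-"):
                changed += 1
                old_left -= 1
            elif line.startswith("\\"):
                continue  # "\ No newline at end of file" marker
            else:  # context line, present in both old and new versions
                old_left -= 1
                new_left -= 1
            continue
        match = HUNK_RE.match(line)
        if match:
            # An omitted count in a hunk header means 1.
            old_left = int(match.group(1)) if match.group(1) is not None else 1
            new_left = int(match.group(2)) if match.group(2) is not None else 1
    return changed


def pr_within_limit(diff_text: str, max_lines: int = 400) -> bool:
    """Return True if the diff stays at or under the size threshold."""
    return count_changed_lines(diff_text) <= max_lines
```

In practice a team might feed this the output of `git diff` between the base branch and the PR head, and exclude generated or lock files before counting.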

The fundamental shift: from “how fast can we code?” to “how fast can we prove it works?” Teams that address the review bottleneck will capture AI’s productivity gains. In contrast, teams that don’t will watch those gains evaporate into longer queues, more bugs, and burned-out engineers. The 40% quality deficit isn’t inevitable—it’s a choice about where to invest in the software delivery pipeline.