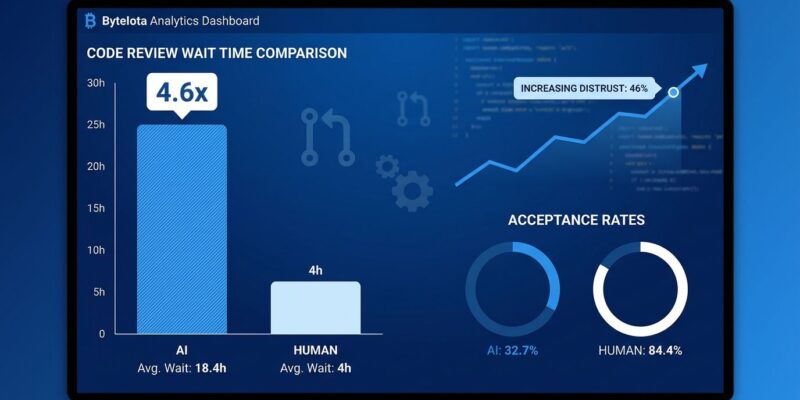

AI coding assistants promised to make developers faster. New data from LinearB tells a different story: AI-generated pull requests wait 4.6 times longer than human-written ones before review even begins. The finding comes from LinearB’s 2025 Software Engineering Benchmarks Report, which analyzed 8.1 million PRs across 4,800 engineering teams. The paradox gets stranger: once review begins, AI code is reviewed twice as fast. Yet its acceptance rate is just 32.7%, compared to 84.4% for manual PRs. With 84% of developers now using or planning to use AI tools, this review bottleneck is a hidden cost nearly every team is paying.

The Review Paradox: Fast to Review, Slow to Start

The numbers expose a critical bottleneck. AI-generated PRs sit in the queue 4.6 times longer before anyone touches them. When a reviewer finally picks one up, they move through it twice as fast as manual code. The bottleneck isn’t complexity; it’s trust.

Reviewers have learned from experience. They deprioritize AI PRs because the acceptance-rate gap tells the real story: 32.7% for AI versus 84.4% for human-written code, a 2.6x difference. In effect, teams are running a slower queue for AI PRs and reviewing human-written code first. The data even shows tool-specific patterns: Devin’s acceptance rates have been rising since April, while GitHub Copilot’s have been slipping since May.
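One way to see why reviewers discount AI PRs is to turn the two acceptance rates into review load. The sketch below is a simplification, not LinearB’s methodology. It assumes each submission is accepted independently with the reported probability and that rejected work comes back as a new PR. Under those assumptions, the number of PRs opened per merged change follows a geometric distribution with mean 1/p.

```python
# Acceptance rates reported in LinearB's benchmarks (from the text above).
AI_ACCEPTANCE = 0.327
MANUAL_ACCEPTANCE = 0.844


def expected_submissions_per_merge(acceptance_rate: float) -> float:
    """Expected PRs opened per merged change.

    Illustrative assumption: each submission is an independent trial that is
    accepted with probability `acceptance_rate`, and rejected work is
    resubmitted until one PR lands. Trials until first success are
    geometrically distributed with mean 1 / p.
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    return 1 / acceptance_rate


gap = MANUAL_ACCEPTANCE / AI_ACCEPTANCE
ai_load = expected_submissions_per_merge(AI_ACCEPTANCE)
manual_load = expected_submissions_per_merge(MANUAL_ACCEPTANCE)

print(f"acceptance gap: {gap:.1f}x")
print(f"AI PRs per merged change: {ai_load:.2f}")
print(f"manual PRs per merged change: {manual_load:.2f}")
```

Under these assumptions, reviewers see roughly three AI PRs for every AI change that lands, versus about 1.2 manual PRs per manual change.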

The Quality Crisis: 1.7x More Bugs

The wait times make sense once you see what reviewers actually find. According to figures reported by The Register, AI-generated PRs contain 10.83 issues on average, compared to 6.45 for manual code. That is about 1.7 times as many problems per review. Worse, AI code has 1.4 times as many critical issues and 1.7 times as many major issues. Logic and correctness problems dominate, followed by code quality, maintainability, and security concerns.
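The per-PR issue counts can be checked the same way. The batch of 20 PRs below is a hypothetical size, chosen only to show how the per-PR gap adds up across a review queue.

```python
# Average issues per PR, as reported in the coverage cited above.
AI_ISSUES_PER_PR = 10.83
MANUAL_ISSUES_PER_PR = 6.45

ratio = AI_ISSUES_PER_PR / MANUAL_ISSUES_PER_PR
extra_per_pr = AI_ISSUES_PER_PR - MANUAL_ISSUES_PER_PR


def extra_issues(batch_size: int) -> float:
    """Additional issues expected in a batch of AI PRs versus an equally
    sized batch of manual PRs, using the reported averages."""
    return batch_size * extra_per_pr


print(f"issue ratio: {ratio:.2f}x")  # rounds to the 1.7x quoted in the text
print(f"extra issues per PR: {extra_per_pr:.2f}")
print(f"extra issues in a hypothetical batch of 20 PRs: {extra_issues(20):.1f}")
```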

This quality gap makes review exhausting. The “almost right” problem, where AI code looks correct but hides subtle logic errors, drains reviewer energy. Reviewers can’t skim; every line needs scrutiny. Year-over-year data points the same way: incidents per PR jumped 23.5%, and change failure rates rose 30%. More output, worse quality.

The Developer Productivity Paradox: No Company-Level Gains

Here’s where it breaks down. Between 75% and 85% of developers use AI coding tools, yet Faros AI research finds that most organizations see no measurable performance improvement. Teams with heavy AI adoption complete 21% more tasks and merge 98% more PRs. That sounds impressive until you check the DORA metrics. At the company level, there is no correlation between AI usage and faster, more reliable shipping.

The individual speed gains vanish in the system. Code drafting accelerates, but review times balloon by 91% in AI-heavy teams. Developers feel productive because they’re producing more code, faster. Organizational velocity stalls, though, because the rest of the workflow hasn’t adapted. The bottlenecks just shift downstream to code review, testing, and rework cycles.
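The “bottleneck shifts downstream” argument is the classic pipeline result: a serial pipeline can deliver no faster than its slowest stage. The sketch below models delivery as drafting, review, and testing stages with hypothetical weekly capacities. The stage names and numbers are invented for illustration and are not taken from Faros AI. The sketch doubles drafting capacity only and reports where the constraint lands and how fast the review queue grows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    prs_per_week: float  # hypothetical capacity of this stage


def bottleneck(stages: list[Stage]) -> Stage:
    """Slowest stage of a serial pipeline; its capacity caps end-to-end throughput."""
    return min(stages, key=lambda s: s.prs_per_week)


def queue_growth(upstream: Stage, downstream: Stage) -> float:
    """PRs per week piling up between two adjacent stages (0 if downstream keeps up)."""
    return max(0.0, upstream.prs_per_week - downstream.prs_per_week)


review = Stage("review", 25)
testing = Stage("testing", 30)

before = [Stage("drafting", 20), review, testing]
after = [Stage("drafting", 40), review, testing]  # AI doubles drafting only

for label, pipeline in (("before", before), ("after", after)):
    slowest = bottleneck(pipeline)
    print(
        f"{label}: {slowest.prs_per_week:g} PRs/week, "
        f"bottleneck={slowest.name}, "
        f"review queue grows by {queue_growth(pipeline[0], review):g}/week"
    )
```

With these made-up numbers, doubling drafting capacity lifts delivery from 20 to 25 PRs a week, a 25% gain rather than 100%. Meanwhile, 15 unreviewed PRs pile up every week, which is the kind of queue that produces long pickup times.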

Trust Is Collapsing as Usage Grows

Trust in AI tools is eroding fast. According to the Stack Overflow 2025 Developer Survey, the share of developers who actively distrust AI accuracy rose from 31% in 2024 to 46% in 2025. Only 33% trust AI outputs, and just 3% highly trust them. Asked about a future with advanced AI, 75% said they’d still ask a human “when I don’t trust AI’s answers.”

The top frustration? Sixty-six percent cite “AI solutions that are almost right, but not quite,” and 45.2% say debugging AI-generated code is more time-consuming. For refactoring tasks, 65% report that AI “misses relevant context.” This trust deficit is the most likely explanation for the 4.6x wait times. Reviewers approach AI PRs with well-earned skepticism: they deprioritize them, scrutinize them harder, and lose time on near-misses. It’s a self-reinforcing cycle.

Developers Feel Faster But Are Actually Slower

A METR study found something striking. Experienced open-source developers using AI tools took 19% longer to finish tasks in their own repositories, yet they believed AI had made them about 20% faster. This perception-reality gap reveals the core problem: typing speed doesn’t equal task-completion speed.

PR sizes increased 150% in AI-heavy teams, and more code means more to review, debug, and maintain. Developers feel the rush of generating code quickly. What they don’t feel are the downstream costs: the review delays, the rework iterations, the bug hunts. Subjective productivity (“I typed so much code!”) doesn’t match objective productivity (task completion time), and companies pay for the gap.
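“Increased 150%” is easy to misread as 1.5x. A 150% increase actually means the new size is 2.5 times the old one. The sketch below applies that to a hypothetical 200-line baseline PR; the baseline is illustrative and not a figure from the source data.

```python
def after_increase(baseline: float, percent_increase: float) -> float:
    """Apply a percentage increase: +150% means multiply by 1 + 150/100 = 2.5."""
    return baseline * (1 + percent_increase / 100)


BASELINE_LINES = 200  # hypothetical PR size before heavy AI use
new_lines = after_increase(BASELINE_LINES, 150)

print(f"{BASELINE_LINES} lines -> {new_lines:g} lines")
print(f"size multiplier: {new_lines / BASELINE_LINES:g}x")
```

Suppose review effort scaled roughly linearly with lines changed, which is a simplifying assumption. Then each such review would take about 2.5 times as long before counting any extra defects.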

What Actually Works: Workflow Fixes

The problem isn’t AI tools. It’s workflow adaptation. Organizations that rethink their processes see real benefits. According to Qodo’s State of AI Code Quality report, 81% of developers using AI for code review report quality improvements. Code reviews complete 15% faster with tools like GitHub Copilot Chat when teams adapt their approach.

Successful patterns are emerging at companies like OpenAI and Anthropic. Use AI to review AI-generated code first, catching obvious issues before a human looks. Break AI output into smaller PRs instead of massive change sets. Strengthen automated testing so problems surface in CI/CD, not in human review. Most importantly, don’t deprioritize AI PRs: treat them equally in the queue, but apply appropriate scrutiny.
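As a rough illustration of these practices, the sketch below gates PRs before they reach a human. Automated checks, standing in for CI plus an AI first-pass review, must pass, and diffs must stay under a size limit. It then orders the review queue purely by how long each PR has waited, ignoring whether it was AI-generated. The `PullRequest` fields, the 400-line limit, and the function names are all hypothetical. They do not correspond to any GitHub or LinearB API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

MAX_CHANGED_LINES = 400  # hypothetical size limit; tune per team


@dataclass
class PullRequest:
    number: int
    opened_at: datetime
    changed_lines: int
    checks_passed: bool  # CI tests plus any automated first-pass review (assumed)
    ai_generated: bool   # kept for metrics only, never used for ordering


def triage(prs: list[PullRequest]) -> tuple[list[PullRequest], list[PullRequest]]:
    """Split PRs into (ready for human review, sent back to the author)."""
    ready, sent_back = [], []
    for pr in prs:
        if pr.checks_passed and pr.changed_lines <= MAX_CHANGED_LINES:
            ready.append(pr)
        else:
            # Failing checks or an oversized diff: fix or split before a human looks.
            sent_back.append(pr)
    return ready, sent_back


def review_order(ready: list[PullRequest]) -> list[PullRequest]:
    """Oldest first, regardless of whether the PR was AI-generated."""
    return sorted(ready, key=lambda pr: pr.opened_at)


now = datetime.now(timezone.utc)
queue = [
    PullRequest(101, now - timedelta(hours=2), 120, True, ai_generated=False),
    PullRequest(102, now - timedelta(hours=9), 300, True, ai_generated=True),
    PullRequest(103, now - timedelta(hours=5), 1500, True, ai_generated=True),
    PullRequest(104, now - timedelta(hours=7), 80, False, ai_generated=False),
]

ready, sent_back = triage(queue)
print("review order:", [pr.number for pr in review_order(ready)])
print("sent back:", [pr.number for pr in sent_back])
```

Here the AI-generated PR 102 is reviewed first simply because it has waited longest. Its origin affects only how closely a reviewer reads it, which the sketch leaves to the human.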

At OpenAI, AI code review gives engineers confidence they’re not shipping major bugs. It frequently catches issues the contributor can fix before pulling in another engineer. At Anthropic, engineers review PRs from Claude Code, leave line-by-line comments in GitHub, then Claude fetches those comments and iterates. The pattern works: human oversight with AI assistance, not AI output dumped on overwhelmed reviewers.

The 4.6x wait time isn’t inevitable. It’s a signal that teams are generating AI code faster than they can safely integrate it. The solution isn’t better AI; it’s better processes. That means smaller PRs, AI-powered first-pass reviews, and robust testing. It also means a cultural shift toward treating AI code as code that needs context and care. The bottleneck is solvable, but only if teams stop expecting AI to be the complete solution and start treating it as one part of a redesigned workflow.