The agentic AI hype machine is running at full throttle. Gartner predicts 40% of enterprise applications will embed task-specific AI agents by the end of 2026, the market is set to explode from $7.8 billion to a projected $52 billion by 2030, and multi-agent system inquiries surged 1,445% in just over a year. But strip away the noise and here’s what’s actually happening: while 66% of organizations are experimenting with AI agents, only 11% have deployed them to production. This isn’t a technology problem; it’s a strategy problem, and 2026 is the year the bill comes due.

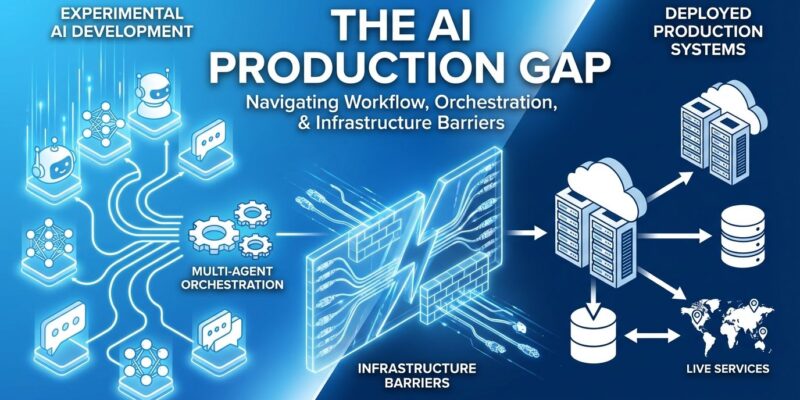

The Production Gap Isn’t Closing—It’s Widening

Deloitte’s 2025 study reveals the uncomfortable truth: 66% of organizations are experimenting with agentic AI, 38% are running pilots, and 14% have solutions ready to deploy, but only 11% are actually running agents in production. Even in surveys that report more progress, such as LangChain’s 2026 survey (57.3% of respondents claim production deployments, up from 51%), the gap between “we’re trying this” and “it’s driving business value” remains massive.

Gartner isn’t pulling punches about what’s coming. Its prediction: over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls. Gartner bluntly states that “agents will fall into the trough of disillusionment in 2026 because they just aren’t generally ready for prime-time business.” Why? Experiments consistently show AI agents make too many mistakes for businesses to rely on them for processes involving serious money.

This gap isn’t an accident; it’s the predictable result of current approaches. Organizations are stuck in what you might call pilot purgatory: spending money, running demos, generating excitement, but failing to extract production value. 2026 is the reckoning year, when those who can’t bridge this gap will start pulling the plug.

Workflow Redesign Beats Technology Every Time

Here’s the insight that separates winners from losers: the key differentiator isn’t AI model sophistication—it’s the willingness to redesign workflows rather than layering agents onto broken processes.

Consider HPE’s “Alfred” agent for internal performance reviews. They didn’t automate the existing review process. They reimagined the entire end-to-end workflow first, then built agents around the redesigned system. This approach—process redesign first, automation second—is what distinguishes successful implementations from expensive failures.

Deloitte’s research confirms what leading organizations discovered the hard way: “Organizations try to automate existing processes without reimagining how work should actually be done.” A close cousin of this mistake is agent washing: rebranding existing automation tools as agents and then wondering why production deployment fails. When you treat agents as productivity add-ons rather than transformation drivers, you get pilot-stage demos, not production systems.

What actually works? Specialized agents for specific domains, orchestrated together, rather than monolithic solutions trying to do everything. And a hybrid human-digital workforce model, as Mapfre describes it: “It’s hybrid by design. With the high level of autonomy of these agents, it’s not going to substitute for people, but it’s going to change what they do today, allowing them to invest time on more valuable work.”

The data backs this up: strategic partnerships are twice as likely to reach full deployment as internal builds. The likely reason is that partners force organizations to rethink workflows rather than automate institutional inertia.

Three Infrastructure Barriers Killing Agent Deployments

Even organizations willing to redesign workflows hit three brutal infrastructure realities.

First, legacy system integration. Gartner predicts over 40% of agentic AI projects will fail because legacy systems simply can’t support modern AI execution demands. Traditional enterprise systems lack real-time execution capability, modern APIs, and the modular architectures autonomous agents require. You can’t bolt autonomous agents onto 2015 infrastructure and expect production success.

Second, data architecture constraints plague nearly half of surveyed organizations: 48% cite data searchability challenges and 47% cite data reusability issues. Traditional ETL processes built for batch analytics don’t cut it for agents that need real-time context. The solution requires shifting to enterprise search and knowledge graphs, essentially making internal data as discoverable as Google made the web.
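To make “discoverable” concrete, here is a minimal sketch of the data structure at the core of keyword-based enterprise search: an inverted index that maps each term to the documents containing it and updates as records arrive, so an agent can pull context at query time instead of waiting for the next batch ETL run. The document IDs and texts are hypothetical, and a production system would layer ranking (such as BM25), access controls, embeddings, and knowledge-graph relationships on top.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    """Maps each term to the set of document IDs that contain it."""

    def __init__(self) -> None:
        self.postings: dict[str, set[str]] = defaultdict(set)
        self.docs: dict[str, str] = {}

    def add(self, doc_id: str, text: str) -> None:
        # Index incrementally, so a new record is searchable immediately
        # rather than after the next batch ETL job.
        self.docs[doc_id] = text
        for term in tokenize(text):
            self.postings[term].add(doc_id)

    def search(self, query: str) -> list[str]:
        """Return IDs of documents containing every query term (AND semantics)."""
        terms = tokenize(query)
        if not terms:
            return []
        result = set(self.postings.get(terms[0], set()))
        for term in terms[1:]:
            result &= self.postings.get(term, set())
        return sorted(result)


# Hypothetical internal records an agent might need as context.
index = InvertedIndex()
index.add("policy-17", "Refund policy for enterprise billing disputes")
index.add("runbook-4", "Billing service outage runbook")
index.add("faq-9", "How to update a shipping address")

print(index.search("billing refund"))  # ['policy-17']
print(index.search("billing"))         # ['policy-17', 'runbook-4']
```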

Third, governance and control frameworks designed for human-operated systems break down when autonomous agents make independent decisions. Across respondents overall, quality remains the biggest production blocker: one-third cite accuracy, consistency, and brand adherence failures, and latency is the second-biggest challenge at 20%. For enterprise deployments, however, security, privacy, and compliance concerns top the list at 52%.

The brutal truth: if your infrastructure was built for 2015, your agents will fail in 2026. Modernization isn’t optional.

Multi-Agent Orchestration and MCP Standardization Change the Game

Here’s what’s actually advancing in 2026: not better foundation models, but standardization and orchestration patterns.

The multi-agent revolution mirrors the microservices shift in software architecture. Single all-purpose agents are being replaced by orchestrated teams of specialized agents. The 1,445% surge in multi-agent system inquiries that Gartner recorded from Q1 2024 to Q2 2025 reflects this pivot. By 2027, Gartner predicts, 70% of multi-agent systems will use narrowly specialized agents.

Real production examples show the pattern. Salesforce Agentforce uses an orchestrator agent to manage customer interactions while decomposing problems into parallel tasks handled by specialists for billing, logistics, and provisioning. ServiceNow’s case triage embeds agents directly into operational workflows—interpreting intent, classifying issues, enriching context through knowledge retrieval, and routing based on predefined guardrails. A global fintech using Kubiya lets engineers issue natural language commands in Slack, with agents handling provisioning, compliance enforcement, Prometheus monitoring, and auto-ticketing for failed builds.
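The orchestrator-plus-specialists pattern can be sketched in a few lines of Python. This is not Agentforce’s or any vendor’s API: the specialist functions, the keyword routing table, and the canned replies are hypothetical stand-ins for model-backed agents. The point is the shape: decompose the request, fan independent subtasks out concurrently, and fall back to a human when no specialist fits.

```python
import asyncio
from typing import Awaitable, Callable

Specialist = Callable[[str], Awaitable[str]]


# Hypothetical specialist agents. Real ones would call a model and their own
# tools; these return canned answers so the sketch runs offline.
async def billing_agent(request: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for model/tool latency
    return "billing: refund of duplicate charge approved"


async def logistics_agent(request: str) -> str:
    await asyncio.sleep(0.01)
    return "logistics: replacement shipped"


async def provisioning_agent(request: str) -> str:
    await asyncio.sleep(0.01)
    return "provisioning: account reactivated"


# Keyword routing is an assumption for illustration; a production orchestrator
# would typically ask a model to decompose the request.
ROUTES: dict[str, Specialist] = {
    "charge": billing_agent,
    "shipment": logistics_agent,
    "account": provisioning_agent,
}


def decompose(request: str) -> list[Specialist]:
    """Select every specialist whose trigger word appears in the request."""
    text = request.lower()
    return [agent for keyword, agent in ROUTES.items() if keyword in text]


async def orchestrate(request: str) -> list[str]:
    specialists = decompose(request)
    if not specialists:
        return ["escalate: no specialist matched, route to a human"]
    # Independent subtasks run concurrently; gather preserves input order.
    return list(await asyncio.gather(*(agent(request) for agent in specialists)))


if __name__ == "__main__":
    for line in asyncio.run(orchestrate("I was double charged and my shipment never arrived")):
        print(line)
```

Because `asyncio.gather` runs the specialists concurrently, end-to-end latency tracks the slowest specialist rather than the sum of all of them, which is one reason a decomposed design can respond faster than a single agent working through every subtask in sequence.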

The breakthrough enabling this orchestration is the Model Context Protocol. Described as “USB-C for AI,” MCP provides a universal interface for AI systems to connect with data sources, tools, and services. What makes this remarkable isn’t the technology—it’s the adoption. OpenAI, Microsoft, Anthropic, Google, AWS, Cloudflare, and Bloomberg have all signed on. In December 2025, Anthropic donated MCP to the Linux Foundation’s Agentic AI Foundation.
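Part of what makes MCP a universal interface is that every client and server exchange the same JSON-RPC 2.0 messages. The sketch below only builds two such request messages, listing a server’s tools and calling one, to show their shape; the tool name `search_tickets` and its arguments are hypothetical. A real client would first complete MCP’s `initialize` handshake over a transport such as stdio or HTTP, which the official SDKs handle for you.

```python
import json
from itertools import count

# Monotonic request IDs; JSON-RPC uses them to match responses to requests.
_request_ids = count(1)


def jsonrpc_request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": method,
        "params": params,
    }
    return json.dumps(message)


# Discover which tools the server exposes.
list_tools = jsonrpc_request("tools/list", {})

# Invoke one tool by name. "search_tickets" and its arguments are hypothetical;
# real names and input schemas come from the server's tools/list response.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_tickets", "arguments": {"query": "failed build", "limit": 5}},
)

print(list_tools)
print(call_tool)
```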

This is rare in AI: competitors collaborating on interoperability rather than building proprietary moats. As TechCrunch notes, “With MCP reducing friction, 2026 is likely the year agentic workflows finally move from demos into day-to-day practice.”

For developers, the message is clear: learn MCP now. It’s becoming table stakes. Multi-agent orchestration patterns—not monolithic agents—are the production-ready architecture.

Governance as Enabler, Not Blocker

The critical 2026 shift is recognizing governance not as compliance overhead slowing deployment, but as the confidence enabler for high-value production systems. 75% of organizations now prioritize security, compliance, and auditability as the most critical requirements for agent deployment.

What does production-ready governance look like? Four elements separate working systems from pilot purgatory.

First, bounded autonomy architectures with clear operational limits. Agents know what they can’t do, have escalation paths to humans for high-stakes decisions, and maintain comprehensive audit trails of every action.
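Here is a minimal sketch of a bounded-autonomy check, assuming a hypothetical policy made of allowed actions plus a spending ceiling. The check lives outside the agent’s reasoning loop, so the model cannot argue its way past it, and it produces one of three outcomes: allow, escalate to a human, or deny. Every request, including denials, lands in the audit trail.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Policy:
    allowed_actions: set[str]  # what the agent may ever do on its own
    max_amount: float          # spend ceiling for autonomous actions


@dataclass
class BoundedAgent:
    policy: Policy
    audit_log: list[dict] = field(default_factory=list)

    def request(self, action: str, amount: float = 0.0) -> str:
        """Return 'allow', 'escalate', or 'deny', and record the decision."""
        if action not in self.policy.allowed_actions:
            decision = "deny"      # outside the agent's mandate entirely
        elif amount > self.policy.max_amount:
            decision = "escalate"  # permitted action, but too high-stakes to run alone
        else:
            decision = "allow"
        # Every request is logged, including denials and escalations.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "amount": amount,
            "decision": decision,
        })
        return decision


# Hypothetical policy: the action names and the 500.0 ceiling are illustrative.
agent = BoundedAgent(Policy(allowed_actions={"issue_refund", "send_email"}, max_amount=500.0))
print(agent.request("issue_refund", 120.0))   # allow
print(agent.request("issue_refund", 2500.0))  # escalate
print(agent.request("delete_account"))        # deny
print(len(agent.audit_log))                   # 3
```

In practice the escalate branch would hand the request to a human review queue; the sketch only returns the decision.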

Second, observability from day one. LangChain’s 2026 survey found 89% of organizations implement tracing, with 62% maintaining step-level visibility. For production deployments, adoption jumps to 94%. You cannot troubleshoot what you cannot see. Production agents without tracing are production disasters waiting to happen.
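What step-level visibility means can be sketched with a small decorator that records one span per agent step, including steps that fail. The step names and the simulated timeout are hypothetical; teams running agents in production would more likely use OpenTelemetry or a hosted tracing tool such as LangSmith than hand-rolled code like this.

```python
import functools
import time
import uuid


class Tracer:
    """Collects one span per agent step, grouped under a single trace ID."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.spans: list[dict] = []

    def step(self, name: str):
        """Decorator factory that records a span each time the wrapped step runs."""
        def decorator(fn):
            @functools.wraps(fn)
            def wrapper(*args, **kwargs):
                start = time.perf_counter()
                status = "ok"
                try:
                    return fn(*args, **kwargs)
                except Exception as exc:
                    status = f"error: {exc}"
                    raise
                finally:
                    # Runs on success and failure alike, so failed steps stay visible.
                    self.spans.append({
                        "trace_id": self.trace_id,
                        "step": name,
                        "inputs": repr(args),
                        "status": status,
                        "ms": round((time.perf_counter() - start) * 1000, 2),
                    })
            return wrapper
        return decorator


tracer = Tracer()


@tracer.step("retrieve_context")
def retrieve_context(query: str) -> str:
    return f"3 documents about {query}"


@tracer.step("invoke_tool")
def invoke_tool(name: str) -> str:
    # Simulated failure so the trace contains an error span.
    raise TimeoutError(f"{name} did not respond")


retrieve_context("refund policy")
try:
    invoke_tool("billing_api")
except TimeoutError:
    pass

for span in tracer.spans:
    print(span["step"], span["status"], span["ms"])
```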

Third, human supervisors who oversee agents and handle exceptions at designed handoff points. This is the “controlled agency” model: accountability with independence. Real-world example: e& and IBM embedded agentic AI directly into governance, risk, and compliance platforms, creating one of the first enterprise-grade implementations supporting trusted, human-led decisions under regulatory requirements.

Fourth, Zero Trust principles applied to non-human identities: digital identity systems for agents, continuous verification and authorization, and immutable action logs.
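The immutable-action-log element can be approximated with a hash chain, sketched below under the assumption that each agent already has a stable identity string (the `agent:billing-01` ID is hypothetical). Each entry commits to the hash of the one before it, so editing an entry or deleting one from the middle breaks verification. Identity issuance and continuous authorization are out of scope here.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def digest(body: dict) -> str:
    """SHA-256 over canonical JSON, so identical content always hashes identically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class ActionLog:
    """Append-only log in which each entry commits to the hash of the previous one."""

    FIELDS = ("agent_id", "action", "prev_hash")

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, agent_id: str, action: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"agent_id": agent_id, "action": action, "prev_hash": prev_hash}
        entry = {**body, "hash": digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered, or mid-chain-deleted entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in self.FIELDS}
            if entry["prev_hash"] != prev_hash or entry["hash"] != digest(body):
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical agent identity and actions.
log = ActionLog()
log.append("agent:billing-01", "read invoice 4411")
log.append("agent:billing-01", "issue refund 120.00")
print(log.verify())  # True

log.entries[1]["action"] = "issue refund 12000.00"  # simulated tampering
print(log.verify())  # False
```

A hash chain is tamper-evident, not tamper-proof: someone with write access could rebuild every hash, or quietly drop the newest entries. Anchoring the latest hash in write-once storage or a separate system is what makes the log effectively immutable.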

The pattern emerging from successful deployments is governance-first design. Organizations building security, compliance, and auditability into agents from the start demonstrate higher confidence deploying agents in high-value scenarios. Governance isn’t the enemy of production deployment—it’s the prerequisite.

What Separates Executors from Experimenters

The data tells a harsh but clear story. Gartner expects over 40% of agentic AI projects to be canceled by the end of 2027. That leaves nearly 60% that won’t be canceled. What separates the projects that make it?

For developers: focus on quality and consistency first, implement observability from the start, choose multi-model strategies (75%+ of successful deployments use multiple providers), master multi-agent orchestration frameworks like LangGraph and CrewAI, learn MCP integration, and prioritize bounded autonomy patterns with clear limits and escalation paths.
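One practical payoff of a multi-model strategy is resilience. The sketch below tries providers in priority order and falls back when one fails; `primary_model` and `secondary_model` are hypothetical stand-ins for real vendor SDK calls, and the first is hard-wired to fail so the fallback path runs.

```python
from typing import Callable


class ProviderError(Exception):
    """Raised when a provider call fails (outage, rate limit, timeout)."""


# Hypothetical provider clients; real ones would wrap each vendor's SDK.
def primary_model(prompt: str) -> str:
    raise ProviderError("rate limited")  # hard-wired failure to exercise the fallback


def secondary_model(prompt: str) -> str:
    return f"secondary answer to: {prompt}"


Provider = tuple[str, Callable[[str], str]]


def complete_with_fallback(prompt: str, providers: list[Provider]) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, answer) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")  # remember why each provider was skipped
    raise RuntimeError("all providers failed: " + "; ".join(errors))


used, answer = complete_with_fallback(
    "Summarize ticket 812",
    [("primary", primary_model), ("secondary", secondary_model)],
)
print(used, "->", answer)
```

The same ordered list could also encode cost or capability tiers, for example sending routine prompts to a cheaper model first.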

For CTOs and engineering leaders: redesign workflows before deploying agents, build governance frameworks as enablers rather than blockers, plan for hybrid human-digital workforce models (change roles, don’t eliminate them), set clear bounded autonomy policies, modernize legacy systems and data architecture, and seriously consider strategic partnerships, which are twice as likely to reach production.

The gap between 66% and 11% isn’t closing on its own. Organizations treating 2026 as another year to experiment will find themselves in Gartner’s 40% cancellation bucket. Those who shift from experimentation to execution—workflow redesign, infrastructure modernization, governance as enabler, multi-agent orchestration—are the ones who’ll make agentic development work in production.

The technology exists. The standards are emerging. The question isn’t whether agentic systems can work—it’s whether your organization can bridge the gap from pilot to production before the hype cycle collapses into the trough of disillusionment.