Anthropic launched Claude Cowork on January 12, 2026, promising to automate file management and web tasks for non-technical users through their macOS desktop app. But buried in the company’s own safety documentation is a jarring admission: “The chances of an attack are still non-zero,” and users remain responsible for all actions the AI takes—including data theft, file deletion, or unauthorized communications triggered by prompt injection attacks. When a company’s safety warnings are more honest than their marketing, developers should listen to the warnings.

The “Lethal Trifecta” Cowork Enables

Security researcher Simon Willison coined the term “Lethal Trifecta” in June 2025 to describe three elements that make AI systems unsafe when combined. These include access to private data, exposure to untrusted content from the web or emails, and ability to communicate externally via APIs or network requests. The industry consensus is clear: don’t allow all three in the same system.

Claude Cowork violates this fundamental principle. It can read your files and Google Workspace data (private data), browse the web and process emails (untrusted content), and connect to external services through APIs (exfiltration vector). Consequently, this combination creates vulnerability to prompt injection attacks where a single malicious email or compromised webpage can trick Cowork into stealing data.

Microsoft Copilot’s “EchoLeak” vulnerability demonstrates this exact threat. An attacker sent a crafted email to anyone in an organization. When users later asked Copilot questions, it retrieved the poisoned email, executed embedded instructions, and exfiltrated sensitive data via an image URL—all without a single user click. Moreover, the attack succeeded because Copilot had the same lethal trifecta that Cowork ships with today.

Claude Cowork’s Own Safety Warnings Expose the Risk

Anthropic’s official safety documentation explicitly warns that “Claude can take potentially destructive actions (such as deleting local files) if it’s instructed to” and advises users to “monitor Claude for suspicious actions that may indicate prompt injection.” Security researcher Simon Willison calls this guidance “unrealistic for non-technical users” and “unfair to expect from average users.”

More damning: Anthropic admits they “cannot guarantee future attacks won’t penetrate their defenses, potentially stealing data.” Furthermore, the documentation states users “remain responsible for all actions taken by Claude performed on their behalf, including content published or messages sent, purchases or financial transactions, data accessed or modified.” This is liability shifting dressed up as a safety warning.

“Non-zero attack probability” is marketing speak for “risky.” Anthropic is asking developers to bet their security on probabilistic safety while accepting full responsibility when attacks succeed. This contradicts the promise of automated, worry-free productivity.

Prompt Injection Attacks Aren’t Theoretical

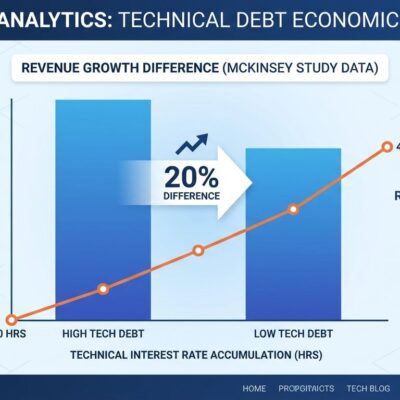

Prompt injection remains the number one security risk for LLM applications according to OWASP’s 2025 Top 10, with 2026 statistics showing 20% of jailbreaks succeed in an average of 42 seconds. Additionally, ninety percent of successful attacks leak sensitive data. These aren’t theoretical vulnerabilities—they’re active exploits happening now.

Recent examples paint a stark picture. IBM’s Bob AI agent was manipulated into executing malware via CLI prompt injection. Slack’s AI assistant inserted malicious links after processing hidden instructions, sending private channel data to attackers. Meanwhile, the EchoLeak zero-click attack against Microsoft Copilot demonstrated that sophisticated attacks don’t even require user interaction.

“Camouflage Attacks” research achieved a 65% success rate across eight different models in 2026 testing. The core problem remains unsolved: models have no ability to reliably distinguish between legitimate instructions and malicious data masquerading as instructions. With success rates this high, prompt injection isn’t a rare edge case—it’s a systemic vulnerability.

The False Choice Between Convenience and Security

Anthropic presents a false choice: powerful productivity features or security. However, better alternatives exist that don’t require this trade-off. Web browsers don’t let websites delete your files without confirmation. Similarly, operating systems require administrator approval for system changes. Why should AI agents have more permissions than traditional software?

Industry best practices for 2026 are well-established. These include scoped permissions with read-only defaults requiring explicit write approval. Additionally, action confirmations for destructive operations, allowlist-based external communication limited to approved domains, and sandboxed execution with no external network access all help prevent unauthorized access. These patterns preserve productivity benefits while eliminating the lethal trifecta vulnerability.

A better design pattern separates concerns: “research” mode with read-only access and web browsing, versus “action” mode with write permissions but no external network access. Don’t combine all three dangerous elements in the same system. Consequently, the current design normalizes unrestricted AI system access, and if Anthropic succeeds, competitors will follow with even fewer security guardrails.

Who’s Locked Out (And Why That Matters)

Claude Cowork is only available to Claude Max subscribers paying $100-200 per month on macOS personal accounts. Moreover, enterprise users with Google Workspace accounts are completely locked out, even if they’re willing to pay. Free, Pro, Team, and Enterprise tier users sit on a waitlist with no timeline. Windows users have no access.

This exclusion is suspicious. Google Workspace integration exists for other Claude features. Team and Enterprise tiers already exist. So why exclude business users from Cowork specifically? Plausible theory: Anthropic is testing on personal accounts first to avoid enterprise security scrutiny. If a high-profile breach happens, better to affect consumers than Fortune 500 companies facing compliance requirements.

The irony is stark. Many developers and tech professionals who would benefit most from file automation use Workspace for better organization and unlimited storage. Furthermore, they’re locked out of a productivity tool designed for their workflows, while personal account holders get exclusive access to a research preview with “non-zero attack probability.”

What Developers Should Demand

Don’t accept “non-zero attack probability” as acceptable for production tools. Any non-zero probability becomes 100% probability over time with enough attack attempts. Therefore, demand scoped permissions and security-by-design from AI vendors. Don’t normalize unrestricted AI system access just because it’s convenient.

If you’re testing Cowork despite the risks, use an isolated sandbox environment with no access to credentials, SSH keys, proprietary code, or client data. Never grant Cowork access to folders containing financial documents or source code repositories. Assume breach will happen and plan accordingly—that’s security engineering, not paranoia.

This is about setting precedent. If developers accept convenience-over-security trade-offs, the next generation of AI agents will have even fewer guardrails. Hold vendors accountable for building secure systems, not just warning users about insecure ones. When Anthropic’s own safety documentation is more honest than their marketing materials, the answer is clear: prioritize security over convenience.