OpenAI signed a $10 billion deal with Cerebras Systems on January 14, 2026, securing 750 megawatts of AI compute capacity through 2028, the largest high-speed inference deployment announced to date. The energy scale is staggering: 750MW equals the electricity consumption of roughly 600,000 homes, highlighting AI’s transition from research labs to industrial-scale operations. Cerebras’s wafer-scale chips deliver responses up to 15× faster than GPU-based systems at 32% lower cost than Nvidia’s flagship hardware.

Wafer-Scale Speed: 21× Faster Than Nvidia Blackwell

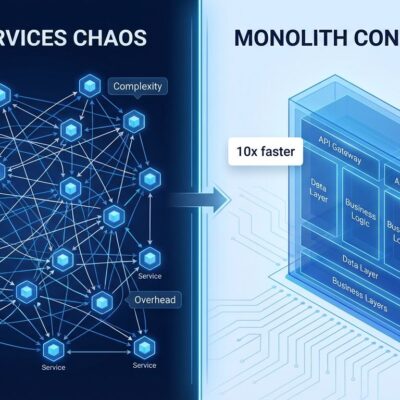

Cerebras’s CS-3 systems deliver 21× faster AI inference than Nvidia’s flagship Blackwell B200 GPU, with 32% lower total cost of ownership and 33% lower power consumption, according to independent SemiAnalysis benchmarks. The advantage comes from wafer-scale architecture. Each CS-3 is built around a single 46,225-square-millimeter chip that integrates 900,000 AI cores and 44GB of on-chip memory. Nvidia, by contrast, spreads large models across multiple GPUs that must communicate over complex interconnects.

Per-model results vary. Cerebras achieves 3,000 tokens per second (TPS) on the GPT-OSS-120B model, 5× faster than Nvidia Blackwell. On Meta’s Llama 4 Maverick, it exceeds 2,500 TPS, more than double Nvidia’s throughput. Memory bandwidth reaches 21 petabytes per second, about 7,000× that of Nvidia’s H100. Speed is a competitive advantage in AI because faster inference means a better experience for ChatGPT’s 200+ million weekly users. Natural conversation requires sub-second responses, and delays break immersion.
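A minimal sketch shows how tokens per second translates into the sub-second target. It makes several assumptions that are not from the article:

- a hypothetical 500-token answer;
- time to first token is ignored by default;
- 600 TPS is not a benchmark result, just the 3,000 TPS figure divided by the quoted 5× ratio.

The function name `response_time_s` is illustrative.

```python
# Sketch: how decode speed turns into wall-clock wait time for one response.
# Assumptions (not from the article): a fixed answer length and an optional
# fixed time-to-first-token (TTFT); real requests vary in both.

def response_time_s(output_tokens: int, tokens_per_second: float,
                    ttft_s: float = 0.0) -> float:
    """Seconds until the last token arrives: TTFT plus steady-state decode time."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return ttft_s + output_tokens / tokens_per_second


if __name__ == "__main__":
    ANSWER_TOKENS = 500  # hypothetical answer length
    # 3,000 TPS is the article's GPT-OSS-120B figure for Cerebras;
    # 600 TPS is that figure divided by the quoted 5x ratio (illustrative only).
    for label, tps in (("3,000 TPS", 3000.0), ("600 TPS", 600.0)):
        seconds = response_time_s(ANSWER_TOKENS, tps)
        print(f"{label}: {seconds:.2f} s for {ANSWER_TOKENS} tokens")
```

Decode time grows linearly with answer length, so the gap between the two speeds widens as responses get longer.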

By keeping more of a model’s computation and memory on a single wafer, Cerebras cuts the chip-to-chip communication overhead that adds latency in Nvidia’s multi-GPU architecture. That latency advantage is why OpenAI chose Cerebras for inference despite Nvidia’s training dominance. As Sachin Katti from OpenAI put it: “Cerebras provides a dedicated low-latency inference solution enabling faster responses, more natural interactions.”

750MW: AI’s Energy Crisis in One Number

The 750 megawatt deployment equals the electricity use of approximately 600,000 homes, a scale that underscores AI’s massive energy requirements. It isn’t an outlier. Data centers already consume a large share of electricity in some regions. Ireland’s data centers use 21% of the country’s electricity, a share projected to reach 32% by 2026. In Virginia, data centers account for about 26% of the state’s electricity consumption. A single generative AI training cluster consumes 7-8× more energy than typical computing workloads.
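The homes comparison is straightforward unit arithmetic. The sketch below rests on two assumptions that are not from the article:

- an average household uses about 10,800 kWh per year, roughly the recent US average;
- the 750MW runs continuously all year, which overstates real consumption if the capacity is not fully used.

```python
# Sketch: converting a continuous power draw in megawatts into "homes powered".
# Assumptions (not from the article): ~10,800 kWh per household per year,
# roughly the recent US average, and the capacity running flat out all year.

HOURS_PER_YEAR = 8760


def annual_kwh(power_mw: float) -> float:
    """Energy used in one year by a load drawing power_mw continuously."""
    return power_mw * 1_000 * HOURS_PER_YEAR  # MW -> kW, then kW x hours = kWh


def homes_equivalent(power_mw: float, household_kwh_per_year: float = 10_800) -> float:
    """How many average homes use as much electricity per year as power_mw."""
    return annual_kwh(power_mw) / household_kwh_per_year


if __name__ == "__main__":
    print(f"750MW around the clock = {annual_kwh(750) / 1e9:.2f} TWh/year")
    print(f"Equivalent to about {homes_equivalent(750):,.0f} homes")
```

Under these assumptions the result is about 608,000 homes, consistent with the article’s figure of roughly 600,000. A lower per-household figure, as in many countries outside the US, would raise the count.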

Energy availability, not chip supply, now constrains AI growth. Companies can order compute but can’t deploy it without power agreements. This explains Google’s 500MW nuclear deal with Kairos Power (delivery by 2035) and why OpenAI’s 750MW deal required extensive power infrastructure planning. Hyperscalers are projected to spend $600 billion in 2026 alone, primarily on AI infrastructure, a 36% increase over 2025. The bottleneck has shifted from silicon to kilowatts.

Part of a $1.4 Trillion Infrastructure Bet

The Cerebras deal is one piece of OpenAI’s $1.4 trillion infrastructure buildout over 8 years. The buildout spans AWS ($38 billion), Nvidia (10GW), AMD (6GW), Oracle/Stargate ($300B+), CoreWeave ($22.4B), and now Cerebras (750MW). OpenAI is deliberately diversifying to avoid the vendor lock-in and supply bottlenecks that have plagued AI companies dependent solely on Nvidia.
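The deals above are quoted in mixed units, dollars for some and gigawatts for others, so they cannot simply be added together. A minimal sketch keeps the article’s figures in their own units. It treats “$300B+” as its $300B floor and counts the Cerebras deal once, in dollars.

```python
# Sketch: tallying the OpenAI commitments listed above in their original units.
# Figures are the article's; "$300B+" is treated as its $300B floor, and the
# Cerebras deal is counted once, in dollars, even though it is also 750MW.

from collections import defaultdict

COMMITMENTS = [
    ("AWS", 38.0, "USD_B"),
    ("Nvidia", 10.0, "GW"),
    ("AMD", 6.0, "GW"),
    ("Oracle/Stargate", 300.0, "USD_B"),  # lower bound ("$300B+")
    ("CoreWeave", 22.4, "USD_B"),
    ("Cerebras", 10.0, "USD_B"),
]


def totals_by_unit(items):
    """Sum amounts per unit; mixing dollars and gigawatts would be meaningless."""
    totals = defaultdict(float)
    for _name, amount, unit in items:
        totals[unit] += amount
    return dict(totals)


if __name__ == "__main__":
    totals = totals_by_unit(COMMITMENTS)
    print(f"Dollar-quoted deals: at least ${totals['USD_B']:.1f}B")
    print(f"Gigawatt-quoted deals: {totals['GW']:.0f}GW")
    print(f"$1.4T over 8 years: about ${1_400 / 8:.0f}B per year on average")
```

The dollar-quoted deals total at least $370.4B, and the gigawatt-quoted deals total 16GW. Converting the gigawatt figures into dollars would require a cost-per-gigawatt assumption that the article does not provide. The $1.4 trillion headline averages about $175 billion a year over 8 years.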

Nvidia holds roughly 90% of the AI chip market, a near-monopoly position that creates risk for its customers. Supply constraints have bottlenecked companies, and single-vendor dependency hands pricing power to the supplier. OpenAI’s multi-vendor strategy shows that viable alternatives exist for specific workloads. For CTOs and infrastructure teams, it confirms the wisdom of avoiding single points of failure in critical infrastructure.
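The same single-point-of-failure argument applies at the application layer. A minimal sketch assumes a team wraps each inference backend behind one common call signature, then tries the backends in priority order and falls through on failure. The `Provider` type, the `ProviderError` exception, and the backend names are all hypothetical and do not correspond to any vendor’s real API.

```python
# Sketch: a provider-agnostic inference call with ordered failover.
# Everything here is hypothetical: the Provider type, the error class, and
# the backend names are illustrations, not any vendor's real API.

from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a backend when it cannot serve the request."""


@dataclass
class Provider:
    name: str
    complete: Callable[[str], str]  # prompt -> completion


def complete_with_failover(prompt: str,
                           providers: Sequence[Provider]) -> Tuple[str, str]:
    """Try providers in priority order; return (provider_name, completion)."""
    errors: List[str] = []
    for provider in providers:
        try:
            return provider.name, provider.complete(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next backend.
            errors.append(f"{provider.name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def saturated_backend(_prompt: str) -> str:
        raise ProviderError("capacity exhausted")

    def healthy_backend(prompt: str) -> str:
        return f"echo: {prompt}"

    backends = [Provider("primary", saturated_backend),
                Provider("secondary", healthy_backend)]
    print(complete_with_failover("hello", backends))
```

Ordering the list by cost or latency turns the same loop into a simple routing policy. A production version would also need timeouts, retries, and health checks, which this sketch omits.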

Meanwhile, Anthropic ordered $21 billion in Google TPUs via Broadcom, 400,000 TPUs delivering 1 gigawatt of capacity. The industry is reducing its dependence on Nvidia, and inference is where alternatives can compete.

Why Inference (Not Training) Is the Real Cost Driver

While Nvidia dominates AI training, inference has emerged as the critical bottleneck and the real cost driver at scale. OpenAI’s ChatGPT serves 200+ million users weekly, and every query costs compute and energy. Inference costs scale linearly with usage, while training is a largely fixed cost per model. At ChatGPT’s scale, inference bills dwarf training costs. This shift explains why OpenAI chose Cerebras’s inference-optimized architecture over Nvidia’s general-purpose GPUs for this deployment.
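A toy cost model makes the fixed-versus-linear argument concrete. Every dollar figure is a hypothetical placeholder chosen for illustration, not OpenAI’s actual cost. Training is modeled as one fixed cost per model, and inference as cost per query times query volume.

```python
# Sketch: a toy model of training (fixed per model) vs inference (linear in
# queries). Every dollar figure below is a hypothetical placeholder.

def inference_cost(cost_per_query: float, queries: float) -> float:
    """Cumulative inference spend: grows linearly with query volume."""
    return cost_per_query * queries


def inference_share(training_cost: float, cost_per_query: float,
                    queries: float) -> float:
    """Fraction of total spend (training + inference) that goes to inference."""
    inference = inference_cost(cost_per_query, queries)
    return inference / (training_cost + inference)


def breakeven_queries(training_cost: float, cost_per_query: float) -> float:
    """Query volume at which cumulative inference spend equals training spend."""
    return training_cost / cost_per_query


if __name__ == "__main__":
    TRAINING_USD = 100e6   # hypothetical: $100M to train one model
    PER_QUERY_USD = 0.002  # hypothetical: $0.002 of compute per query
    print(f"Break-even: {breakeven_queries(TRAINING_USD, PER_QUERY_USD):,.0f} queries")
    for queries in (1e9, 1e10, 1e11):
        share = inference_share(TRAINING_USD, PER_QUERY_USD, queries)
        print(f"{queries:.0e} queries -> inference is {share:.0%} of spend")
```

Under these made-up numbers, inference spend overtakes training at 50 billion queries. Suppose each user sends a hypothetical 10 queries per week. Then 200 million weekly users generate 2 billion queries a week and cross that point in about 25 weeks. From then on, inference is the larger bill, and it keeps growing with usage.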

This represents a fundamental market shift. Training chips won’t automatically win the inference market, because specialized architectures like Cerebras’s can compete on speed and cost. For developers building AI applications, optimizing inference cost and latency now matters more than training speed. Expect more companies to emerge with inference-specific chips that challenge Nvidia’s dominance. Cerebras raised $1 billion at a $22 billion valuation this month and plans an IPO in Q2 2026, a sign that the inference market is real and lucrative.

Key Takeaways

- Inference speed is the new battleground: OpenAI’s $10B+ Cerebras bet signals that inference (not training) determines user experience and competitive advantage at scale.

- Energy constrains AI growth: 750MW equals roughly 600,000 homes’ worth of electricity. Power availability, not chip supply, now limits where and how fast AI can deploy.

- Nvidia’s monopoly faces pressure: Cerebras’s 21× faster inference at 32% lower cost, together with Anthropic’s $21B Google TPU order, shows that viable alternatives exist for specialized workloads.

- Multi-vendor strategies are essential: OpenAI’s $1.4T infrastructure buildout spans at least six partners (AWS, Nvidia, AMD, Cerebras, Oracle, CoreWeave). Single-vendor dependency creates unacceptable risk.