Cursor published research yesterday showing that hundreds of AI coding agents can collaborate on a single codebase for weeks, producing over 1 million lines of code. In its January 14 blog post, the company describes agents building a web browser from scratch in close to a week: more than 1 million lines across 1,000 files. This isn’t incremental progress in AI-assisted coding. It’s a fundamental shift from single-agent assistance to autonomous multi-agent collaboration at unprecedented scale.

The results challenge assumptions about coordination limits. Cursor ran hundreds of concurrent workers pushing to the same branch with minimal conflicts, and used the system to migrate its own production codebase from Solid to React (266,000 lines added and 193,000 removed over 3+ weeks). What makes this work where previous attempts failed? Architecture.

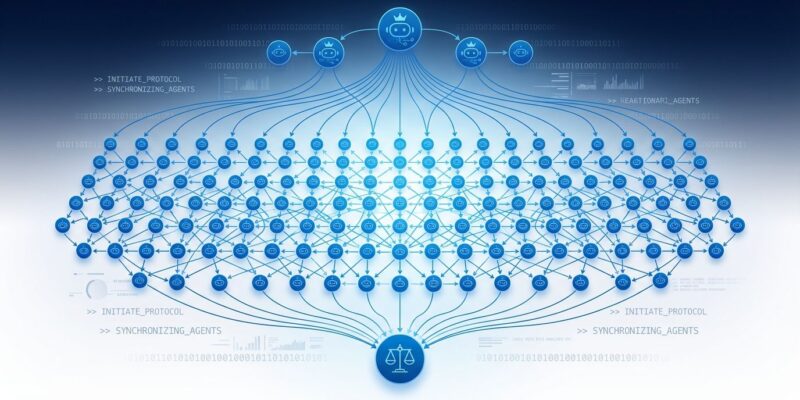

The Architecture That Solved Coordination

Cursor moved from flat structures, which failed, to a hierarchical planner-worker-judge system. Lock-based coordination created bottlenecks: “Twenty agents would slow down to the effective throughput of two or three,” Cursor reported. Optimistic concurrency control reduced brittleness but exposed a deeper problem: without a hierarchy, agents became risk-averse, avoiding difficult work, and no agent drove end-to-end implementation forward.
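The difference between the two coordination styles fits in a few lines. Below is a minimal Python sketch of optimistic concurrency control, assuming a hypothetical in-memory task board where every task carries a version number: an agent reads a snapshot without holding any lock, does its work, and commits only if the version is still the one it saw, so a stale write is rejected instead of blocking everyone else. `Task`, `TaskBoard`, `read`, and `try_commit` are illustrative names, not Cursor’s code.

```python
from dataclasses import dataclass, field
from threading import Lock


@dataclass
class Task:
    """One entry on the shared board, with a version counter for conflict detection."""
    description: str
    status: str = "open"
    version: int = 0


@dataclass
class TaskBoard:
    """Hypothetical shared board that agents update optimistically."""
    tasks: dict[str, Task] = field(default_factory=dict)
    # Guards only the instant of the compare-and-swap, never an agent's actual work.
    _guard: Lock = field(default_factory=Lock, repr=False, compare=False)

    def read(self, task_id: str) -> tuple[str, int]:
        """Return the task's status and the version this reader observed."""
        task = self.tasks[task_id]
        return task.status, task.version

    def try_commit(self, task_id: str, expected_version: int, new_status: str) -> bool:
        """Apply the update only if nobody else changed the task since it was read."""
        with self._guard:
            task = self.tasks[task_id]
            if task.version != expected_version:
                return False  # conflict: another agent committed first
            task.status = new_status
            task.version += 1
            return True


board = TaskBoard({"t1": Task("port settings page")})

# Two agents read the same snapshot, work independently, then both try to commit.
_, seen_by_a = board.read("t1")
_, seen_by_b = board.read("t1")
print(board.try_commit("t1", seen_by_a, "claimed-by-a"))  # True: first commit wins
print(board.try_commit("t1", seen_by_b, "claimed-by-b"))  # False: stale version, re-read and retry
```

With a lock-based design, the second agent would have waited for the first to finish its whole task; here it only loses a cheap compare-and-swap and can re-read the board and pick other work.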

The working design assigns clear roles. Planners continuously explore the codebase and generate tasks, spawning sub-planners for specific areas so that planning itself proceeds in parallel and recursively. Workers focus entirely on their assigned task and never coordinate with one another: they “grind on their assigned task until it’s done, then push their changes.” At the end of each cycle, a judge agent decides whether to continue, and the next iteration starts fresh to combat drift.
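A compressed, single-process sketch of the planner-worker-judge cycle may help make the control flow concrete. Everything here is hypothetical: `plan`, `work`, and `judge` stand in for LLM-backed agents, the codebase is modeled as a dict mapping area names to open items and sub-areas, and the `workers` parameter caps how many tasks run per cycle. It shows recursive planning, independent workers, and a judge deciding whether to start another fresh cycle; it is not Cursor’s harness.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkItem:
    area: str
    description: str


# Hypothetical codebase model: each area has open "todo" items and optional "children".
Tree = dict[str, dict[str, list[str]]]


def plan(area: str, tree: Tree) -> list[WorkItem]:
    """Planner: list open work in this area, delegating sub-areas to sub-planners."""
    node = tree[area]
    tasks = [WorkItem(area, todo) for todo in node.get("todo", [])]
    for sub_area in node.get("children", []):
        tasks.extend(plan(sub_area, tree))  # recursive sub-planner call
    return tasks


def work(item: WorkItem) -> WorkItem:
    """Worker: handles one task in isolation and returns the change to push."""
    return item  # stand-in for an LLM agent editing code


def judge(tree: Tree) -> bool:
    """Judge: decide whether another cycle is needed."""
    return any(node.get("todo") for node in tree.values())


def run(tree: Tree, root: str, workers: int = 2, max_cycles: int = 10) -> int:
    """Run plan -> work -> judge cycles; return how many cycles were used."""
    for cycle in range(1, max_cycles + 1):
        batch = plan(root, tree)[:workers]  # each cycle re-plans from the current state
        with ThreadPoolExecutor(max_workers=workers) as pool:
            pushed = list(pool.map(work, batch))  # workers never talk to each other
        for change in pushed:  # "push": apply each finished change
            tree[change.area]["todo"].remove(change.description)
        if not judge(tree):
            return cycle
    return max_cycles


codebase: Tree = {
    "app": {"todo": ["router"], "children": ["ui", "api"]},
    "ui": {"todo": ["button", "modal"]},
    "api": {"todo": ["auth"]},
}
print(run(codebase, "app", workers=2))  # 2: four tasks, two workers per cycle
```

Re-planning from the current state at the start of every cycle is the sketch’s stand-in for the “fresh start” the judge enables.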

Cursor’s key finding on coordination and behavioral consistency: “The harness and models matter, but the prompts matter more.” A hierarchical structure with clear accountability addressed the risk-aversion problem. Too little structure creates duplication and drift; too much breeds fragility. Cursor found a workable middle ground.

Real-World Results That Prove Viability

Six major experiments demonstrate what’s now possible with multi-agent AI coding systems:

- Web browser from scratch: Close to a week of runtime, 1M+ lines across 1,000 files (GitHub: fastrender). Building a browser is extremely difficult, and the agents produced one.

- Solid to React migration: 3+ weeks, +266K/-193K edits to Cursor’s own production codebase, assessed as mergeable based on testing.

- Video rendering optimization: 25x performance improvement with Rust rewrite, production-ready.

- Java LSP: 7,400 commits, 550K lines of code for complex language server implementation.

- Windows 7 emulator: 14,600 commits, 1.2M lines for system-level programming.

- Excel implementation: 12,000 commits, 1.6M lines for complex application logic.

The browser project stands out. Agents handled HTML/CSS parsing, JavaScript engine integration, the rendering pipeline, network protocols, and security sandboxing, components that traditionally take experienced human teams months to build. The Solid to React migration shows agents can tackle real production refactoring at scale, not just greenfield experiments.

Coordination at this scale was widely considered impractical: hundreds of workers pushing to the same branch should create merge chaos. It didn’t. A hierarchical structure with independent workers and periodic judge oversight kept the codebase coherent while enabling massive parallelism.

GPT-5.2 vs Claude Opus 4.5: Performance Gap

Cursor’s experiments revealed a significant performance difference for extended autonomous work. GPT-5.2 models proved “much better at extended autonomous work: following instructions, keeping focus, avoiding drift, and implementing things precisely and completely.” GPT-5.2 even outperformed GPT-5.1-codex as a planner, although the latter is specialized for coding, a sign that matching the model to the role matters.

Claude Opus 4.5 behaved differently. Cursor found it “tends to stop earlier and take shortcuts when convenient, yielding back control quickly,” which makes it less suitable for sustained autonomous runs that require week-long focus. On shorter, well-scoped tasks the two are close: vendor-reported SWE-bench Verified scores put Opus 4.5 at 80.9% (the first model reported above 80%) and GPT-5.2 at 80.0%.

The takeaway: model performance depends on the use case. Opus 4.5 excels at agentic workflows with human collaboration (reported figures include 30+ hour stability and 50-75% fewer tool-calling errors). GPT-5.2 maintains performance over week-long autonomous tasks with minimal human intervention. Choose accordingly.
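One way to act on “choose accordingly” is a small routing rule inside an agent harness. The sketch below is an illustration under stated assumptions: the 8-hour cutoff, the `AgentJob` fields, and the model strings are hypothetical placeholders (display labels, not verified API model identifiers), and nothing here comes from Cursor. It encodes only the qualitative guidance above: long unattended runs go to GPT-5.2, interactive or short work goes to Opus 4.5.

```python
from dataclasses import dataclass

# Display labels only; these are not verified API model identifiers.
LONG_HORIZON_MODEL = "GPT-5.2"
INTERACTIVE_MODEL = "Claude Opus 4.5"

# Hypothetical cutoff separating interactive sessions from long unattended runs.
LONG_RUN_HOURS = 8.0


@dataclass(frozen=True)
class AgentJob:
    expected_hours: float  # how long the agent is expected to work
    human_in_loop: bool    # whether a developer reviews and steers as it goes


def pick_model(job: AgentJob) -> str:
    """Route long, unattended jobs to the model that held focus over long runs."""
    if job.human_in_loop or job.expected_hours < LONG_RUN_HOURS:
        return INTERACTIVE_MODEL
    return LONG_HORIZON_MODEL


print(pick_model(AgentJob(expected_hours=72, human_in_loop=False)))  # GPT-5.2
print(pick_model(AgentJob(expected_hours=2, human_in_loop=True)))    # Claude Opus 4.5
```

The cutoff is the real judgment call; a team would tune it against its own logs rather than take 8 hours as given.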

What Autonomous Agents Mean for Developers

Industry context tempers the breakthrough. In the 2025 Stack Overflow Developer Survey, 46% of developers said they actively distrust the accuracy of AI tools, and the top frustration (cited by 66%) was AI output that’s “almost right, but not quite.” Estimates suggest agents can handle 40-60% of development work with appropriate oversight: not replacement, but significant augmentation. Some projections put success rates at 70-80% by 2026.

Practical patterns are emerging from agentic development. Planner-executor separation is becoming standard. Git worktrees isolate tasks for parallel work. A strong test suite acts as a safety net: agents “can fly through projects with good test suites.” Version control serves as the coordination mechanism. Best practices consistent with Cursor’s experiments: plan before coding with detailed specs, break work into small chunks that fit within a manageable context, provide clear guidelines through style guides and rules files, and measure net productivity across the entire workflow.
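The worktree-plus-tests pattern can be sketched in Python around the real `git worktree add -b <branch> <path> <commit-ish>` and `git -C <path> push` commands. The `agent/` branch prefix, the `.worktrees` directory, the `do_work` callback, and the default `pytest -q` test command are all assumptions for illustration. Each task gets its own branch and checkout, and a worker’s branch is pushed only if the test suite passes inside that checkout.

```python
import subprocess
from pathlib import Path
from typing import Callable, Optional

WORKTREE_ROOT = Path(".worktrees")  # hypothetical location for per-task checkouts


def branch_for(task_id: str) -> str:
    return f"agent/{task_id}"  # hypothetical branch naming scheme


def worktree_cmd(task_id: str, base: str = "main") -> list[str]:
    """`git worktree add -b <branch> <path> <base>`: an isolated branch and directory per task."""
    return ["git", "worktree", "add", "-b", branch_for(task_id),
            str(WORKTREE_ROOT / task_id), base]


def push_cmd(task_id: str) -> list[str]:
    """Push the task branch from inside its own worktree."""
    return ["git", "-C", str(WORKTREE_ROOT / task_id), "push", "origin", branch_for(task_id)]


def run_task(task_id: str, do_work: Callable[[Path], None],
             test_cmd: Optional[list[str]] = None) -> bool:
    """Create the worktree, let a worker edit it, and push only if the tests pass."""
    subprocess.run(worktree_cmd(task_id), check=True)
    workdir = WORKTREE_ROOT / task_id
    do_work(workdir)  # the agent edits and commits inside its own checkout
    tests = subprocess.run(test_cmd or ["pytest", "-q"], cwd=workdir)
    if tests.returncode != 0:
        return False  # the test suite is the safety net: nothing is pushed
    subprocess.run(push_cmd(task_id), check=True)
    return True


print(worktree_cmd("t42"))
```

Because every worker commits on its own branch in its own directory, version control rather than an ad hoc message bus becomes the place where parallel work meets.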

The architectural blueprint is reusable. Developers building multi-agent systems can apply planner-worker-judge patterns now rather than waiting for tools to implement them. Model selection matters: GPT-5.2 for extended autonomous work, Opus 4.5 for interactive agentic workflows. Human oversight remains essential. Some reports put AI-augmented developers at 2-3x the productivity of unaugmented peers, but “the future isn’t fully autonomous AI development—it’s AI-augmented human developers” doing more.

Challenges and Future Evolution

Cursor acknowledges current limitations despite the strong results. Planners lack wake-on-completion triggers, so they are not notified when their tasks finish. Agents occasionally run excessively long. Periodic fresh starts are still necessary to combat tunnel vision. Coordination is “nowhere near optimal,” according to the team.
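A wake-on-completion trigger is a familiar concurrency pattern. A minimal sketch with Python’s standard `concurrent.futures`, assuming hypothetical `worker` tasks that just sleep and a hypothetical `follow_ups` map: instead of polling on a timer, the planner blocks on `wait(..., return_when=FIRST_COMPLETED)` and reacts the moment any worker finishes, here by scheduling follow-up work. This illustrates the idea only; it is not how Cursor plans to implement it.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def worker(task: str, seconds: float) -> str:
    """Stand-in for a worker agent; sleeps to simulate work of varying length."""
    time.sleep(seconds)
    return task


def planner(jobs: dict[str, float], follow_ups: dict[str, str]) -> list[str]:
    """Wake whenever any worker completes, instead of polling on a fixed schedule."""
    finished: list[str] = []
    with ThreadPoolExecutor(max_workers=4) as pool:
        pending = {pool.submit(worker, name, secs) for name, secs in jobs.items()}
        while pending:
            # Blocks until at least one worker finishes; no sleep-and-check loop.
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for fut in done:
                name = fut.result()
                finished.append(name)
                nxt = follow_ups.get(name)
                if nxt is not None:
                    # The planner reacts immediately by scheduling follow-up work.
                    pending.add(pool.submit(worker, nxt, 0.01))
    return finished


result = planner({"parse-css": 0.05, "layout": 0.01}, {"parse-css": "cascade"})
print(result)
```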

Developer concerns from community discussions highlight real problems. Poorly coordinated multi-agent systems produce code “like 10 devs worked on it without talking to each other,” with inconsistency and duplication at scale. Security remains a concern: giving agents autonomous system access is “like letting a robot run freely in your computer without safety protection.” Context management also grows more complex as more agents are added.

Looking ahead, the trend points toward specialized multi-agent systems for design, testing, documentation, and deployment working in parallel. The Linux Foundation launched the Agentic AI Foundation to establish shared standards. Development team structures may shift toward architecture, oversight, and verification as primary human roles. But today’s systems require human oversight, careful prompting, strong testing, and realistic expectations about what agents can and can’t handle autonomously.

Cursor’s research shows that week-long autonomous coding projects are viable at unprecedented scale: hundreds of agents coordinating effectively, millions of lines of code, and complex greenfield and migration projects, demonstrated in extended experiments that include Cursor’s own production code. The architecture pattern works. Model comparisons now rest on observed behavior over long runs rather than speculation. Developers can apply these patterns immediately. Multi-agent collaboration has moved from theory to practice, with both the successes and limitations clearly documented.