DeepSeek has shown that billion-dollar annotation budgets are not mandatory for frontier AI. Its R1 model reaches OpenAI o1-level reasoning with a training recipe built around reinforcement learning, and its R1-Zero precursor gets there with no human-labeled reasoning chains at all. DeepSeek’s reported training bill is $5.58 million, against the $6+ billion OpenAI has reportedly invested, and R1 matches o1 on math, code, and reasoning benchmarks at a small fraction of the cost. The industry assumed expensive human supervision was the only path to advanced reasoning. DeepSeek’s RL-first approach loosens that bottleneck, widens access through open source, and shows that a model can discover reasoning patterns nobody explicitly taught it.

Pure Reinforcement Learning: The Training Breakthrough

DeepSeek R1-Zero represents the first open validation that reasoning capabilities can emerge purely from reinforcement learning, with no supervised fine-tuning as a preliminary step. The model spontaneously developed self-verification, reflection, multi-step reasoning chains, and dynamic strategy adaptation—behaviors that weren’t explicitly programmed but emerged through RL exploring the solution space.

OpenAI has not published o1’s training recipe, but the conventional route to reasoning models relies on large volumes of human-annotated reasoning chains. Teams manually label how the model should think through problems, an expensive and slow process. DeepSeek’s approach cuts this to a minimum. R1-Zero skips it entirely, and R1’s four-stage training pipeline needs only a small cold-start phase of supervised fine-tuning on curated reasoning examples. The pipeline then pivots to large-scale reasoning RL with rule-based evaluation, continues with fine-tuning on synthesized data whose quality the V3 model judges, and concludes with a broader RL stage that balances helpfulness against preserving reasoning ability.
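The rule-based evaluation in the second stage can be pictured as a plain function that scores a completion with no learned judge involved. The sketch below is a minimal illustration, not DeepSeek’s code: the function names, the exact-match comparison, and the `format_weight` value are assumptions made for the example, and the `<think>`/`<answer>` tag layout is assumed as the expected output format.

```python
import re

# Assumed layout: reasoning in <think>...</think>, then the final answer
# in <answer>...</answer>. The tags and the weight below are illustrative.
_LAYOUT = re.compile(
    r"^\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion follows the expected tag layout, else 0.0."""
    return 1.0 if _LAYOUT.match(completion) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the extracted answer equals the reference string, else 0.0."""
    match = _LAYOUT.match(completion)
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def rule_based_reward(completion: str, reference: str,
                      format_weight: float = 0.2) -> float:
    """Combine correctness and formatting into a single scalar reward."""
    return (accuracy_reward(completion, reference)
            + format_weight * format_reward(completion))


if __name__ == "__main__":
    sample = "<think>2 + 2 equals 4.</think><answer>4</answer>"
    print(rule_based_reward(sample, "4"))
```

Because the score comes from deterministic checks, it can be computed for millions of sampled completions without paying annotators, which is the point of the RL-first recipe.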

The key insight: RL-first strategies reduce dependency on human-annotated datasets while uncovering emergent capabilities organically. The AI teaches itself reasoning patterns through trial-and-error exploration, discovering approaches humans didn’t explicitly map. That’s not incremental improvement—it’s a methodological shift.

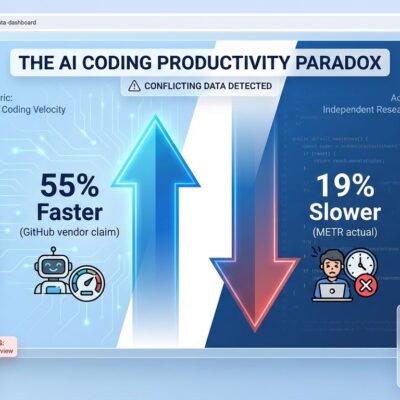

The Cost Disruption Nobody Expected

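DeepSeek’s widely quoted $5.58 million figure is the reported GPU cost of the final training run for V3, the base model R1 builds on. OpenAI has reportedly invested over $6 billion in its own development. Taken at face value, $5.58 million is under 0.1% of $6 billion, a reduction of more than 99%. The two figures measure different things, so the ratio conveys the order of magnitude rather than a like-for-like comparison.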

The difference stems from annotation economics. Traditional supervised training requires extensive labeled datasets from human annotators, often expensive specialists who map out reasoning chains step by step. Compute adds to the bill: OpenAI’s infrastructure costs were estimated at about $700,000 per day in 2023, and 2024 projections put training and inference combined near $7 billion for the year.

Pure RL sidesteps this bottleneck. No annotation armies, no labeled reasoning trajectories, just reward signals guiding exploration. One report puts DeepSeek’s RL training budget at $12 million. That does not square with the $5.58 million figure above, so both are best read as rough estimates, but either is small next to OpenAI’s spend.

Operational costs follow the same pattern. On Fireworks AI, DeepSeek R1 runs at $8 per million tokens. OpenAI charges $15 per million input tokens and $60 per million output tokens for o1. That puts R1 at roughly 53% of o1’s input price and 13% of its output price, so the savings grow with the share of output tokens, which reasoning models produce in bulk. Reasoning AI no longer requires massive budgets.
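How much a given workload saves depends on its input/output mix, which a few lines of arithmetic make concrete. The sketch below uses only the per-million-token prices quoted above; the function names and the example workloads are assumptions for illustration.

```python
def cost_usd(input_tokens: int, output_tokens: int,
             input_price: float, output_price: float) -> float:
    """Cost in USD for a workload, given prices per million tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# (input price, output price) per million tokens, as quoted in the text.
R1_FIREWORKS = (8.0, 8.0)
OPENAI_O1 = (15.0, 60.0)


def r1_share_of_o1(input_tokens: int, output_tokens: int) -> float:
    """R1 cost as a fraction of o1 cost for the same non-empty workload."""
    r1 = cost_usd(input_tokens, output_tokens, *R1_FIREWORKS)
    o1 = cost_usd(input_tokens, output_tokens, *OPENAI_O1)
    return r1 / o1


if __name__ == "__main__":
    # Hypothetical workloads: input-only, even split, output-only.
    for mix in [(1_000_000, 0), (500_000, 500_000), (0, 1_000_000)]:
        print(mix, f"{r1_share_of_o1(*mix):.1%}")
```

An input-only workload comes out at about 53% of o1’s cost, an output-only one at about 13%, and an even split at about 21%.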

Performance: Pure RL Matches Supervised Giants

DeepSeek R1 scores 79.8% on the AIME 2024 mathematics exam, edging past OpenAI o1’s 79.2%. On the MATH-500 dataset, it reaches 97.3% accuracy. On Codeforces programming challenges it earns a 2029 Elo rating, 32 points behind o1’s 2061. Among English-language benchmarks, it scores 84.0% on MMLU-Pro.

The architecture uses a Mixture of Experts (MoE) framework with 671 billion total parameters, of which only about 37 billion are activated for each token. A router picks a small subset of experts per token, so the compute per token is closer to that of a 37-billion-parameter model than a 671-billion-parameter one. That is the MoE advantage: massive capacity without matching compute costs.
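Sparse activation is easier to see in code. The sketch below shows generic softmax top-k routing in pure Python. It is a toy illustration of the idea, not DeepSeek’s router: the four scalar "experts", the router scores, and `k = 2` are all assumptions chosen to keep the example small.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over router scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_scores: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                router_scores: Sequence[float], k: int = 2) -> float:
    """Run only the selected experts and mix their outputs by weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_scores, k))


# Toy experts: each one just scales its input (an assumption for brevity).
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Share of parameters active per token, from the figures in the text.
active_fraction = 37 / 671

if __name__ == "__main__":
    print(moe_forward(2.0, experts, [0.0, 5.0, 1.0, 5.0], k=2))
    print(f"{active_fraction:.1%}")
```

Only the experts returned by `top_k_route` are ever called, so the cost of a forward step scales with `k` rather than with the total number of experts. With the article’s figures, about 5.5% of the parameters are active for any one token.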

Performance parity with radically different training validates the RL-first approach. Expensive supervision isn’t the only path to reasoning—it might not even be the best path.

Open Source Democratization

DeepSeek released R1 under the MIT License. Commercial use, modifications, and distillation are explicitly permitted. Within hours, the model topped Hugging Face download charts.

Six distilled models accompanied the release: 1.5B, 7B, 8B, 14B, 32B, and 70B parameter variants built on Qwen and Llama foundations. The 32B distilled version outperforms OpenAI o1-mini across various benchmarks, setting new state-of-the-art results for dense models. The smaller variants run on consumer GPUs, making reasoning AI accessible to teams without enterprise infrastructure budgets.

Compare that to OpenAI’s approach: o1 remains closed, accessible only through API calls at premium pricing. DeepSeek chose radical openness instead. The community responded with Open-R1, a full reproduction project, and dozens of derivative experiments within days.

MIT licensing removes a major barrier to enterprise adoption of reasoning capabilities. Developers can deploy, modify, and distill without legal uncertainty. That is how access to a technology broadens.

The Reinforcement Learning Renaissance

DeepSeek R1 signals a broader industry shift toward reinforcement learning methodologies. Research teams are introducing RL objectives earlier in training pipelines through policy pretraining. Companies like Osmosis focus on real-time RL enabling continuous improvement without human-in-the-loop intervention.

The trend extends beyond language models. Reinforcement Learning with Verifiable Rewards (RLVR) replaces subjective alignment with objective, provable correctness criteria. UCLA research demonstrates systems learning directly from physical measurements without detailed models—autonomous learning systems adapting in real time.
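A verifiable reward is simply a check that a program can run. For a coding task, one natural choice is the fraction of test cases a candidate solution passes. The sketch below illustrates that idea under stated assumptions: `verifiable_reward`, the `TestCase` shape, and the toy `add` task are hypothetical, and a real pipeline would execute model-generated code inside a sandbox rather than calling it directly.

```python
from typing import Any, Callable, Sequence, Tuple

# (positional arguments, expected result) for one check.
TestCase = Tuple[tuple, Any]


def verifiable_reward(candidate: Callable[..., Any],
                      tests: Sequence[TestCase]) -> float:
    """Fraction of test cases the candidate passes; crashes count as failures."""
    if not tests:
        return 0.0
    passed = 0
    for args, expected in tests:
        try:
            if candidate(*args) == expected:
                passed += 1
        except Exception:
            pass  # an exception earns no credit for this case
    return passed / len(tests)


# Hypothetical task: implement integer addition.
ADD_TESTS: Sequence[TestCase] = [((2, 3), 5), ((0, 0), 0), ((-1, 1), 0)]


def correct_add(a: int, b: int) -> int:
    return a + b


def buggy_add(a: int, b: int) -> int:
    return a - b  # deliberately wrong, passes only the (0, 0) case


if __name__ == "__main__":
    print(verifiable_reward(correct_add, ADD_TESTS))
    print(verifiable_reward(buggy_add, ADD_TESTS))
```

The reward here is objective in the sense the paragraph describes: two people running the same tests get the same score, with no preference labeling involved.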

DeepSeek proved RL-first approaches work for frontier AI. Expect more models trained this way, fewer annotation bottlenecks, and faster capability development. Pure reinforcement learning just became the practical alternative to supervised training orthodoxy.