Hugging Face launched Open-R1 in late January 2025 as a project to build the first fully open reproduction of DeepSeek-R1, complete with datasets, training code, and an evaluation pipeline. DeepSeek had released R1’s model weights under the MIT license earlier that month. The training data and code stayed closed, however, so researchers could not reproduce a model that matched OpenAI’s o1 on reasoning benchmarks at roughly 27x lower API prices. Open-R1 fills this gap with Mixture-of-Thoughts, a dataset of 350,000 verified reasoning traces, and a systematic 3-step reproduction plan. Step 1 is complete: OpenR1-Distill-7B matches the performance of DeepSeek’s distilled 7B model, and developers can start building reasoning models today.

“Open Weights” Isn’t Enough – The Reproducibility Gap

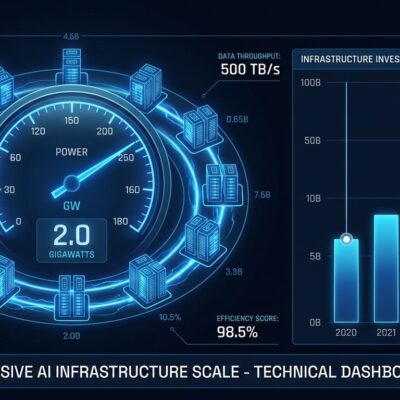

DeepSeek’s January 2025 release impressed the AI world. Its reasoning performance rivaled OpenAI’s o1 at a launch price of $0.55 per million input tokens, compared with o1’s $15, which works out to roughly a 27x cost advantage. The MIT license seemed generous. But here’s what DeepSeek actually released: model weights (671 billion parameters), a technical paper, and benchmark results. What they didn’t release: the training datasets, the training code, or the full training hyperparameters.

Researchers can use the model but can’t reproduce it. You can download the weights, run inference, and check the benchmark numbers. What you can’t do is verify how the model was trained or improve on DeepSeek’s training methods. The Hugging Face team put it bluntly: “The goal is to build the missing pieces so that the whole research and industry community can build similar or better models using these recipes and datasets.”

In 2026, “open-source AI” needs to mean more than downloadable weights. Scientific integrity requires reproducibility. DeepSeek’s release was impressive but incomplete. Open-R1 completes the picture.

The 3-Step Reproduction Plan (Step 1 Done)

Open-R1’s systematic approach breaks reproduction into three clear phases, with Step 1 already complete.

Step 1: Distillation (Completed)

The team distilled high-quality reasoning datasets from DeepSeek-R1 to create Mixture-of-Thoughts, a set of 350,000 verified reasoning traces covering math, coding, and science tasks. They then trained OpenR1-Distill-7B on this dataset, matching the performance of DeepSeek-R1-Distill-Qwen-7B. Both the dataset and the model weights are now available on the Hugging Face Hub.
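
The following minimal sketch shows what one training example might look like. It rests on two assumptions: each Mixture-of-Thoughts row stores a chat transcript in a `messages` list of role/content dicts, and the assistant turn wraps its reasoning in DeepSeek-R1-style `<think>...</think>` tags. Confirm both the field names and the tag convention against the dataset card. The sample record is invented. The helper `split_reasoning` (a hypothetical name) separates the reasoning trace from the final answer.

```python
import re
from typing import Optional

# Invented record in the *assumed* Mixture-of-Thoughts layout (not real data).
SAMPLE_RECORD = {
    "messages": [
        {"role": "user", "content": "What is 12 * 13?"},
        {
            "role": "assistant",
            "content": "<think>\n12 * 13 = 12 * 10 + 12 * 3 = 120 + 36 = 156.\n</think>\n\nThe answer is 156.",
        },
    ],
}

# Assumed R1-style layout: a <think> block, then the final answer text.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>\s*(.*)", re.DOTALL)


def split_reasoning(record: dict) -> Optional[tuple[str, str]]:
    """Return (reasoning, answer) from the last assistant turn, or None if absent."""
    assistant_turns = [m for m in record["messages"] if m["role"] == "assistant"]
    if not assistant_turns:
        return None
    match = THINK_PATTERN.search(assistant_turns[-1]["content"])
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip()


if __name__ == "__main__":
    reasoning, answer = split_reasoning(SAMPLE_RECORD)
    print("reasoning:", reasoning)
    print("answer:", answer)
```

With network access, `datasets.load_dataset("open-r1/Mixture-of-Thoughts", "all", split="train")` should yield rows that can be passed to the same helper. This assumes `all` is the name of the combined config.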

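This is the breakthrough moment. Developers no longer need expensive reinforcement learning to give a model reasoning skills. Supervised fine-tuning of an existing base model on Mixture-of-Thoughts can transfer much of R1’s reasoning behavior. The released 7B model starts from open-r1/Qwen2.5-Math-7B-RoPE-300k. Other bases such as Llama or Mistral can follow the same recipe, though results will vary. Training is launched with a single command from the root of the repository: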

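```shell
# Run from the root of the open-r1 repository (paths are relative to it).
# --dataset_config picks a subset: all, math, code or science.
accelerate launch --config_file=recipes/accelerate_configs/zero3.yaml \
    src/open_r1/sft.py \
    --model_name_or_path open-r1/Qwen2.5-Math-7B-RoPE-300k \
    --dataset_name open-r1/Mixture-of-Thoughts \
    --dataset_config all \
    --output_dir data/OpenR1-Distill-7B
```

Step 2: Pure RL Pipeline (In Progress)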

The second phase aims to replicate DeepSeek’s R1-Zero approach: pure reinforcement learning without supervised fine-tuning. The team is implementing Group Relative Policy Optimization (GRPO) on large-scale datasets. The goal is to test DeepSeek’s claim that reasoning capabilities emerge from RL alone.
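
The core of GRPO fits in a few lines. For each prompt, the policy samples a group of completions and a rule-based reward scores each one. Each completion’s advantage is then its reward minus the group mean, divided by the group standard deviation, so no separate value (critic) model is needed. The sketch below assumes two simple rewards of the kind the R1 paper describes: a format check for `<think>` tags and an exact-match accuracy check on a `\boxed{}` answer. The function names, the equal weighting of the two rewards, and the epsilon are illustrative choices, not Open-R1’s code.

```python
import re
import statistics

# Assumed completion format: <think>reasoning</think> followed by an answer.
FORMAT_RE = re.compile(r"^<think>.*?</think>\s*\S", re.DOTALL)
BOXED_RE = re.compile(r"\\boxed\{([^{}]*)\}")


def format_reward(completion: str) -> float:
    """1.0 if the completion opens with a <think>...</think> block and then answers."""
    return 1.0 if FORMAT_RE.match(completion) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the last \\boxed{...} answer exactly matches the reference string."""
    answers = BOXED_RE.findall(completion)
    return 1.0 if answers and answers[-1].strip() == reference.strip() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: standardize each reward against its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    reference = "156"
    group = [
        "<think>12*13 = 156</think> The answer is \\boxed{156}.",
        "<think>12*13 = 146</think> The answer is \\boxed{146}.",
        "The answer is \\boxed{156}.",  # correct, but skips the reasoning block
        "<think>not sure</think> I don't know.",
    ]
    # Equal weighting of the two rewards is an illustrative choice.
    rewards = [accuracy_reward(c, reference) + format_reward(c) for c in group]
    print("rewards:", rewards)
    print("advantages:", [round(a, 3) for a in group_relative_advantages(rewards)])
```

When every completion in a group earns the same reward, all advantages are zero and that prompt contributes no learning signal. This is one reason RL training data tends to favor problems the model solves only some of the time.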

Step 3: Multi-Stage Training (Planned Q2-Q3 2026)

The final phase demonstrates the full end-to-end pipeline: base model → supervised fine-tuning → reinforcement learning → production-ready reasoning model. Once it is finished, researchers will have the full blueprint for building reasoning AI, not just the final product.
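
A blueprint is only useful if each stage’s settings are recorded. One way to picture this is as an ordered list of stages whose configs are fingerprinted. The sketch below is purely illustrative: the stage names, the config keys, and the idea of chaining SHA-256 fingerprints are assumptions for this example, not part of Open-R1. Each stage’s fingerprint covers its own config plus the previous stage’s fingerprint, so changing any upstream setting changes every downstream ID.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Stage:
    """One training stage; names and config keys here are illustrative only."""
    name: str
    config: dict = field(default_factory=dict)


def fingerprint_pipeline(base_model: str, stages: list[Stage]) -> list[tuple[str, str]]:
    """Return (stage name, SHA-256 fingerprint) pairs, each chained to the previous one."""
    previous = hashlib.sha256(base_model.encode("utf-8")).hexdigest()
    fingerprints = []
    for stage in stages:
        # sort_keys makes the serialized config, and so the hash, independent of key order.
        payload = json.dumps(
            {"parent": previous, "name": stage.name, "config": stage.config},
            sort_keys=True,
        )
        previous = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        fingerprints.append((stage.name, previous))
    return fingerprints


if __name__ == "__main__":
    # Hypothetical recipe: base model -> SFT -> RL, with placeholder settings.
    recipe = [
        Stage("sft", {"dataset": "open-r1/Mixture-of-Thoughts"}),
        Stage("rl", {"algorithm": "grpo", "rewards": ["accuracy", "format"]}),
    ]
    for name, digest in fingerprint_pipeline("open-r1/Qwen2.5-Math-7B-RoPE-300k", recipe):
        print(f"{name}: {digest[:12]}")
```

In practice, such a manifest would also need to pin library versions and dataset revisions before two runs could be called identical.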

What Developers Can Do Today

Open-R1 democratizes reasoning AI in ways DeepSeek’s weights-only release couldn’t.

Use Mixture-of-Thoughts immediately. The 350,000 verified reasoning examples are available now. Fine-tune your existing models into reasoning models without touching RL training. The barrier to entry just collapsed.
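
Fine-tuning on reasoning traces is plain supervised learning: the model learns to predict each token of the reference trace given everything before it. A common variant computes the loss only on the assistant’s tokens and ignores the prompt. This variant is assumed here, not read from Open-R1’s sft.py. The toy sketch below computes that masked negative log-likelihood from made-up per-token probabilities. In a real run, these probabilities come from the model’s softmax over the vocabulary at each position of the reference trace.

```python
import math


def masked_nll(token_probs: list[float], loss_mask: list[int]) -> float:
    """Mean negative log-likelihood over positions where loss_mask == 1.

    token_probs[i] is the probability the model assigned to reference token i.
    loss_mask[i] is 1 for assistant (reasoning + answer) tokens, 0 for prompt tokens.
    """
    if len(token_probs) != len(loss_mask):
        raise ValueError("token_probs and loss_mask must have the same length")
    terms = [-math.log(p) for p, m in zip(token_probs, loss_mask) if m]
    if not terms:
        raise ValueError("loss_mask selects no tokens")
    return sum(terms) / len(terms)


if __name__ == "__main__":
    # Toy sequence: 3 prompt tokens, then 4 assistant tokens (made-up probabilities).
    probs = [0.9, 0.8, 0.7, 0.5, 0.25, 0.5, 0.25]
    mask = [0, 0, 0, 1, 1, 1, 1]
    print(round(masked_nll(probs, mask), 4))
```

The prompt tokens’ probabilities have no effect on the result. Only the reasoning and answer the model is meant to imitate contribute to the loss.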

Validate scientific claims independently. DeepSeek reported that pure RL training produces reasoning without supervised fine-tuning. With Open-R1’s GRPO training code, researchers can test that claim themselves instead of trusting reported benchmarks. Open science requires reproducibility.
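
Checking a reported benchmark number yourself usually means sampling several completions per problem and counting the correct ones. Reasoning results are often reported as pass@1 averaged over several samples per problem, because a single sample is noisy. The sketch below uses the unbiased pass@k estimator from the Codex paper (Chen et al., 2021): pass@k = 1 - C(n - c, k) / C(n, k), where n is the number of samples and c the number correct. Check which estimator a given report actually used against its evaluation code. The results in the example are invented.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset of the samples contains at least one correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average the per-problem estimates over a benchmark given as (n, c) pairs."""
    if not results:
        raise ValueError("results must not be empty")
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Invented results: 3 problems, 4 samples each, with 4, 1 and 0 correct.
    results = [(4, 4), (4, 1), (4, 0)]
    print("pass@1:", benchmark_pass_at_k(results, 1))
    print("pass@4:", benchmark_pass_at_k(results, 4))
```

For k = 1, the estimator reduces to c / n, the fraction of correct samples, which is why pass@1 is reported as an average accuracy.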

Build domain-specific reasoning models. The Hugging Face team emphasized they “don’t want to stop at math datasets… scientific fields such as medicine, where reasoning models could have significant impact.” Medical diagnosis reasoning. Legal case analysis. Code debugging strategies. The training pipeline works; adapt it to your domain.
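
Adapting the recipe to a new domain mostly means producing training records in the same shape as the existing data. The hypothetical helper below assumes a chat-style `messages` layout with R1-style `<think>` tags, which should be checked against the Mixture-of-Thoughts dataset card. It turns a domain question, a verified reasoning trace, and a final answer into one record, then writes records as JSON Lines, a format `datasets.load_dataset("json", data_files=...)` can read. The function names and the `source` field are illustrative assumptions.

```python
import io
import json
from typing import TextIO


def make_record(question: str, reasoning: str, answer: str, source: str) -> dict:
    """Build one chat-format SFT record (hypothetical schema mirroring R1-style traces)."""
    for name, value in {"question": question, "reasoning": reasoning, "answer": answer}.items():
        if not value.strip():
            raise ValueError(f"{name} must be non-empty")
    return {
        "messages": [
            {"role": "user", "content": question.strip()},
            {
                "role": "assistant",
                "content": f"<think>\n{reasoning.strip()}\n</think>\n\n{answer.strip()}",
            },
        ],
        "source": source,  # keeps provenance so domain subsets can be filtered later
    }


def write_jsonl(records: list[dict], stream: TextIO) -> None:
    """Write one JSON object per line (JSON Lines)."""
    for record in records:
        stream.write(json.dumps(record, ensure_ascii=False) + "\n")


if __name__ == "__main__":
    # Placeholder content; a real domain dataset needs expert-verified traces.
    record = make_record(
        question="A dose is 5 mg/kg and the patient weighs 70 kg. What is the total dose?",
        reasoning="Total dose = 5 mg/kg * 70 kg = 350 mg.",
        answer="350 mg",
        source="hypothetical-medical-demo",
    )
    buffer = io.StringIO()
    write_jsonl([record], buffer)
    print(buffer.getvalue(), end="")
```

The quality of the reasoning traces matters more than the plumbing. In fields like medicine, each trace would need expert verification, just as Mixture-of-Thoughts uses verified traces.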

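The cost context matters. The DeepSeek-R1 API launched at $0.55 per million input tokens, already 27x cheaper than o1’s $15. Open-R1 itself is free; you pay only for the compute to fine-tune. DeepSeek reported about $5.6 million in GPU time for the final training run of DeepSeek-V3, the base model R1 was built on, a figure that excludes earlier research and experiments. Fine-tuning on Mixture-of-Thoughts costs a fraction of that.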
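
The price gap is easy to sanity-check. The sketch below uses only the input-token list prices quoted here ($0.55 vs $15 per million tokens). Both providers charge more for output tokens, and those are left out. The model labels are illustrative, not API model IDs, and the 50-million-token workload is a made-up example.

```python
# Launch list prices in USD per million *input* tokens, as quoted in the article.
# Keys are illustrative labels, not official API model identifiers.
PRICE_PER_MILLION_INPUT = {
    "deepseek-r1": 0.55,
    "openai-o1": 15.00,
}


def input_cost(model: str, tokens: int) -> float:
    """Cost in USD of sending `tokens` input tokens to `model` at list price."""
    return PRICE_PER_MILLION_INPUT[model] * tokens / 1_000_000


if __name__ == "__main__":
    ratio = PRICE_PER_MILLION_INPUT["openai-o1"] / PRICE_PER_MILLION_INPUT["deepseek-r1"]
    print(f"price ratio: {ratio:.1f}x")  # 15 / 0.55 is about 27.3
    workload = 50_000_000  # hypothetical monthly input volume
    for model in PRICE_PER_MILLION_INPUT:
        print(f"{model}: ${input_cost(model, workload):,.2f}")
```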

Setting the 2026 Standard for Open AI

Open-R1 establishes what “open-source AI” should mean going forward. There’s now a spectrum of openness, and weights-only releases don’t cut it anymore.

Full transparency (DeepSeek + Open-R1): weights, datasets, training code, methodology.

Open weights (Llama 4): weights released, but no training data or code.

Closed (OpenAI o1): API access only, no transparency.

Red Hat Developer stated in January 2026: “Open science AI models provide transparency and documentation needed for full reproducibility.” That’s the new baseline. Future model releases will face pressure to match Open-R1’s transparency or explain why they won’t.

OpenAI’s o1 delivers superior speed (2x faster) and stronger general knowledge, but at roughly 27x the per-token API price and with zero transparency. DeepSeek-R1 offers excellent performance and MIT-licensed weights, but no datasets or training code. Open-R1 provides full transparency, reproducibility, and community-driven development for the cost of your own compute.

Show your work, not just your model. Reproducibility is a feature, not a bug. 2026 is the year open-washing ends.

Getting Started and What’s Next

The Open-R1 repository is live on GitHub with training code, recipes, and documentation. The Mixture-of-Thoughts dataset and the OpenR1-Distill-7B model are available on the Hugging Face Hub. Requirements: Python 3.11, CUDA 12.4, and standard ML libraries. Installation is automated: make install handles the dependencies.
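
A quick preflight check can catch the most common environment mismatch before a long install. The sketch below checks for the stated Python 3.11 requirement. If PyTorch is installed, it also reports the CUDA version PyTorch was built against, via `torch.version.cuda`. Treating "12.4" as the expected prefix is an assumption taken from the stated requirements. The script does not inspect drivers beyond asking PyTorch whether a CUDA device is visible.

```python
import importlib.util
import sys

REQUIRED_PYTHON = (3, 11)
EXPECTED_CUDA = "12.4"  # assumption: from the stated requirements; update if they change


def check_environment() -> list[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if sys.version_info[:2] != REQUIRED_PYTHON:
        found = ".".join(map(str, sys.version_info[:3]))
        problems.append(f"Python {found} found, {REQUIRED_PYTHON[0]}.{REQUIRED_PYTHON[1]} expected")
    if importlib.util.find_spec("torch") is None:
        problems.append("PyTorch not installed (expected once dependencies are installed)")
    else:
        import torch  # imported lazily so the check also runs without PyTorch

        if torch.version.cuda is None:
            problems.append("PyTorch is a CPU-only build")
        elif not torch.version.cuda.startswith(EXPECTED_CUDA):
            problems.append(f"PyTorch built for CUDA {torch.version.cuda}, {EXPECTED_CUDA} expected")
        elif not torch.cuda.is_available():
            problems.append("No CUDA device visible to PyTorch")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Environment matches the stated requirements.")
```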

The roadmap is clear. Q1-Q2 2026 brings Step 2 completion (pure RL pipeline validated). Q2-Q3 2026 brings Step 3 completion (full multi-stage training proven). Beyond 2026: domain-specific reasoning datasets for medical, legal, and scientific fields.

Open-R1 proves that open-source AI can match closed alternatives when the entire community contributes – not just model weights, but the full recipe. DeepSeek showed the model. Open-R1 showed the work.