January 7, 2026 marked a turning point in AI deployment economics. Microsoft Research’s BitNet.cpp framework went viral, gaining 766 GitHub stars in 24 hours, after demonstrating something that had seemed out of reach: running 100-billion parameter models on consumer CPUs at human reading speed. GPU scarcity and cost have been the primary barrier to AI deployment, with a single H100 GPU costing $25,000-$40,000. For inference workloads, BitNet removes that barrier.

The Technical Breakthrough Behind 1-Bit LLMs

BitNet.cpp is Microsoft’s official inference framework for 1-bit LLMs. It uses 1.58-bit quantization, which represents each weight with one of only three values: -1, 0, or +1 (three possible values carry log2(3) ≈ 1.58 bits of information). This isn’t post-training quantization applied to existing models. BitNet models are trained natively in 1.58-bit from scratch, which maintains accuracy while dramatically reducing computational requirements.
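To make the three-value representation concrete, here is a minimal Python sketch of how real-valued weights can be mapped to -1, 0, and +1 with a single scale factor. It assumes an absmean-style rounding rule (divide by the mean absolute weight, round, clip), which is how the BitNet b1.58 work is commonly described; the function name `absmean_ternarize` and the sample weights are hypothetical. In BitNet itself this mapping is applied during training, not as a one-off conversion of a finished model.

```python
import math


def absmean_ternarize(weights, eps=1e-8):
    """Map real-valued weights to {-1, 0, +1} plus one shared scale.

    Illustrative only: assumes an absmean-style rule (scale by the mean
    absolute value, round to nearest integer, clip to [-1, 1]).
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    ternary = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return ternary, scale


# Three possible values carry log2(3) bits of information per weight.
bits_per_weight = math.log2(3)

# Hypothetical full-precision weights for one small row.
weights = [0.9, -0.05, -1.3, 0.4, 0.02, -0.6]
ternary, scale = absmean_ternarize(weights)

print(f"bits per weight: {bits_per_weight:.3f}")
print(f"ternary weights: {ternary}, scale: {scale:.3f}")
```

Small weights collapse to 0 and larger ones saturate at -1 or +1, so each weight keeps only its rough sign and significance while the shared scale carries the magnitude.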

The architecture uses what Microsoft calls W1A8: 1-bit (in practice ternary, 1.58-bit) weights combined with 8-bit activations. Because every weight is -1, 0, or +1, the matrix multiplications that dominate inference need no floating-point multiplication, only additions and subtractions. Multiplying by 1 is identity. Multiplying by 0 is always zero. Multiplying by -1 flips the sign. As a result, Microsoft reports a 71.4x reduction in arithmetic energy consumption for matrix multiplication.
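The addition-only idea can be sketched in a few lines of Python. This is an illustration of the arithmetic, not BitNet.cpp’s actual kernels (which are optimized native code); the helper names `ternary_dot` and `ternary_matvec` and the sample values are hypothetical.

```python
def ternary_dot(weights, activations):
    """Dot product with weights in {-1, 0, +1} using only add and subtract."""
    acc = 0
    for w, x in zip(weights, activations):
        if w == 1:
            acc += x      # multiplying by +1 is identity
        elif w == -1:
            acc -= x      # multiplying by -1 flips the sign
        # w == 0 contributes nothing, so the activation is skipped
    return acc


def ternary_matvec(matrix, activations):
    """Matrix-vector product built from addition-only dot products."""
    return [ternary_dot(row, activations) for row in matrix]


# Hypothetical ternary weight matrix and int8-range activations.
W = [
    [1, 0, -1, 1],
    [-1, -1, 0, 1],
    [0, 1, 1, 0],
]
x = [12, -7, 3, 100]

y = ternary_matvec(W, x)

# Reference result using ordinary multiplication, for comparison.
reference = [sum(w * xi for w, xi in zip(row, x)) for row in W]

print(y, y == reference)
```

The two results agree, which is the point: with ternary weights the multiply step carries no information, so it can be replaced by a branch and an add.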

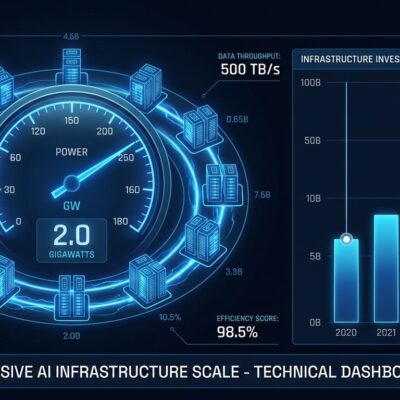

The performance numbers are concrete. On ARM CPUs, BitNet achieves speedups of 1.37x to 5.07x with a 55-70% reduction in energy use. On x86 CPUs, speedups range from 2.37x to 6.17x with 72-82% energy savings. Memory footprint also drops, from 1.4-4.8GB for traditional models of similar size to just 0.4GB for the released BitNet model. Most importantly, BitNet can run a 100-billion parameter model on a single consumer CPU at 5-7 tokens per second, which is comparable to human reading speed.
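A back-of-the-envelope calculation shows why the weight format matters so much at 100 billion parameters. The sketch below counts weight storage only and ignores activations, the KV cache, embeddings, and runtime overhead, so it will not reproduce measured footprints. The 2-bit row is an assumption about how ternary values might be packed in practice, and the function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (10**9 bytes), weights only."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS_100B = 100e9

fp16_gb = weight_memory_gb(PARAMS_100B, 16)             # 16-bit floating point
ternary_ideal_gb = weight_memory_gb(PARAMS_100B, 1.58)  # information-theoretic size
ternary_2bit_gb = weight_memory_gb(PARAMS_100B, 2)      # assumed 2-bit packing

print(f"FP16 weights:            {fp16_gb:.1f} GB")
print(f"Ternary (1.58 bits):     {ternary_ideal_gb:.2f} GB")
print(f"Ternary (2-bit packing): {ternary_2bit_gb:.1f} GB")
```

Under these assumptions the weights shrink from roughly 200 GB to somewhere around 20-25 GB, which is the difference between needing multiple accelerators and fitting in the RAM of a well-equipped desktop.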

GPU Dependency Eliminated: Cost Revolution

The cost implications are straightforward. An NVIDIA H100 GPU costs $25,000-$40,000 to purchase, or $3-$7 per hour to rent in the cloud. A complete 8-GPU cluster runs $200,000-$400,000, plus $50,000-$200,000 in electrical infrastructure upgrades per rack. By contrast, BitNet runs on a $500-$2,000 consumer CPU with zero GPU costs.
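One rough way to read these figures is to ask how many hours of cloud GPU rental it takes to match the purchase price of the CPU. The sketch below uses only the price ranges quoted above. It ignores electricity, the rest of the machine, and any throughput difference between the two setups, so treat it as an order-of-magnitude comparison; the function name `breakeven_hours` is hypothetical.

```python
def breakeven_hours(hardware_cost, hourly_rate):
    """Hours of rental after which cumulative rent equals the hardware cost."""
    return hardware_cost / hourly_rate


# Price ranges taken from the article: CPU $500-$2,000, H100 rental $3-$7/hour.
fastest = breakeven_hours(500, 7)    # cheapest CPU vs. most expensive rental
slowest = breakeven_hours(2000, 3)   # priciest CPU vs. cheapest rental

print(f"Break-even after {fastest:.0f} to {slowest:.0f} hours of GPU rental")
```

Under these assumptions the CPU pays for itself after somewhere between three days and four weeks of continuous use.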

This unlocks deployment scenarios that were economically impossible. Edge devices (IoT sensors, mobile phones, embedded systems) can now run frontier AI models. Privacy-sensitive applications in healthcare, finance, and government can keep AI entirely on-device without cloud dependencies. Startups and small businesses can deploy AI without six-figure infrastructure investments, and developing markets with limited GPU availability can participate in AI development.

The developer community recognized the implications immediately. GitHub trending analysis called January 7 “the day 1-bit LLMs went mainstream.” One developer framed it bluntly: “Microsoft just declared war on the GPU mafia.”

Reality Check: BitNet Limitations and Tradeoffs

BitNet doesn’t eliminate GPUs entirely. It optimizes inference only; training large models still requires GPU clusters. Model size matters too. At 3 billion parameters and above, BitNet b1.58 matches full-precision models, but below that threshold the 1.58-bit quantization shows its limits and accuracy degrades.

Current GPUs aren’t optimized for 1-bit operations; they’re designed for FP16 and FP32. Ironically, this means CPUs can outperform GPUs for BitNet inference. You still need GPUs for training, for real-time inference at massive scale, and for workloads with cutting-edge accuracy requirements. BitNet trades a slight accuracy loss for massive cost and energy savings.

Market Disruption: What This Means for AI Infrastructure

GPU vendors built their AI dominance on the assumption that frontier models require expensive accelerators. BitNet challenges that assumption directly. Cloud providers structured their economics around GPU rentals. CPU-native AI threatens that model. Meanwhile, CPU vendors like Intel, AMD, and ARM have an opportunity to position CPUs as AI-capable, not just general-purpose.

The timing aligns with broader industry trends. The OpenCode vs Claude Code battle demonstrates developer preference for open-source, customizable tools. The AI efficiency movement is scrutinizing energy costs and sustainability. The democratization trend aims to reduce barriers to AI adoption. Similarly, edge AI deployment is accelerating as organizations move processing closer to data sources.

BitNet fits perfectly into this context. It’s open-source. It reduces energy consumption by 55-82%. It eliminates the GPU cost barrier. It enables edge deployment. Ultimately, the market is shifting from cloud-first, GPU-required architectures to edge-first, CPU-native patterns.

Getting Started with BitNet.cpp

Microsoft released BitNet b1.58 2B4T, a 2.4-billion parameter model trained on 4 trillion tokens, on the Hugging Face Hub. The framework supports models from 0.7B to 100B parameters, including Falcon3, Llama3, and other popular architectures. Installation requires Python 3.9+, CMake 3.22+, and Clang 18+; the GitHub repository has detailed instructions.
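Before following the repository’s instructions, it can help to confirm that the toolchain meets those minimums. The script below is a small, unofficial pre-flight check, not part of BitNet.cpp. It assumes that `cmake` and `clang` are on the `PATH` under those names and that `--version` prints a `major.minor` number, which holds for common builds but may differ on some systems. All function names here are hypothetical.

```python
import re
import shutil
import subprocess
import sys

# Minimum versions as stated in the article.
MIN_VERSIONS = {"python": (3, 9), "cmake": (3, 22), "clang": (18, 0)}


def parse_version(text):
    """Return the first 'major.minor' found in text as a tuple, or None."""
    match = re.search(r"(\d+)\.(\d+)", text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def tool_version(name):
    """Run '<name> --version' and parse the result; None if unavailable."""
    path = shutil.which(name)
    if path is None:
        return None
    try:
        result = subprocess.run(
            [path, "--version"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.SubprocessError):
        return None
    return parse_version(result.stdout + result.stderr)


def check_prerequisites():
    """Map each prerequisite name to True if it meets the minimum version."""
    found = {
        "python": tuple(sys.version_info[:2]),
        "cmake": tool_version("cmake"),
        "clang": tool_version("clang"),
    }
    return {
        name: version is not None and version >= MIN_VERSIONS[name]
        for name, version in found.items()
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        required = ".".join(str(part) for part in MIN_VERSIONS[name])
        print(f"{name:>6} >= {required}: {'ok' if ok else 'missing or too old'}")
```

If any line reports a problem, install or upgrade that tool first; the actual build and model download steps are documented in the repository.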

The framework is production-ready, not a research prototype. Microsoft Research published peer-reviewed papers. The benchmarks are reproducible. The code is open-source. Developers can start deploying CPU-native AI today.

January 7, 2026 won’t be remembered as the day someone published another AI efficiency paper. It’ll be remembered as the day running 100-billion parameter models on consumer CPUs became normal. That’s the kind of shift that changes what’s possible.