Repository intelligence represents the next evolution in AI-powered development: moving beyond line-by-line autocomplete to systems that understand an entire codebase, including its history, relationships, and architectural patterns. According to GitHub’s chief product officer Mario Rodriguez, as codebases grow ever more complex (GitHub now sees 986 million commits a year), developers need tools that grasp context, not just syntax. The technology analyzes patterns in code repositories to work out what changed, why, and how the pieces fit together, delivering what Rodriguez calls “a competitive advantage by providing the structure and context for smarter, more reliable AI.”

The Complexity Crisis

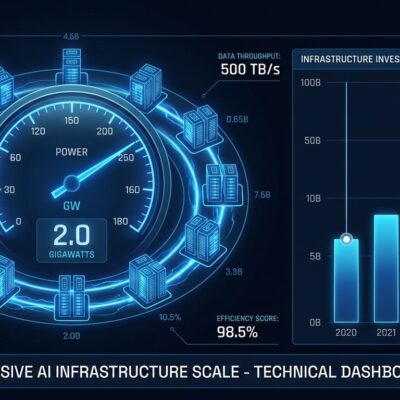

GitHub now processes 986 million commits annually, with developers creating 230+ repositories every minute. Modern codebases fragment into microservices, distributed systems, and polyglot stacks that traditional AI tools can’t effectively navigate.

The result? Developers spend 25–40% of their time wrestling with technical debt, and 79% of tech leaders cite it as a major obstacle. When Siemens applied AI-powered dependency analysis to one of its systems, it uncovered 15,000 hidden dependencies, connections no human could reasonably track. Complexity at that scale calls for a fundamentally different kind of AI.

How Repository Intelligence Works

Repository intelligence platforms use graph databases to map code relationships as first-class data structures. Instead of treating codebases as linear text, they model interconnected networks—tracking how classes, methods, modules, and services depend on each other.
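As a minimal sketch of the idea, assuming a toy repository held in memory as a dict mapping module names to source text (the `REPO` contents and the `build_import_graph` helper are hypothetical), the Python below uses the standard-library `ast` module to turn import statements into graph edges. Real platforms extract far richer relationships (calls, inheritance, service boundaries) and persist them in a graph database rather than a dict.

```python
import ast

# Hypothetical toy repository: module name -> source text.
REPO = {
    "billing": "import orders\nfrom users import lookup\n",
    "orders": "import inventory\nimport json\n",
    "inventory": "",
    "users": "",
}


def build_import_graph(repo: dict[str, str]) -> dict[str, set[str]]:
    """Map each module to the in-repo modules it imports (edge: importer -> imported)."""
    graph: dict[str, set[str]] = {name: set() for name in repo}
    for name, source in repo.items():
        for node in ast.walk(ast.parse(source)):
            if isinstance(node, ast.Import):
                targets = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module:
                targets = [node.module]  # relative "from . import x" has module None
            else:
                continue
            for target in targets:
                top_level = target.split(".")[0]
                # Keep only edges to modules inside this repository,
                # skipping stdlib and third-party imports such as json.
                if top_level in repo:
                    graph[name].add(top_level)
    return graph


graph = build_import_graph(REPO)
for module, deps in sorted(graph.items()):
    print(module, "->", sorted(deps))
```

Storing the result as an adjacency map, rather than re-reading files on every question, is what lets later queries treat relationships as first-class data.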

These systems analyze commit history to reconstruct not just what the code does but how it evolved and, where commit messages record it, why. Platforms like FalkorDB’s Code Graphs and Neo4j-based systems can answer questions such as “What services write to this table and under what conditions?” that would otherwise take hours of manual investigation.
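To illustrate the shape of such a query, here is a small in-memory stand-in for a property graph, written in Python. The `Edge` type, relation names such as `WRITES_TO`, the service and table names, and the `writers_of` helper are all hypothetical. This is not the FalkorDB or Neo4j API; both of those systems would express the same question in a graph query language such as Cypher.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    source: str          # e.g. a service
    relation: str        # e.g. "WRITES_TO", "READS_FROM", "CALLS"
    target: str          # e.g. a table or another service
    condition: str = ""  # optional annotation, e.g. a guard clause found in code


# Hypothetical edges that a code-graph indexer might have extracted.
EDGES = [
    Edge("checkout-service", "WRITES_TO", "orders", "on successful payment"),
    Edge("refund-service", "WRITES_TO", "orders", "when a refund is approved"),
    Edge("reporting-service", "READS_FROM", "orders"),
    Edge("checkout-service", "CALLS", "payment-service"),
]


def writers_of(table: str, edges: list[Edge]) -> list[tuple[str, str]]:
    """Answer: which services write to `table`, and under what conditions?"""
    return [
        (edge.source, edge.condition)
        for edge in edges
        if edge.relation == "WRITES_TO" and edge.target == table
    ]


for service, condition in writers_of("orders", EDGES):
    print(f"{service}: {condition}")
```

Because relationships carry a type and attributes, the question becomes a filter over edges instead of a grep across every service.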

The impact is measurable. AI-powered dependency analysis has been reported to reduce code analysis time by 70% and cut errors by 50–80%, and Siemens’ case study showed a 45% reduction in regression testing time after those hidden dependencies were uncovered.

What Changes for Developers

New developers can understand complex codebases in days instead of months. When refactoring, the system identifies ripple effects before you make changes, preventing the “I broke three services I didn’t know existed” disaster.
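Ripple-effect detection boils down to walking the dependency graph backwards from the code you plan to change. A minimal sketch, assuming a hypothetical `DEPENDS_ON` map and a hypothetical `impacted_by` helper, uses breadth-first search over reversed edges to collect everything that directly or transitively depends on a module.

```python
from collections import deque

# Hypothetical dependency graph: each key depends on the modules in its set.
DEPENDS_ON: dict[str, set[str]] = {
    "api-gateway": {"auth", "orders"},
    "orders": {"inventory", "db-client"},
    "inventory": {"db-client"},
    "auth": {"db-client"},
    "reports": {"orders"},
    "db-client": set(),
}


def impacted_by(changed: str, depends_on: dict[str, set[str]]) -> set[str]:
    """Return every module that directly or transitively depends on `changed`."""
    # Reverse the edges so we can walk from a dependency to its dependents.
    dependents: dict[str, set[str]] = {module: set() for module in depends_on}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    # Breadth-first search; `seen` prevents infinite loops on circular dependencies.
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, set()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


print(sorted(impacted_by("inventory", DEPENDS_ON)))  # ['api-gateway', 'orders', 'reports']
```

The traversal is linear in the size of the graph, so it stays cheap even when the graph holds thousands of dependencies like those Siemens uncovered.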

GitHub Copilot users report 15% faster code reviews with context-aware analysis. The tool reduced average time to open a pull request from 9.6 days to 2.4 days, and developers keep 88% of AI-generated code in final submissions.

Technical debt becomes visible instead of buried. Tools like CodeScene automatically flag deteriorating code patterns. In one legacy-modernization case study, 70% of the new code was AI-generated, and overall technical debt fell by 50%.
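One widely discussed way to surface deteriorating code is a “hotspot” ranking that combines how often a file changes with how complex it is. The sketch below assumes hypothetical per-file commit counts and complexity scores and a simple normalized product. It illustrates the heuristic and is not CodeScene’s actual scoring model.

```python
# Hypothetical per-file metrics: commits touching the file in some time window,
# plus a complexity score (e.g. cyclomatic complexity).
METRICS: dict[str, dict[str, int]] = {
    "billing/invoice.py": {"commits": 48, "complexity": 35},
    "billing/tax.py": {"commits": 5, "complexity": 40},
    "orders/cart.py": {"commits": 30, "complexity": 8},
    "utils/strings.py": {"commits": 2, "complexity": 3},
}


def rank_hotspots(
    metrics: dict[str, dict[str, int]], top_n: int = 3
) -> list[tuple[str, float]]:
    """Rank files by normalized change frequency times normalized complexity."""
    # `or 1` guards against division by zero when every value is 0.
    max_commits = max(m["commits"] for m in metrics.values()) or 1
    max_complexity = max(m["complexity"] for m in metrics.values()) or 1
    scores = {
        path: (m["commits"] / max_commits) * (m["complexity"] / max_complexity)
        for path, m in metrics.items()
    }
    # Highest score first: files that change often AND are hard to understand.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_n]


for path, score in rank_hotspots(METRICS):
    print(f"{path}: {score:.3f}")
```

A complex but rarely touched file (`billing/tax.py` here) ranks below a simpler one that changes often, reflecting the idea that debt costs most where developers actually work.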

The Tools Doing This Now

This isn’t vaporware. Claude Code offers a 1-million-token context window that can ingest entire projects, acting as “the architect,” a thinking partner for complex problems. GitHub Copilot, cast as “the accelerator” for speed and efficiency, has evolved from simple completion to repository-level indexing.

Emerging players like Qodo bring specialized Codebase Intelligence Engines with 15+ automated PR workflows, while Sourcegraph Cody’s “Amp” agentic layer turns repository context into an agent that can plan and execute changes. These aren’t just bigger context windows; they’re fundamentally different architectures that treat repositories as searchable knowledge graphs.

Over 90 Fortune 100 companies now use GitHub, and AI coding assistants have become standard in major IDEs. The market signal is clear: this technology is moving from early adoption to baseline expectation.

The Real Implications

Here’s what most coverage misses: repository intelligence exposes limitations as well as opportunities. These tools excel at pointing out messy code but struggle to understand why that code evolved into its current state. The human context (business decisions, tight deadlines, technical constraints) remains largely invisible to AI.

This matters because the technology augments human judgment; it doesn’t replace it. As one engineering leader put it, “AI tells us where the problems are. Fixing them still requires understanding why they became problems.”

The shift from “AI hype to pragmatism” in 2026 means recognizing repository intelligence as a necessary tool for managing complexity, not a silver bullet. By 2027, repository-aware AI will likely be baseline functionality. The question isn’t whether to adopt it but how to use it without losing sight of the architectural thinking that no AI can fully replicate.

Bottom Line

Repository intelligence represents the maturation of AI-assisted development. Simple autocomplete on its own is no longer enough for serious work. But the most successful teams will use repository intelligence to augment their architectural understanding, not replace it. The codebases are too complex for humans alone. They’re also too contextual for AI alone. The future belongs to developers who can draw on both.