LinearB’s 2026 Software Engineering Benchmarks Report, which analyzed 8.1 million pull requests across 4,800 teams, surfaces a counterintuitive problem: AI-generated PRs wait 4.6x longer in review queues but are reviewed twice as fast once picked up. Engineering organizations spent 2025 adopting AI coding tools to accelerate development, only to discover they had created a verification crisis. Review throughput, not coding speed, now determines delivery velocity.

The data exposes what vendor promises missed. Teams generate 25-35% more code with AI, yet PR review times increased 91%. LinearB’s dataset of 8.1 million PRs from 42 countries provides the first industry-wide evidence of AI’s real impact beyond the hype.

The 4.6x Wait Paradox

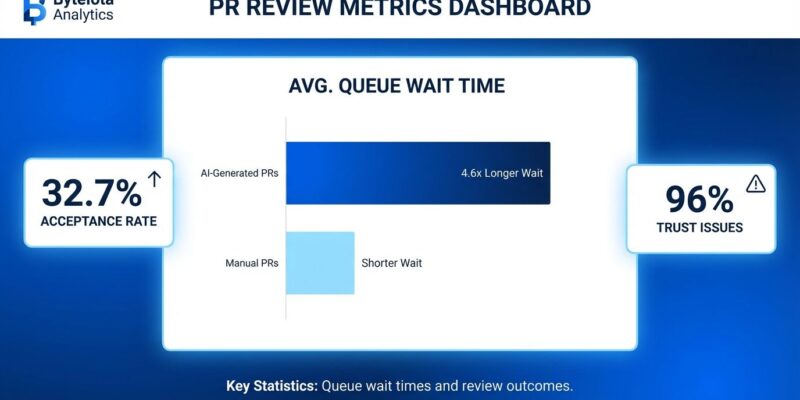

AI-generated pull requests sit in review queues 4.6x longer than manual PRs before reviewers pick them up. Once review begins, however, AI PRs are processed twice as fast. This creates a bottleneck that looks productive on individual metrics but slows teams down.
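Whether faster reviews offset the longer wait depends on how a team’s baseline time splits between waiting and active review. The sketch below is a hypothetical model, not LinearB’s methodology: it assumes a manual PR waits `wait_h` hours for pickup and then spends `review_h` hours in review, applies the report’s 4.6x wait and 2x review multipliers, and solves for the break-even point.

```python
def ai_pr_total_hours(wait_h: float, review_h: float,
                      wait_multiplier: float = 4.6,
                      review_speedup: float = 2.0) -> float:
    """End-to-end hours for an AI PR under the assumed multipliers."""
    return wait_h * wait_multiplier + review_h / review_speedup


def break_even_review_to_wait_ratio(wait_multiplier: float = 4.6,
                                    review_speedup: float = 2.0) -> float:
    """Review/wait ratio above which AI PRs finish sooner end to end.

    Solving wait*m + review/s < wait + review for review/wait gives
    review/wait > (m - 1) / (1 - 1/s).
    """
    return (wait_multiplier - 1) / (1 - 1 / review_speedup)


# Hypothetical baseline: a manual PR waits 10 h and is reviewed in 4 h.
manual = 10 + 4
ai = ai_pr_total_hours(10, 4)  # 46 h waiting + 2 h reviewing
print(manual, round(ai, 1))
print(round(break_even_review_to_wait_ratio(), 2))
```

Under these assumptions, AI PRs come out ahead only when active review time exceeds roughly 7.2 times the pickup wait, a condition that queue-dominated workflows are unlikely to meet.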

The numbers reveal the gap between promise and reality. AI PRs have a 32.7% acceptance rate compared to 84.4% for manual code. They also average 10.83 issues per PR versus 6.45 for manual submissions. Despite faster individual reviews, the queue delay erases any velocity gains.
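One way to see why faster individual reviews don’t translate into velocity is to normalize by outcomes. The sketch below assumes, for illustration only, that every opened PR consumes one review pass and that the reported average issue counts apply to every reviewed PR. Dividing by the acceptance rate then gives review passes and flagged issues per *accepted* PR. These are back-of-envelope ratios derived from the report’s averages, not figures the report publishes.

```python
from dataclasses import dataclass


@dataclass
class PrProfile:
    acceptance_rate: float  # fraction of opened PRs that are accepted
    issues_per_pr: float    # average issues flagged per reviewed PR

    def reviews_per_accepted(self) -> float:
        # Opened PRs needed per accepted PR (assumes one review pass each).
        return 1 / self.acceptance_rate

    def issues_per_accepted(self) -> float:
        # Issues reviewers work through for each PR that actually lands.
        return self.issues_per_pr / self.acceptance_rate


ai = PrProfile(acceptance_rate=0.327, issues_per_pr=10.83)
manual = PrProfile(acceptance_rate=0.844, issues_per_pr=6.45)

print(f"reviews per accepted PR: {ai.reviews_per_accepted():.2f} "
      f"vs {manual.reviews_per_accepted():.2f}")
print(f"issues per accepted PR: {ai.issues_per_accepted():.1f} "
      f"vs {manual.issues_per_accepted():.1f}")
```

Under those assumptions, each accepted AI PR costs about three review passes and more than four times as many flagged issues as an accepted manual PR.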

This shift matters because it exposes the real cost of AI adoption. Organizations optimized for writing code faster but didn’t prepare for the downstream verification load. Consequently, the constraint moved from “how fast can we write code?” to “how fast can we safely validate it?” Review queue management—not individual productivity—now determines team velocity.

Why Reviewers Deprioritize AI Code

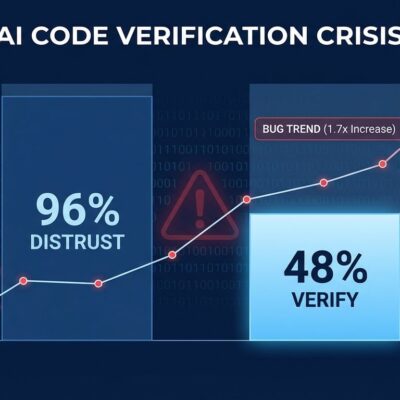

The 4.6x queue delay stems from a documented trust crisis. Sonar’s 2026 survey found that 96% of developers don’t fully trust the accuracy of AI-generated code, yet fewer than half verify it before committing. Developer trust in AI accuracy dropped from 43% in 2024 to just 33% in 2025, according to Stack Overflow data. The deprioritization is rational: reviewers have learned that AI code needs extra scrutiny.

The “almost right” problem drives reviewer behavior. Sixty-six percent of developers describe AI code the same way: almost right, but not quite. For refactoring tasks, 65% report that AI misses relevant context, and 45% say debugging AI code takes longer than fixing human-written code. When 38% of reviewers report that AI code requires more effort to review than human code, deprioritizing it becomes a defensive strategy.

This isn’t a tooling problem; it’s a quality signal. With 10.83 issues per AI PR versus 6.45 for manual code, reviewers approach AI PRs with well-earned skepticism. The 4.6x wait time therefore reflects reviewers making rational decisions about where to invest their limited capacity.
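The prioritization logic can be made concrete with a toy model. Assume, hypothetically, a single reviewer facing a queue of PRs who always picks the one with the lowest *expected* effort, where expected effort is proxied by the report’s average issue counts at an assumed 10 minutes per issue. This is shortest-job-first scheduling, a textbook policy rather than anything LinearB measured; it only shows how a locally sensible rule pushes AI PRs to the back of the queue.

```python
import heapq
from statistics import mean


def simulate_sjf(prs: list[tuple[str, float]]) -> dict[str, float]:
    """Serve PRs shortest-expected-effort first; return mean wait by kind.

    prs: (kind, expected_effort_minutes) pairs, all queued at time 0.
    Hypothetical model: one reviewer, no new arrivals, no preemption.
    """
    # The index breaks ties so heapq never has to compare kinds.
    heap = [(effort, i, kind) for i, (kind, effort) in enumerate(prs)]
    heapq.heapify(heap)
    clock = 0.0
    waits: dict[str, list[float]] = {}
    while heap:
        effort, _, kind = heapq.heappop(heap)
        waits.setdefault(kind, []).append(clock)  # time spent waiting
        clock += effort
    return {kind: mean(w) for kind, w in waits.items()}


MINUTES_PER_ISSUE = 10  # assumed conversion, not from the report
queue = [("ai", 10.83 * MINUTES_PER_ISSUE),
         ("manual", 6.45 * MINUTES_PER_ISSUE)] * 3
print(simulate_sjf(queue))
```

In this six-PR example every manual PR is served before any AI PR, so the AI PRs’ mean wait is several times longer even though no reviewer is behaving unreasonably.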

Volume Overload Without Capacity Scaling

AI adoption drove massive growth in PR volume in 2025. Monthly pushes hit 82 million, merged PRs reached 43 million, and 41% of commits are now AI-assisted. Eighty-four percent of developers adopted AI coding tools. Internal enterprise data also shows 25-35% growth in code developed per engineer. Review capacity, meanwhile, remained flat.

The result: a projected 40% quality deficit in 2026, with more code entering the pipeline than reviewers can validate with confidence. This accumulating verification debt undermines AI productivity gains. The math is brutal: developers complete 21% more tasks with AI and merge 98% more PRs, but PR review time increases 91%. The promised 20-40% productivity gains disappear in the review queue.
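Why would a 25-35% increase in code produce a 91% jump in review time? Queueing theory offers one plausible, simplified explanation: waiting time grows non-linearly as a review team’s utilization approaches 100%. The sketch below uses the textbook M/M/1 formula for mean time spent waiting in the queue, W_q = λ / (μ(μ − λ)), and treats the whole review team as a single server with random arrivals. Both the model and the rates are assumptions for illustration, not data from the report.

```python
def mm1_queue_wait(arrival_rate: float, service_rate: float) -> float:
    """Mean time a PR waits before review starts in an M/M/1 queue.

    arrival_rate (lambda) and service_rate (mu) share a time unit,
    e.g. PRs per day. If arrivals meet or exceed capacity, the queue
    grows without bound, so the wait is reported as infinite.
    """
    if arrival_rate >= service_rate:
        return float("inf")
    utilization = arrival_rate / service_rate
    return utilization / (service_rate - arrival_rate)


CAPACITY = 10.0                              # hypothetical: 10 PRs/day reviewed
baseline = mm1_queue_wait(7.0, CAPACITY)     # 7 PRs/day arriving
grown = mm1_queue_wait(7.0 * 1.3, CAPACITY)  # 30% more PRs, same capacity
print(f"{baseline:.2f} days -> {grown:.2f} days ({grown / baseline:.1f}x)")
```

In this model, a 30% rise in arrivals against flat capacity more than quadruples the mean queue wait, which is why modest output growth can translate into outsized review delays.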

This exposes the fundamental miscalculation in AI adoption strategies. Even if teams generate code twice as fast, a review bottleneck caps what the whole system can deliver, and the excess piles up in the queue. Most teams optimized for writing, but the binding constraint had shifted to validation.
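The bottleneck argument follows the standard rule for serial pipelines: sustainable throughput equals the capacity of the slowest stage. A minimal sketch with hypothetical weekly capacities (the stage names and numbers are illustrative assumptions):

```python
def pipeline_throughput(stage_capacities: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck stage and the pipeline's sustainable throughput.

    In a serial pipeline, finished work can leave no faster than the
    slowest stage processes it. Capacities are in PRs per week.
    """
    stage = min(stage_capacities, key=stage_capacities.get)
    return stage, stage_capacities[stage]


before = {"coding": 40.0, "review": 50.0, "deploy": 80.0}
after = {"coding": 80.0, "review": 50.0, "deploy": 80.0}  # AI doubles coding

for label, stages in (("before", before), ("after", after)):
    bottleneck, rate = pipeline_throughput(stages)
    backlog_growth = stages["coding"] - rate  # PRs/week left waiting upstream
    print(label, bottleneck, rate, backlog_growth)
```

Doubling coding capacity from 40 to 80 PRs per week lifts delivered throughput only from 40 to 50 in this example; the other 30 PRs each week accumulate in the review queue, which is where lead time grows.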

What Engineering Leaders Should Do

LinearB’s benchmarks provide the first industry-wide data to guide AI ROI decisions. Engineering leaders need to shift focus from optimizing individual developer speed to optimizing team review throughput. This means addressing trust through automated quality gates for AI code, scaling review capacity to match output, and implementing process changes rather than just buying more tools.
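As a sketch of what an automated quality gate could look like, the check below runs before a PR reaches a human reviewer and returns the reasons to block it. The `PullRequest` fields are hypothetical stand-ins for whatever your CI and source-control system actually expose, and the thresholds are illustrative: the 400-line default borrows the upper end of the 200-400 line guidance high-performing teams aim for, and the stricter test requirement for AI-assisted changes is an assumed policy, not a LinearB recommendation.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    # Hypothetical fields; map them to your CI/SCM data.
    ai_assisted: bool
    lines_changed: int
    tests_passed: bool
    new_static_issues: int
    touches_tests: bool


def quality_gate(pr: PullRequest, max_lines: int = 400,
                 max_new_issues: int = 0) -> list[str]:
    """Return reasons to block the PR; an empty list means it passes."""
    reasons = []
    if not pr.tests_passed:
        reasons.append("test suite failing")
    if pr.new_static_issues > max_new_issues:
        reasons.append(f"{pr.new_static_issues} new static-analysis issues")
    if pr.lines_changed > max_lines:
        reasons.append(f"{pr.lines_changed} lines exceeds {max_lines}-line limit")
    # Assumed stricter rule for AI-assisted changes: require test updates.
    if pr.ai_assisted and not pr.touches_tests:
        reasons.append("AI-assisted change without test updates")
    return reasons


risky = PullRequest(ai_assisted=True, lines_changed=520, tests_passed=True,
                    new_static_issues=2, touches_tests=False)
print(quality_gate(risky))
```

Filtering out PRs that would fail on mechanical grounds spends machine time instead of reviewer time, which matters most when the review queue is the constraint.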

High-performing teams show what works. Elite teams keep PRs under 194 code changes, compared with more than 661 for struggling teams. They set review SLAs: initial feedback within 24 hours. They also use merge queues and bots for automation. They aim for 200-400 lines per PR and keep review time low, since it is the strongest driver of cycle time.
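A 24-hour initial-feedback SLA is straightforward to monitor once PR timestamps are exported from your Git host. The sketch below assumes each PR record carries `id`, `opened_at`, and an optional `first_review_at` (`None` while still waiting); these field names are hypothetical.

```python
from datetime import datetime, timedelta

REVIEW_SLA = timedelta(hours=24)  # initial-feedback target


def sla_breaches(prs: list[dict], now: datetime) -> list[int]:
    """Return IDs of PRs whose first review missed (or is missing) the SLA."""
    breached = []
    for pr in prs:
        # Unreviewed PRs are measured from opening until now.
        first = pr.get("first_review_at") or now
        if first - pr["opened_at"] > REVIEW_SLA:
            breached.append(pr["id"])
    return breached


now = datetime(2026, 1, 15, 12, 0)
prs = [
    {"id": 101, "opened_at": datetime(2026, 1, 14, 9, 0),
     "first_review_at": datetime(2026, 1, 14, 15, 0)},   # 6 h: within SLA
    {"id": 102, "opened_at": datetime(2026, 1, 13, 10, 0),
     "first_review_at": datetime(2026, 1, 14, 16, 0)},   # 30 h: breach
    {"id": 103, "opened_at": datetime(2026, 1, 14, 8, 0),
     "first_review_at": None},                           # 28 h and counting
]
print(sla_breaches(prs, now))
```

Counting still-open PRs against the clock matters: otherwise the PRs stuck longest in the queue, which the report suggests are disproportionately AI-generated, never show up as breaches.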

Organizations spent 2025 adopting AI tools without clear ROI metrics. For many teams, the ROI picture is uncomfortable: AI increased code output but decreased delivery velocity through review bottlenecks. Fixing this requires process optimization, not additional AI tools. Use LinearB’s benchmarks to compare your team against the 4,800-team dataset and answer the question “Are we normal?”
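A first pass at “Are we normal?” can reuse the two PR-size figures cited in this section: elite teams under 194 changes per PR and struggling teams above 661. LinearB computes its benchmarks with its own metric definitions, so this is only a rough self-check; using the median and the tier labels below are assumptions.

```python
from statistics import median

ELITE_MAX = 194       # elite teams: under 194 changes per PR
STRUGGLING_MIN = 661  # struggling teams: more than 661 changes per PR


def pr_size_tier(changes_per_pr: list[int]) -> tuple[float, str]:
    """Classify a team's median PR size against the two cited thresholds."""
    size = median(changes_per_pr)
    if size < ELITE_MAX:
        tier = "elite range"
    elif size > STRUGGLING_MIN:
        tier = "struggling range"
    else:
        tier = "between elite and struggling"
    return size, tier


# Hypothetical sample of recent PR sizes (lines changed).
print(pr_size_tier([120, 340, 95, 610, 260]))  # → (260, 'between elite and struggling')
```

The median is used instead of the mean so that a few very large PRs do not dominate the result; tracking it week over week shows whether AI adoption is pushing PR size toward the struggling range.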

The Broader Shift

The 2026 benchmarks reveal a fundamental change in software engineering constraints. For decades, the bottleneck was writing code. AI largely removed that bottleneck and exposed a new one: safely verifying code. The shift extends beyond engineering. It affects developer career trajectories, where validation skills now matter more than generation skills; organizational structure, where review capacity must scale with AI output; and tool priorities, which now favor verification automation over generation automation.

This isn’t a temporary adjustment. AI didn’t simply make developers faster; it changed what they do. Senior engineers report spending more time validating AI logic than shaping system design, and community discussions reflect career anxiety: “Are we just validating AI now?” The process gap is clear: organizations adopted tools without updating their review processes.

The winners in 2026 will be organizations that recognize this shift and optimize for the new constraint. Review throughput matters more than coding speed. LinearB’s benchmarks provide the measurement framework to track this transition. The data from 8.1 million PRs across 4,800 teams isn’t comfortable, but it’s real. Engineering leaders now have the numbers to move from AI hype to AI reality.