TanStack AI launched in alpha this January as the team behind TanStack Query and Router challenges Vercel AI SDK’s dominance. Their pitch is “the Switzerland of AI tooling”: pure open-source infrastructure with zero vendor lock-in, no service fees, and runtime provider switching between OpenAI, Anthropic, Gemini, and Ollama. The timing isn’t coincidental. Anthropic just donated Model Context Protocol (MCP) to the Linux Foundation’s Agentic AI Foundation, signaling industry-wide movement toward open, standardized AI development tools. With Gartner predicting that 40% of enterprise apps will embed AI agents by the end of 2026 (up from under 5% in 2025), the battle for AI development standards is heating up.

The Vendor Lock-in Problem TanStack AI Solves

TanStack AI operates as pure open-source infrastructure: no service layer, no middleman fees, and direct connections to your chosen AI provider. This contrasts sharply with Vercel AI SDK’s growing platform coupling, where Next.js optimizations tie developers ever more closely to Vercel’s infrastructure. The architecture is simple: pluggable adapters (openaiText, anthropicText, geminiText, ollamaText) implement a unified interface, so the provider can be chosen at runtime without touching application logic.

```typescript
import { chat } from "@tanstack/ai";
import { openaiText } from "@tanstack/ai-openai";
import { anthropicText } from "@tanstack/ai-anthropic";

// Switch providers via environment variable.
// Any value other than "openai" falls through to the Anthropic adapter.
const adapter = process.env.AI_PROVIDER === "openai"
  ? openaiText("gpt-4o")
  : anthropicText("claude-sonnet-4-5");

// Message list elided, as in the original snippet.
const stream = chat({ adapter, messages: [/* ... */] });
```

With 2.1k GitHub stars and 110k npm downloads despite alpha status, developers are betting on vendor neutrality. The value proposition is clear: A/B test models, cut costs by sending simple queries to cheaper models, and switch providers when pricing changes, all without rewriting application logic.
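To make the cost-routing idea concrete, here is a minimal sketch of a router that sends short prompts to one adapter and longer ones to another. Everything in it is an assumption for illustration: the `TextAdapter` interface, `fakeAdapter`, `classify`, and `createRouter` are hypothetical names, not TanStack AI's API, and the word-count heuristic and the pairing of models to tiers are arbitrary.

```typescript
// Hypothetical sketch: these types are NOT TanStack AI's real interfaces.
interface TextAdapter {
  provider: string;
  model: string;
  complete(prompt: string): Promise<string>;
}

// Stand-in adapter that echoes the prompt instead of calling a real API.
function fakeAdapter(provider: string, model: string): TextAdapter {
  return {
    provider,
    model,
    complete: async (prompt) => `[${provider}/${model}] ${prompt}`,
  };
}

type Tier = "simple" | "complex";

// Assumed heuristic: prompts of 20 words or fewer count as "simple".
function classify(prompt: string): Tier {
  const words = prompt.trim().split(/\s+/).length;
  return words <= 20 ? "simple" : "complex";
}

// The router only knows the shared interface, so swapping a provider
// means changing the routing table, not the calling code.
function createRouter(routes: Record<Tier, TextAdapter>) {
  return {
    pick: (prompt: string): TextAdapter => routes[classify(prompt)],
    complete: (prompt: string): Promise<string> =>
      routes[classify(prompt)].complete(prompt),
  };
}

// Which model belongs in which tier is an arbitrary choice here.
const router = createRouter({
  simple: fakeAdapter("openai", "gpt-4o"),
  complex: fakeAdapter("anthropic", "claude-sonnet-4-5"),
});
```

In a real application the stand-in adapters would be replaced by the SDK's own adapters, and the heuristic by whatever signal (token count, task type, user tier) actually tracks cost.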

Type Safety That Catches Bugs Before Production

TanStack AI provides per-model type safety where TypeScript autocompletes GPT-4 model names, validates Claude-specific options, and surfaces compile-time errors when mixing incompatible features. TypeScript expert Matt Pocock analyzed the SDK: “If they can nail the type safe streaming DX better than the AI SDK (I do think there’s headroom here) it’ll be hard to top.”

The team behind TanStack Query and Router built their reputation on obsessive type safety—their libraries are often described as “how React data fetching should have worked from day one.” That same philosophy applies here. When you type openaiText("gpt-"), your IDE autocompletes valid models. Pass Claude-specific options to an OpenAI adapter? TypeScript catches it at compile time, not when your production app breaks.
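The general TypeScript technique behind this kind of per-model checking can be sketched with a lookup type that maps each model name to its own options type. The option names below (`thinkingBudget`, `temperature`) and the `ModelOptions`, `ChatRequest`, and `buildRequest` names are hypothetical; the SDK's actual types are likely more elaborate.

```typescript
// Hypothetical option shapes; real provider options will differ.
interface OpenAIOptions {
  temperature?: number;
}

interface AnthropicOptions {
  temperature?: number;
  thinkingBudget?: number;
}

// Each model name maps to the options type that is valid for it.
interface ModelOptions {
  "gpt-4o": OpenAIOptions;
  "claude-sonnet-4-5": AnthropicOptions;
}

type ModelName = keyof ModelOptions;

interface ChatRequest<M extends ModelName> {
  model: M;
  options: ModelOptions[M];
}

// M is inferred from the first argument, which fixes the type of the second.
function buildRequest<M extends ModelName>(
  model: M,
  options: ModelOptions[M],
): ChatRequest<M> {
  return { model, options };
}

// Accepted: thinkingBudget exists on the Anthropic options type.
const accepted = buildRequest("claude-sonnet-4-5", { thinkingBudget: 1024 });

// Rejected by the compiler: OpenAIOptions has no thinkingBudget property.
// @ts-expect-error excess property for the "gpt-4o" options type
const rejected = buildRequest("gpt-4o", { thinkingBudget: 1024 });
```

Because `ModelName` is a union of string literals, the editor can also offer the valid model names as completions for the first argument.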

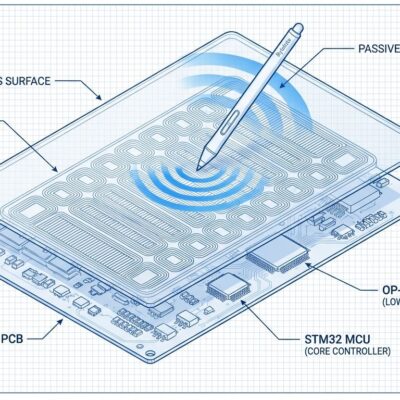

Isomorphic Tools: Define Once, Execute Anywhere

TanStack AI’s isomorphic tool system lets you define tool schemas once with toolDefinition(), then implement with .server() for backend execution or .client() for browser-side operations. Matt Pocock called this “a nice upgrade on Vercel AI SDK’s tool system” because you get type-safe tools across environments, not just schemas.

```typescript
import { toolDefinition } from "@tanstack/ai";

// Arguments are elided here, as in the original snippet.
const databaseTool = toolDefinition()
  .server() // Secure backend execution
  .client(); // Or client-side for UI updates

// Same schema, different contexts, full type safety
```

Built-in tool approval workflows mean sensitive operations (database writes, API calls with side effects) can require user confirmation before execution. This positions TanStack AI as more than a chat SDK; it’s infrastructure for building AI agents that interact with real systems.
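An approval gate of this kind can be sketched in a few lines. This is not TanStack AI's implementation: `Tool`, `Approver`, `executeTool`, and the in-memory `users` store are hypothetical names chosen for illustration, and a real workflow would ask the user asynchronously rather than through a synchronous callback.

```typescript
// Hypothetical tool shape; the SDK's real tool type is richer than this.
interface Tool<I, O> {
  toolName: string;
  needsApproval: boolean;
  run(input: I): O;
}

// Stand-in for a UI prompt: returns true if the user confirms the call.
type Approver = (toolName: string, input: unknown) => boolean;

type ToolResult<O> = { status: "done"; output: O } | { status: "denied" };

// Runs the tool only if it needs no approval or the approver agrees.
function executeTool<I, O>(
  tool: Tool<I, O>,
  input: I,
  approve: Approver,
): ToolResult<O> {
  if (tool.needsApproval && !approve(tool.toolName, input)) {
    return { status: "denied" };
  }
  return { status: "done", output: tool.run(input) };
}

// In-memory stand-in for a database table.
const users = new Set<string>(["ada", "grace"]);

// A destructive tool, so it is flagged as requiring confirmation.
const deleteUser: Tool<{ id: string }, boolean> = {
  toolName: "deleteUser",
  needsApproval: true,
  run: ({ id }) => users.delete(id),
};

// The user declines, so the store is left untouched.
const declined = executeTool(deleteUser, { id: "ada" }, () => false);

// The user confirms, so the tool runs and removes the entry.
const confirmed = executeTool(deleteUser, { id: "grace" }, () => true);
```

The key design point is that the side effect lives behind `run`, so a denied call never reaches it.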

The Alpha Stage Trade-off: Innovation vs Stability

TanStack AI is alpha software. The team acknowledges “bugs, rough edges, things that don’t work.” Matt Pocock’s verdict: “Don’t adopt just yet. It’s got a long way to go to catch up to the AI SDK’s feature set. Duh, it’s an alpha. But for the next two years? TanStack AI is the more interesting bet.”

The limitations are real: 4 core providers versus Vercel’s 30+, less granular streaming control (no custom data parts yet), and a small volunteer team, which means a slower development pace. Yet it ships with a built-in AI DevTools panel (Pocock asked “why hasn’t Vercel AI SDK done this already?”) that provides real-time visibility into streaming, tool calls, and reasoning traces.

The calculation is simple. Need production stability today? Vercel AI SDK wins. Building for one to two years out and unwilling to lock into a platform? TanStack AI is the more interesting architecture bet.

Key Takeaways

- Choose TanStack AI if: vendor neutrality is non-negotiable, you prioritize type safety, and you are building internal tools or greenfield projects with time to evolve

- Choose Vercel AI SDK if: you need production stability today, require 20+ AI providers, or face tight deadlines with no room for alpha bugs

- Industry context matters: MCP joining the Linux Foundation’s Agentic AI Foundation, with backing from Anthropic, OpenAI, Microsoft, and Google, signals that standardization is winning, and TanStack AI is positioned for this future

- The 2-year bet: with 10,000+ MCP servers launched and 60,000+ projects adopting AGENTS.md, open standards momentum is real; TanStack AI’s vendor-neutral philosophy aligns with where the industry is heading

- Try it now: alpha means risk, but the GitHub repo has examples for React, Solid, and vanilla JS, and the official blog explains the philosophy and roadmap