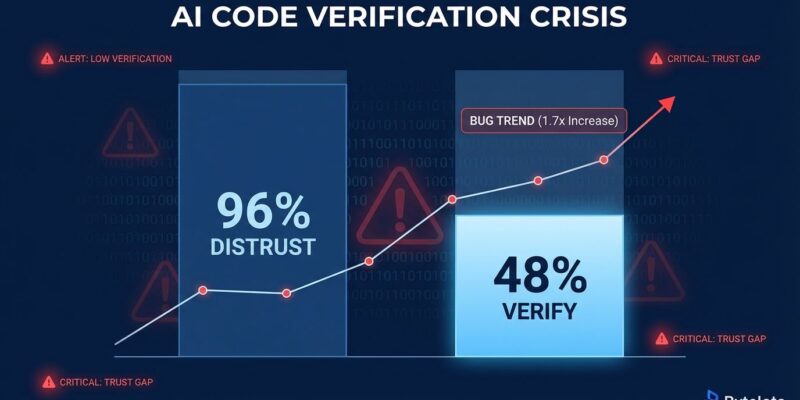

Sonar’s 2026 State of Code Developer Survey, released this week, reveals a striking paradox: 96% of developers don’t fully trust AI-generated code, yet only 48% consistently verify it before committing. With 72% of developers using AI tools daily and AI now contributing 46% of code in active projects, this trust-verification gap creates serious quality and security risks that challenge the “AI makes developers 10x faster” narrative.

The numbers tell a more complicated story than the hype suggests. AI-generated pull requests contain 1.7x more bugs than human-written pull requests, and 40-50% include security flaws. Developers complete tasks 55% faster with AI, yet delivery stability drops 7.2%. The productivity promised by code generation is being offset by a new bottleneck: verification.

The Productivity Paradox: Work Shifted, Not Eliminated

AI hasn’t eliminated developer toil—it’s shifted it from code creation to code verification and debugging. Developers still spend 24% of their time on unproductive tasks, unchanged from pre-AI levels. The difference? That time is now spent reviewing, correcting, and debugging AI output instead of writing code from scratch.

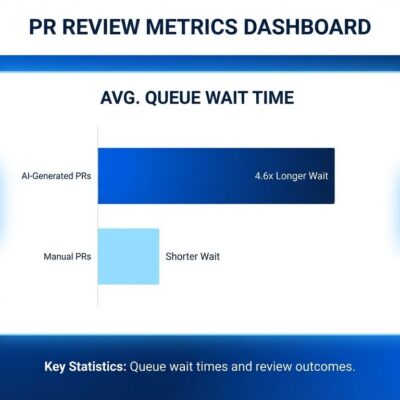

Moreover, 38% of developers report that reviewing AI code takes longer than reviewing human code. PRs are 18% larger with AI adoption, incidents per PR are up 24%, and change failure rates have increased 30%. Google’s 2024 DORA report found that a 25% increase in AI usage correlates with a 7.2% decrease in delivery stability—a clear productivity-quality trade-off.

The “AI productivity boom” is more nuanced than headlines suggest. If verification takes as long as writing would have, where’s the net gain? This explains why many teams feel busy but not more productive despite AI adoption.
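The net-gain question can be made concrete with a back-of-the-envelope model. The sketch below is purely illustrative: the function name and the minute values are hypothetical, not figures from the survey.

```python
def net_minutes_saved(write_minutes, generate_minutes, verify_minutes):
    """Minutes saved on one task by using AI; a negative result means AI was slower.

    All inputs are hypothetical estimates a team would supply for itself.
    """
    return write_minutes - (generate_minutes + verify_minutes)


# A task that would take 60 minutes to write by hand:
print(net_minutes_saved(60, 5, 30))  # 25  (verification is cheap, AI wins)
print(net_minutes_saved(60, 5, 60))  # -5  (verification costs as much as writing)
```

Once verification time approaches the original writing time, the gain disappears even though the code itself appeared in minutes.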

The Hidden Cost: 1.7x More Bugs, 40-50% Security Flaws

AI-generated code ships faster but contains significantly more defects. CodeRabbit’s analysis found AI pull requests average 10.83 issues each, compared to 6.45 for human PRs—a 1.7x quality gap. These aren’t minor issues: AI code has 1.4x more critical issues and 1.7x more major issues than human-written code.
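For readers who want to check the headline ratio, the arithmetic is a single division over the two averages quoted above:

```python
# Averages quoted from CodeRabbit's analysis in the paragraph above
ai_issues_per_pr = 10.83
human_issues_per_pr = 6.45

ratio = ai_issues_per_pr / human_issues_per_pr
print(f"{ratio:.2f}x")  # 1.68x, which rounds to the reported 1.7x
```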

Security vulnerabilities are even worse. Stanford University research found that 40% of GitHub Copilot suggestions contain security vulnerabilities. NYU researchers discovered AI code has three times more security flaws than human code in certain scenarios. The vulnerability rates by category are alarming: AI code is 2.74x more likely to introduce XSS vulnerabilities, 1.91x more likely to create insecure object references, and 1.88x more likely to implement improper password handling.
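To make the XSS category concrete, here is a minimal sketch of the pattern reviewers should look for. The function names are hypothetical and the example is not taken from any of the cited studies. It only shows the difference between interpolating user text into HTML verbatim and escaping it first with Python's standard `html.escape`.

```python
import html


def render_comment_unsafe(comment: str) -> str:
    # Vulnerable: user-supplied text is placed into the page verbatim,
    # so any markup it contains is interpreted by the browser.
    return f"<p>{comment}</p>"


def render_comment_safe(comment: str) -> str:
    # html.escape replaces &, <, > and quote characters with entities,
    # so the browser treats the text as data rather than markup.
    return f"<p>{html.escape(comment)}</p>"


payload = "<script>alert(1)</script>"
print(render_comment_unsafe(payload))  # the script tag survives intact
print(render_comment_safe(payload))    # <p>&lt;script&gt;alert(1)&lt;/script&gt;</p>
```

In a real application a templating engine with auto-escaping would normally do this job. The point is that the unsafe version looks perfectly reasonable at a glance, which is why it slips through hurried reviews.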

Teams adopting AI for “move fast” velocity are unknowingly accepting higher defect and security risk. Without mandatory verification workflows, AI-generated vulnerabilities reach production. One major security breach from unverified AI code could outweigh years of velocity gains.

Why 52% Skip Verification: Time Pressure and Review Fatigue

Despite recognizing AI’s reliability problems, more than half of developers don’t consistently verify AI-generated code before committing. The primary reason: reviewing AI code takes more time than reviewing human code, yet organizations pressure teams to ship quickly. This creates perverse incentives to skip verification.

PRs are 18% larger with AI adoption, overwhelming reviewers who already struggle with capacity constraints. Amazon CTO Werner Vogels coined the term “verification debt” for this accumulated unverified code: similar to technical debt, but specific to code that was never properly validated.

Developers aren’t irresponsible. They’re caught between knowing AI code needs verification and facing organizational pressure to ship fast. The result: verification debt accumulates in codebases, surfacing later as bugs, security incidents, or maintenance nightmares. As one Hacker News commenter noted, “AI code takes longer to review because you need to verify the logic, not just style.”

Technical Debt That Compounds: “Locally Reasonable, Globally Arbitrary”

AI-generated code creates a unique form of technical debt that compounds faster than traditional debt. Ox Security researchers describe it as “locally reasonable, globally arbitrary”—each AI function makes sense in isolation but violates broader architectural patterns, creating codebases that drift into incoherence over time.

GitClear analyzed 211 million lines of code and found AI-driven development is “degrading code quality.” AI tools routinely violate the DRY (Don’t Repeat Yourself) principle, copying similar logic everywhere instead of refactoring properly. Linters catch syntax errors but miss these systematic architectural problems, allowing them to compound undetected.
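A small, invented example shows what this kind of DRY violation looks like in practice. Everything below is hypothetical (the handlers, the helper, and the simplistic email rule). It contrasts the same check pasted into two functions with a single shared helper.

```python
# Before: the same rule pasted into two handlers, as an assistant might generate them.
def create_user_dup(email: str) -> str:
    if email.count("@") != 1 or email.startswith("@") or email.endswith("@"):
        raise ValueError(f"invalid email: {email!r}")
    return f"created {email}"


def invite_user_dup(email: str) -> str:
    if email.count("@") != 1 or email.startswith("@") or email.endswith("@"):
        raise ValueError(f"invalid email: {email!r}")
    return f"invited {email}"


# After: one shared helper, so a change to the rule lands in one place.
def require_valid_email(email: str) -> str:
    if email.count("@") != 1 or email.startswith("@") or email.endswith("@"):
        raise ValueError(f"invalid email: {email!r}")
    return email


def create_user(email: str) -> str:
    return f"created {require_valid_email(email)}"


def invite_user(email: str) -> str:
    return f"invited {require_valid_email(email)}"


print(create_user("dev@example.com"))  # created dev@example.com
```

Both versions pass a linter. Only the second one stays correct when the validation rule changes and someone updates just one copy.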

Forrester predicts 75% of tech decision-makers will face moderate-to-severe technical debt by 2026, largely from unverified AI code. Unlike traditional technical debt—known shortcuts made consciously—AI debt is invisible and accumulates unconsciously. Teams discover the problem months later when refactoring becomes impossible or onboarding new developers takes weeks because the codebase lacks consistent patterns.

The Path Forward: Treat AI Code as Untrusted Input

The solution isn’t abandoning AI tools—it’s building verification into the workflow. Leading teams implement “verification-first” approaches: automated security scanning before commit, mandatory testing with greater than 70% coverage, static analysis integrated into IDEs, and treating AI code as “untrusted input” requiring validation like user-submitted data.
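As a rough illustration of what a “verification-first” gate might look like, the sketch below combines the checks listed above into one merge decision. It assumes a hypothetical `ChangeReport` that a CI job assembles from whatever scanner, static analyzer, and coverage tool a team already runs. None of the names correspond to a real tool's API, and `list[str]` annotations require Python 3.9 or later.

```python
from dataclasses import dataclass

MIN_COVERAGE = 70.0  # percent; coverage must be greater than this to pass


@dataclass
class ChangeReport:
    """Hypothetical per-PR summary assembled by a CI job."""
    security_findings: int       # open findings from the security scanner
    static_analysis_errors: int  # errors from static analysis
    coverage_percent: float      # test coverage of the change
    human_approved: bool         # at least one human reviewer approved


def blocking_reasons(report: ChangeReport) -> list[str]:
    """Return every reason the change may not merge; an empty list means it may."""
    reasons = []
    if report.security_findings > 0:
        reasons.append("unresolved security findings")
    if report.static_analysis_errors > 0:
        reasons.append("static analysis errors")
    if report.coverage_percent <= MIN_COVERAGE:
        reasons.append(f"coverage not above {MIN_COVERAGE:.0f}%")
    if not report.human_approved:
        reasons.append("no human review approval")
    return reasons


report = ChangeReport(security_findings=0, static_analysis_errors=0,
                      coverage_percent=64.5, human_approved=True)
print(blocking_reasons(report))  # ['coverage not above 70%']
```

Returning all blocking reasons at once, rather than stopping at the first, is a design choice: the author of the change sees everything that needs fixing in a single pass.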

As Google engineer Addy Osmani notes, “Developers who succeed with AI at high velocity aren’t those who blindly trust it; they’re those who’ve built verification systems that catch issues before reaching production.” Tools like SonarQube (supporting 35+ languages), CodeRabbit (whose analysis found 1.7x more issues in AI PRs), and Codacy (with auto-fix capabilities) are becoming essential infrastructure.


The best-practice workflow looks like this: Generate → Automated Scan → Human Review → Test → Merge. Compare that to the current workflow many teams use: Prompt → Generate → Skip Verify → Merge → Debug in Production. The difference is the gap between shipping fast and shipping well.
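The ordering in the best-practice workflow matters because each stage guards the next. A minimal sketch, assuming a change is represented as a plain dictionary and each stage is a hypothetical pass/fail check, might run the stages in sequence and stop at the first failure (Python 3.9 or later for the built-in generic annotations):

```python
from typing import Callable

# Each stage pairs a name with a check that returns True when the change passes.
Stage = tuple[str, Callable[[dict], bool]]


def run_pipeline(change: dict, stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failure, so nothing unverified merges."""
    log = []
    for name, check in stages:
        if not check(change):
            return False, log + [f"{name}: FAILED"]
        log.append(f"{name}: ok")
    return True, log + ["merge: ok"]


# Hypothetical checks; real ones would call a scanner, a review system, and a test runner.
stages = [
    ("automated scan", lambda c: c["scan_findings"] == 0),
    ("human review", lambda c: c["approved"]),
    ("test", lambda c: c["tests_passed"]),
]

change = {"scan_findings": 2, "approved": True, "tests_passed": True}
merged, log = run_pipeline(change, stages)
print(merged, log)  # False ['automated scan: FAILED']
```

Putting the automated scan first keeps cheap machine checks ahead of expensive human attention: a reviewer never spends time on a change the scanner would have rejected anyway.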

Ninety percent of Fortune 100 companies are now investing in AI code review automation. The “AI productivity paradox” is solvable, but it requires investment in verification tooling and process changes. Teams that build robust verification workflows get AI’s speed benefits without the quality trade-offs. Those that don’t accumulate verification debt that eventually slows them down anyway.

Key Takeaways

- The gap between 96% distrust and 48% consistent verification creates “verification debt” that accumulates like technical debt, surfacing later as bugs and security incidents

- AI code ships with 1.7x more bugs and a 40-50% security flaw rate, with specific vulnerability types up to 2.74x more common than in human code

- Developer toil remains at 24% of weekly time: AI shifted work from creation to verification rather than eliminating it

- Mandatory verification workflows are non-negotiable: automated scanning, >70% test coverage, and treating AI code as untrusted input

- The productivity paradox is solvable with investment in verification tools (SonarQube, CodeRabbit, Codacy) and process changes that match review capacity to generation speed