On January 1, DeepSeek released a technical paper that challenges how the AI industry trains large language models. Instead of throwing more compute at the problem—the default strategy for OpenAI, Anthropic, and Google—the Chinese startup’s Manifold-Constrained Hyper-Connections (mHC) architecture uses mathematical constraints to make training more stable and efficient. The proof? DeepSeek-V3.2-Speciale now beats GPT-5 on reasoning benchmarks. At CES 2026, even Nvidia CEO Jensen Huang credited DeepSeek with “activating” the open-source AI movement.

The Training Bottleneck Problem

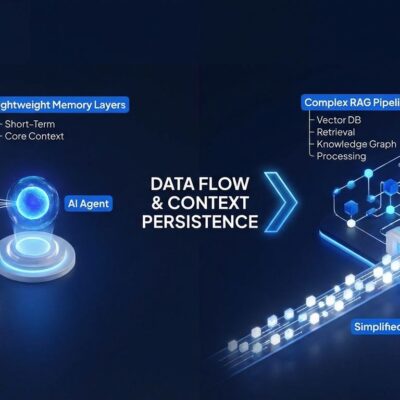

As AI models scale to billions of parameters, they hit a fundamental bottleneck: the single residual stream. During training, gradient signals need to flow backward through hundreds of layers. Residual connections, invented in 2015, create shortcuts that help gradients bypass intermediate layers and prevent training collapse. But when you scale to 27 billion parameters or more, that single stream becomes the limiting factor for how much information can flow between layers.
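To make the shortcut idea concrete, here is a minimal NumPy sketch. It assumes a toy stack of purely linear layers with small random weights (the depth, width, and weight scale are illustrative values, not taken from any DeepSeek model) and compares how much gradient signal survives end to end with and without the residual shortcut.

```python
import numpy as np

def end_to_end_jacobian(weights, residual):
    """Jacobian of the output w.r.t. the input for a stack of linear layers.

    Plain layer:    x_next = W @ x        -> Jacobian factor W
    Residual layer: x_next = x + W @ x    -> Jacobian factor I + W
    """
    dim = weights[0].shape[0]
    jac = np.eye(dim)
    for w in weights:
        factor = np.eye(dim) + w if residual else w
        jac = factor @ jac
    return jac

rng = np.random.default_rng(0)
depth, dim = 50, 16
# Small random weights: hypothetical toy values, not from a real model.
weights = [0.1 * rng.normal(size=(dim, dim)) / np.sqrt(dim) for _ in range(depth)]

# The spectral norm of the Jacobian bounds how strongly a gradient is scaled.
plain_gain = np.linalg.norm(end_to_end_jacobian(weights, residual=False), 2)
residual_gain = np.linalg.norm(end_to_end_jacobian(weights, residual=True), 2)
print(f"plain stack gradient gain:    {plain_gain:.3e}")
print(f"residual stack gradient gain: {residual_gain:.3e}")
```

In this toy setting the plain stack's gain collapses toward zero, while the residual stack keeps the gradient at a usable scale because every layer's Jacobian contains an identity term.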

The previous attempt to fix this—Hyper-Connections (HC)—widened the stream and diversified pathways. It worked in theory but caused signal explosion in practice. Training runs would hit “Amax Gain Magnitude” values around 3,000, meaning gradients were blowing up as they propagated through the network. This made large models unstable and expensive to train. HC also had massive memory overhead, making deployment impractical.

How mHC Solves It With Manifolds

DeepSeek’s innovation constrains the mixing matrices to live on a specific mathematical structure: the Birkhoff polytope, the manifold of doubly stochastic matrices. The Sinkhorn-Knopp algorithm enforces this constraint in about 20 iterations by alternately rescaling rows and columns. What does that mean in practice? Every entry is non-negative and every row and column sums to one, so each layer can only redistribute signal across streams, never amplify it. Because the product of doubly stochastic matrices is itself doubly stochastic, that bound holds no matter how deep the model gets.
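The projection step can be sketched in a few lines of NumPy. This is a generic textbook version of Sinkhorn-Knopp, not DeepSeek's implementation; the function name, the 4×4 size, and the random input are assumptions made for illustration.

```python
import numpy as np

def sinkhorn_knopp(logits, n_iters=20):
    """Map an unconstrained square matrix to an approximately doubly stochastic one."""
    # Exponentiate so every entry is strictly positive (shifted for numerical stability).
    m = np.exp(logits - logits.max())
    for _ in range(n_iters):
        m = m / m.sum(axis=1, keepdims=True)  # rescale so each row sums to 1
        m = m / m.sum(axis=0, keepdims=True)  # rescale so each column sums to 1
    return m

rng = np.random.default_rng(0)
raw = rng.normal(size=(4, 4))  # hypothetical unconstrained parameters for 4 streams
mix = sinkhorn_knopp(raw)
print("row sums:   ", mix.sum(axis=1))
print("column sums:", mix.sum(axis=0))
```

Because the loop ends on a column rescale, column sums are exact up to floating-point error, while row sums are only approximately one after a finite number of iterations.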

The result: mHC reduces Amax Gain Magnitude from about 3,000 down to 1.6, an improvement of roughly three orders of magnitude. Training a model with a 4× wider residual stream now adds only 6.7% overhead, compared to the much higher cost of standard Hyper-Connections. As the DeepSeek researchers put it, mHC can “potentially illuminate new pathways for next-generation foundational architectures” by using topology to stabilize optimization.
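A toy experiment shows why the constraint keeps the gain near one. The sketch below multiplies 60 mixing matrices together and measures the largest absolute row or column sum of the product. That metric is a stand-in chosen for illustration, and the paper's exact definition of Amax Gain Magnitude may differ. The unconstrained matrices (identity plus a small non-negative perturbation) are likewise an assumption, not a model of what Hyper-Connections actually learn.

```python
import numpy as np

def sinkhorn_knopp(logits, n_iters=20):
    """Generic Sinkhorn-Knopp: alternate row and column normalization."""
    m = np.exp(logits - logits.max())
    for _ in range(n_iters):
        m = m / m.sum(axis=1, keepdims=True)
        m = m / m.sum(axis=0, keepdims=True)
    return m

def composite_gain(mats):
    """Largest absolute row or column sum of the product of all matrices (toy metric)."""
    prod = np.eye(mats[0].shape[0])
    for m in mats:
        prod = m @ prod
    abs_prod = np.abs(prod)
    return max(abs_prod.sum(axis=1).max(), abs_prod.sum(axis=0).max())

rng = np.random.default_rng(0)
n_streams, depth = 4, 60

# Unconstrained: identity plus a small non-negative perturbation (toy assumption).
unconstrained = [
    np.eye(n_streams) + rng.uniform(0.0, 0.05, size=(n_streams, n_streams))
    for _ in range(depth)
]
# Constrained: random parameters projected toward doubly stochastic matrices.
constrained = [
    sinkhorn_knopp(rng.normal(size=(n_streams, n_streams)))
    for _ in range(depth)
]

print(f"unconstrained composite gain: {composite_gain(unconstrained):.3e}")
print(f"constrained composite gain:   {composite_gain(constrained):.3f}")
```

The unconstrained product grows geometrically with depth, while the constrained product stays close to one. Any small excess above one comes from stopping Sinkhorn-Knopp after a finite number of iterations.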

This isn’t incremental improvement. It’s a fundamental rethinking of how information flows through deep networks during training.

Performance Proof: DeepSeek-V3.2 Results

The mHC architecture isn’t just theory. DeepSeek-V3.2, released alongside the paper, demonstrates it in production. The high-compute variant, V3.2-Speciale, outperforms GPT-5 on reasoning benchmarks and matches Gemini-3.0-Pro, and it excels in coding, reasoning, and agentic tasks. mHC itself performs better than standard Hyper-Connections across eight different AI benchmarks.

The technical innovations stack up:

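- DeepSeek Sparse Attention (DSA): Reduces the core attention cost from O(n²) to roughly O(nk), where k is the number of tokens each query attends to. For a fixed k this is close to linear in n, enabling efficient processing of 128K-token contexts.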

- Enhanced Reinforcement Learning: Allocates more compute budget to the learning phase, not just pre-training.

- Agentic Task Synthesis: A new pipeline for tool-use capabilities in agent applications.
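The sparse-attention idea in the first bullet can be illustrated with a generic top-k sketch. This is not DeepSeek's DSA implementation: the function names, sizes, and the choice to reuse the attention score as the selection score are all simplifying assumptions. In this toy the selection step still scans every key; the saving is that the softmax and value aggregation touch only k tokens per query.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def dense_attention(q, keys, values):
    """Single-query attention over all n keys."""
    scores = keys @ q / np.sqrt(q.shape[0])
    return softmax(scores) @ values

def topk_sparse_attention(q, keys, values, k):
    """Single-query attention restricted to the k highest-scoring keys."""
    # Toy selection step: reuse the attention score as the relevance score.
    # A production system would use a much cheaper scorer here.
    scores = keys @ q / np.sqrt(q.shape[0])
    top = np.argpartition(scores, -k)[-k:]  # indices of the k largest scores
    return softmax(scores[top]) @ values[top]

rng = np.random.default_rng(0)
n, d = 1024, 32  # hypothetical context length and head dimension
q = rng.normal(size=d)
keys = rng.normal(size=(n, d))
values = rng.normal(size=(n, d))

sparse_out = topk_sparse_attention(q, keys, values, k=64)
dense_out = dense_attention(q, keys, values)
print("difference from dense attention:", np.linalg.norm(sparse_out - dense_out))
```

Setting k equal to n recovers dense attention exactly, which makes the trade-off explicit: a smaller k means less work per query in exchange for ignoring low-scoring tokens.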

DeepSeek is transparent about the limitations. V3.2 has narrower world knowledge than GPT-5, ongoing token efficiency challenges, and still lags behind frontier models on complex multi-step reasoning. But here’s the key point: a Chinese startup with a smaller budget is beating OpenAI’s flagship model on reasoning benchmarks through architectural cleverness, not capital advantage.

The models are open-source and available on Hugging Face (except the Speciale variant, which is API-only). This is frontier performance without the price tag.

Nvidia’s CEO Validates the Open-Source Shift

At CES 2026 in Las Vegas, Nvidia CEO Jensen Huang made DeepSeek a centerpiece of his keynote. He said the company “activated literally this entire movement” toward open-source AI and called DeepSeek R1 “the first open model that’s a reasoning system” that “caught the world by surprise.” According to Huang, open models like DeepSeek-V3.2, Meta’s Llama, and Alibaba’s Qwen are now generating “roughly one in four tokens”—25% of all AI inference workloads.

Open-source models have “reached the frontier,” Huang noted, though they remain about six months behind the most advanced proprietary systems. That gap is closing fast, and architectural innovations like mHC are the reason why. Rather than reducing overall compute demand—as some feared—the proliferation of open models has actually expanded usage. When the CEO of the company selling AI chips says open-source is winning, that’s a market signal.

From Compute-Heavy to Architecture-Smart Scaling

DeepSeek’s mHC represents a broader shift in how AI companies think about scaling. The old playbook was simple: more compute equals better performance. Bigger GPU clusters, longer training runs, larger datasets. This works if you have billions in capital, like OpenAI, Anthropic, or Google.

The new playbook is about efficiency. Can you rethink the fundamental architecture to get better performance without linearly scaling resources? mHC says yes. By using manifold constraints and doubly stochastic matrices, DeepSeek trains larger, more stable models at a fraction of the cost. This is the “moneyball” moment for AI—using smarter math to compete against richer rivals.

It’s also democratizing frontier AI. Smaller teams without access to massive GPU clusters can now train competitive models if they’re willing to innovate on architecture. Open-source accelerates this: instead of hoarding techniques, companies like DeepSeek, Meta, and Alibaba share their innovations. The community builds on them, and the gap with proprietary models shrinks faster.

Regulatory Tensions Don’t Stop Innovation

DeepSeek isn’t operating in a vacuum. Multiple governments—Australia, Germany, the Netherlands, Taiwan, and the United States—are scrutinizing the company over data privacy and security concerns. DeepSeek’s privacy policy states that user data is stored on servers in China, which raises red flags for government and enterprise use in some markets.

But here’s the thing: technical innovation and geopolitical concerns operate on different tracks. mHC’s mathematical foundations are sound regardless of where the servers sit. The open-source nature of the models means anyone can download, audit, and host them locally. Regulatory headwinds may limit DeepSeek’s direct reach in certain markets, but the architectural breakthrough is out there for the world to use.

What Comes Next

The research community is calling for more testing before declaring mHC the new standard. Fair enough. But the early results are compelling: better stability, lower overhead, competitive performance, and open-source availability. If mHC scales to trillion-parameter models—still an open question—it could redefine how the industry approaches training at the largest scales.

DeepSeek typically releases major models before China’s Spring Festival (mid-February). Industry watchers expect another drop soon, likely incorporating mHC more deeply. Whether other AI companies adopt manifold-based architectures or develop competing techniques, the pressure is on. Efficiency matters now, and architectural innovation, not just the size of a funding round, is a differentiator.

The takeaway: throwing compute at problems is the lazy approach. DeepSeek proved that rethinking fundamentals—using classical topology and modern deep learning—can beat brute force. Open-source just closed the gap a little more.