OpenAI launched ChatGPT Health yesterday, January 7, revealing that 230 million ChatGPT users already ask health questions weekly. The dedicated health interface formalizes what millions already do unofficially, using ChatGPT for medical advice, but it opens a regulatory minefield. The FDA can regulate AI that diagnoses disease as a medical device, which can mean clinical evidence and premarket review; HIPAA imposes strict privacy controls on health data held by covered entities and their business associates; and liability remains unresolved when AI gives dangerous medical advice. OpenAI hasn’t publicly addressed any of this.

The timing couldn’t be worse for privacy optics. Illinois reported a healthcare data breach affecting 600,000+ patients the day before ChatGPT Health’s announcement, a reminder of how exposed health information already is before anyone hands it to a tech company.

FDA, HIPAA, and the Liability Question OpenAI Won’t Answer

ChatGPT Health enters a regulatory gray zone. The FDA regulates software that diagnoses or treats disease as a medical device, but “informational tools” like WebMD typically escape oversight. OpenAI hasn’t clarified which category ChatGPT Health falls into. If users describe symptoms and the AI suggests possible conditions, that could constitute diagnosis and trigger FDA authority.

HIPAA compliance is equally murky. Health conversations can contain the kind of information HIPAA treats as Protected Health Information (PHI) when a covered entity or its business associate holds it, and those organizations must safeguard it with measures such as access controls, audit trails, and encryption. OpenAI hasn’t disclosed whether ChatGPT Health meets HIPAA standards or whether health data will train future models.

Legal liability is also unresolved. If ChatGPT Health misdiagnoses a serious condition and a patient delays treatment, who faces the lawsuit: OpenAI, the user, or both?
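The access controls and audit trails HIPAA expects from covered entities are concrete engineering work for anyone building health tech. Here is a hypothetical, in-memory sketch (the `PHIStore` class, its roles, and its ownership rule are assumptions for illustration, not OpenAI’s or any vendor’s API): every read attempt is checked against a simple role policy and logged, whether it is granted or denied. A real system would also need encryption, durable tamper-evident log storage, and authenticated identities.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PHIStore:
    """Hypothetical in-memory store: role-based reads plus an append-only audit log."""

    # record_id -> (owner_user_id, record_text)
    records: dict[str, tuple[str, str]] = field(default_factory=dict)
    # One JSON line per access attempt; entries are only ever appended.
    audit_log: list[str] = field(default_factory=list)

    def _is_allowed(self, user_id: str, role: str, record_id: str) -> bool:
        # Assumed policy: clinicians may read any record, patients only their own.
        if record_id not in self.records:
            return False
        owner, _ = self.records[record_id]
        return role == "clinician" or (role == "patient" and owner == user_id)

    def read(self, user_id: str, role: str, record_id: str) -> str | None:
        granted = self._is_allowed(user_id, role, record_id)
        # Log the attempt before returning, so denied reads are audited too.
        self.audit_log.append(json.dumps({
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user_id,
            "role": role,
            "record": record_id,
            "action": "read",
            "granted": granted,
        }))
        return self.records[record_id][1] if granted else None
```

For example, with `store = PHIStore(records={"r1": ("alice", "HbA1c 6.1%")})`, `store.read("alice", "patient", "r1")` returns the record, while `store.read("bob", "patient", "r1")` returns `None` and still appends an entry with `"granted": false` to `store.audit_log`.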

Developers building health tech on OpenAI’s platform need answers, and regulators are already moving on consumer AI: California has banned AI chatbots in children’s toys for four years over safety concerns. If the FDA classifies ChatGPT Health as a medical device, apps built on it could face medical-device requirements of their own, such as clinical trials, FDA approval, and continuous monitoring.


AI Hallucinations Meet Healthcare: A Dangerous Combination

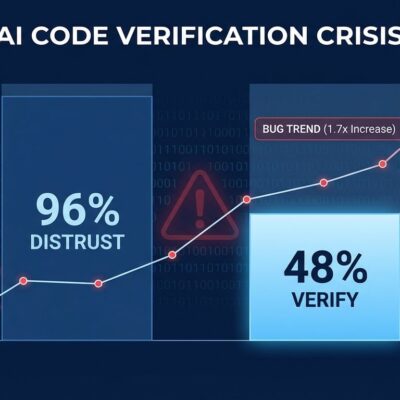

In early studies, ChatGPT answered roughly 60-70% of US Medical Licensing Exam (USMLE) questions correctly, near the exam’s passing threshold. Human doctors score 95%+. The gap matters because AI mistakes in healthcare can kill people, not just frustrate users. ChatGPT also has documented hallucination problems: confidently stating wrong drug dosages, suggesting discontinued medications, and missing contraindications.

The critical issue isn’t just accuracy but confidence. AI presents incorrect medical information with the same authority as correct information, which invites users to trust dangerous advice. A drug that’s safe for most people but lethal for pregnant women? ChatGPT might miss that unless explicitly told about the pregnancy. Rare diseases requiring immediate treatment? ChatGPT performs poorly on uncommon conditions, achieving less than 40% diagnostic accuracy compared to specialists’ 80%+.

In healthcare, even a 1% error rate adds up. If each of the 230 million weekly users OpenAI cited asked just one health question, 1% would mean about 2.3 million wrong answers a week (roughly 330,000 a day), any of which could harm a patient. The question is whether ChatGPT Health includes sufficient guardrails (fact-checking systems, medical disclaimers, emergency escalation protocols) or is just the general model with healthcare marketing.
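Emergency escalation and disclaimers are the simplest of those guardrails to picture. Below is a minimal, hypothetical sketch; the red-flag patterns, the messages, and the `generate` callable standing in for a model call are all assumptions, not a description of how ChatGPT Health works. Messages that mention a possible emergency are routed to an escalation notice before any model call, and every other answer gets a disclaimer appended. Keyword matching like this is crude; a production system would need clinically validated triage rules.

```python
import re
from collections.abc import Callable

# Hypothetical red-flag patterns; NOT a clinically validated list.
RED_FLAG_PATTERNS = (
    r"chest pain",
    r"can'?t breathe|trouble breathing|shortness of breath",
    r"suicid",  # matches "suicide", "suicidal"
    r"overdos",  # matches "overdose", "overdosed"
    r"slurred speech|face (?:is )?drooping",
)
RED_FLAG_RE = re.compile("|".join(f"(?:{p})" for p in RED_FLAG_PATTERNS), re.IGNORECASE)

ESCALATION_MESSAGE = (
    "This could be a medical emergency. Contact emergency services "
    "or go to the nearest emergency room now."
)
DISCLAIMER = "This is general information, not a diagnosis. Check with a clinician."


def guarded_reply(user_message: str, generate: Callable[[str], str]) -> str:
    """Escalate red-flag messages without calling the model; otherwise add a disclaimer."""
    if RED_FLAG_RE.search(user_message):
        return ESCALATION_MESSAGE
    answer = generate(user_message)  # hypothetical model call supplied by the caller
    return f"{answer}\n\n{DISCLAIMER}"
```

The ordering is the design choice that matters: the red-flag check runs before the model is called, so an emergency response never depends on the model getting its answer right.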

230M Weekly Users Reveal a Healthcare Access Crisis, Not AI Innovation

The 230 million people asking health questions every week expose a deeper problem than AI can solve: broken healthcare access. Users bypass doctors because of cost (US doctor visits run $100-$300 vs. $20/month for ChatGPT Plus), wait times (weeks for an appointment vs. instant AI responses), and convenience (3 AM health questions, embarrassing topics that feel easier to raise with an AI).

People already turn to ChatGPT for symptom triage (“is this serious?”), medication questions, lab result interpretation, and second-opinion research. The risk is that “ChatGPT said it’s fine” could delay necessary medical care. OpenAI is formalizing existing behavior, but the underlying issue is inaccessible, unaffordable healthcare, not a lack of AI chatbots.

For developers, this is both a market opportunity and an ethical responsibility. AI health tools can serve underserved populations, but building them requires understanding regulatory requirements and accuracy limits. The temptation to prioritize speed over safety is real when 230 million users are asking for help.

Illinois Breach, HIPAA Questions, and Regulatory Reckoning

ChatGPT Health launched the same week Illinois health systems reported a breach exposing 600,000+ patient records. Healthcare data is among the most sensitive personal information there is, and major breaches such as Anthem and Change Healthcare have affected over 100 million patients over the past decade. Trust in tech companies handling health data is low, and OpenAI hasn’t explained its privacy practices.

HIPAA requires covered entities to protect health data with safeguards such as access controls, audit trails, and encryption, and it gives patients the right to access their records and request corrections (though not a general right to have them deleted). Still unknown: whether OpenAI stores health conversations, uses them for AI training, or shares data with third parties. De-identification is also harder than it sounds, because health conditions combined with demographics can re-identify patients even without names.
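To see why removing names isn’t enough, here is a minimal k-anonymity check on hypothetical records (the rows, field names, and ZIP codes are invented for illustration). k is the size of the smallest group of records sharing the same quasi-identifiers; k = 1 means at least one patient is unique and can be singled out by anyone who knows their ZIP code, birth date, and sex. The `generalize` function coarsens those fields loosely in the spirit of HIPAA’s Safe Harbor method (3-digit ZIP, birth year only). It skips Safe Harbor’s other rules, so treat it as an illustration, not a compliant de-identification routine.

```python
from collections import Counter

# Hypothetical records: no names, just quasi-identifiers and a diagnosis.
RECORDS = [
    {"zip": "60614", "birth_date": "1985-03-02", "sex": "F", "dx": "type 2 diabetes"},
    {"zip": "60614", "birth_date": "1985-07-19", "sex": "F", "dx": "asthma"},
    {"zip": "60615", "birth_date": "1985-11-30", "sex": "F", "dx": "migraine"},
    {"zip": "60657", "birth_date": "1990-01-05", "sex": "M", "dx": "hypertension"},
    {"zip": "60657", "birth_date": "1990-06-21", "sex": "M", "dx": "depression"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def k_anonymity(rows: list[dict[str, str]], keys: tuple[str, ...] = QUASI_IDENTIFIERS) -> int:
    """Size of the smallest group of rows sharing identical quasi-identifier values."""
    groups = Counter(tuple(row[k] for k in keys) for row in rows)
    return min(groups.values())


def generalize(row: dict[str, str]) -> dict[str, str]:
    """Coarsen quasi-identifiers: keep the first 3 ZIP digits and only the birth year.

    Loosely modeled on HIPAA Safe Harbor; omits its other rules (e.g. the
    population check for 3-digit ZIPs and the 90+ age cap).
    """
    return {**row, "zip": row["zip"][:3] + "XX", "birth_date": row["birth_date"][:4]}


print(k_anonymity(RECORDS))                           # 1: every patient is unique
print(k_anonymity([generalize(r) for r in RECORDS]))  # 2: smallest group has 2 patients
```

Generalizing raises k from 1 to 2, but k-anonymity alone doesn’t rule out inference: if every member of a group shared the same diagnosis, the group would still reveal it.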

The regulatory reckoning is coming. Prediction: the FDA issues guidance on AI health tools within 6-12 months, classifying diagnostic AI as medical devices requiring clinical trials. Physicians’ groups will lobby against unlicensed AI diagnosis. The first lawsuits will follow when patients are harmed by AI advice. OpenAI is likely prepared for this fight, but smaller health tech startups building on OpenAI’s platform may not be.


Key Takeaways

- ChatGPT Health enters a regulatory gray zone where FDA medical device classification, HIPAA compliance, and legal liability remain unresolved—developers building health tech on OpenAI’s platform should plan for regulatory changes

- ChatGPT’s medical accuracy (60-70% on USMLE questions in early studies) lags human doctors (95%+), and hallucination risks in healthcare can cause patient harm at scale given 230 million weekly users

- The massive user demand reveals healthcare access failures—cost, wait times, and convenience drive AI adoption, but AI doesn’t fix the underlying broken system

- Privacy concerns intensify after the Illinois breach of 600,000+ patient records the day before launch; OpenAI hasn’t disclosed HIPAA compliance details or data usage policies

- FDA regulation looks likely within 6-12 months, probably classifying diagnostic AI as medical devices requiring clinical trials and continuous monitoring

OpenAI formalizes what 230 million users already do, but healthcare regulators won’t ignore AI medical advice much longer. The question isn’t whether regulation comes, but how quickly and how strictly. Developers betting on ChatGPT Health should prepare for compliance requirements, not just product features.