DeepSeek released R1 on January 20, 2025: a 671-billion-parameter reasoning model that matches OpenAI’s o1 on math and coding benchmarks while its API costs about 96% less. Released under an MIT license with full commercial rights, R1 shows that a Chinese AI firm with limited resources can build frontier AI without a billion-dollar budget. The narrative that only well-funded US labs can compete just got shattered.

The Cost Disruption That Actually Matters

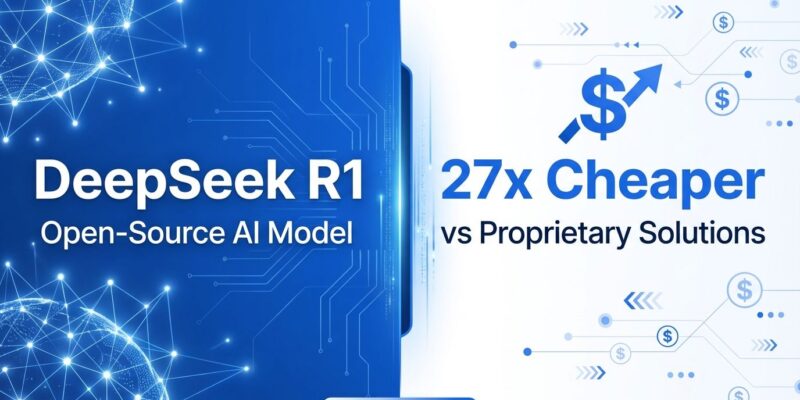

Here’s the pricing reality: DeepSeek R1’s API costs $0.55 per million input tokens and $2.19 per million output tokens. OpenAI’s o1? $15 input, $60 output. That makes R1 about 27x cheaper on list price, and some estimates put the gap near 50x once total deployment costs are factored in. This isn’t a minor optimization. It removes the primary barrier preventing indie developers and startups from integrating reasoning AI into their products.
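To see what the list prices mean for a single request, here is a minimal sketch of the arithmetic. The prices come from the paragraph above; the workload (2,000 prompt tokens, 8,000 generated tokens) and the function name `request_cost` are hypothetical, chosen only for illustration.

```python
# Prices in USD per million tokens, as quoted in this article.
PRICES = {
    "deepseek-r1": {"input": 0.55, "output": 2.19},
    "openai-o1": {"input": 15.00, "output": 60.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the listed per-million-token rates."""
    rates = PRICES[model]
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000


# Hypothetical workload: 2,000 prompt tokens and 8,000 generated tokens.
r1_cost = request_cost("deepseek-r1", 2_000, 8_000)
o1_cost = request_cost("openai-o1", 2_000, 8_000)
print(f"R1: ${r1_cost:.4f}  o1: ${o1_cost:.4f}  ratio: {o1_cost / r1_cost:.1f}x")
```

Because the input and output prices differ by nearly the same factor, the ratio stays close to 27x whatever mix of input and output tokens you plug in.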

The impact is already real. AWS integrated R1 into Bedrock and SageMaker, Microsoft added it to Azure AI Foundry, and IBM plugged it into watsonx.ai. When enterprise platforms move this fast, the economics are validated. But the real win is for individual developers who can now experiment freely without burning through cash reserves.

MIT License Means You Actually Own It

Cost savings don’t matter if you’re locked into a vendor’s terms of service. DeepSeek R1’s MIT license changes the game: full commercial use, modifications allowed, distillation permitted. Unlike o1, which is available only through an API, R1’s weights can be downloaded from Hugging Face (linked from DeepSeek’s GitHub repository), fine-tuned for your domain, and deployed wherever you want.

For healthcare companies, this means keeping patient data on-premises. For financial firms, it means regulatory compliance without vendor negotiations. For startups, it means building intellectual property without licensing fears. You control the stack. OpenAI controls nothing.

Performance: Where R1 Actually Stands

The benchmarks matter because they show R1 isn’t a budget compromise; it’s competitive. On AIME 2024 math problems, R1 scored 79.8% versus o1’s 79.2%. On the MATH-500 dataset, R1 hit 97.3%. Coding on Codeforces shows near parity: R1 at the 96.3rd percentile, o1 at the 96.6th. General knowledge (MMLU) lags slightly, with R1 at 90.8% and o1 at 91.8%, but not enough to justify a 27x price premium for most real-world use cases.

Honesty check: o1 scored 26% higher on specific reasoning puzzles in one study. But for code generation, math-heavy logic, and technical problem-solving, the tasks developers actually face, R1 delivers comparable results. Perfect is the enemy of good enough, especially when “good enough” costs roughly 4% of the alternative.

Run It Locally in Five Minutes

DeepSeek released distilled versions at 1.5B, 7B, 8B, 14B, 32B, and 70B parameters. These are smaller Qwen and Llama models fine-tuned on R1’s outputs, not shrunken copies of the full 671B model. The 7B/8B models run on consumer hardware with 16GB RAM. Deployment via Ollama takes one command: `ollama run deepseek-r1:7b`. No API keys. No cloud setup. No vendor account. Your data never leaves your network.
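A rough way to sanity-check the 16GB figure is to estimate weight memory as parameter count times bytes per weight. The sketch below is a back-of-the-envelope calculation, not a measurement. It ignores the KV cache and runtime overhead, and the 4-bit quantization level is an assumption, since the article does not say which quantization a given Ollama tag ships.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone (no KV cache or runtime overhead)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Distilled sizes listed in the article, at 16-bit and (assumed) 4-bit precision.
for size in (1.5, 7, 8, 14, 32, 70):
    fp16 = weight_memory_gb(size, 16)
    q4 = weight_memory_gb(size, 4)
    print(f"{size:>4}B  fp16 ~{fp16:6.1f} GB   4-bit ~{q4:5.1f} GB")
```

By this estimate a 7B model needs about 14 GB at 16-bit precision and about 3.5 GB at 4-bit, which is why quantized 7B/8B builds fit comfortably on a 16GB machine while the 32B and 70B variants call for more serious hardware.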

This changes what’s possible. Build offline applications that work without internet. Prototype reasoning features without incurring API costs. Deploy in air-gapped environments for security-critical applications. LM Studio offers a GUI alternative for those who prefer visual tools over CLI.
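For prototyping against a local model, a minimal sketch of a client might look like the following. It assumes Ollama is serving on its default local port and that the model returns its chain of thought inside `<think>` tags in the response text; some Ollama versions may expose the reasoning separately, so treat the parsing as an assumption to verify. The helper names `build_payload`, `split_reasoning`, and `ask` are hypothetical.

```python
import json
import re
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "deepseek-r1:7b") -> bytes:
    """Encode a non-streaming generate request as JSON bytes."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def split_reasoning(text: str) -> tuple[str, str]:
    """Separate a <think>...</think> block from the final answer.

    Returns (reasoning, answer); reasoning is empty if no block is found.
    """
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        return "", text.strip()
    return match.group(1).strip(), text[match.end():].strip()


def ask(prompt: str) -> tuple[str, str]:
    """Send the prompt to the local server and return (reasoning, answer)."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.loads(response.read().decode("utf-8"))
    return split_reasoning(body["response"])


# Offline demonstration of the parsing step on a made-up response string.
sample = "<think>\n17 * 23 = 17 * 20 + 17 * 3 = 391\n</think>\n\n391"
reasoning, answer = split_reasoning(sample)
print(answer)
```

With the server running, `ask("What is 17 * 23?")` would return the same kind of pair. Keeping the reasoning separate from the answer lets you log the former and show users only the latter.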

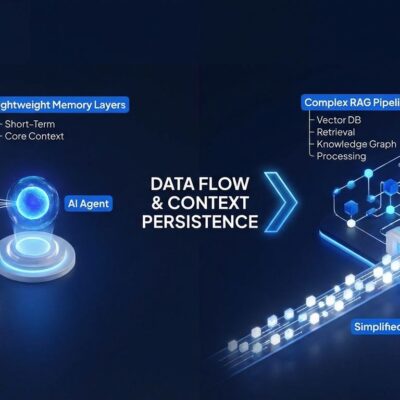

The Architecture That Makes It Work

R1 uses a Mixture of Experts (MoE) architecture: 671 billion total parameters, but only 37 billion activate per forward pass. That’s about 5.5% of the parameters active for any given token. Each of the model’s 58 MoE layers routes every token to 8 of 256 specialized experts, processes them in parallel, and aggregates the results. This is why a 671B model needs roughly the per-token compute of a 37B dense model, although all 671B parameters still have to be held in memory.
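The routing idea can be sketched in a few lines of plain Python. This is a simplified illustration of top-k gating in general, not DeepSeek’s actual gating function: the softmax over the selected scores, the random router scores, and the toy experts are all assumptions made for the example.

```python
import math
import random

NUM_EXPERTS = 256  # routed experts per MoE layer, per the article
TOP_K = 8          # experts activated per token, per the article


def route_token(scores: list[float], top_k: int = TOP_K) -> dict[int, float]:
    """Pick the top_k highest-scoring experts and return normalized gate weights."""
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the selected scores only, so the weights sum to 1.
    peak = max(scores[i] for i in chosen)
    exps = {i: math.exp(scores[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_output(token: float, scores: list[float], experts) -> float:
    """Weighted sum of the chosen experts' outputs; other experts never run."""
    gates = route_token(scores)
    return sum(weight * experts[i](token) for i, weight in gates.items())


random.seed(0)
# Toy experts: each one just scales its input by a different factor.
experts = [lambda x, k=k: x * (k + 1) for k in range(NUM_EXPERTS)]
# Stand-in for the router's learned affinity scores for one token.
scores = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

gates = route_token(scores)
print(f"experts used: {len(gates)} of {NUM_EXPERTS}")
print(f"active parameter share: {37 / 671:.1%}")
```

The point of the sketch is that only the eight selected experts are ever called for a token, so compute scales with the active parameters rather than the total.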

The training innovation matters too. DeepSeek’s R1-Zero showed that reasoning capabilities can emerge from pure reinforcement learning without supervised fine-tuning, overturning conventional wisdom. The production R1 adds supervised refinement to fix readability and language mixing, but the core breakthrough stands: smart design beats brute-force scaling. The “bigger is always better” AI narrative is dead.

What This Actually Means

R1 validates the “efficient models” trend IBM predicted for 2026. While OpenAI, Anthropic, and Google race toward trillion-parameter behemoths, DeepSeek proved you can come close to their performance on math and coding benchmarks with smarter architectures at a fraction of the cost. This economic disruption will force pricing recalibration across the industry. OpenAI’s API monopoly just ended.

Developer impact is immediate: reasoning AI is no longer exclusive to well-funded projects. The MIT license removes legal barriers. The distilled models remove hardware barriers. The 27x cost reduction removes budget barriers. If you’ve been waiting for reasoning AI to become practical, this is the moment.

Try It, But Know the Limits

R1 is optimized for English and Chinese; output quality degrades in other languages. It may hallucinate on implausible premises, constructing elaborate but fictional answers. Language mixing happens in edge cases. Some developers have raised concerns about benchmark overfitting: stellar AIME performance might not fully generalize to novel real-world problems.

These limitations are acceptable trade-offs for 27x cost savings and full ownership. The developer community’s emerging consensus: use DeepSeek V3 for general tasks, escalate to R1 when you need deep reasoning. That workflow makes sense. Compare the benchmarks yourself, test the distilled models locally, and decide if R1 fits your use case. For most developers tired of OpenAI’s pricing, it will.
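The V3-first, R1-on-demand workflow can be sketched as a simple router. Everything here is illustrative: the model identifiers are assumptions about what your provider or local deployment exposes, and the keyword heuristic in `pick_model` is a hypothetical placeholder for whatever signal you actually trust.

```python
# Assumption: these are the identifiers your deployment uses for
# DeepSeek V3 and R1; substitute your own names.
GENERAL_MODEL = "deepseek-chat"
REASONING_MODEL = "deepseek-reasoner"

# Hypothetical keyword list; tune it for your own traffic.
REASONING_HINTS = ("prove", "derive", "debug", "optimize", "step by step", "algorithm")


def pick_model(prompt: str) -> str:
    """Return the reasoning model only when the prompt looks reasoning-heavy."""
    lowered = prompt.lower()
    if any(hint in lowered for hint in REASONING_HINTS):
        return REASONING_MODEL
    return GENERAL_MODEL


print(pick_model("Summarize this changelog in two sentences."))
print(pick_model("Debug this function and explain why it deadlocks."))
```

A keyword match is crude, but it shows the shape of the decision: default to the cheaper general model and pay for reasoning only when the task calls for it.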