OpenCode (48,000+ GitHub stars) and Claude Code (47,000+ stars) are locked in the defining AI coding agent battle of early 2026. In just five days this January, OpenCode surged from 44,714 to 48,324 stars—explosive growth that signals serious developer interest. The twist? OpenCode’s creator openly admits to “reverse engineering Claude Code and re-implementing almost the exact same logic.” The competition is so close that developers report they “really can’t tell which is better.” This isn’t another feature checklist—it’s a fundamental debate about what you value: open-source flexibility with cost control (OpenCode) or proprietary polish with proven performance (Claude Code).

The Core Split: Transparency vs Performance

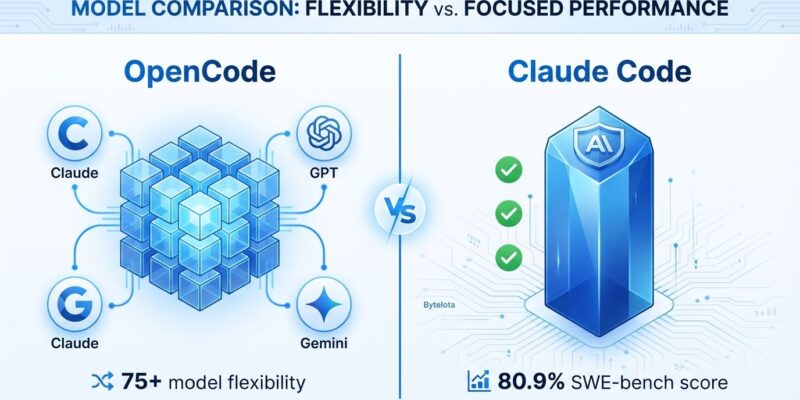

OpenCode and Claude Code embody competing philosophies. OpenCode supports 75+ AI model providers, including Claude, OpenAI, Google Gemini, AWS Bedrock, Groq, Azure OpenAI, and even local models. You pay nothing for the tool itself, only API usage to your chosen provider. OpenCode itself stores no code, which makes it attractive for regulated industries like finance and healthcare, though your prompts still go to whichever provider you select unless you run a local model.

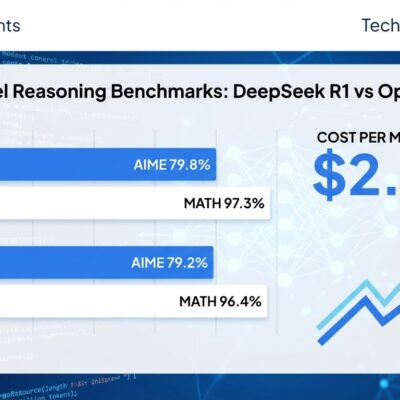

Claude Code takes the opposite approach: Claude models only (Opus 4.5, Sonnet 4.5, Haiku 4.5). You need a Claude subscription—$17 per month for Pro with usage limits, or $100 per month for Max if you’re a heavy user. In exchange, you get state-of-the-art performance: 80.9% accuracy on SWE-bench Verified with Opus 4.5, the first model to crack 80% on this benchmark. That’s not just a number—it means the agent successfully resolves real GitHub issues at a higher rate than any competitor.

The choice isn’t about which tool is “better.” It’s about which trade-offs you accept. OpenCode gives you control—model flexibility, cost transparency, privacy-first design. Claude Code gives you optimization—peak performance, advanced features, polished workflows. Both are legitimate priorities.

Performance Parity (With a Catch)

Here’s the surprise: when using the same underlying model, OpenCode matches Claude Code’s output quality. Multiple developers who tested both report they “can’t tell the difference” in code quality. Andrea Grandi, who ran extensive comparisons, concluded: “Claude was hands down the best overall… but if you have time to experiment, use OpenCode with Sonnet-4. Otherwise, use Claude Code.”

This performance parity exists because OpenCode’s creator reverse engineered Claude Code’s logic and rebuilt it. Transparency at its finest—and proof that open source can compete with proprietary AI tooling when the playing field levels.

The catch? Claude Code with Opus 4.5 unlocks capabilities OpenCode users can’t replicate without paying Anthropic anyway. The combination offers thinking mode (extended reasoning for complex problems), checkpoints (instant rewind to previous states), and parallel subagent workflows (build frontend and backend simultaneously). These aren’t gimmicks: Doctolib used Claude Code’s subagents to replace legacy testing infrastructure in hours instead of weeks, shipping features 40% faster.

So yes, OpenCode with Sonnet 4 matches Claude Code with Sonnet 4. However, Claude Code with Opus 4.5 pulls ahead—assuming you’re willing to pay for it.

The Real Cost of “Free”

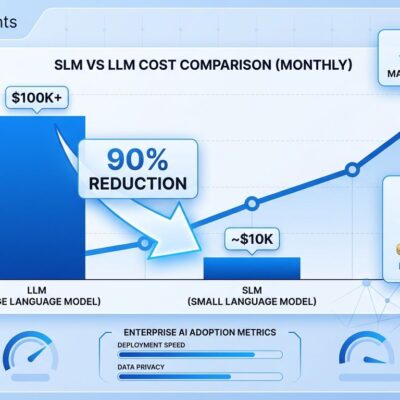

OpenCode itself costs zero dollars. Claude Code costs $17-100 per month per developer, plus any API usage beyond the plan limits. For one developer, that’s manageable. For a team of 50, it comes to $850-5,000 monthly before API costs. With 40% of enterprise applications expected to feature AI agents by the end of 2026 (up from less than 5% in 2025), these per-seat expenses add up fast.
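To make the per-seat arithmetic concrete, here is a minimal Python sketch. The prices are the $17 and $100 monthly tiers quoted above. The function name and the team size are illustrative, and API usage is deliberately left out.

```python
# Monthly subscription tiers quoted in this article (USD per developer).
PRO_PRICE = 17
MAX_PRICE = 100


def team_subscription_range(seats: int) -> tuple[int, int]:
    """Return the (low, high) monthly subscription cost for a team.

    Low assumes every seat is on Pro, high assumes every seat is on Max.
    API usage is not included.
    """
    if seats < 0:
        raise ValueError("seats must be non-negative")
    return seats * PRO_PRICE, seats * MAX_PRICE


if __name__ == "__main__":
    low, high = team_subscription_range(50)
    print(f"50 seats: ${low:,} to ${high:,} per month")
```

In practice a team will mix tiers, so the real figure lands somewhere inside that range.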

However, OpenCode isn’t truly free—you still pay for API usage. The difference is transparency and control. With OpenCode, you can switch providers if costs spike, experiment with cheaper models for simple tasks, or negotiate volume discounts directly. With Claude Code, you’re locked into Anthropic’s pricing structure.

The ROI question matters here. If Claude Code makes your team 40% more productive (like Doctolib), the $100/month subscription pays for itself in hours. On the other hand, if you’re a budget-conscious startup experimenting with AI coding agents, OpenCode’s zero-tool-cost model removes a significant barrier to entry. Match the cost model to your constraints.
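One way to sanity-check the “pays for itself in hours” claim is a quick break-even calculation. The sketch below assumes a hypothetical loaded developer cost of $50 per hour, which is not a figure from this article, so substitute your own number.

```python
def break_even_hours(monthly_price: float, hourly_cost: float) -> float:
    """Hours of developer time a tool must save per month to cover its price."""
    if hourly_cost <= 0:
        raise ValueError("hourly_cost must be positive")
    return monthly_price / hourly_cost


if __name__ == "__main__":
    # ASSUMPTION: $50/hour is a placeholder, not data from the article.
    hourly_cost = 50.0
    for plan, price in (("Pro", 17.0), ("Max", 100.0)):
        hours = break_even_hours(price, hourly_cost)
        print(f"{plan}: break-even at {hours:.2f} hours saved per month")
```

Under that assumption, the Max plan needs to save about two hours of developer time per month to cover its own price. API usage and onboarding time would push the threshold higher.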

Features: Advanced vs Flexible

Claude Code’s unique features justify its premium for teams working on complex systems. Checkpoints let you rewind instantly to any previous state—experiment freely, roll back mistakes without git archaeology. Thinking mode on Opus 4.5 shows the model’s reasoning process, crucial for debugging why an agent made specific choices. Parallel subagents orchestrate multi-component refactors: one agent handles backend schema changes while another updates frontend queries simultaneously.

OpenCode counters with flexibility, not advanced orchestration. Planning Mode (/plan command) disables changes and only suggests an implementation, which is perfect when you want to review before execution. LSP (Language Server Protocol) integration automatically detects your languages and spins up the right servers for code intelligence. Custom MCP server support lets you extend functionality for project-specific workflows. The terminal buffer system, built by Neovim users, handles window resizing and unlimited scrolling better than standard terminal output.

Neither tool is clearly “better” on features. Claude Code offers sophistication for complex work. OpenCode offers adaptability for varied workflows.

Decision Framework: Match Tool to Values

Choose OpenCode if you need model flexibility, have budget constraints, require privacy-first design for regulated environments, prefer terminal-focused workflows (especially Vim/Neovim users), or value open-source philosophy. OpenCode makes sense for startups testing AI coding agents, individual developers unwilling to commit to subscriptions, or teams that want to experiment with multiple AI providers.

Choose Claude Code if you need proven top-tier performance (that 80.9% SWE-bench score matters for complex projects), work on large codebases requiring superior context management, want advanced features like thinking mode and subagents, or already have enterprise Claude subscriptions. Claude Code fits performance-critical projects, enterprise teams with budget for productivity tools, and developers who value polished experiences over DIY flexibility.

Skip both if you’re learning to code (agents hinder skill development), working on security-critical systems without expert review, building simple scripts where autocomplete suffices, or need offline-only environments (both require API access). GitHub Copilot’s autocomplete model still makes more sense for lightweight assistance.

What This Competition Means

The OpenCode vs Claude Code race is healthy for developers. OpenCode’s rapid growth (3,610 stars in five days) forces Claude Code to stay sharp on features and pricing. Claude Code’s benchmark-topping performance pushes OpenCode to close capability gaps. We win through choice.

The broader question: can open source compete with proprietary AI? OpenCode’s reverse engineering approach says yes—when someone rebuilds the logic, performance equalizes. The remaining differentiation is business model (subscriptions vs direct API) and exclusive features (Opus 4.5 capabilities). That’s a narrower moat than most proprietary AI tools enjoy.

Expect this competition to accelerate. OpenCode launched less than three months ago and already rivals Claude Code in GitHub stars. Feature parity will tighten further. Pricing pressure will intensify as enterprise adoption scales. The winner? Developers who get powerful coding agents at competitive prices with genuine alternatives.

Key Takeaways:

- Performance parity with same model: OpenCode + Sonnet 4 matches Claude Code quality

- Philosophy over features: Choose based on values—transparency/cost (OpenCode) or polish/performance (Claude Code)

- No universal winner: Budget-conscious, privacy-first teams prefer OpenCode; performance-critical teams prefer Claude Code

- Rapid evolution: OpenCode gained 3,610 stars in five days—competition is accelerating

- Enterprise impact: 40% of enterprise applications are expected to feature AI agents by the end of 2026, which makes this choice matter at scale