Nvidia CEO Jensen Huang took the stage at CES 2026 and declared: “The ChatGPT moment for robotics is here.” The company also released new versions of Cosmos and GR00T, its open-source foundation models for physical AI, and pitched them as a way to democratize robot development the way ChatGPT democratized text generation. Boston Dynamics, Caterpillar, and other industry giants are already building on the stack, and a Hugging Face integration puts the tools in front of 13 million AI developers, so the technology is undeniably real. But calling this the “ChatGPT moment” is likely three to five years premature.

What Are the Cosmos and GR00T Models?

Nvidia released three foundation models targeting physical AI:

Cosmos Predict 2.5 is a world foundation model that generates video predictions of physical environments. Trained on 200 million clips, it produces sequences up to 30 seconds long with spatiotemporal coherence. The practical application? Synthetic data generation for robot training. Instead of spending thousands of dollars running a robot through scenarios in the real world, developers can simulate those scenarios and generate training data. The model also supports multi-view camera setups, which is critical for autonomous vehicles and multi-sensor robots.

Cosmos Reason 2 is a vision-language model (VLM) that helps robots understand the physical world. It acts as the text encoder for Cosmos Predict and enables semantic grounding—translating language instructions into physical actions.

Isaac GR00T N1.6 is the headline model: a 3-billion parameter vision-language-action (VLA) model for humanoid robots. It takes multimodal input (language and images) and outputs full-body control commands for manipulation tasks. The model is cross-embodiment, meaning it works across different robot types without retraining from scratch. It’s already available on Hugging Face for developers to fine-tune.
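To put the “3-billion parameter” figure in hardware terms for developers planning to fine-tune, here is a back-of-the-envelope memory estimate. It is a sketch under stated assumptions, not Nvidia guidance: the parameter count is rounded to exactly 3 billion, weights are held in 16-bit (bf16) precision for inference, and full fine-tuning follows the common mixed-precision Adam rule of thumb of about 16 bytes per parameter. Activations, batch size, framework overhead, and any frozen or parameter-efficient (e.g. LoRA) components are ignored, so real numbers will differ.

```python
# Back-of-the-envelope GPU memory estimate for a model of a given size.
# Assumptions (illustrative, not from Nvidia): exactly 3e9 parameters,
# bf16 weights for inference, ~16 bytes/parameter for full fine-tuning
# with mixed-precision Adam. Activations and overhead are ignored.

GIB = 1024 ** 3


def inference_weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Memory needed just to hold the weights (bf16/fp16 = 2 bytes each)."""
    return num_params * bytes_per_param


def full_finetune_bytes(num_params: int) -> int:
    """Rule-of-thumb memory for full fine-tuning with mixed-precision Adam:
    2 (bf16 weights) + 2 (bf16 gradients) + 4 (fp32 master weights)
    + 4 + 4 (fp32 Adam first and second moments) = 16 bytes per parameter."""
    bytes_per_param = 2 + 2 + 4 + 4 + 4
    return num_params * bytes_per_param


if __name__ == "__main__":
    n = 3_000_000_000  # assumed: "3-billion parameter", rounded
    print(f"weights (bf16): {inference_weight_bytes(n) / GIB:.1f} GiB")  # ~5.6 GiB
    print(f"full fine-tune: {full_finetune_bytes(n) / GIB:.1f} GiB")     # ~44.7 GiB
```

Under these assumptions the weights alone fit on a single workstation GPU, while naive full fine-tuning needs roughly 45 GiB before activations, which is why freezing parts of a model or using parameter-efficient methods is common practice.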

The technical innovation here is real. These models represent the current state of the art, and releasing them as open source is a meaningful step toward accessibility. But accessibility for developers is not the same as accessibility for everyone, and that is where the “ChatGPT moment” comparison falls apart.

Nvidia’s “Android of Robotics” Play

Nvidia isn’t trying to build the best robot. It’s trying to power all robots. The strategy mirrors Google’s Android playbook: provide the platform, let hardware manufacturers compete on top of it, and capture the ecosystem.

The company integrated GR00T and Cosmos into Hugging Face’s LeRobot framework, which now connects 2 million Nvidia robotics developers with 13 million Hugging Face AI builders. Robotics is the fastest-growing category on Hugging Face, with Nvidia’s models leading downloads. The company also released Isaac Lab-Arena for robot evaluation and OSMO, an edge-to-cloud compute framework that simplifies training workflows.

Industry adoption is already happening. Boston Dynamics integrated Nvidia’s Jetson Thor into the production-ready Atlas humanoid unveiled at CES. Caterpillar is expanding its AI collaboration for construction and mining equipment. Franka Robotics and NEURA Robotics are using GR00T-enabled workflows to train new behaviors. These aren’t garage projects; they are established companies betting on Nvidia’s stack.

But there’s a catch. Tesla built Full Self-Driving (FSD) with a closed, proprietary approach and draws on 10 million videos of real-world driving. Nvidia’s open approach democratizes access but lacks the vertical integration and proprietary data moat that Tesla has. The trade-off is reach vs. control: Nvidia wins on ecosystem size; Tesla wins on data quality and iteration speed.

Why This Isn’t the ChatGPT Moment (Yet)

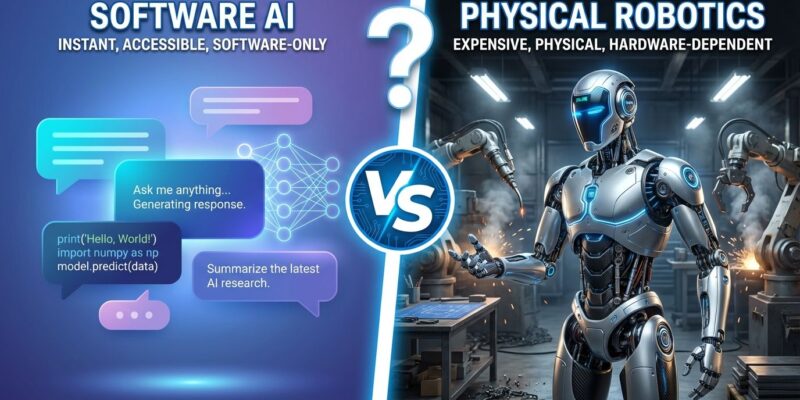

ChatGPT democratized AI for text generation because anyone with an internet connection could use it instantly. You type a prompt, you get a response. No hardware, no setup, no expertise required. That’s not the case here.

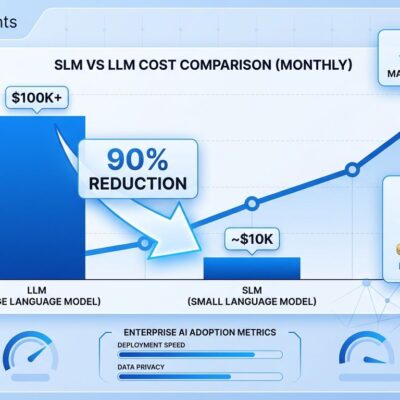

Robots require expensive hardware. A humanoid robot costs anywhere from $10,000 to well over $100,000. Even lower-cost robotic arms run several thousand dollars. ChatGPT worked on any device with a browser: laptop, phone, or tablet. Physical AI requires physical infrastructure.

Robotics demands deep expertise. ChatGPT was usable by non-technical users on day one. Robot development requires knowledge of ML and robotics, an understanding of VLA architectures, familiarity with simulation workflows, and experience with real-world deployment. The Hugging Face integration helps, but this isn’t plug-and-play.

Simulation isn’t reality. Cosmos Predict enables synthetic training data, which lowers costs. But you can’t train a robot purely in simulation any more than you can become a chef by watching cooking videos. Real-world deployment still requires expensive physical testing, safety certifications, and hardware iterations.

Deployment takes years, not months. ChatGPT went from zero to 100 million users in two months. Physical AI requires manufacturing, real-world testing, regulatory approval, and infrastructure buildout. Even with these models available today, widespread deployment is likely three to five years out.

This isn’t the ChatGPT moment. It’s the GPT-3 API moment: powerful tools for developers and a foundation for future breakthroughs, but not yet mass consumer adoption. Industry adoption is still at an early stage, limited to beta partners and CES demos. The real test will be deployment numbers 12 months from now, not handpicked announcements from partners with existing Nvidia relationships.

What Developers Should Do

The opportunity is real, even if the timeline is overstated. If you’re a robotics developer, explore the stack: GR00T N1.6 on GitHub, Cosmos Predict 2.5 for synthetic data, Isaac Lab-Arena for evaluation. If you’re an AI/ML engineer looking to expand into physical AI, this is a high-growth field: the humanoid robot market is projected to grow from $2.92 billion in 2025 to $15.26 billion in 2030, a compound annual growth rate (CAGR) of 39.2%.
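The growth-rate figure can be sanity-checked from the two endpoints with the standard formula CAGR = (end / start)^(1 / years) - 1. The sketch below uses only the dollar figures and years quoted above; it verifies the arithmetic, not the market forecast itself, and the helper names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> list[float]:
    """Year-by-year values implied by compounding at a constant rate."""
    return [start_value * (1 + rate) ** t for t in range(years + 1)]


if __name__ == "__main__":
    start, end = 2.92, 15.26  # USD billions for 2025 and 2030, as quoted in the text
    rate = cagr(start, end, years=2030 - 2025)
    print(f"CAGR: {rate:.1%}")  # CAGR: 39.2%
    for year, value in zip(range(2025, 2031), project(start, rate, 5)):
        print(year, f"${value:.2f}B")
```

Note that a constant CAGR is a smoothing assumption: it describes the average yearly rate between the endpoints, not how growth is actually distributed across the five years.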

But temper expectations. Open-source tools lower software barriers, but hardware costs remain a moat. Startups won’t win on software alone—they’ll need differentiated hardware or vertical integration (like Tesla). For most developers, the play is building applications on top of existing robot platforms, not building robots from scratch.

Practical next steps: Try GR00T on Hugging Face, experiment with Cosmos Predict for synthetic environments, and join the LeRobot community. Watch for real-world deployment announcements beyond CES demos—that’s where the signal is.

2026 is an inflection point for physical AI, and industry voices from IBM to AT&T Ventures have said as much. But an inflection point isn’t the same as explosive adoption. Nvidia’s announcement is technically impressive, but the “ChatGPT moment” claim is marketing hype designed to ride the AI wave. The foundation is laid. Now we wait to see what gets built on top.