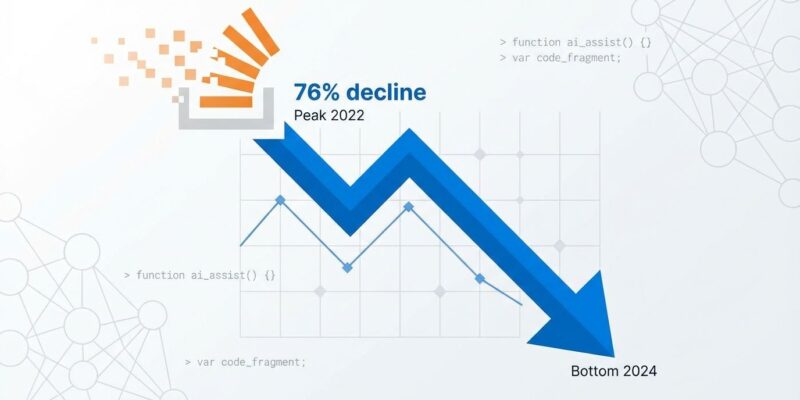

Stack Overflow’s monthly question volume has collapsed 76% since ChatGPT launched in November 2022, from 108,563 questions to just 25,566 by December 2024. That is back to 2009 levels, despite there being 10x more developers today. The platform that defined developer problem-solving for over a decade is bleeding questions at an alarming rate.
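As a quick sanity check, the headline percentage can be recomputed from the two monthly counts quoted above. This is only a minimal sketch: the function name `percent_decline` and the constant names are illustrative assumptions, not part of any survey or Stack Overflow tooling.

```python
def percent_decline(before: float, after: float) -> float:
    """Return the percentage drop from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Monthly question counts as quoted in the article.
QUESTIONS_BEFORE = 108_563
QUESTIONS_AFTER = 25_566

decline = percent_decline(QUESTIONS_BEFORE, QUESTIONS_AFTER)
print(f"Decline: {decline:.1f}%")
```

Rounded to the nearest whole number, the result agrees with the 76% figure.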

Here’s the paradox: 84% of developers now use or plan to use AI tools, yet trust in AI accuracy dropped from 43% (2024) to 33% (2025), according to the 2025 Stack Overflow Developer Survey published December 29. The top frustration? 66% cite “AI solutions that are almost right, but not quite.”

This isn’t just a website losing traffic. It means less new institutional knowledge being created, junior developers losing their best learning resource, and a debugging crisis headed our way in 2-3 years. We’re trading knowledge infrastructure for convenience, and we’ll pay for it.

Using Tools We Don’t Trust

Developers are locked in a toxic relationship with AI. The 2025 survey found 84% use or plan to use AI tools (up from 76% in 2024), yet only 33% trust their accuracy. 46% actively distrust AI output. Only 3% “highly trust” these tools.

Experienced developers are the most skeptical. Those with actual accountability—senior engineers, architects, tech leads—report the lowest “highly trust” rate (2.6%) and the highest “highly distrust” rate (20%). They know what everyone’s learning the hard way: you can’t build robust software on a foundation you don’t trust.

Positive sentiment toward AI tools dropped from over 70% in 2023-2024 to just 60% in 2025. When asked why they’d still ask a person for help in a future with advanced AI, 75% of developers said: “When I don’t trust AI’s answers.” They’re using tools they openly admit they don’t trust. That’s not sustainable.

Why “Almost Right” Is Worse Than Wrong

The number-one developer frustration with AI isn’t that it’s slow or expensive—it’s that it generates code that looks correct but isn’t. 66% cite dealing with “AI solutions that are almost right, but not quite.” 45% say debugging AI-generated code takes more time than expected.

AI generates plausible-looking code that passes syntax checks and feels correct at first glance. Then it fails in production because of subtle logic bugs or edge cases the model didn’t consider. Unlike obviously broken code, these bugs are insidious—hard to spot immediately, time-consuming to debug, and particularly dangerous for junior developers who lack the experience to catch them.
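To make “almost right” concrete, here is a small hand-written illustration. It is not actual AI output, and both function names are hypothetical. The first version is valid Python and works on the obvious test case, but it silently drops data on an edge case.

```python
def chunk_almost_right(items, size):
    """Split `items` into chunks of `size`. Looks plausible, but the range
    bound stops early, so a trailing partial chunk is silently dropped."""
    return [items[i:i + size] for i in range(0, len(items) - size + 1, size)]


def chunk_correct(items, size):
    """Split `items` into chunks of `size`, keeping a trailing partial chunk."""
    return [items[i:i + size] for i in range(0, len(items), size)]


even = [1, 2, 3, 4]
odd = [1, 2, 3, 4, 5]

# Both versions agree when the length divides evenly...
print(chunk_almost_right(even, 2) == chunk_correct(even, 2))
# ...but only the correct version keeps the last element here.
print(chunk_almost_right(odd, 2))
print(chunk_correct(odd, 2))
```

A test suite that only covers evenly divisible inputs would pass both versions, which is why this kind of bug tends to surface in production rather than in review.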

Here’s the productivity mirage: a rigorous METR study found developers using AI were actually 19% slower—yet they believed they were 20% faster. That’s a 39-percentage-point perception gap between feeling productive and being productive. You gain speed in code generation but lose it (and more) in debugging and verification. The net result: slower delivery, lower quality, frustrated teams.
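The perception gap is simple arithmetic on the two figures above: a measured slowdown counts as a negative change and a believed speedup as a positive one. A minimal sketch follows, with `perception_gap` and the constant names as illustrative assumptions rather than anything taken from the METR study itself.

```python
def perception_gap(actual_change_pct: float, perceived_change_pct: float) -> float:
    """Gap, in percentage points, between perceived and actual speed change."""
    return perceived_change_pct - actual_change_pct


ACTUAL_SPEED_CHANGE = -19.0    # measured: 19% slower
PERCEIVED_SPEED_CHANGE = 20.0  # self-reported: 20% faster

gap = perception_gap(ACTUAL_SPEED_CHANGE, PERCEIVED_SPEED_CHANGE)
print(f"Perception gap: {gap:.0f} percentage points")
```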

The Institutional Knowledge Crisis

When Stack Overflow questions collapse 76%, institutional knowledge creation stops. This matters more than most developers realize. Stack Overflow isn’t just Q&A—it’s the collective memory of software development. Millions of curated, peer-reviewed solutions to real-world problems, searchable and versioned.

Community knowledge compounds over time. AI answers don’t. Every Stack Overflow thread represents multiple expert perspectives, edge cases documented by practitioners, and gotchas learned the hard way. As question volume collapses, the platform stops growing its knowledge base for emerging frameworks, new tools, and novel problems.

Here’s the crisis ahead: AI models are trained on existing Stack Overflow data. When new problems arise with new frameworks in 2026-2028, there will be few fresh Stack Overflow answers for AI to learn from, and models struggle to produce reliable solutions for problems absent from their training data. We’re burning the bridges we’ll need when AI can’t help.

The data already shows this limitation. Advanced questions on Stack Overflow have doubled since 2023—problems AI can’t solve. 35% of developers now visit Stack Overflow specifically after AI-generated code fails. The platform is becoming the safety net for when AI doesn’t work, but if question volume keeps collapsing, that safety net disappears.

The Junior Developer Skill Crisis Ahead

Software developer employment for ages 22-25 declined nearly 20% from its late 2022 peak. AI has made much of what junior developers did redundant—tenured developers now ask AI instead of delegating to juniors. But the deeper problem isn’t job displacement. It’s that AI is preventing junior developers from learning how to think.

AI gives answers, but the knowledge gained is shallow. Many junior developers can’t explain how their AI-generated code works or handle follow-up questions about edge cases. They’re copy-pasting without understanding—and without the practice debugging that builds systematic problem-solving skills.

Stack Overflow’s learning value was irreplaceable: developers had to read multiple expert discussions to get the full picture. It was slower, but they came out understanding why solutions worked, not just what worked. That struggle built debugging skills, taught how to read documentation, and developed the systematic thinking that separates engineers from code generators.

In 2-3 years, companies will struggle to find developers who can debug systematically, understand fundamentals, and solve problems AI can’t handle. We’re creating a two-tier market: scarce, expensive pre-AI seniors who learned to think versus abundant, cheap AI-native juniors with limited problem-solving ability. That’s not sustainable.

This Is Progress Backwards

Stack Overflow’s collapse isn’t a sign of AI’s success—it’s a warning about knowledge loss and the developer crisis we’re racing toward. We’re trading long-term infrastructure for short-term convenience.

AI speed is seductive. Instant answers feel like progress. But “almost right” isn’t good enough for production code. 66% of developers are frustrated, 45% say debugging AI-generated code takes more time than expected, and trust is declining year over year. We know these tools are unreliable, yet we keep using them because speed feels like productivity.

The value of Stack Overflow was never just instant answers. It was peer review, curation, searchability, and continuous knowledge creation. AI provides none of that. It gives you code that looks right, then leaves you to debug why it isn’t. It can’t explain edge cases, can’t learn from mistakes, and can’t tell you when it’s hallucinating.

Advanced questions on Stack Overflow have doubled since 2023 because AI has hit its limit. 35% of developers visit Stack Overflow when AI fails. 80% still visit regularly. The platform isn’t dying—it’s evolving into what AI can’t replace: the place for complex, context-dependent problems and verified expert knowledge.

What developers should do: keep contributing to Stack Overflow for new and niche problems. Use AI for speed and boilerplate, Stack Overflow for learning, verification, and edge cases. Teach junior developers to debug systematically, not just copy-paste AI output. Don’t let community knowledge die because instant answers are convenient.

We need both: AI for generation speed, Stack Overflow for depth and reliability. Choose short-term convenience over long-term knowledge infrastructure, and we’ll pay for it when the next generation can’t debug and new problems have no solutions.

Key Takeaways

- Stack Overflow questions have collapsed 76% since ChatGPT’s launch (Nov 2022), from 108,563 to 25,566 per month, matching 2009 levels despite 10x more developers

- 84% of developers use or plan to use AI tools, yet only 33% trust their accuracy (down from 43% in 2024); 46% actively distrust AI output

- 66% are frustrated by AI solutions that are “almost right, but not quite”; 45% say debugging AI-generated code takes more time than expected, creating a productivity mirage

- Institutional knowledge creation is stalling: AI trained on old data won’t help with new frameworks, and a crisis is coming in 2-3 years when new problems have no Stack Overflow answers

- A junior developer skill crisis is ahead: AI prevents learning fundamentals, employment for ages 22-25 is down nearly 20%, and the next generation can generate code but can’t debug or explain it